Sašo Džeroski

Quantifying Behavioural Distance Between Mathematical Expressions

Aug 21, 2024

Abstract: Existing symbolic regression methods organize the space of candidate mathematical expressions primarily based on their syntactic, structural similarity. However, this approach overlooks crucial equivalences between expressions that arise from mathematical symmetries, such as commutativity, associativity, and distribution laws for arithmetic operations. Consequently, expressions with similar errors on a given data set can lie far apart from each other in the search space. This leads to a rough error landscape in the search space that efficient local, gradient-based methods cannot explore. This paper proposes and implements a measure of behavioral distance, BED, that clusters together expressions with similar errors. The experimental results show that the stochastic method for calculating BED achieves consistency with a modest number of sampled values for evaluating the expressions. This leads to computational efficiency comparable to the tree-based syntactic distance. Our findings also reveal that BED significantly improves the smoothness of the error landscape in the search space for symbolic regression.
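The contrast between syntactic and behavioural similarity can be illustrated with a minimal sketch. This is not the BED measure defined in the paper: it is a simplified stand-in that samples input values at random and compares the outputs of two expressions by their root-mean-square difference. The function name `behavioural_distance`, the sampling range, and the sample count are all assumptions made for illustration.

```python
import math
import random


def behavioural_distance(f, g, n_samples=200, low=-5.0, high=5.0, seed=0):
    """Root-mean-square difference between the outputs of f and g.

    Inputs are sampled uniformly from [low, high]. This is a simplified
    stand-in for a behavioural distance, not the paper's BED definition.
    """
    rng = random.Random(seed)
    xs = [rng.uniform(low, high) for _ in range(n_samples)]
    squared_diffs = [(f(x) - g(x)) ** 2 for x in xs]
    return math.sqrt(sum(squared_diffs) / n_samples)


# Structurally different but mathematically equivalent (distribution law).
expr_a = lambda x: 2.0 * (x + 1.0)
expr_b = lambda x: 2.0 * x + 2.0

# Structurally close to expr_b (one operator changed), but behaves differently.
expr_c = lambda x: 2.0 * x * 2.0

d_equiv = behavioural_distance(expr_a, expr_b)  # close to zero
d_diff = behavioural_distance(expr_b, expr_c)   # clearly non-zero

print(f"equivalent pair: {d_equiv:.3g}")
print(f"different pair:  {d_diff:.3g}")
```

Under these assumptions, the equivalent pair ends up at a distance near zero even though the expression trees differ, while the pair that differs by a single operator ends up far apart.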

Comparing Algorithm Selection Approaches on Black-Box Optimization Problems

Jun 30, 2023

Abstract: Performance complementarity of solvers available to tackle black-box optimization problems gives rise to the important task of algorithm selection (AS). Automated AS approaches can help replace tedious and labor-intensive manual selection, and have already shown promising performance in various optimization domains. Automated AS relies on machine learning (ML) techniques to recommend the best algorithm given the information about the problem instance. Unfortunately, there are no clear guidelines for choosing the most appropriate one from a variety of ML techniques. Tree-based models such as Random Forest or XGBoost have consistently demonstrated outstanding performance for automated AS. Transformers and other tabular deep learning models have also been increasingly applied in this context. We investigate in this work the impact of the choice of the ML technique on AS performance. We compare four ML models on the task of predicting the best solver for the BBOB problems for 7 different runtime budgets in 2 dimensions. While our results confirm that a per-instance AS has indeed impressive potential, we also show that the particular choice of the ML technique is of much lesser importance.

Algorithm Instance Footprint: Separating Easily Solvable and Challenging Problem Instances

Jun 01, 2023

Abstract: In black-box optimization, it is essential to understand why an algorithm instance works on a set of problem instances while failing on others and provide explanations of its behavior. We propose a methodology for formulating an algorithm instance footprint that consists of a set of problem instances that are easy to solve and a set of problem instances that are difficult to solve, for an algorithm instance. This behavior of the algorithm instance is further linked to the landscape properties of the problem instances to provide explanations of which properties make some problem instances easy or challenging. The proposed methodology uses meta-representations that embed the landscape properties of the problem instances and the performance of the algorithm into the same vector space. These meta-representations are obtained by training a supervised machine learning regression model for algorithm performance prediction and applying model explainability techniques to assess the importance of the landscape features to the performance predictions. Next, deterministic clustering of the meta-representations demonstrates that using them captures algorithm performance across the space and detects regions of poor and good algorithm performance, together with an explanation of which landscape properties lead to it.

Artificial intelligence to advance Earth observation: a perspective

May 15, 2023

Abstract: Earth observation (EO) is a prime instrument for monitoring land and ocean processes, studying the dynamics at work, and taking the pulse of our planet. This article gives a bird's eye view of the essential scientific tools and approaches informing and supporting the transition from raw EO data to usable EO-based information. The promises, as well as the current challenges of these developments, are highlighted under dedicated sections. Specifically, we cover the impact of (i) Computer vision; (ii) Machine learning; (iii) Advanced processing and computing; (iv) Knowledge-based AI; (v) Explainable AI and causal inference; (vi) Physics-aware models; (vii) User-centric approaches; and (viii) the much-needed discussion of ethical and societal issues related to the massive use of ML technologies in EO.

Automated Scientific Discovery: From Equation Discovery to Autonomous Discovery Systems

May 03, 2023

Abstract: The paper surveys automated scientific discovery, from equation discovery and symbolic regression to autonomous discovery systems and agents. It discusses the individual approaches from a "big picture" perspective and in context, but also discusses open issues and recent topics like the various roles of deep neural networks in this area, aiding in the discovery of human-interpretable knowledge. Further, we will present closed-loop scientific discovery systems, starting with the pioneering work on the Adam system up to current efforts in fields from material science to astronomy. Finally, we will elaborate on autonomy from a machine learning perspective, but also in analogy to the autonomy levels in autonomous driving. The maximal level, level five, is defined to require no human intervention at all in the production of scientific knowledge. Achieving this is one step towards solving the Nobel Turing Grand Challenge to develop AI Scientists: AI systems capable of making Nobel-quality scientific discoveries highly autonomously at a level comparable, and possibly superior, to the best human scientists by 2050.

Efficient Generator of Mathematical Expressions for Symbolic Regression

Feb 20, 2023

Abstract: We propose an approach to symbolic regression based on a novel variational autoencoder for generating hierarchical structures, HVAE. It combines simple atomic units with shared weights to recursively encode and decode the individual nodes in the hierarchy. Encoding is performed bottom-up and decoding top-down. We empirically show that HVAE can be trained efficiently with small corpora of mathematical expressions and can accurately encode expressions into a smooth low-dimensional latent space. The latter can be efficiently explored with various optimization methods to address the task of symbolic regression. Indeed, random search through the latent space of HVAE performs better than random search through expressions generated by manually crafted probabilistic grammars for mathematical expressions. Finally, the EDHiE system for symbolic regression, which applies an evolutionary algorithm to the latent space of HVAE, reconstructs equations from a standard symbolic regression benchmark better than a state-of-the-art system based on a similar combination of deep learning and evolutionary algorithms.

Using Knowledge Graphs for Performance Prediction of Modular Optimization Algorithms

Jan 24, 2023

Abstract: Empirical data plays an important role in evolutionary computation research. To make better use of the available data, ontologies have been proposed in the literature to organize their storage in a structured way. However, the full potential of these formal methods to capture our domain knowledge has yet to be demonstrated. In this work, we evaluate a performance prediction model built on top of the extension of the recently proposed OPTION ontology. More specifically, we first extend the OPTION ontology with the vocabulary needed to represent modular black-box optimization algorithms. Then, we use the extended OPTION ontology to create knowledge graphs with fixed-budget performance data for two modular algorithm frameworks, modCMA and modDE, for the 24 noiseless BBOB benchmark functions. We build the performance prediction model using a knowledge graph embedding-based methodology. Using a number of different evaluation scenarios, we show that a triple classification approach, a fairly standard predictive modeling task in the context of knowledge graphs, can correctly predict whether a given algorithm instance will be able to achieve a certain target precision for a given problem instance. This approach requires feature representation of algorithms and problems. While the latter is already well developed, we hope that our work will motivate the community to collaborate on appropriate algorithm representations.

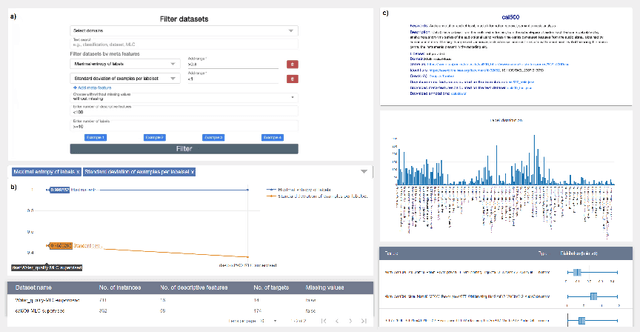

FAIRification of MLC data

Nov 23, 2022

Abstract: The multi-label classification (MLC) task has increasingly been receiving interest from the machine learning (ML) community, as evidenced by the growing number of papers and methods that appear in the literature. Hence, ensuring proper, correct, robust, and trustworthy benchmarking is of utmost importance for the further development of the field. We believe that this can be achieved by adhering to the recently emerged data management standards, such as the FAIR (Findable, Accessible, Interoperable, and Reusable) and TRUST (Transparency, Responsibility, User focus, Sustainability, and Technology) principles. To FAIRify the MLC datasets, we introduce an ontology-based online catalogue of MLC datasets that follow these principles. The catalogue extensively describes many MLC datasets with comprehensible meta-features, MLC-specific semantic descriptions, and different data provenance information. The MLC data catalogue is extensively described in our recent publication in Nature Scientific Reports, Kostovska & Bogatinovski et al., and available at: http://semantichub.ijs.si/MLCdatasets. In addition, we provide an ontology-based system for easy access and querying of performance/benchmark data obtained from a comprehensive MLC benchmark study. The system is available at: http://semantichub.ijs.si/MLCbenchmark.

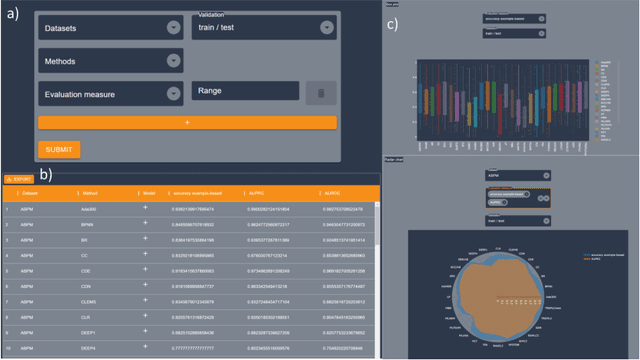

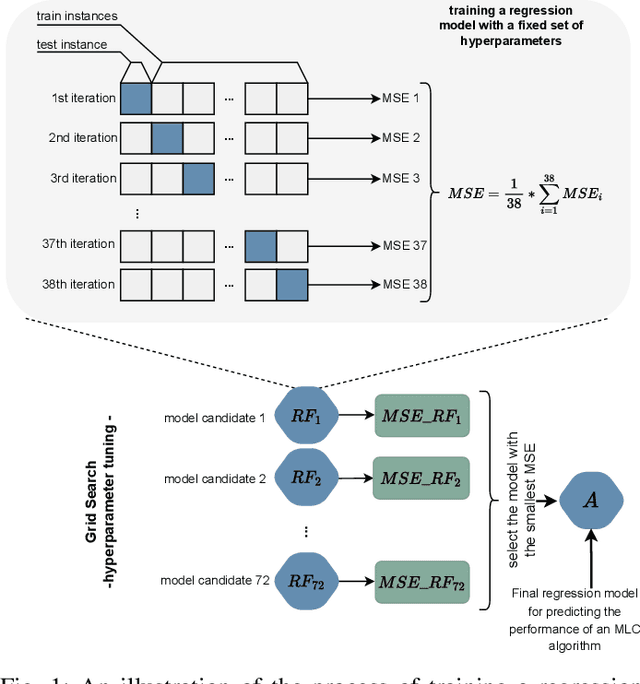

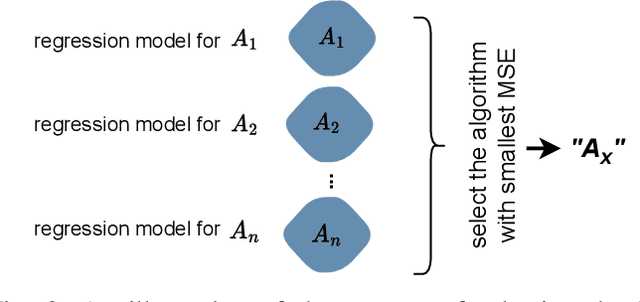

Explainable Model-specific Algorithm Selection for Multi-Label Classification

Nov 21, 2022

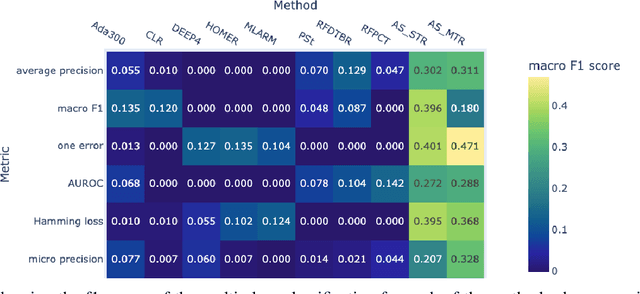

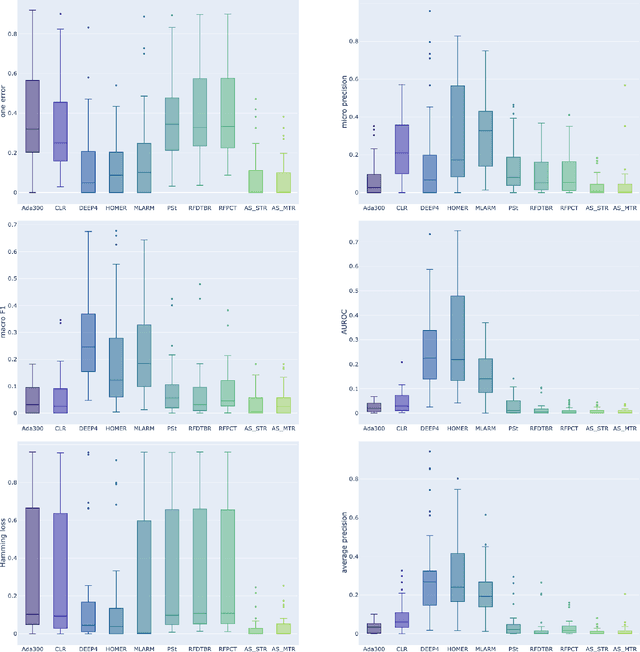

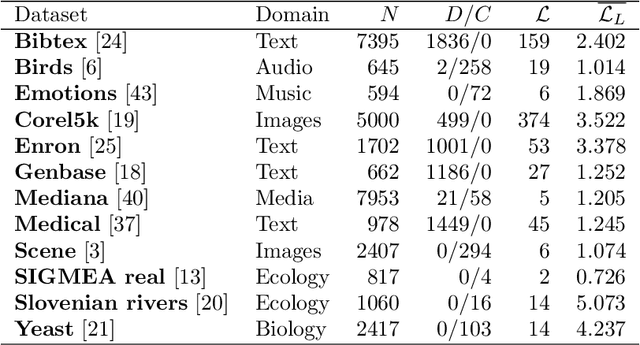

Abstract: Multi-label classification (MLC) is an ML task of predictive modeling in which a data instance can simultaneously belong to multiple classes. MLC is increasingly gaining interest in different application domains such as text mining, computer vision, and bioinformatics. Several MLC algorithms have been proposed in the literature, resulting in a meta-optimization problem that the user needs to address: which MLC approach to select for a given dataset? To address this algorithm selection problem, we investigate in this work the quality of an automated approach that uses characteristics of the datasets (so-called features) and a trained algorithm selector to choose which algorithm to apply for a given task. For our empirical evaluation, we use a portfolio of 38 datasets. We consider eight MLC algorithms, whose quality we evaluate using six different performance metrics. We show that our automated algorithm selector outperforms any of the single MLC algorithms across all evaluated performance measures. Our selection approach is explainable, a characteristic that we exploit to investigate which meta-features have the largest influence on the decisions made by the algorithm selector. Finally, we also quantify the importance of the most significant meta-features for various domains.

Semi-supervised Predictive Clustering Trees for (Hierarchical) Multi-label Classification

Jul 19, 2022

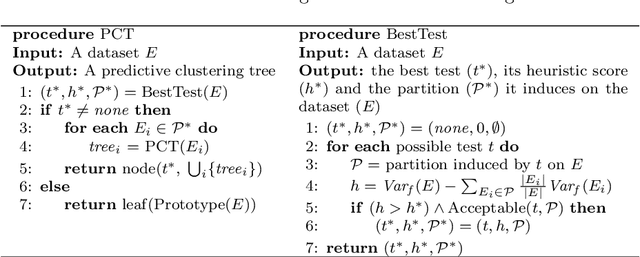

Abstract: Semi-supervised learning (SSL) is a common approach to learning predictive models using not only labeled examples, but also unlabeled examples. While SSL for the simple tasks of classification and regression has received a lot of attention from the research community, it has not been properly investigated for complex prediction tasks with structurally dependent variables. This is the case for multi-label classification and hierarchical multi-label classification tasks, which may require additional information, possibly coming from the underlying distribution in the descriptive space provided by unlabeled examples, to better face the challenging task of simultaneously predicting multiple class labels. In this paper, we investigate this aspect and propose a (hierarchical) multi-label classification method based on semi-supervised learning of predictive clustering trees. We also extend the method towards ensemble learning and propose a method based on the random forest approach. Extensive experimental evaluation conducted on 23 datasets shows significant advantages of the proposed method and its extension with respect to their supervised counterparts. Moreover, the method preserves interpretability and reduces the time complexity of classical tree-based models.
