Wenjin Wang

Multi-Satellite Multi-Stream Beamspace Massive MIMO Transmission

Dec 26, 2025

Abstract: This paper studies multi-satellite multi-stream (MSMS) beamspace transmission, where multiple satellites cooperate to form a distributed multiple-input multiple-output (MIMO) system and jointly deliver multiple data streams to multi-antenna user terminals (UTs), and beamspace transmission combines earth-moving beamforming with beam-domain precoding. For the first time, we formulate the signal model for MSMS beamspace MIMO transmission. Under synchronization errors, multi-antenna UTs enable the distributed MIMO channel to exhibit higher rank, supporting multiple data streams. Beamspace MIMO retains conventional codebook-based beamforming while providing the performance gains of precoding. Based on the signal model, we propose statistical channel state information (sCSI)-based optimization of satellite clustering, beam selection, and transmit precoding, using a sum-rate upper-bound approximation. With given satellite clustering and beam selection, we cast precoder design as an equivalent covariance decomposition-based weighted minimum mean square error (CDWMMSE) problem. To obtain tractable algorithms, we develop a closed-form covariance decomposition required by CDWMMSE and derive an iterative MSMS beam-domain precoder under sCSI. Following this, we further propose several heuristic closed-form precoders to avoid iterative cost. For satellite clustering, we enhance a competition-based algorithm by introducing a mechanism to regulate the number of satellites serving a given UT. Furthermore, we design a two-stage low-complexity beam selection algorithm focused on enhancing the effective channel power. Simulations under practical configurations validate the proposed methods across the number of data streams, receive antennas, serving satellites, and active beams, and show that beamspace transmission approaches conventional MIMO performance at lower complexity.

QoS-Aware Hierarchical Reinforcement Learning for Joint Link Selection and Trajectory Optimization in SAGIN-Supported UAV Mobility Management

Dec 17, 2025

Abstract: Due to the significant variations in unmanned aerial vehicle (UAV) altitude and horizontal mobility, it becomes difficult for any single network to ensure continuous and reliable three-dimensional coverage. Towards that end, the space-air-ground integrated network (SAGIN) has emerged as an essential architecture for enabling ubiquitous UAV connectivity. To address the pronounced disparities in coverage and signal characteristics across heterogeneous networks, this paper formulates UAV mobility management in SAGIN as a constrained multi-objective joint optimization problem. The formulation couples discrete link selection with continuous trajectory optimization. Building on this, we propose a two-level multi-agent hierarchical deep reinforcement learning (HDRL) framework that decomposes the problem into two alternately solvable subproblems. To map complex link selection decisions into a compact discrete action space, we conceive a double deep Q-network (DDQN) algorithm at the top level, which achieves stable and high-quality policy learning through double Q-value estimation. To handle the continuous trajectory action space while satisfying quality of service (QoS) constraints, we integrate the maximum-entropy mechanism of the soft actor-critic (SAC) and employ a Lagrangian-based constrained SAC (CSAC) algorithm at the lower level that dynamically adjusts the Lagrange multipliers to balance constraint satisfaction and policy optimization. Moreover, the proposed algorithm can be extended to multi-UAV scenarios under the centralized training and decentralized execution (CTDE) paradigm, which enables more generalizable policies. Simulation results demonstrate that the proposed scheme substantially outperforms existing benchmarks in throughput, link switching frequency, and QoS satisfaction.
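The two learning ingredients named in this abstract, double Q-value estimation and a dynamically adjusted Lagrange multiplier, can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the step size, the reading of the QoS constraint as "value must stay above a threshold", and the example Q-values are all assumptions made for illustration.

```python
def ddqn_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Double DQN target: the online network picks the next action,
    the target network evaluates it (standard double Q-learning)."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best_action]


def update_multiplier(lmbda, qos_value, qos_threshold, step=0.1):
    """Projected dual ascent (assumed form): the multiplier grows while the
    QoS value is below its threshold and shrinks, never below zero, otherwise."""
    return max(0.0, lmbda + step * (qos_threshold - qos_value))


# Hypothetical Q-values for three candidate links.
q_online = [1.0, 2.5, 2.0]   # online network prefers action 1
q_target = [1.2, 1.8, 3.0]   # target network scores that action as 1.8
y = ddqn_target(reward=0.5, next_q_online=q_online,
                next_q_target=q_target, gamma=0.9)

lam_violated = update_multiplier(1.0, qos_value=0.6, qos_threshold=1.0, step=0.5)
lam_satisfied = update_multiplier(0.1, qos_value=2.0, qos_threshold=1.0, step=0.5)
```

Selecting the action with one network and scoring it with the other is what keeps the target from inheriting the maximization bias of a single Q-estimate. The multiplier update shows how the penalty weight rises only while the constraint is violated.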

Unlocking Symbol-Level Precoding Efficiency Through Tensor Equivariant Neural Network

Oct 02, 2025

Abstract: Although symbol-level precoding (SLP) based on constructive interference (CI) exploitation offers performance gains, its high complexity remains a bottleneck. This paper addresses this challenge with an end-to-end deep learning (DL) framework with low inference complexity that leverages the closed-form structure of the optimal SLP solution and its inherent tensor equivariance (TE), where TE denotes that a permutation of the input induces the corresponding permutation of the output. Building upon the computationally efficient model-based formulations, as well as their known closed-form solutions, we analyze their relationship with linear precoding (LP) and investigate the corresponding optimality condition. We then construct a mapping from the problem formulation to the solution and prove its TE, based on which the designed networks reveal a specific parameter-sharing pattern that delivers low computational complexity and strong generalization. Leveraging these, we propose the backbone of the framework with an attention-based TE module, achieving linear computational complexity. Furthermore, we demonstrate that such a framework is also applicable to imperfect CSI scenarios, where we design a TE-based network to map the CSI, statistics, and symbols to auxiliary variables. Simulation results show that the proposed framework captures substantial performance gains of optimal SLP, while achieving an approximately 80-times speedup over conventional methods and maintaining strong generalization across user numbers and symbol block lengths.
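The equivariance property defined in this abstract (permuting the input permutes the output in the same way) can be checked numerically on a toy layer. The sketch below is not the paper's network. It uses a generic permutation-equivariant map with two shared scalars, `a` and `b`, which are hypothetical parameters chosen only to show how parameter sharing yields the property.

```python
def equivariant_layer(x, a=2.0, b=-0.5):
    """Toy permutation-equivariant map: every element gets the same weight `a`
    plus the same weight `b` on the mean of all elements."""
    mean = sum(x) / len(x)
    return [a * xk + b * mean for xk in x]


def permute(v, perm):
    """Reorder v so that position i holds v[perm[i]]."""
    return [v[i] for i in perm]


x = [1.0, 4.0, -2.0, 3.0]      # e.g. one feature per user
perm = [2, 0, 3, 1]            # an arbitrary reordering of the users

permute_then_apply = equivariant_layer(permute(x, perm))
apply_then_permute = permute(equivariant_layer(x), perm)

# The same two parameters also handle a different number of users.
two_user_output = equivariant_layer([1.0, 2.0])
```

Because the parameters do not depend on the element index or on the list length, the same layer applies unchanged when the number of users varies, which is the kind of generalization the abstract attributes to parameter sharing.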

Tensor-Structured Bayesian Channel Prediction for Upper Mid-Band XL-MIMO Systems

Aug 11, 2025

Abstract: The upper mid-band balances coverage and capacity for future cellular systems and also embraces XL-MIMO systems, offering enhanced spectral and energy efficiency. However, these benefits are significantly degraded under mobility due to channel aging, and further exacerbated by the unique near-field (NF) and spatial non-stationarity (SnS) propagation in such systems. To address this challenge, we propose a novel channel prediction approach that incorporates dedicated channel modeling, probabilistic representations, and Bayesian inference algorithms for this emerging scenario. Specifically, we develop tensor-structured channel models in both the spatial-frequency-temporal (SFT) and beam-delay-Doppler (BDD) domains, which leverage temporal correlations among multiple pilot symbols for channel prediction. The factor matrices of multi-linear transformations are parameterized by BDD domain grids and SnS factors, where beam domain grids are jointly determined by angles and slopes under spatial-chirp based NF representations. To enable tractable inference, we replace environment-dependent BDD domain grids with uniformly sampled ones, and introduce perturbation parameters in each domain to mitigate grid mismatch. We further propose a hybrid beam domain strategy that integrates angle-only sampling with slope hyperparameterization to avoid the computational burden of explicit slope sampling. Based on the probabilistic models, we develop a tensor-structured bi-layer inference (TS-BLI) algorithm under the expectation-maximization (EM) framework, which reduces computational complexity via tensor operations by leveraging the bi-layer factor graph for approximate E-step inference and an alternating strategy with closed-form updates in the M-step. Numerical simulations based on a near-practical channel simulator demonstrate the superior channel prediction performance of the proposed algorithm.

DMRS-Based Uplink Channel Estimation for MU-MIMO Systems with Location-Specific SCSI Acquisition

Jun 13, 2025

Abstract: With the growing number of users in multi-user multiple-input multiple-output (MU-MIMO) systems, demodulation reference signals (DMRSs) are efficiently multiplexed in the code domain via orthogonal cover codes (OCC) to ensure orthogonality and minimize pilot interference. In this paper, we investigate uplink DMRS-based channel estimation for MU-MIMO systems with the Type II OCC pattern standardized in 3GPP Release 18, leveraging location-specific statistical channel state information (SCSI) to enhance performance. Specifically, we propose an SCSI-assisted Bayesian channel estimator (SA-BCE) based on the minimum mean square error criterion to suppress the pilot interference and noise, albeit at the cost of cubic computational complexity due to matrix inversions. To reduce this complexity while maintaining performance, we extend the scheme to a windowed version (SA-WBCE), which incorporates antenna-frequency domain windowing and beam-delay domain processing to exploit asymptotic sparsity and mitigate energy leakage in practical systems. To avoid frequent real-time SCSI acquisition, we construct a grid-based location-specific SCSI database based on the principle of spatial consistency, and subsequently leverage the uplink received signals within each grid to extract the SCSI. Facilitated by the multilinear structure of wireless channels, we formulate the SCSI acquisition problem within each grid as a tensor decomposition problem, where the factor matrices are parameterized by the multi-path powers, delays, and angles. The computational complexity of SCSI acquisition can be significantly reduced by exploiting the Vandermonde structure of the factor matrices. Simulation results demonstrate that the proposed location-specific SCSI database construction method achieves high accuracy, while the SA-BCE and SA-WBCE significantly outperform state-of-the-art benchmarks in MU-MIMO systems.

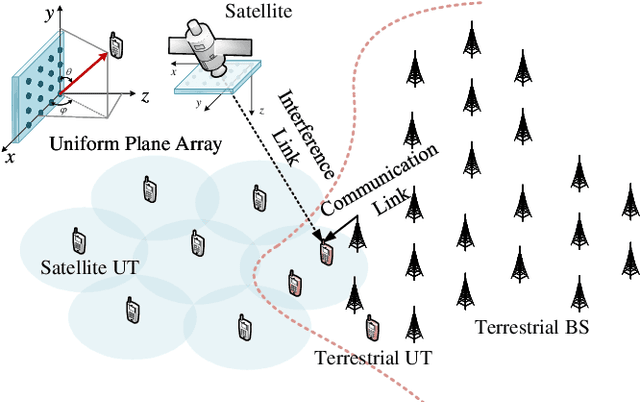

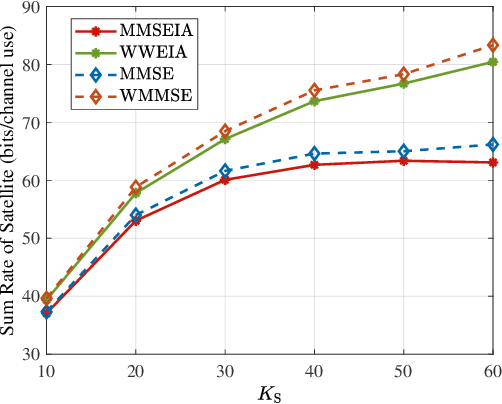

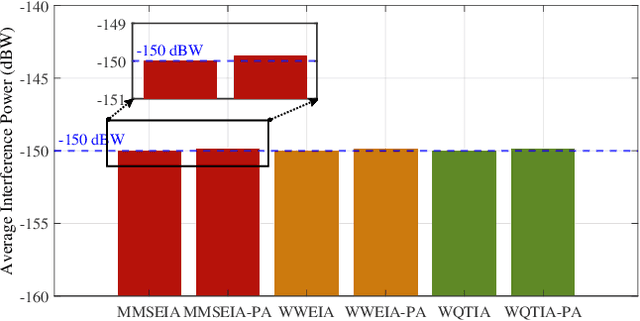

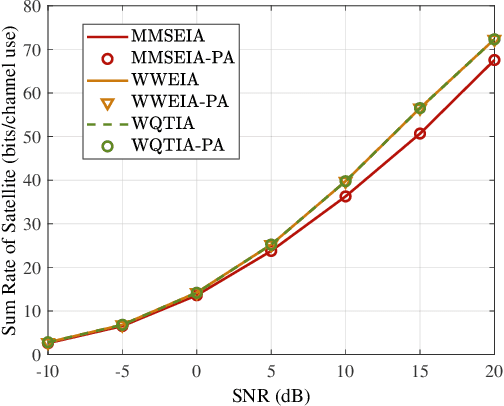

Interference in Spectrum-Sharing Integrated Terrestrial and Satellite Networks: Modeling, Approximation, and Robust Transmit Beamforming

Jun 13, 2025

Abstract: This paper investigates robust transmit (TX) beamforming from the satellite to user terminals (UTs), based on statistical channel state information (CSI). The proposed design specifically targets the mitigation of satellite-to-terrestrial interference in spectrum-sharing integrated terrestrial and satellite networks. By leveraging the distribution information of terrestrial UTs, we first establish an interference model from the satellite to terrestrial systems without shared CSI. Based on this, robust TX beamforming schemes are developed under both the interference threshold and the power budget. Two optimization criteria are considered: satellite weighted sum rate maximization and mean square error minimization. The former achieves superior achievable-rate performance through an iterative optimization framework, whereas the latter enables a low-complexity closed-form solution at the expense of reduced rate, with interference constraints satisfied via a bisection method. To avoid complex integral calculations and the dependence on user distribution information in inter-system interference evaluations, we propose a terrestrial base station position-aided approximation method, and the approximation errors are subsequently analyzed. Numerical simulations validate the effectiveness of our proposed schemes.
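The abstract mentions that interference constraints are satisfied via a bisection method. A generic sketch of that idea follows, assuming the leaked interference decreases monotonically in a scalar multiplier `mu`. The function `toy_interference` is a made-up stand-in, not the paper's interference model, and `bisect_multiplier` is a hypothetical helper name.

```python
def bisect_multiplier(interference, threshold, tol=1e-9):
    """Smallest mu >= 0 (up to tol) with interference(mu) <= threshold,
    assuming interference is monotonically decreasing in mu."""
    if interference(0.0) <= threshold:
        return 0.0                      # constraint inactive, no penalty needed
    lo, hi = 0.0, 1.0
    while interference(hi) > threshold: # grow the bracket until it is feasible
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol:                # shrink the bracket around the crossing
        mid = 0.5 * (lo + hi)
        if interference(mid) > threshold:
            lo = mid
        else:
            hi = mid
    return hi


def toy_interference(mu):
    """Illustrative stand-in: leakage of 4.0 at mu = 0, decaying as mu grows."""
    return 4.0 / (1.0 + mu) ** 2


mu_star = bisect_multiplier(toy_interference, threshold=1.0)
```

In a closed-form design of this kind, each evaluation of `interference(mu)` would recompute the beamformer for that multiplier, so the overall cost is a small number of closed-form evaluations rather than an iterative solver.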

Time-Continuous Frequency Allocation for Feeder Links of Mega Constellations with Multi-Antenna Gateway Stations

May 18, 2025

Abstract: With the recent rapid advancement of mega low earth orbit (LEO) satellite constellations, the multi-antenna gateway station (MAGS) has emerged as a key enabler to support extremely high system capacity via massive feeder links. However, the densification of both space and ground segments leads to reduced spatial separation between links, posing unprecedented challenges of interference exacerbation. This paper investigates graph coloring-based frequency allocation methods for interference mitigation (IM) of mega LEO systems. We first reveal the characteristics of the MAGS interference pattern and formulate the IM problem into a $K$-coloring problem using an adaptive threshold method. Then we propose two tailored graph coloring algorithms, namely Generalized Global (GG) and Clique-Based Tabu Search (CTS), to solve this problem. GG employs a low-complexity greedy conflict avoidance strategy, while CTS leverages the unique clique structure brought by MAGSs to enhance IM performance. Subsequently, we innovatively modify them to achieve time-continuous frequency allocation, which is crucial to ensure the stability of feeder links. Moreover, we further devise two mega constellation decomposition methods to alleviate the complexity burden of satellite operators. Finally, we propose a list coloring-based vacant subchannel utilization method to further improve spectrum efficiency and system capacity. Simulation results on the Starlink constellation of the first and second generations with 34396 satellites demonstrate the effectiveness and superiority of the proposed methodology.
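Frequency allocation as $K$-coloring can be sketched with a plain greedy heuristic: each feeder link is a vertex, an edge marks two links that interfere, and a color is a subchannel. The code below is a textbook greedy coloring, not the paper's GG or CTS algorithm. The vertex ordering, the tie-breaking rule, and the small example graph are assumptions.

```python
def greedy_k_coloring(adjacency, k):
    """Assign each vertex the color (subchannel) that conflicts with the fewest
    already-colored neighbors, visiting high-degree vertices first."""
    colors = {}
    order = sorted(adjacency, key=lambda v: -len(adjacency[v]))
    for v in order:
        conflicts = [0] * k
        for u in adjacency[v]:
            if u in colors:
                conflicts[colors[u]] += 1
        colors[v] = min(range(k), key=lambda c: conflicts[c])
    return colors


def count_conflicts(adjacency, colors):
    """Number of interfering link pairs that ended up on the same subchannel."""
    return sum(1 for v in adjacency for u in adjacency[v]
               if v < u and colors[v] == colors[u])


# Hypothetical interference graph: links 0, 1, 2 share one gateway (a clique),
# and link 3 interferes only with link 2.
graph = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}

plan_3 = greedy_k_coloring(graph, k=3)
plan_2 = greedy_k_coloring(graph, k=2)
```

With three subchannels the clique can be separated completely. With only two, at least one pair inside the clique must share a subchannel, which is why clique structure matters for how many subchannels a gateway needs.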

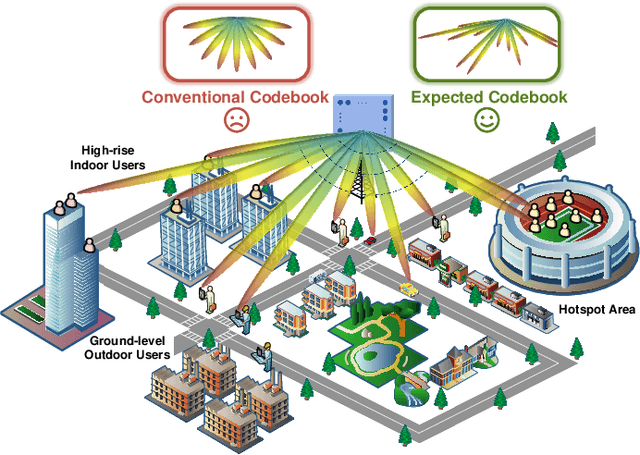

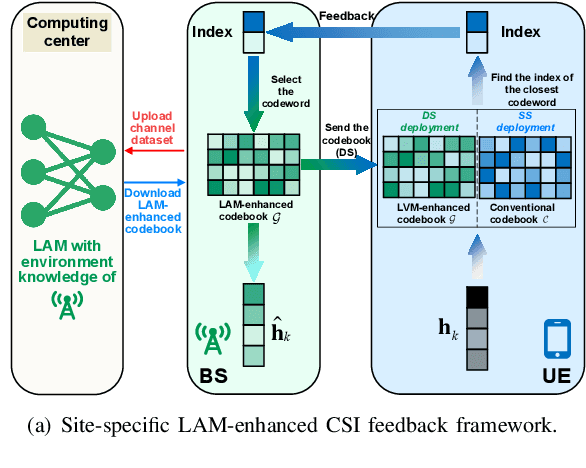

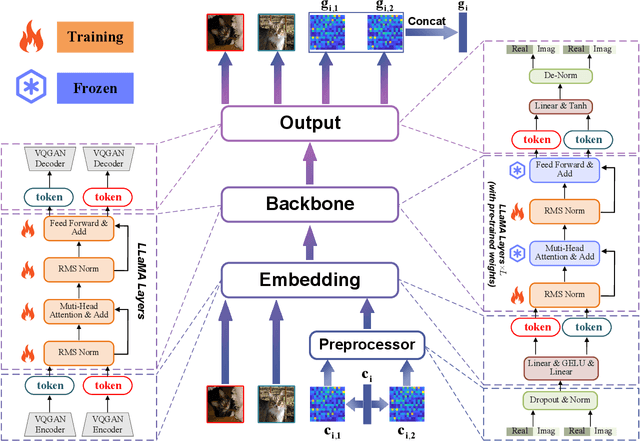

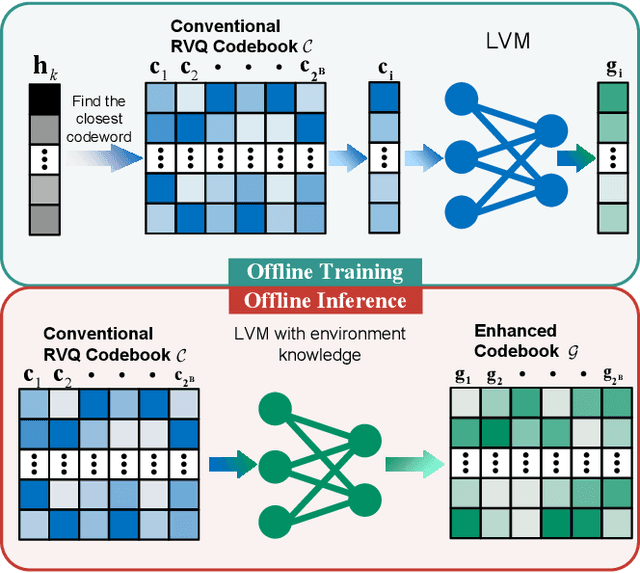

Extract the Best, Discard the Rest: CSI Feedback with Offline Large AI Models

May 13, 2025

Abstract: Large AI models (LAMs) have shown strong potential in wireless communication tasks, but their practical deployment remains hindered by latency and computational constraints. In this work, we focus on the challenge of integrating LAMs into channel state information (CSI) feedback for frequency-division duplex (FDD) massive multiple-input multiple-output (MIMO) systems. To this end, we propose two offline frameworks, namely site-specific LAM-enhanced CSI feedback (SSLCF) and multi-scenario LAM-enhanced CSI feedback (MSLCF), that incorporate LAMs into the codebook-based CSI feedback paradigm without requiring real-time inference. Specifically, SSLCF generates a site-specific enhanced codebook through fine-tuning on locally collected CSI data, while MSLCF improves generalization by pre-generating a set of environment-aware codebooks. Both of these frameworks build upon an LAM with a vision-based backbone, which is pre-trained on large-scale image datasets and fine-tuned with CSI data to generate customized codebooks. The resulting network, named LVM4CF, captures the structural similarity between CSI and images, allowing the LAM to refine codewords tailored to the specific environments. To optimize the codebook refinement capability of LVM4CF under both single- and dual-side deployment modes, we further propose corresponding training and inference algorithms. Simulation results show that our frameworks significantly outperform existing schemes in both reconstruction accuracy and system throughput, without introducing additional inference latency or computational overhead. These results also support the core design methodology of our proposed frameworks, extracting the best and discarding the rest, as a promising pathway for integrating LAMs into future wireless systems.
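The codebook-based feedback paradigm referred to in this abstract reduces the run-time step to a table lookup: the terminal picks the codeword best aligned with its channel and reports only the index. The sketch below illustrates that lookup with a plain DFT codebook. The codebook construction, sizes, and function names are assumptions, and no LAM refinement is modeled.

```python
import cmath


def dft_codebook(n_antennas, n_codewords):
    """Unit-norm DFT beams; stands in for any codebook prepared offline."""
    scale = n_antennas ** 0.5
    return [[cmath.exp(2j * cmath.pi * m * k / n_codewords) / scale
             for m in range(n_antennas)]
            for k in range(n_codewords)]


def select_codeword(h, codebook):
    """Index of the codeword with the largest beamforming gain |c^H h|^2."""
    def gain(c):
        return abs(sum(ci.conjugate() * hi for ci, hi in zip(c, h))) ** 2
    return max(range(len(codebook)), key=lambda k: gain(codebook[k]))


codebook = dft_codebook(n_antennas=4, n_codewords=8)

# Hypothetical channel: codeword 5 scaled by 3 and rotated by a common phase.
rotation = cmath.exp(0.7j)
h = [3.0 * rotation * c for c in codebook[5]]

feedback_index = select_codeword(h, codebook)   # only this index is fed back
```

Swapping in a different codebook changes only the table passed to `select_codeword`, so any refinement done offline adds no computation to this run-time step.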

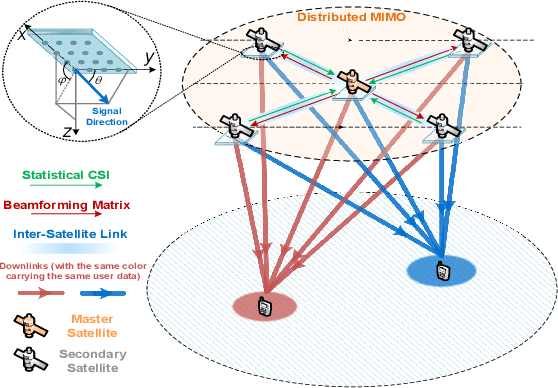

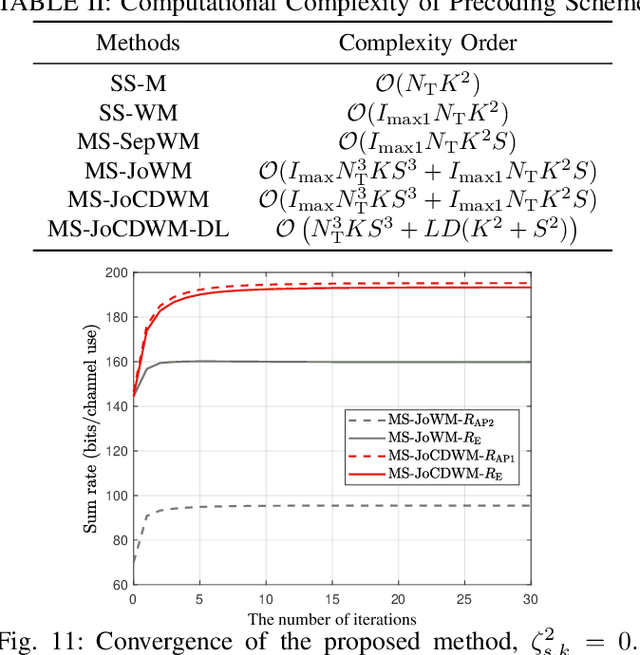

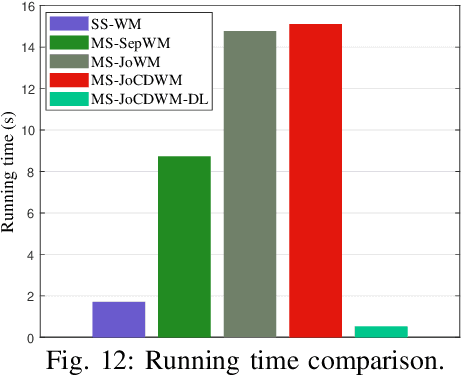

Statistical CSI-Based Distributed Precoding Design for OFDM-Cooperative Multi-Satellite Systems

May 12, 2025

Abstract: This paper investigates the design of distributed precoding for multi-satellite massive MIMO transmissions. We first conduct a detailed analysis of the transceiver model, in which delay and Doppler precompensation is introduced to ensure coherent transmission. In this analysis, we examine the impact of precompensation errors on the transmission model, emphasize the near-independence of inter-satellite interference, and ultimately derive the received signal model. Based on this signal model, we formulate an approximate expected rate maximization problem that considers both statistical channel state information (sCSI) and compensation errors. Unlike conventional approaches that recast such problems as weighted minimum mean square error (WMMSE) minimization, we demonstrate that this transformation fails to maintain equivalence in the considered scenario. To address this, we introduce an equivalent covariance decomposition-based WMMSE (CDWMMSE) formulation derived from channel covariance matrix decomposition. Taking advantage of the channel characteristics, we develop a low-complexity decomposition method and propose an optimization algorithm. To further reduce computational complexity, we introduce a model-driven scalable deep learning (DL) approach that leverages the equivariance of the mapping from sCSI to the unknown variables in the optimal closed-form solution, enhancing performance through a novel dense Transformer network and a scaling-invariant loss function design. Simulation results validate the effectiveness and robustness of the proposed method in some practical scenarios. We also demonstrate that the DL approach can adapt to dynamic settings with varying numbers of users and satellites.

Distributed U6G ELAA Communication Systems: Channel Measurement and Small-Scale Fading Characterization

Apr 29, 2025

Abstract: The distributed upper 6 GHz (U6G) extra-large scale antenna array (ELAA) is a key enabler for future wireless communication systems, offering higher throughput and wider coverage, similar to existing ELAA systems, while effectively mitigating unaffordable complexity and hardware overhead. Uncertain channel characteristics, however, present significant bottleneck problems that hinder the hardware structure and algorithm design of the distributed U6G ELAA system. In response, we construct a U6G channel sounder and carry out extensive measurement campaigns across various typical scenarios. Initially, U6G channel characteristics, particularly small-scale fading characteristics, are unveiled and compared across different scenarios. Subsequently, the U6G ELAA channel characteristics are analyzed using a virtual array comprising 64 elements. Furthermore, inspired by the potential for distributed processing, we investigate U6G ELAA channel characteristics from the perspectives of subarrays and sub-bands, including subarray-wise nonstationarities, consistencies, far-field approximations, and sub-band characteristics. Through a combination of analysis and measurement validation, several insights and benefits, particularly suitable for distributed processing in U6G ELAA systems, are revealed, providing practical validation for the deployment of U6G ELAA systems.
