Yiming Zhu

Multi-Satellite Multi-Stream Beamspace Massive MIMO Transmission

Dec 26, 2025

Abstract:This paper studies multi-satellite multi-stream (MSMS) beamspace transmission, where multiple satellites cooperate to form a distributed multiple-input multiple-output (MIMO) system and jointly deliver multiple data streams to multi-antenna user terminals (UTs), and beamspace transmission combines earth-moving beamforming with beam-domain precoding. For the first time, we formulate the signal model for MSMS beamspace MIMO transmission. Under synchronization errors, multi-antenna UTs enable the distributed MIMO channel to exhibit higher rank, supporting multiple data streams. Beamspace MIMO retains conventional codebook-based beamforming while providing the performance gains of precoding. Based on the signal model, we propose statistical channel state information (sCSI)-based optimization of satellite clustering, beam selection, and transmit precoding, using a sum-rate upper-bound approximation. With given satellite clustering and beam selection, we cast precoder design as an equivalent covariance decomposition-based weighted minimum mean square error (CDWMMSE) problem. To obtain tractable algorithms, we develop a closed-form covariance decomposition required by CDWMMSE and derive an iterative MSMS beam-domain precoder under sCSI. Following this, we further propose several heuristic closed-form precoders to avoid the cost of iteration. For satellite clustering, we enhance a competition-based algorithm by introducing a mechanism to regulate the number of satellites serving a given UT. Furthermore, we design a two-stage low-complexity beam selection algorithm focused on enhancing the effective channel power. Simulations under practical configurations validate the proposed methods across the number of data streams, receive antennas, serving satellites, and active beams, and show that beamspace transmission approaches conventional MIMO performance at lower complexity.
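As a rough illustration of what selecting beams by effective channel power under sCSI can look like, the sketch below ranks the beams of a DFT codebook by the power a channel covariance matrix places on each beam and keeps the strongest ones. The single-satellite setting, the uniform linear array, the DFT codebook, and the names `dft_codebook` and `select_beams` are all assumptions made for illustration; this is not the paper's two-stage algorithm.

```python
import numpy as np

def dft_codebook(num_antennas):
    """Unitary DFT codebook; column b is one beam (assumes a uniform linear array)."""
    n = np.arange(num_antennas)
    return np.exp(-2j * np.pi * np.outer(n, n) / num_antennas) / np.sqrt(num_antennas)

def select_beams(cov, codebook, num_active):
    """Keep the num_active beams with the largest statistical power f_b^H R f_b."""
    power = np.real(np.einsum("nb,nm,mb->b", codebook.conj(), cov, codebook))
    strongest = np.argsort(power)[::-1][:num_active]
    return np.sort(strongest), power

# Toy covariance: a rank-one channel aligned with beam 3 of the codebook.
N = 8
F = dft_codebook(N)
h = np.sqrt(N) * F[:, 3]
R = np.outer(h, h.conj())
beams, power = select_beams(R, F, num_active=1)
```

Only second-order statistics (the covariance `R`) enter the selection, which is the sense in which the sketch is sCSI-based.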

TAPOM: Task-Space Topology-Guided Motion Planning for Manipulating Elongated Object in Cluttered Environments

Nov 07, 2025

Abstract:Robotic manipulation in complex, constrained spaces is vital for widespread applications but challenging, particularly when navigating narrow passages with elongated objects. Existing planning methods often fail in these low-clearance scenarios due to sampling difficulties or local minima. This work proposes Topology-Aware Planning for Object Manipulation (TAPOM), which explicitly incorporates task-space topological analysis to enable efficient planning. TAPOM uses a high-level analysis to identify critical pathways and generate guiding keyframes, which a low-level planner then uses to find feasible configuration-space trajectories. Experimental validation demonstrates significantly higher success rates and improved efficiency over state-of-the-art methods on low-clearance manipulation tasks. This approach offers broad implications for enhancing manipulation capabilities of robots in complex real-world environments.

Dual-Domain Perspective on Degradation-Aware Fusion: A VLM-Guided Robust Infrared and Visible Image Fusion Framework

Sep 05, 2025

Abstract:Most existing infrared-visible image fusion (IVIF) methods assume high-quality inputs, and therefore struggle to handle dual-source degraded scenarios, typically requiring manual selection and sequential application of multiple pre-enhancement steps. This decoupled pre-enhancement-to-fusion pipeline inevitably leads to error accumulation and performance degradation. To overcome these limitations, we propose Guided Dual-Domain Fusion (GD^2Fusion), a novel framework that synergistically integrates vision-language models (VLMs) for degradation perception with dual-domain (frequency/spatial) joint optimization. Concretely, the designed Guided Frequency Modality-Specific Extraction (GFMSE) module performs frequency-domain degradation perception and suppression and discriminatively extracts fusion-relevant sub-band features. Meanwhile, the Guided Spatial Modality-Aggregated Fusion (GSMAF) module carries out cross-modal degradation filtering and adaptive multi-source feature aggregation in the spatial domain to enhance modality complementarity and structural consistency. Extensive qualitative and quantitative experiments demonstrate that GD^2Fusion achieves superior fusion performance compared with existing algorithms and strategies in dual-source degraded scenarios. The code will be publicly released after acceptance of this paper.

FSATFusion: Frequency-Spatial Attention Transformer for Infrared and Visible Image Fusion

Jun 12, 2025

Abstract:Infrared and visible image fusion (IVIF) is receiving increasing attention from both the research community and industry due to its excellent results in downstream applications. Existing deep learning approaches often utilize convolutional neural networks to extract image features. However, the inherently limited capacity of convolution operations to capture global context can lead to information loss, thereby restricting fusion performance. To address this limitation, we propose an end-to-end fusion network named the Frequency-Spatial Attention Transformer Fusion Network (FSATFusion). FSATFusion contains a frequency-spatial attention Transformer (FSAT) module designed to effectively capture discriminative features from source images. This FSAT module includes a frequency-spatial attention mechanism (FSAM) capable of extracting significant features from feature maps. Additionally, we propose an improved Transformer module (ITM) to enhance the vanilla Transformer's ability to extract global context information. We conducted both qualitative and quantitative comparative experiments, demonstrating the superior fusion quality and efficiency of FSATFusion compared to other state-of-the-art methods. Furthermore, our network was tested on two additional tasks without any modifications, to verify the generalization capability of FSATFusion. Finally, the object detection experiment demonstrated the superiority of FSATFusion in downstream visual tasks. Our code is available at https://github.com/Lmmh058/FSATFusion.

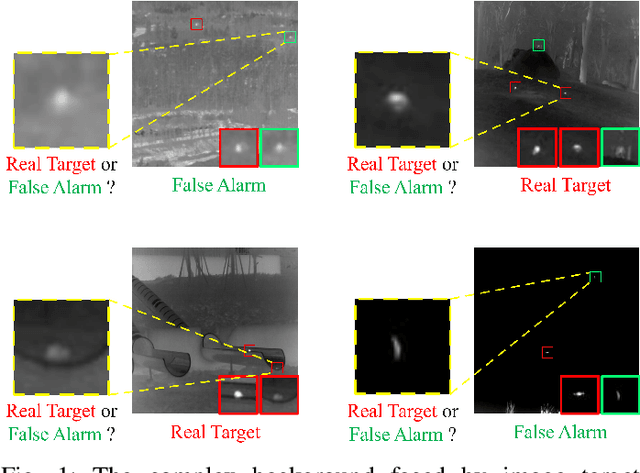

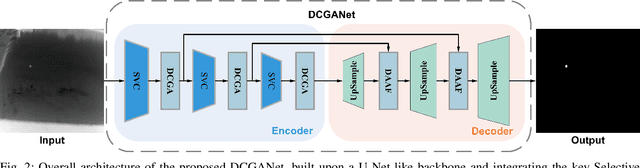

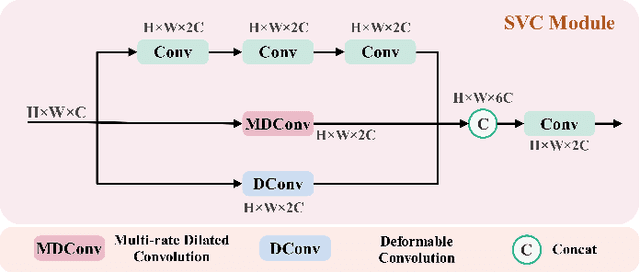

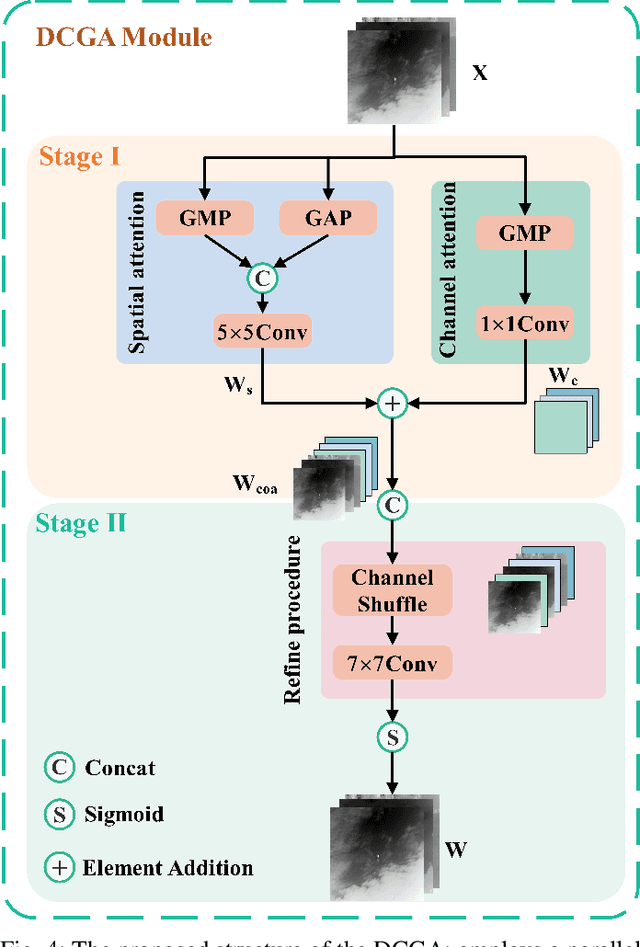

Selective Variable Convolution Meets Dynamic Content Guided Attention for Infrared Small Target Detection

Apr 30, 2025

Abstract:Infrared small target detection (IRSTD) systems aim to identify small targets in complex backgrounds. Due to the convolution operation in Convolutional Neural Networks (CNNs), applying traditional CNNs to IRSTD presents challenges, since the feature extraction of small targets is often insufficient, resulting in the loss of critical features. To address these issues, we propose a dynamic content guided attention multiscale feature aggregation network (DCGANet), which adheres to the 'coarse-to-fine' attention principle and achieves high detection accuracy. First, we propose a selective variable convolution (SVC) module that integrates the benefits of standard convolution, irregular deformable convolution, and multi-rate dilated convolution. This module is designed to expand the receptive field and enhance non-local features, thereby effectively improving the discrimination of targets from backgrounds. Second, the core component of DCGANet is a two-stage content guided attention module. This module employs a two-stage attention mechanism to initially direct the network's focus to salient regions within the feature maps and subsequently determine whether these regions correspond to targets or background interference. By retaining the most significant responses, this mechanism effectively suppresses false alarms. Additionally, we propose an adaptive dynamic feature fusion (ADFF) module to replace static feature cascading. This dynamic feature fusion strategy enables DCGANet to adaptively integrate contextual features, thereby enhancing its ability to discriminate true targets from false alarms. DCGANet has achieved new benchmarks across multiple datasets.

Make Both Ends Meet: A Synergistic Optimization Infrared Small Target Detection with Streamlined Computational Overhead

Apr 30, 2025

Abstract:Infrared small target detection (IRSTD) is widely recognized as a challenging task due to the inherent limitations of infrared imaging, including low signal-to-noise ratios, lack of texture details, and complex background interference. Most existing methods model IRSTD as a semantic segmentation task, but they suffer from two critical drawbacks: (1) blurred target boundaries caused by long-distance imaging dispersion; and (2) excessive computational overhead due to indiscriminate feature stacking. To address these issues, we propose the Lightweight Efficiency Infrared Small Target Detection (LE-IRSTD), a lightweight and efficient framework based on YOLOv8n, with the following key innovations. Firstly, we identify that the multiple bottleneck structures within the C2f component of the YOLOv8n backbone contribute to an increased computational burden. Therefore, we implement the Mobile Inverted Bottleneck Convolution block (MBConvblock) and Bottleneck Structure block (BSblock) in the backbone, effectively balancing the trade-off between computational efficiency and the extraction of deep semantic information. Secondly, we introduce the Attention-based Variable Convolution Stem (AVCStem) structure, substituting the final convolution with Variable Kernel Convolution (VKConv), which allows adaptive convolutional kernels that can take various shapes, adapting the receptive field for target extraction. Finally, we employ Global Shuffle Convolution (GSConv) to shuffle the channel dimension features obtained from different convolutional approaches, thereby enhancing the robustness and generalization capabilities of our method. Experimental results demonstrate that our LE-IRSTD method achieves compelling results in both accuracy and lightweight performance, outperforming several state-of-the-art deep learning methods.

DAAF: Degradation-Aware Adaptive Fusion Framework for Robust Infrared and Visible Images Fusion

Apr 15, 2025

Abstract:Existing infrared and visible image fusion (IVIF) algorithms often prioritize high-quality images, neglecting image degradation such as low light and noise, which limits their practical potential. This paper proposes Degradation-Aware Adaptive image Fusion (DAAF), which achieves unified modeling of adaptive degradation optimization and image fusion. Specifically, DAAF comprises an auxiliary Adaptive Degradation Optimization Network (ADON) and a Feature Interactive Local-Global Fusion (FILGF) Network. Firstly, ADON includes infrared and visible-light branches. Within the infrared branch, frequency-domain feature decomposition and extraction are employed to isolate Gaussian and stripe noise. In the visible-light branch, Retinex decomposition is applied to extract illumination and reflectance components, enabling complementary enhancement of detail and illumination distribution. Subsequently, FILGF performs interactive multi-scale local-global feature fusion. Local feature fusion consists of intra-inter model feature complement, while global feature fusion is achieved through an interactive cross-model attention. Extensive experiments have shown that DAAF outperforms current IVIF algorithms in normal and complex degradation scenarios.
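For readers unfamiliar with Retinex decomposition, the following is a minimal classical sketch, assuming the standard model in which an image is the element-wise product of reflectance and illumination. Estimating illumination as a box-blurred per-pixel channel maximum is an assumption chosen for brevity, and the helper names are hypothetical; the learned decomposition inside ADON is not reproduced here.

```python
import numpy as np

def box_blur(img, radius):
    """Mean filter with edge padding (a cheap stand-in for a Gaussian blur)."""
    padded = np.pad(img, radius, mode="edge")
    k = 2 * radius + 1
    out = np.zeros_like(img, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def retinex_decompose(rgb, radius=2, eps=1e-6):
    """Split an HxWx3 image in [0, 1] into (reflectance, illumination).

    Illumination is a smoothed per-pixel channel maximum; reflectance is the
    image divided by it, so reflectance * illumination reproduces the input.
    """
    illum = np.maximum(box_blur(rgb.max(axis=2), radius), eps)
    reflect = rgb / illum[..., None]
    return reflect, illum

# Toy low-light image: random content scaled down to simulate dim lighting.
rng = np.random.default_rng(0)
image = 0.2 * rng.random((16, 16, 3))
reflectance, illumination = retinex_decompose(image)
```

Enhancement methods built on this model typically brighten or reshape `illumination` and then recombine it with `reflectance`.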

Characterizing LLM-driven Social Network: The Chirper.ai Case

Apr 14, 2025

Abstract:Large language models (LLMs) demonstrate the ability to simulate human decision-making processes, enabling their use as agents in modeling sophisticated social networks, both offline and online. Recent research has explored collective behavioral patterns and structural characteristics of LLM agents within simulated networks. However, empirical comparisons between LLM-driven and human-driven online social networks remain scarce, limiting our understanding of how LLM agents differ from human users. This paper presents a large-scale analysis of Chirper.ai, an X/Twitter-like social network entirely populated by LLM agents, comprising over 65,000 agents and 7.7 million AI-generated posts. For comparison, we collect a parallel dataset from Mastodon, a human-driven decentralized social network, with over 117,000 users and 16 million posts. We examine key differences between LLM agents and humans in posting behaviors, abusive content, and social network structures. Our findings provide critical insights into the evolving landscape of online social network analysis in the AI era, offering a comprehensive profile of LLM agents in social simulations.

A Tensor-Structured Approach to Dynamic Channel Prediction for Massive MIMO Systems with Temporal Non-Stationarity

Dec 09, 2024

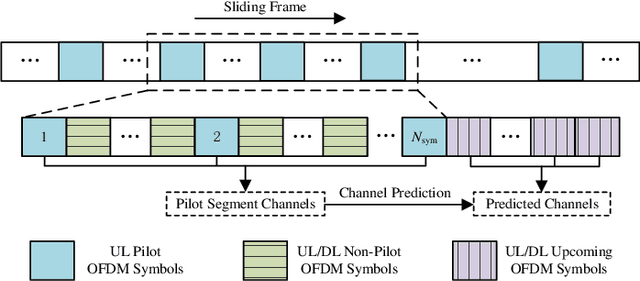

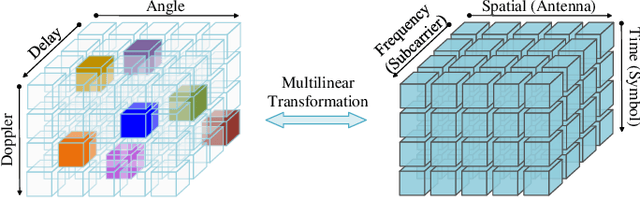

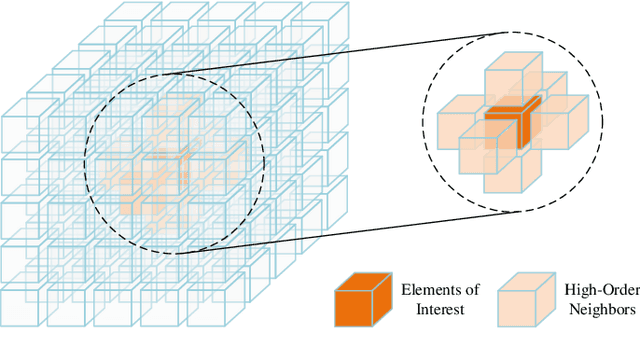

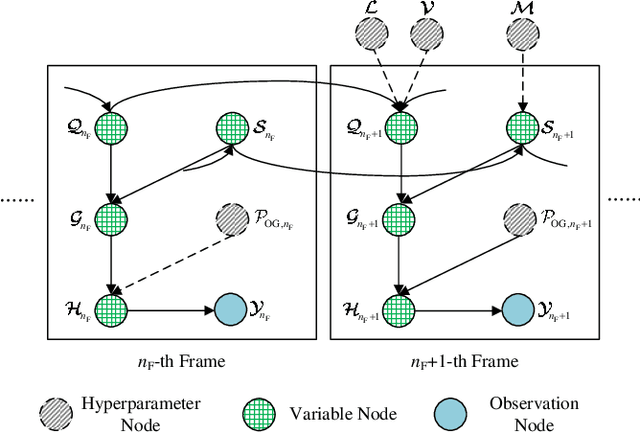

Abstract:In moderate- to high-mobility scenarios, channel state information (CSI) varies rapidly and becomes temporally non-stationary, leading to significant performance degradation in channel reciprocity-dependent massive multiple-input multiple-output (MIMO) transmission. To address this challenge, we propose a tensor-structured approach to dynamic channel prediction (TS-DCP) for massive MIMO systems with temporal non-stationarity, leveraging dual-timescale and cross-domain correlations. Specifically, due to the inherent spatial consistency, channels that are non-stationary on long timescales are treated as stationary on short timescales, decoupling complicated correlations into more tractable dual-timescale ones. To exploit this property, we group the pilot symbols into frames, capturing short-timescale correlations within frames by Doppler domain modeling and long-timescale correlations across frames by Markov/autoregressive processes. Based on this, we develop the tensor-structured signal model in the spatial-frequency-temporal domain, incorporating correlated angle-delay-Doppler domain channels and Vandermonde-structured factor matrices. Furthermore, we model cross-domain correlations within each frame, arising from clustered scatterer distributions, using tensor-structured extensions of Markov processes and coupled Gaussian distributions. Following these probabilistic models, we formulate the TS-DCP as a variational free energy (VFE) minimization problem, designing trial belief structures through online approximation and the Bethe method. This yields the online TS-DCP algorithm derived from a dual-layer VFE optimization process, where both outer and inner layers leverage the multilinear structure of channels to reduce computational complexity significantly. Numerical simulations demonstrate the significant superiority of the proposed algorithm over benchmarks in terms of channel prediction performance.
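To make the idea of modeling correlations across frames with an autoregressive process concrete, here is a toy sketch of a first-order complex Gauss-Markov channel and its one-step predictor. The scalar correlation coefficient `rho`, the independent taps, and the function names are illustrative assumptions; the tensor-structured model and the VFE-based algorithm are far richer than this.

```python
import numpy as np

def simulate_ar1_channel(num_frames, num_taps, rho, rng):
    """Simulate h[t] = rho * h[t-1] + sqrt(1 - rho^2) * w[t] with unit-variance taps."""
    def complex_normal(size):
        return (rng.standard_normal(size) + 1j * rng.standard_normal(size)) / np.sqrt(2)

    h = np.empty((num_frames, num_taps), dtype=complex)
    h[0] = complex_normal(num_taps)
    for t in range(1, num_frames):
        h[t] = rho * h[t - 1] + np.sqrt(1 - rho**2) * complex_normal(num_taps)
    return h

def predict_next(h_t, rho):
    """One-step MMSE prediction under the AR(1) model with known rho."""
    return rho * h_t

rng = np.random.default_rng(0)
rho = 0.5
h = simulate_ar1_channel(num_frames=2000, num_taps=4, rho=rho, rng=rng)

# Compare AR(1) prediction against simply reusing the outdated channel.
pred_err = np.mean(np.abs(h[1:] - predict_next(h[:-1], rho)) ** 2)
hold_err = np.mean(np.abs(h[1:] - h[:-1]) ** 2)
```

Under this model the prediction error is about `1 - rho**2` per tap, while reusing the previous frame's channel costs about `2 * (1 - rho)`, which is why exploiting temporal correlation helps.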

Gradient is All You Need: Gradient-Based Attention Fusion for Infrared Small Target Detection

Sep 29, 2024

Abstract:Infrared small target detection (IRSTD) is widely used in civilian and military applications. However, IRSTD encounters several challenges, including the tendency for small and dim targets to be obscured by complex backgrounds. To address this issue, we propose the Gradient Network (GaNet), which aims to extract and preserve edge and gradient information of small targets. GaNet employs the Gradient Transformer (GradFormer) module, simulating central difference convolutions (CDC) to extract and integrate gradient features with deeper features. Furthermore, we propose a global feature extraction model (GFEM) that offers a comprehensive perspective to prevent the network from focusing solely on details while neglecting the background information. We compare the network with state-of-the-art (SOTA) approaches, and the results demonstrate that our method performs effectively. Our source code is available at https://github.com/greekinRoma/Gradient-Transformer.
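As background on the central difference convolution that GradFormer is said to simulate, the sketch below implements the commonly used CDC formulation on a single-channel image: a vanilla correlation minus `theta` times the center pixel multiplied by the kernel sum. The NumPy loop implementation, the function name, and the default `theta` are assumptions for illustration and are not taken from the GaNet code.

```python
import numpy as np

def central_difference_conv2d(x, w, theta=0.7):
    """Valid-mode 2D central difference convolution on a single-channel image.

    theta=0 reduces to plain cross-correlation; theta=1 responds only to
    differences between each neighbor and the patch center (gradient cues).
    """
    kh, kw = w.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[i:i + kh, j:j + kw]
            center = patch[kh // 2, kw // 2]
            vanilla = np.sum(w * patch)
            out[i, j] = vanilla - theta * center * np.sum(w)
    return out

# A flat background produces no response when theta=1, while a single bright
# pixel (a crude stand-in for a small target) does.
kernel = np.ones((3, 3))
flat = np.full((5, 5), 3.0)
spot = flat.copy()
spot[2, 2] = 10.0
flat_response = central_difference_conv2d(flat, kernel, theta=1.0)
spot_response = central_difference_conv2d(spot, kernel, theta=1.0)
```

The suppression of uniform regions is what makes difference-based operators attractive for dim targets against smooth backgrounds.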
