Brendan McMahan

On Design Principles for Private Adaptive Optimizers

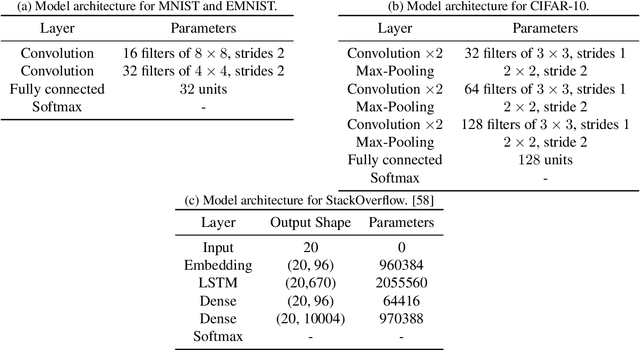

Jul 01, 2025

Abstract: The spherical noise added to gradients in differentially private (DP) training undermines the performance of adaptive optimizers like AdaGrad and Adam, and hence many recent works have proposed algorithms to address this challenge. However, the empirical results in these works focus on simple tasks and models, and the conclusions may not generalize to model training in practice. In this paper we survey several of these variants, develop better theoretical intuition for them, and perform empirical studies comparing them. We find that a common intuition of aiming for unbiased estimates of second moments of gradients in adaptive optimizers is misguided, and instead that a simple technique called scale-then-privatize (which does not achieve unbiased second moments) has more desirable theoretical behaviors and outperforms all other variants we study on a small-scale language model training task. We additionally argue that scale-then-privatize causes the noise addition to better match the application of correlated noise mechanisms, which are more desirable to use in practice.

It's My Data Too: Private ML for Datasets with Multi-User Training Examples

Mar 05, 2025

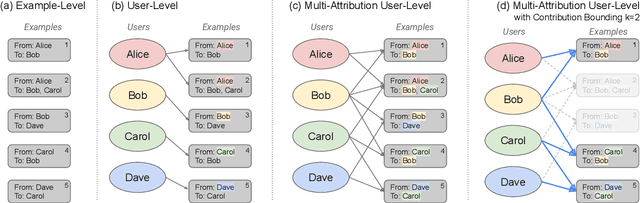

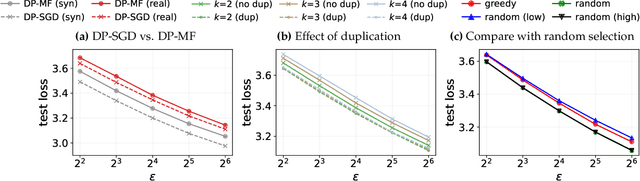

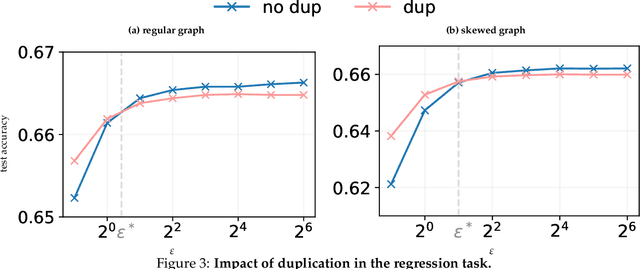

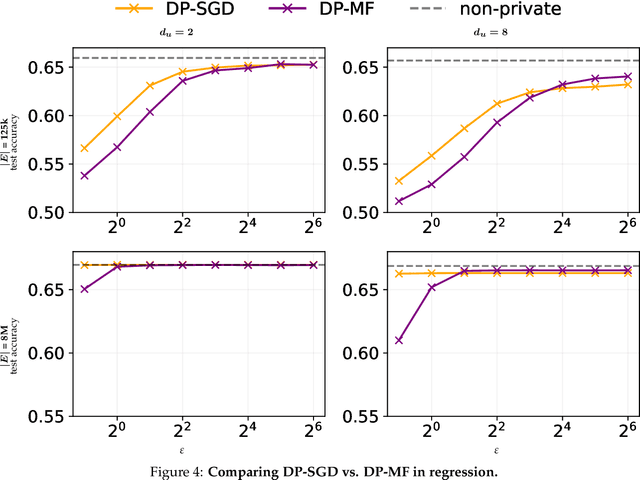

Abstract:We initiate a study of algorithms for model training with user-level differential privacy (DP), where each example may be attributed to multiple users, which we call the multi-attribution model. We first provide a carefully chosen definition of user-level DP under the multi-attribution model. Training in the multi-attribution model is facilitated by solving the contribution bounding problem, i.e. the problem of selecting a subset of the dataset for which each user is associated with a limited number of examples. We propose a greedy baseline algorithm for the contribution bounding problem. We then empirically study this algorithm for a synthetic logistic regression task and a transformer training task, including studying variants of this baseline algorithm that optimize the subset chosen using different techniques and criteria. We find that the baseline algorithm remains competitive with its variants in most settings, and build a better understanding of the practical importance of a bias-variance tradeoff inherent in solutions to the contribution bounding problem.
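The contribution bounding problem described in this abstract can be illustrated with a small sketch. The abstract does not spell out the greedy baseline, so the version below is an assumption: it scans examples in the given order and keeps an example only if every user attributed to it is still under the per-user limit `k`. The function name, the `(example_id, users)` input format, and the scan order are all hypothetical.

```python
from collections import Counter


def greedy_contribution_bounding(examples, k):
    """Select examples so that no user is attributed to more than k of them.

    examples: iterable of (example_id, users) pairs, where users is the set
              of user ids attributed to that example (multi-attribution).
    k:        maximum number of selected examples per user.

    Hypothetical first-fit greedy rule; the paper's baseline may differ.
    """
    counts = Counter()  # number of selected examples per user so far
    selected = []
    for example_id, users in examples:
        # Keep the example only if it would not push any user over the limit.
        if all(counts[user] < k for user in users):
            selected.append(example_id)
            for user in users:
                counts[user] += 1
    return selected


if __name__ == "__main__":
    data = [
        ("a", {"u1", "u2"}),
        ("b", {"u1"}),
        ("c", {"u2", "u3"}),
        ("d", {"u3"}),
    ]
    print(greedy_contribution_bounding(data, k=1))
    print(greedy_contribution_bounding(data, k=2))
```

With `k=1` the sketch keeps only `a` and `d`, because `b` and `c` each share a user with `a`; with `k=2` all four examples fit. A smaller `k` discards more data but limits each user's influence, which is one way to picture the bias-variance tradeoff the abstract mentions.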

Confidential Federated Computations

Apr 16, 2024

Abstract: Federated Learning and Analytics (FLA) have seen widespread adoption by technology platforms for processing sensitive on-device data. However, basic FLA systems have privacy limitations: they do not necessarily require anonymization mechanisms like differential privacy (DP), and provide limited protections against a potentially malicious service provider. Adding DP to a basic FLA system currently requires either adding excessive noise to each device's updates, or assuming an honest service provider that correctly implements the mechanism and only uses the privatized outputs. Secure multiparty computation (SMPC)-based oblivious aggregations can limit the service provider's access to individual user updates and improve DP tradeoffs, but the tradeoffs are still suboptimal, and they suffer from scalability challenges and susceptibility to Sybil attacks. This paper introduces a novel system architecture that leverages trusted execution environments (TEEs) and open-sourcing to both ensure confidentiality of server-side computations and provide externally verifiable privacy properties, bolstering the robustness and trustworthiness of private federated computations.

Convergence of Gradient Descent with Linearly Correlated Noise and Applications to Differentially Private Learning

Feb 02, 2023

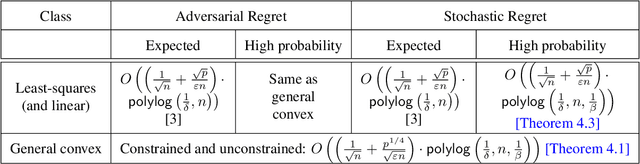

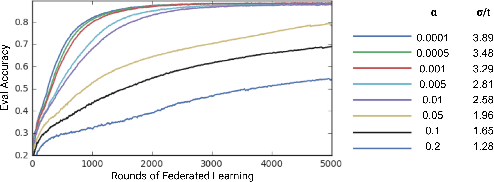

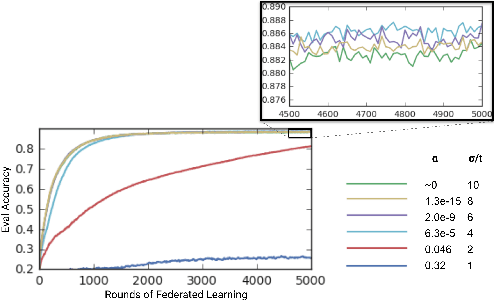

Abstract:We study stochastic optimization with linearly correlated noise. Our study is motivated by recent methods for optimization with differential privacy (DP), such as DP-FTRL, which inject noise via matrix factorization mechanisms. We propose an optimization problem that distils key facets of these DP methods and that involves perturbing gradients by linearly correlated noise. We derive improved convergence rates for gradient descent in this framework for convex and non-convex loss functions. Our theoretical analysis is novel and might be of independent interest. We use these convergence rates to develop new, effective matrix factorizations for differentially private optimization, and highlight the benefits of these factorizations theoretically and empirically.

Federated Select: A Primitive for Communication- and Memory-Efficient Federated Learning

Aug 19, 2022

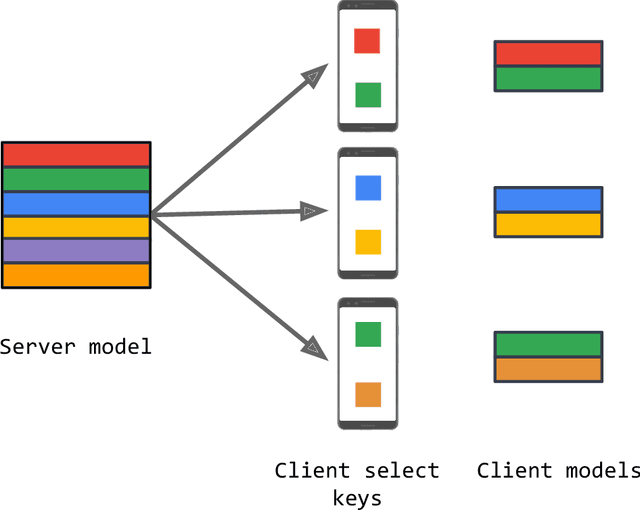

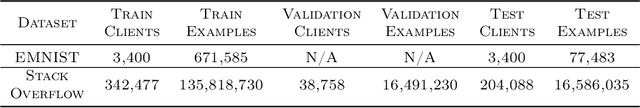

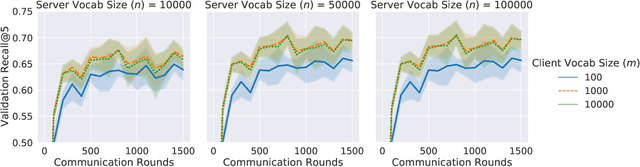

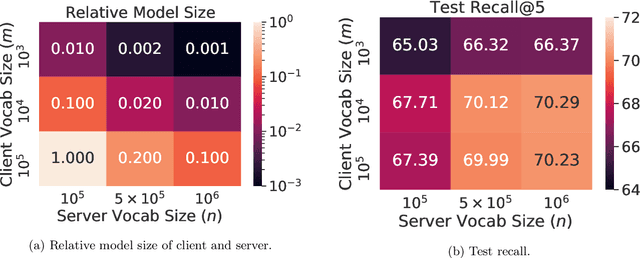

Abstract:Federated learning (FL) is a framework for machine learning across heterogeneous client devices in a privacy-preserving fashion. To date, most FL algorithms learn a "global" server model across multiple rounds. At each round, the same server model is broadcast to all participating clients, updated locally, and then aggregated across clients. In this work, we propose a more general procedure in which clients "select" what values are sent to them. Notably, this allows clients to operate on smaller, data-dependent slices. In order to make this practical, we outline a primitive, federated select, which enables client-specific selection in realistic FL systems. We discuss how to use federated select for model training and show that it can lead to drastic reductions in communication and client memory usage, potentially enabling the training of models too large to fit on-device. We also discuss the implications of federated select on privacy and trust, which in turn affect possible system constraints and design. Finally, we discuss open questions concerning model architectures, privacy-preserving technologies, and practical FL systems.

Private Online Prefix Sums via Optimal Matrix Factorizations

Feb 16, 2022

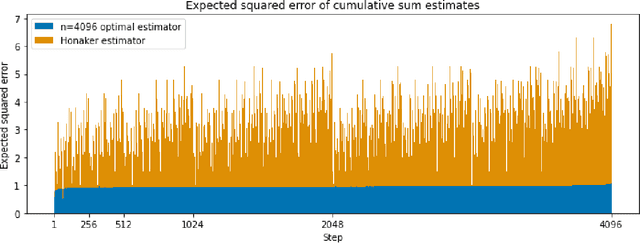

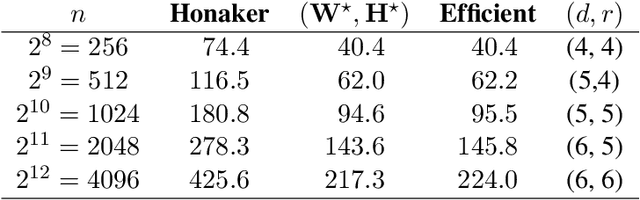

Abstract:Motivated by differentially-private (DP) training of machine learning models and other applications, we investigate the problem of computing prefix sums in the online (streaming) setting with DP. This problem has previously been addressed by special-purpose tree aggregation schemes with hand-crafted estimators. We show that these previous schemes can all be viewed as specific instances of a broad class of matrix-factorization-based DP mechanisms, and that in fact much better mechanisms exist in this class. In particular, we characterize optimal factorizations of linear queries under online constraints, deriving existence, uniqueness, and explicit expressions that allow us to efficiently compute optimal mechanisms, including for online prefix sums. These solutions improve over the existing state-of-the-art by a significant constant factor, and avoid some of the artifacts introduced by the use of the tree data structure.
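As a rough illustration of the matrix-factorization view in this abstract: write the prefix-sum workload as a lower-triangular all-ones matrix A, factor it as A = BC, and release B(Cx + z) with Gaussian noise z scaled to the sensitivity of C. The sketch below compares three factorizations by expected total squared error per unit noise multiplier. It assumes each participant affects a single step (so sensitivity is the largest column norm of C), and the square-root factorization is included only as a well-known reference point; it is not the optimal factorization derived in the paper. All function names are hypothetical.

```python
from math import comb


def prefix_sum_matrix(n):
    """Lower-triangular all-ones matrix A, so that (A x)[i] = x[0] + ... + x[i]."""
    return [[1.0 if j <= i else 0.0 for j in range(n)] for i in range(n)]


def identity_matrix(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def sqrt_prefix_sum_matrix(n):
    """Lower-triangular Toeplitz S with S @ S = A.

    The entries are the power-series coefficients of (1 - x)^(-1/2),
    namely comb(2k, k) / 4**k.
    """
    coeffs = [comb(2 * k, k) / 4 ** k for k in range(n)]
    return [[coeffs[i - j] if j <= i else 0.0 for j in range(n)] for i in range(n)]


def matmul(left, right):
    inner, cols = len(right), len(right[0])
    return [
        [sum(row[k] * right[k][j] for k in range(inner)) for j in range(cols)]
        for row in left
    ]


def total_squared_error(B, C):
    """Expected total squared error of B(Cx + z) at unit noise multiplier.

    Equals (max squared column norm of C) * (squared Frobenius norm of B),
    under the single-participation assumption stated above.
    """
    sensitivity_sq = max(sum(row[j] ** 2 for row in C) for j in range(len(C[0])))
    frobenius_sq = sum(value * value for row in B for value in row)
    return sensitivity_sq * frobenius_sq


if __name__ == "__main__":
    n = 4
    A, I, S = prefix_sum_matrix(n), identity_matrix(n), sqrt_prefix_sum_matrix(n)
    print("noise on inputs   (B=A, C=I):", total_squared_error(A, I))
    print("noise on outputs  (B=I, C=A):", total_squared_error(I, A))
    print("square-root       (B=C=S):  ", total_squared_error(S, S))
```

For n = 4 the two trivial factorizations give 10 and 16, while the square-root factorization gives roughly 7.63, which shows how the choice of factorization alone changes the error at a fixed privacy level.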

Practical and Private (Deep) Learning without Sampling or Shuffling

Feb 26, 2021

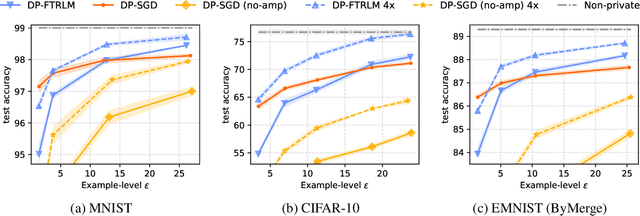

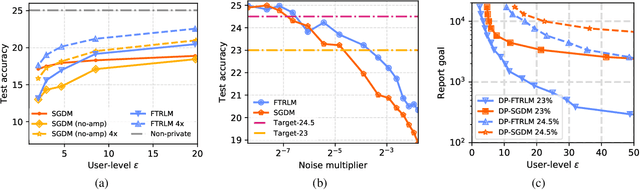

Abstract:We consider training models with differential privacy (DP) using mini-batch gradients. The existing state-of-the-art, Differentially Private Stochastic Gradient Descent (DP-SGD), requires privacy amplification by sampling or shuffling to obtain the best privacy/accuracy/computation trade-offs. Unfortunately, the precise requirements on exact sampling and shuffling can be hard to obtain in important practical scenarios, particularly federated learning (FL). We design and analyze a DP variant of Follow-The-Regularized-Leader (DP-FTRL) that compares favorably (both theoretically and empirically) to amplified DP-SGD, while allowing for much more flexible data access patterns. DP-FTRL does not use any form of privacy amplification.

Federated Learning with Autotuned Communication-Efficient Secure Aggregation

Nov 30, 2019

Abstract: Federated Learning enables mobile devices to collaboratively learn a shared inference model while keeping all the training data on a user's device, decoupling the ability to do machine learning from the need to store the data in the cloud. Existing work on federated learning with limited communication demonstrates how random rotation can enable users' model updates to be quantized much more efficiently, reducing the communication cost between users and the server. Meanwhile, secure aggregation enables the server to learn an aggregate of at least a threshold number of devices' model contributions without observing any individual device's contribution in unaggregated form. In this paper, we highlight some of the challenges of setting the parameters for secure aggregation to achieve communication efficiency, especially in the context of the aggressively quantized inputs enabled by random rotation. We then develop a recipe for auto-tuning communication-efficient secure aggregation, based on specific properties of random rotation and secure aggregation -- namely, the predictable distribution of vector entries post-rotation and the modular wrapping inherent in secure aggregation. We present both theoretical results and initial experiments.
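The "modular wrapping" mentioned in this abstract can be seen in a toy sketch of pairwise-masked aggregation over integers modulo `modulus`. This is not the paper's protocol: it assumes every pair of clients already shares a random mask (generated here from one seeded generator for simplicity), and it has no key agreement, dropout handling, or quantization step. The names `mask_updates` and `aggregate` are hypothetical.

```python
import random


def mask_updates(updates, modulus, seed=0):
    """Add cancelling pairwise masks to each client's quantized update.

    updates: list of equal-length lists of non-negative integers.
    For each pair (i, j) with i < j, client i adds a random mask and
    client j subtracts the same mask, all modulo `modulus`.
    """
    rng = random.Random(seed)  # toy stand-in for pairwise shared secrets
    dim = len(updates[0])
    masked = [[value % modulus for value in update] for update in updates]
    for i in range(len(updates)):
        for j in range(i + 1, len(updates)):
            mask = [rng.randrange(modulus) for _ in range(dim)]
            for t in range(dim):
                masked[i][t] = (masked[i][t] + mask[t]) % modulus
                masked[j][t] = (masked[j][t] - mask[t]) % modulus
    return masked


def aggregate(masked, modulus):
    """Server-side sum of masked updates; the pairwise masks cancel."""
    dim = len(masked[0])
    return [sum(update[t] for update in masked) % modulus for t in range(dim)]


if __name__ == "__main__":
    updates = [[1, 2, 3], [4, 5, 6], [7, 0, 1]]  # true sum is [12, 7, 10]
    print(aggregate(mask_updates(updates, 16), 16))  # modulus large enough
    print(aggregate(mask_updates(updates, 8), 8))    # sum wraps around
```

With modulus 16 the server recovers the true sum `[12, 7, 10]`; with modulus 8 it sees `[4, 7, 2]`, because two coordinates wrapped. A smaller modulus means fewer bits per coordinate but a higher chance of wrapping, which is the kind of parameter choice the abstract says needs tuning.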

Graph Oracle Models, Lower Bounds, and Gaps for Parallel Stochastic Optimization

Jul 31, 2018

Abstract:We suggest a general oracle-based framework that captures different parallel stochastic optimization settings described by a dependency graph, and derive generic lower bounds in terms of this graph. We then use the framework and derive lower bounds for several specific parallel optimization settings, including delayed updates and parallel processing with intermittent communication. We highlight gaps between lower and upper bounds on the oracle complexity, and cases where the "natural" algorithms are not known to be optimal.

Federated Optimization: Distributed Optimization Beyond the Datacenter

Nov 11, 2015

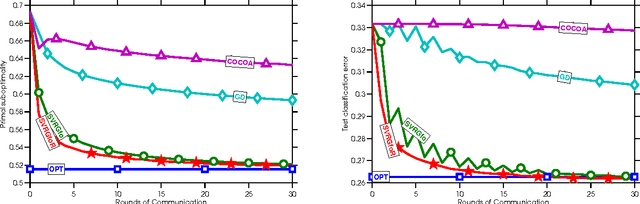

Abstract: We introduce a new and increasingly relevant setting for distributed optimization in machine learning, where the data defining the optimization are distributed (unevenly) over an extremely large number of nodes, but the goal remains to train a high-quality centralized model. We refer to this setting as Federated Optimization. In this setting, communication efficiency is of utmost importance. A motivating example for federated optimization arises when we keep the training data locally on users' mobile devices rather than logging it to a data center for training. Instead, the mobile devices are used as nodes performing computation on their local data in order to update a global model. We suppose that we have an extremely large number of devices in our network, each of which has only a tiny fraction of the total data available; in particular, we expect the number of data points available locally to be much smaller than the number of devices. Additionally, since different users generate data with different patterns, we assume that no device has a representative sample of the overall distribution. We show that existing algorithms are not suitable for this setting, and propose a new algorithm which shows encouraging experimental results. This work also sets a path for future research needed in the context of federated optimization.
