Ziyang Yu

ICPC: In-context Prompt Compression with Faster Inference

Jan 03, 2025

Abstract: Despite the recent success of Large Language Models (LLMs), it remains challenging to feed LLMs long prompts because of their fixed input size. As a remedy, prompt compression is a promising solution that removes redundant tokens from the prompt. However, existing methods rely on an LLM for compression, which requires additional computation and incurs memory overhead. To address this, we propose ICPC (In-context Prompt Compression), a novel and scalable prompt compression method that adaptively reduces the prompt length. The key idea of ICPC is to use encoders to calculate the probability of each word appearing in the prompt and then to calculate the information carried by each word through the information function, which effectively reduces information loss during prompt compression and increases compression speed. Empirically, we demonstrate that ICPC can effectively compress long texts of different categories and thus achieve better performance and speed on different types of NLP tasks.
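The abstract's key idea (score each word by the information it carries, then drop low-information words) can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes the information function is the standard self-information I(w) = -log2 p(w), and it takes per-word probabilities as a plain list rather than computing them with an encoder. The names `self_information`, `compress`, and `keep_ratio` are hypothetical.

```python
import math


def self_information(prob):
    """Self-information in bits; assumed form of the 'information function'."""
    return -math.log2(prob)


def compress(words, probs, keep_ratio=0.5):
    """Keep the most informative words, preserving their original order.

    `probs[i]` is the probability assigned to `words[i]`. In the paper these
    come from an encoder; here they are supplied by the caller (assumption).
    """
    n_keep = max(1, round(len(words) * keep_ratio))
    # Rank word positions from most to least informative.
    ranked = sorted(
        range(len(words)),
        key=lambda i: self_information(probs[i]),
        reverse=True,
    )
    # Restore the original word order among the kept positions.
    kept = sorted(ranked[:n_keep])
    return [words[i] for i in kept]


words = ["the", "cat", "sat", "on", "the", "mat"]
probs = [0.9, 0.1, 0.2, 0.8, 0.9, 0.1]  # made-up values for illustration
print(compress(words, probs, keep_ratio=0.5))
```

With these made-up probabilities, the highly predictable function words ("the", "on") carry little information and are the ones removed.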

Domain Adaptation-based Edge Computing for Cross-Conditions Fault Diagnosis

Nov 15, 2024

Abstract: Fault diagnosis technology supports the healthy operation of mechanical equipment. However, varying conditions during the operation of mechanical equipment lead to significant disparities in data distribution, posing challenges to fault diagnosis. Furthermore, when deploying applications, traditional methods often encounter issues such as latency and data security. Therefore, conducting fault diagnosis and deploying application methods under cross-operating conditions holds significant value. This paper proposes a domain adaptation-based lightweight fault diagnosis framework for edge computing scenarios. Incorporating the local maximum mean discrepancy into knowledge transfer aligns the feature distributions of different domains in a high-dimensional feature space, to discover a common feature space across domains. The fault diagnosis expertise acquired by the cloud-model is transferred to the lightweight edge-model using adaptation knowledge transfer methods. While ensuring real-time diagnostic capabilities, accurate fault diagnosis is achieved across working conditions. We conducted validation experiments on the NVIDIA Jetson Xavier NX kit. In terms of diagnostic performance, the proposed method significantly improved diagnostic accuracy, with average increases of 34.44% and 17.33% compared to the comparison method, respectively. Regarding lightweight effectiveness, the proposed method achieved an average inference speed increase of 80.47%. Additionally, compared to the cloud-model, the parameter count of the edge-model decreased by 96.37%, while the FLOPs decreased by 83.08%.

Force-Guided Bridge Matching for Full-Atom Time-Coarsened Dynamics of Peptides

Aug 27, 2024

Abstract: Molecular Dynamics (MD) simulations are irreplaceable and ubiquitous in fields such as materials science, chemistry, and pharmacology, to name just a few. Conventional MD simulations are plagued by numerical stability as well as long equilibration time issues, which limit broader applications of MD simulations. Recently, a surge of deep learning approaches have been devised for time-coarsened dynamics, which learn the state transition mechanism over much larger time scales to overcome these limitations. However, only a few methods target the underlying Boltzmann distribution by resampling techniques, where proposals are rarely accepted as new states, resulting in low efficiency. In this work, we propose a force-guided bridge matching model, FBM, a novel framework that first incorporates physical priors into bridge matching for full-atom time-coarsened dynamics. With the guidance of our well-designed intermediate force field, FBM can target the Boltzmann-like distribution by direct inference without extra steps. Experiments on small peptides verify our superiority in terms of comprehensive metrics and demonstrate transferability to unseen peptide systems.

A Survey of Geometric Graph Neural Networks: Data Structures, Models and Applications

Mar 01, 2024

Abstract: A geometric graph is a special kind of graph with geometric features, which is vital for modeling many scientific problems. Unlike generic graphs, geometric graphs often exhibit physical symmetries of translations, rotations, and reflections, which makes them difficult for current Graph Neural Networks (GNNs) to process effectively. To tackle this issue, researchers have proposed a variety of Geometric Graph Neural Networks equipped with invariant/equivariant properties to better characterize the geometry and topology of geometric graphs. Given the current progress in this field, it is imperative to conduct a comprehensive survey of data structures, models, and applications related to geometric GNNs. In this paper, based on the necessary but concise mathematical preliminaries, we provide a unified view of existing models from the geometric message passing perspective. Additionally, we summarize the applications as well as the related datasets to facilitate later research for methodology development and experimental evaluation. We also discuss the challenges and potential future directions of Geometric GNNs at the end of this survey.

Equivariant Pretrained Transformer for Unified Geometric Learning on Multi-Domain 3D Molecules

Feb 20, 2024

Abstract: Pretraining on a large number of unlabeled 3D molecules has showcased superiority in various scientific applications. However, prior efforts typically focus on pretraining models on a specific domain, either proteins or small molecules, missing the opportunity to leverage cross-domain knowledge. To bridge this gap, we introduce Equivariant Pretrained Transformer (EPT), a novel pretraining framework designed to harmonize the geometric learning of small molecules and proteins. To be specific, EPT unifies the geometric modeling of multi-domain molecules via a block-enhanced representation that can attend to a broader context of each atom. Built upon the transformer framework, EPT is further enhanced with E(3) equivariance to facilitate the accurate representation of 3D structures. Another key innovation of EPT is its block-level pretraining task, which allows for joint pretraining on datasets comprising both small molecules and proteins. Experimental evaluations on a diverse group of benchmarks, including ligand binding affinity prediction, molecular property prediction, and protein property prediction, show that EPT significantly outperforms previous SOTA methods for affinity prediction, and achieves the best or comparable performance with existing domain-specific pretraining models for other tasks.

Rigid Protein-Protein Docking via Equivariant Elliptic-Paraboloid Interface Prediction

Jan 17, 2024

Abstract: The study of rigid protein-protein docking plays an essential role in a variety of tasks such as drug design and protein engineering. Recently, several learning-based methods have been proposed for the task, exhibiting much faster docking speed than computational methods. In this paper, we propose a novel learning-based method called ElliDock, which predicts an elliptic paraboloid to represent the protein-protein docking interface. To be specific, our model estimates elliptic paraboloid interfaces for the two input proteins respectively, and obtains the roto-translation transformation for docking by making the two interfaces coincide. By its design, ElliDock is independently equivariant with respect to arbitrary rotations/translations of the proteins, which is an indispensable property to ensure the generalization of the docking process. Experimental evaluations show that ElliDock achieves the fastest inference time among all compared methods and is strongly competitive with current state-of-the-art learning-based models such as DiffDock-PP and Multimer, particularly for antibody-antigen docking.

Beyond Efficiency: A Systematic Survey of Resource-Efficient Large Language Models

Jan 04, 2024

Abstract: The burgeoning field of Large Language Models (LLMs), exemplified by sophisticated models like OpenAI's ChatGPT, represents a significant advancement in artificial intelligence. These models, however, bring forth substantial challenges in the high consumption of computational, memory, energy, and financial resources, especially in environments with limited resource capabilities. This survey aims to systematically address these challenges by reviewing a broad spectrum of techniques designed to enhance the resource efficiency of LLMs. We categorize methods based on their optimization focus (computational, memory, energy, financial, and network resources) and their applicability across various stages of an LLM's lifecycle, including architecture design, pretraining, finetuning, and system design. Additionally, the survey introduces a nuanced categorization of resource efficiency techniques by their specific resource types, which uncovers the intricate relationships and mappings between various resources and corresponding optimization techniques. A standardized set of evaluation metrics and datasets is also presented to facilitate consistent and fair comparisons across different models and techniques. By offering a comprehensive overview of the current state of the art and identifying open research avenues, this survey serves as a foundational reference for researchers and practitioners, aiding them in developing more sustainable and efficient LLMs in a rapidly evolving landscape.

Staleness-Alleviated Distributed GNN Training via Online Dynamic-Embedding Prediction

Aug 25, 2023

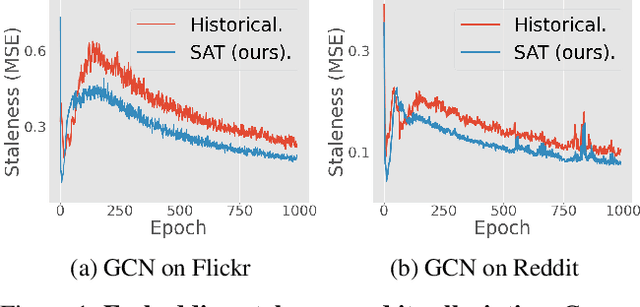

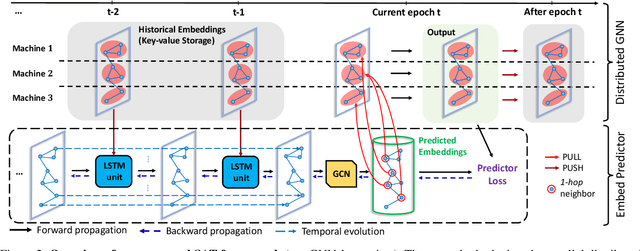

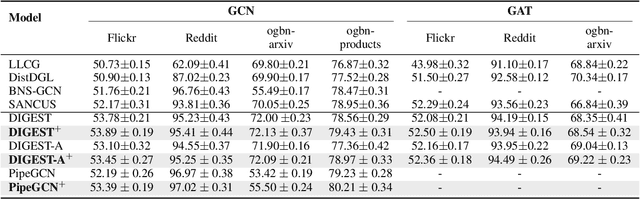

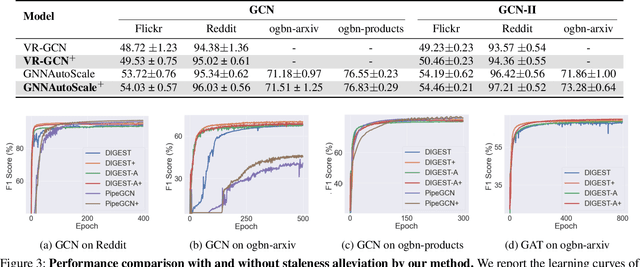

Abstract: Despite the recent success of Graph Neural Networks (GNNs), it remains challenging to train GNNs on large-scale graphs due to neighbor explosions. As a remedy, distributed computing is a promising solution that leverages abundant computing resources (e.g., GPUs). However, the node dependency of graph data increases the difficulty of achieving high concurrency in distributed GNN training, which suffers from massive communication overhead. To address this, historical value approximation is deemed a promising class of distributed training techniques. It utilizes an offline memory to cache historical information (e.g., node embeddings) as an affordable approximation of the exact value and achieves high concurrency. However, such benefits come at the cost of involving dated training information, leading to staleness, imprecision, and convergence issues. To overcome these challenges, this paper proposes SAT (Staleness-Alleviated Training), a novel and scalable distributed GNN training framework that reduces the embedding staleness adaptively. The key idea of SAT is to model the GNN's embedding evolution as a temporal graph and build a model upon it to predict future embeddings, which effectively alleviates the staleness of the cached historical embeddings. We propose an online algorithm to train the embedding predictor and the distributed GNN alternately and further provide a convergence analysis. Empirically, we demonstrate that SAT can effectively reduce embedding staleness and thus achieve better performance and convergence speed on multiple large-scale graph datasets.

DevelSet: Deep Neural Level Set for Instant Mask Optimization

Mar 18, 2023

Abstract: With the feature size continuously shrinking in advanced technology nodes, mask optimization is increasingly crucial in the conventional design flow, accompanied by an explosive growth in prohibitive computational overhead in optical proximity correction (OPC) methods. Recently, inverse lithography technique (ILT) has drawn significant attention and is becoming prevalent in emerging OPC solutions. However, ILT methods are either time-consuming or weak in mask printability and manufacturability. In this paper, we present DevelSet, a GPU and deep neural network (DNN) accelerated level set OPC framework for metal layers. We first improve the conventional level set-based ILT algorithm by introducing the curvature term to reduce mask complexity and applying GPU acceleration to overcome computational bottlenecks. To further enhance printability and achieve fast iterative convergence, we propose a novel deep neural network delicately designed with level set intrinsic principles to facilitate the joint optimization of the DNN and the GPU-accelerated level set optimizer. Experimental results show that the DevelSet framework surpasses the state-of-the-art methods in printability and boosts the runtime performance to an instant level (around 1 second).

AdaOPC: A Self-Adaptive Mask Optimization Framework For Real Design Patterns

Mar 15, 2023

Abstract: Optical proximity correction (OPC) is a widely used resolution enhancement technique (RET) for printability optimization. Recently, rigorous numerical optimization and fast machine learning have been the research focus of OPC in both academia and industry, each of which complements the other in terms of robustness or efficiency. We inspect the pattern distribution on a design layer and find that different sub-regions have different pattern complexity. We also find that many patterns appear repeatedly in the design layout, and these patterns may share optimized masks. We exploit these properties and propose a self-adaptive OPC framework to improve efficiency. First, we choose different OPC solvers adaptively for patterns of different complexity from an extensible solver pool to reach a speed/accuracy co-optimization. Apart from that, we prove the feasibility of reusing optimized masks for repeated patterns and hence build a graph-based dynamic pattern library that reuses stored masks to further speed up the OPC flow. Experimental results show that our framework achieves substantial improvement in both performance and efficiency.
