Zhiyong Huang

GIFT: Games as Informal Training for Generalizable LLMs

Jan 09, 2026

Abstract: While Large Language Models (LLMs) have achieved remarkable success in formal learning tasks such as mathematics and code generation, they still struggle with the "practical wisdom" and generalizable intelligence, such as strategic creativity and social reasoning, that characterize human cognition. This gap arises from a lack of informal learning, which thrives on interactive feedback rather than goal-oriented instruction. In this paper, we propose treating Games as a primary environment for LLM informal learning, leveraging their intrinsic reward signals and abstracted complexity to cultivate diverse competencies. To address the performance degradation observed in multi-task learning, we introduce a Nested Training Framework. Unlike naive task mixing optimizing an implicit "OR" objective, our framework employs sequential task composition to enforce an explicit "AND" objective, compelling the model to master multiple abilities simultaneously to achieve maximal rewards. Using GRPO-based reinforcement learning across Matrix Games, TicTacToe, and Who's the Spy games, we demonstrate that integrating game-based informal learning not only prevents task interference but also significantly bolsters the model's generalization across broad ability-oriented benchmarks. The framework and implementation are publicly available.
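
The contrast between the "OR" and "AND" objectives can be illustrated with a toy reward calculation. This is only a sketch of one possible reading of the abstract, not the paper's implementation: the function names, the use of a mean for task mixing, and the use of a product for sequential composition are all assumptions made for illustration.

```python
from statistics import mean


def mixed_reward(task_rewards):
    """Assumed model of naive task mixing: the expected reward is the average
    over independently sampled tasks, so strength on one task can offset
    weakness on another (an "OR"-like objective)."""
    return mean(task_rewards)


def nested_reward(task_rewards):
    """Assumed model of sequential composition: tasks are chained in one
    episode and the reward is the product of per-task rewards, so every
    task must go well to score highly (an "AND"-like objective)."""
    total = 1.0
    for reward in task_rewards:
        total *= reward
    return total


# Hypothetical per-task success scores in [0, 1] for two policies.
specialist = [1.0, 1.0, 0.1]  # strong on two games, weak on the third
generalist = [0.7, 0.7, 0.7]  # moderately good on all three

print(mixed_reward(specialist), mixed_reward(generalist))
print(nested_reward(specialist), nested_reward(generalist))
```

Under the averaged objective the two hypothetical policies score about the same, while the product-style objective clearly favors the policy that is competent at every task.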

AdamMeme: Adaptively Probe the Reasoning Capacity of Multimodal Large Language Models on Harmfulness

Jul 02, 2025

Abstract: The proliferation of multimodal memes in the social media era demands that multimodal Large Language Models (mLLMs) effectively understand meme harmfulness. Existing benchmarks for assessing mLLMs on harmful meme understanding rely on accuracy-based, model-agnostic evaluations using static datasets. These benchmarks are limited in their ability to provide up-to-date and thorough assessments, as online memes evolve dynamically. To address this, we propose AdamMeme, a flexible, agent-based evaluation framework that adaptively probes the reasoning capabilities of mLLMs in deciphering meme harmfulness. Through multi-agent collaboration, AdamMeme provides comprehensive evaluations by iteratively updating the meme data with challenging samples, thereby exposing specific limitations in how mLLMs interpret harmfulness. Extensive experiments show that our framework systematically reveals the varying performance of different target mLLMs, offering in-depth, fine-grained analyses of model-specific weaknesses. Our code is available at https://github.com/Lbotirx/AdamMeme.

Can Large Language Models Improve Spectral Graph Neural Networks?

Jun 17, 2025

Abstract: Spectral Graph Neural Networks (SGNNs) have attracted significant attention due to their ability to approximate arbitrary filters. They typically rely on supervision from downstream tasks to adaptively learn appropriate filters. However, under label-scarce conditions, SGNNs may learn suboptimal filters, leading to degraded performance. Meanwhile, the remarkable success of Large Language Models (LLMs) has inspired growing interest in exploring their potential within the GNN domain. This naturally raises an important question: Can LLMs help overcome the limitations of SGNNs and enhance their performance? In this paper, we propose a novel approach that leverages LLMs to estimate the homophily of a given graph. The estimated homophily is then used to adaptively guide the design of polynomial spectral filters, thereby improving the expressiveness and adaptability of SGNNs across diverse graph structures. Specifically, we introduce a lightweight pipeline in which the LLM generates homophily-aware priors, which are injected into the filter coefficients to better align with the underlying graph topology. Extensive experiments on benchmark datasets demonstrate that our LLM-driven SGNN framework consistently outperforms existing baselines under both homophilic and heterophilic settings, with minimal computational and monetary overhead.
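
For reference, the quantity being estimated can be made concrete. The abstract does not say which homophily measure is used, so the sketch below assumes the common edge homophily ratio (the fraction of edges whose endpoints share a label) and computes it from known labels on a made-up toy graph. In the label-scarce setting described above, an LLM would be estimating a value like this rather than computing it directly.

```python
def edge_homophily(edges, labels):
    """Return the fraction of edges whose two endpoints have the same label.

    `edges` is a list of (u, v) node pairs and `labels` maps node -> label.
    Values near 1 indicate a homophilic graph, values near 0 a heterophilic one.
    """
    if not edges:
        raise ValueError("graph has no edges")
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)


# Toy 4-node cycle with hypothetical labels (illustration only).
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
labels = {0: "a", 1: "a", 2: "b", 3: "b"}
h = edge_homophily(edges, labels)
print(h)
```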

3DSwapping: Texture Swapping For 3D Object From Single Reference Image

Mar 24, 2025

Abstract: 3D texture swapping allows for the customization of 3D object textures, enabling efficient and versatile visual transformations in 3D editing. While no dedicated method exists, adapted 2D editing and text-driven 3D editing approaches can serve this purpose. However, 2D editing requires frame-by-frame manipulation, causing inconsistencies across views, while text-driven 3D editing struggles to preserve texture characteristics from reference images. To tackle these challenges, we introduce 3DSwapping, a 3D texture swapping method that integrates: 1) progressive generation, 2) view-consistency gradient guidance, and 3) prompt-tuned gradient guidance. To ensure view consistency, our progressive generation process starts by editing a single reference image and gradually propagates the edits to adjacent views. Our view-consistency gradient guidance further reinforces consistency by conditioning the generation model on feature differences between consistent and inconsistent outputs. To preserve texture characteristics, we introduce prompt-tuning-based gradient guidance, which learns a token that precisely captures the difference between the reference image and the 3D object. This token then guides the editing process, ensuring more consistent texture preservation across views. Overall, 3DSwapping integrates these novel strategies to achieve higher-fidelity texture transfer while preserving structural coherence across multiple viewpoints. Extensive qualitative and quantitative evaluations confirm that our three novel components enable convincing and effective 2D texture swapping for 3D objects. Code will be available upon acceptance.

Addressing Heterogeneity and Heterophily in Graphs: A Heterogeneous Heterophilic Spectral Graph Neural Network

Oct 17, 2024

Abstract: Graph Neural Networks (GNNs) have garnered significant scholarly attention for their powerful capabilities in modeling graph structures. Despite this, two primary challenges persist: heterogeneity and heterophily. Existing studies often address heterogeneous and heterophilic graphs separately, leaving a research gap in the understanding of heterogeneous heterophilic graphs, that is, graphs that feature diverse node or relation types with dissimilar connected nodes. To address this gap, we investigate the application of spectral graph filters within heterogeneous graphs. Specifically, we propose a Heterogeneous Heterophilic Spectral Graph Neural Network (H2SGNN), which employs a dual-module approach: local independent filtering and global hybrid filtering. The local independent filtering module applies polynomial filters to each subgraph independently to adapt to different homophily, while the global hybrid filtering module captures interactions across different subgraphs. Extensive empirical evaluations on four real-world datasets demonstrate the superiority of H2SGNN compared to state-of-the-art methods.

SSNeRF: Sparse View Semi-supervised Neural Radiance Fields with Augmentation

Aug 17, 2024

Abstract: Sparse-view NeRF is challenging because limited input images lead to an under-constrained optimization problem for volume rendering. Existing methods address this issue by relying on supplementary information, such as depth maps. However, generating this supplementary information accurately remains problematic and often leads to NeRF producing images with undesired artifacts. To address these artifacts and enhance robustness, we propose SSNeRF, a sparse-view semi-supervised NeRF method based on a teacher-student framework. Our key idea is to challenge the NeRF module with progressively severe sparse-view degradation while providing high-confidence pseudo-labels. This approach helps the NeRF model become aware of noise and incomplete information associated with sparse views, thus improving its robustness. The novelty of SSNeRF lies in its sparse-view-specific augmentations and semi-supervised learning mechanism. In this approach, the teacher NeRF generates novel views along with confidence scores, while the student NeRF, perturbed by the augmented input, learns from the high-confidence pseudo-labels. Our sparse-view degradation augmentation progressively injects noise into volume rendering weights, perturbs feature maps in vulnerable layers, and simulates sparse-view blurriness. These augmentation strategies force the student NeRF to recognize degradation and produce clearer rendered views. By transferring the student's parameters to the teacher, the teacher gains increased robustness in subsequent training iterations. Extensive experiments demonstrate the effectiveness of our SSNeRF in generating novel views with less sparse-view degradation. We will release code upon acceptance.

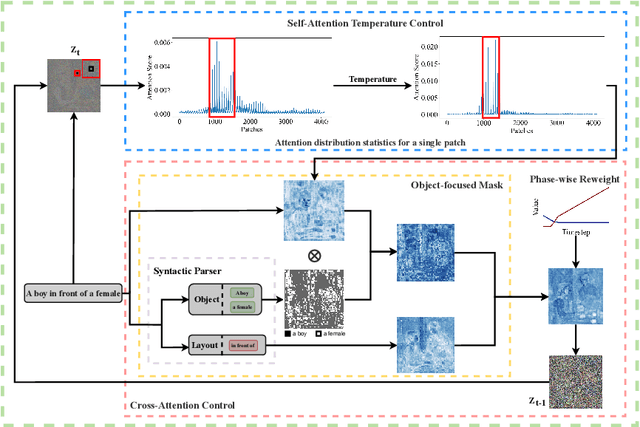

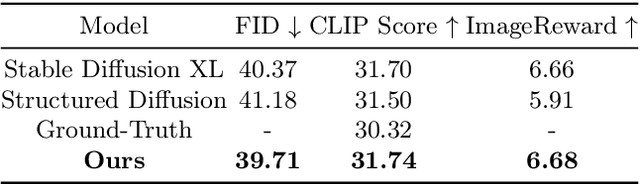

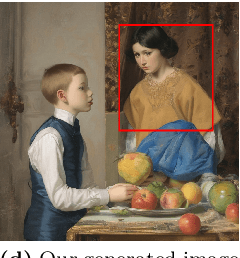

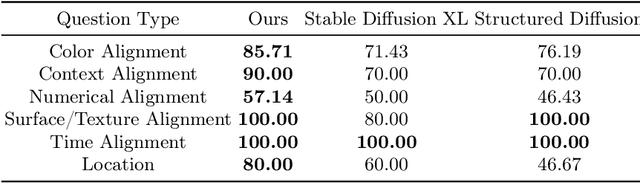

Towards Better Text-to-Image Generation Alignment via Attention Modulation

Apr 22, 2024

Abstract: In text-to-image generation tasks, advances in diffusion models have improved the fidelity of generated results. However, these models encounter challenges when processing text prompts containing multiple entities and attributes. The uneven distribution of attention results in entity leakage and attribute misalignment. Training from scratch to address this issue requires large amounts of labeled data and is resource-consuming. Motivated by this, we propose an attribution-focusing mechanism, a training-free, phase-wise mechanism that modulates attention in diffusion models. One of our core ideas is to guide the model to concentrate on the corresponding syntactic components of the prompt at distinct timesteps. To achieve this, we incorporate a temperature control mechanism within the early phases of the self-attention modules to mitigate entity leakage issues. An object-focused masking scheme and a phase-wise dynamic weight control mechanism are integrated into the cross-attention modules, enabling the model to discern the affiliation of semantic information between entities more effectively. The experimental results in various alignment scenarios demonstrate that our model attains better image-text alignment with minimal additional computational cost.
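
As a rough illustration of what temperature control over attention weights means in general, the sketch below applies a temperature-scaled softmax to a made-up row of attention scores. The abstract does not state the temperature values, their schedule, or how they are applied inside the self-attention modules, so the function name, scores, and temperatures here are assumptions for illustration only.

```python
import math


def softmax_with_temperature(scores, temperature=1.0):
    """Softmax over `scores` after dividing each score by `temperature`.

    A temperature below 1 sharpens the distribution (attention concentrates
    on the highest-scoring positions); a temperature above 1 flattens it.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical attention scores for one query over three keys.
scores = [2.0, 1.0, 0.5]
sharp = softmax_with_temperature(scores, temperature=0.5)
flat = softmax_with_temperature(scores, temperature=2.0)
print(sharp)
print(flat)
```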

Towards Controllable Time Series Generation

Mar 06, 2024

Abstract: Time Series Generation (TSG) has emerged as a pivotal technique in synthesizing data that accurately mirrors real-world time series, becoming indispensable in numerous applications. Despite significant advancements in TSG, its efficacy frequently hinges on having large training datasets. This dependency presents a substantial challenge in data-scarce scenarios, especially when dealing with rare or unique conditions. To confront these challenges, we explore a new problem of Controllable Time Series Generation (CTSG), aiming to produce synthetic time series that can adapt to various external conditions, thereby tackling the data scarcity issue. In this paper, we propose Controllable Time Series (CTS), an innovative VAE-agnostic framework tailored for CTSG. A key feature of CTS is that it decouples the mapping process from standard VAE training, enabling precise learning of a complex interplay between latent features and external conditions. Moreover, we develop a comprehensive evaluation scheme for CTSG. Extensive experiments across three real-world time series datasets showcase CTS's exceptional capabilities in generating high-quality, controllable outputs. This underscores its adeptness in seamlessly integrating latent features with external conditions. Extending CTS to the image domain highlights its remarkable potential for explainability and further reinforces its versatility across different modalities.

Multi-Prompts Learning with Cross-Modal Alignment for Attribute-based Person Re-Identification

Dec 28, 2023

Abstract: Fine-grained attribute descriptions can significantly supplement the semantic information in person images, which is vital to the success of the person re-identification (ReID) task. However, current ReID algorithms typically fail to effectively leverage the rich contextual information available, primarily due to their reliance on simplistic and coarse utilization of image attributes. Recent advances in artificial intelligence generated content have made it possible to automatically generate plentiful fine-grained attribute descriptions and make full use of them. This paper therefore explores the potential of using multiple generated person attributes as prompts in ReID tasks with off-the-shelf (large) models for more accurate retrieval results. To this end, we present a new framework called Multi-Prompts ReID (MP-ReID), based on prompt learning and language models, to fully exploit fine attributes to assist the ReID task. Specifically, MP-ReID first learns to hallucinate diverse, informative, and promptable sentences for describing the query images. This procedure includes (i) explicit prompts stating which attributes a person has and (ii) implicit learnable prompts for adjusting/conditioning the criteria used for matching this person's identity. Explicit prompts are obtained by ensembling generation models, such as ChatGPT and VQA models. Moreover, an alignment module is designed to fuse the multi-prompts (i.e., explicit and implicit ones) progressively and mitigate the cross-modal gap. Extensive experiments on the existing attribute-involved ReID datasets, namely Market1501 and DukeMTMC-reID, demonstrate the effectiveness and rationality of the proposed MP-ReID solution.

TSGBench: Time Series Generation Benchmark

Sep 07, 2023

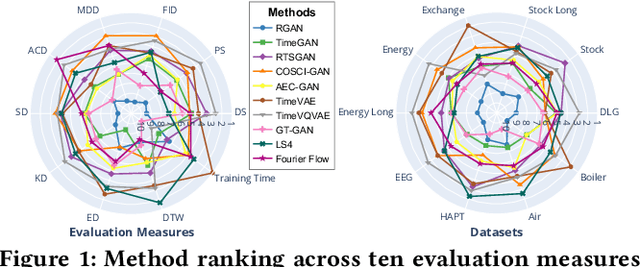

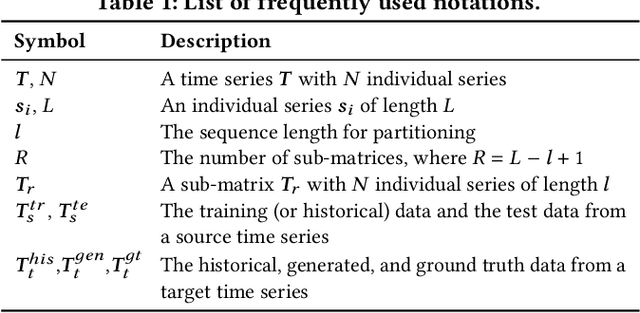

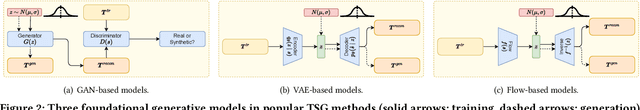

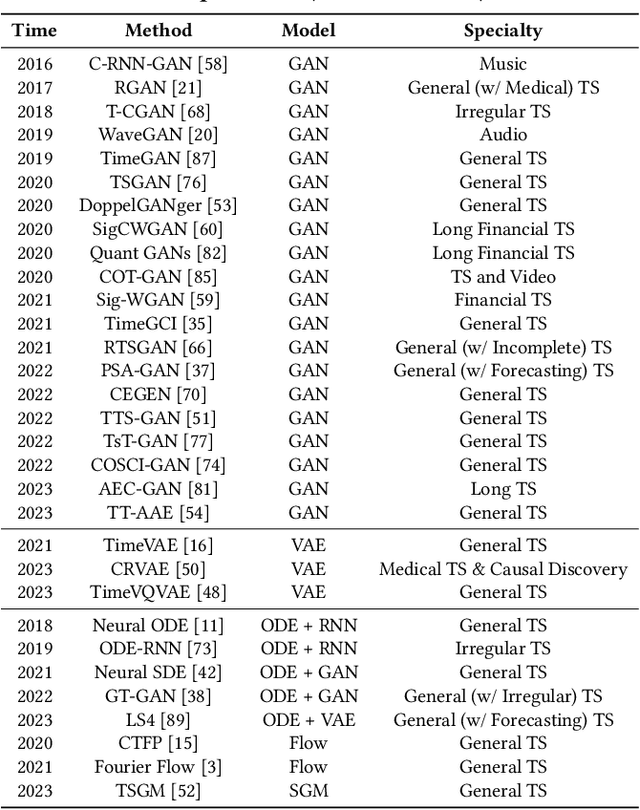

Abstract: Synthetic Time Series Generation (TSG) is crucial in a range of applications, including data augmentation, anomaly detection, and privacy preservation. Although significant strides have been made in this field, existing methods exhibit three key limitations: (1) They often benchmark against similar model types, constraining a holistic view of performance capabilities. (2) The use of specialized synthetic and private datasets introduces biases and hampers generalizability. (3) Ambiguous evaluation measures, often tied to custom networks or downstream tasks, hinder consistent and fair comparison. To overcome these limitations, we introduce TSGBench, the inaugural TSG Benchmark, designed for a unified and comprehensive assessment of TSG methods. It comprises three modules: (1) a curated collection of publicly available, real-world datasets tailored for TSG, together with a standardized preprocessing pipeline; (2) a comprehensive suite of evaluation measures including vanilla measures, new distance-based assessments, and visualization tools; (3) a pioneering generalization test rooted in Domain Adaptation (DA), compatible with all methods. We have conducted extensive experiments across ten real-world datasets from diverse domains, utilizing ten advanced TSG methods and twelve evaluation measures, all gauged through TSGBench. The results highlight its remarkable efficacy and consistency. More importantly, TSGBench delivers a statistical breakdown of method rankings, illuminating performance variations across different datasets and measures, and offering nuanced insights into the effectiveness of each method.
