Zhengkun Tian

MSR-86K: An Evolving, Multilingual Corpus with 86,300 Hours of Transcribed Audio for Speech Recognition Research

Jun 26, 2024

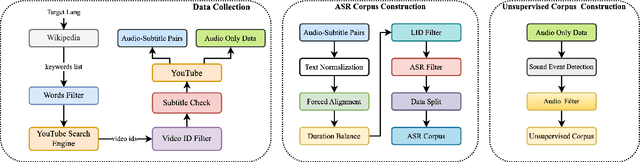

Abstract: Recently, multilingual artificial intelligence assistants, exemplified by ChatGPT, have gained immense popularity. As a crucial gateway to human-computer interaction, multilingual automatic speech recognition (ASR) has also garnered significant attention, as evidenced by systems like Whisper. However, the proprietary nature of the training data has impeded researchers' efforts to study multilingual ASR. This paper introduces MSR-86K, an evolving, large-scale multilingual corpus for speech recognition research. The corpus is derived from publicly accessible videos on YouTube, comprising 15 languages and a total of 86,300 hours of transcribed ASR data. We also describe how to use the MSR-86K corpus and other open-source corpora to train a robust multilingual ASR model that is competitive with Whisper. MSR-86K will be publicly released on HuggingFace, and we believe that such a large corpus will pave new avenues for research in multilingual ASR.

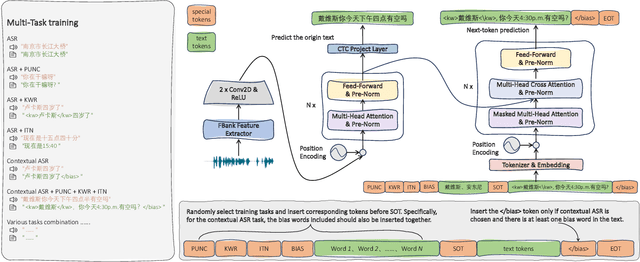

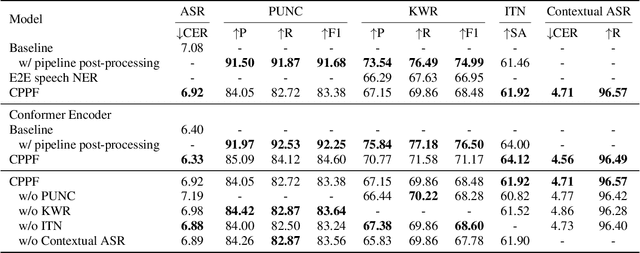

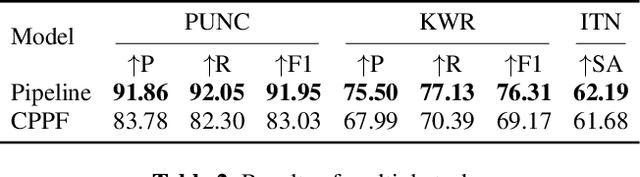

CPPF: A contextual and post-processing-free model for automatic speech recognition

Sep 21, 2023

Abstract: ASR systems have become increasingly widespread in recent years. However, their textual outputs often require post-processing before they can be used in practice. To address this issue, we draw inspiration from the multifaceted capabilities of LLMs and Whisper, and focus on integrating multiple text processing tasks related to speech recognition into the ASR model. This integration not only shortens the multi-stage pipeline but also prevents the propagation of cascading errors, so that post-processed text is generated directly. In this study, we focus on ASR-related processing tasks, including contextual ASR and multiple ASR post-processing tasks. To achieve this objective, we introduce the CPPF model, which offers a versatile and highly effective alternative to ASR processing. CPPF seamlessly integrates these tasks without any significant loss in recognition performance.

TST: Time-Sparse Transducer for Automatic Speech Recognition

Jul 17, 2023

Abstract: End-to-end models, especially the Recurrent Neural Network Transducer (RNN-T), have achieved great success in speech recognition. However, the transducer requires a large memory footprint and long computing time when processing a long decoding sequence. To solve this problem, we propose a model named time-sparse transducer, which introduces a time-sparse mechanism into the transducer. In this mechanism, we obtain intermediate representations by reducing the time resolution of the hidden states. A weighted average algorithm then combines these representations into sparse hidden states, which are passed to the decoder. All the experiments are conducted on the Mandarin dataset AISHELL-1. Compared with RNN-T, the time-sparse transducer achieves a character error rate close to that of RNN-T with a real-time factor that is 50.00% of the original. By adjusting the time resolution, the time-sparse transducer can also reduce the real-time factor to 16.54% of the original at the expense of a 4.94% loss of precision.
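The time-sparse idea described in this abstract can be illustrated with a minimal sketch. It assumes a fixed window size and fixed per-frame weights; the function name `time_sparse`, the windowing scheme, and the weights are hypothetical choices for illustration, since the abstract does not say how the paper derives the weights or the resolutions.

```python
from typing import List


def time_sparse(hidden: List[List[float]], window: int,
                weights: List[float]) -> List[List[float]]:
    """Reduce T frames to ceil(T / window) frames.

    Each output frame is a weighted average of the frames in one window.
    The fixed weights are an assumption made for this sketch.
    """
    if window <= 0 or len(weights) != window:
        raise ValueError("weights must have exactly `window` entries")
    sparse = []
    for start in range(0, len(hidden), window):
        chunk = hidden[start:start + window]
        w = weights[:len(chunk)]  # the last window may be shorter
        total = sum(w)
        dim = len(chunk[0])
        sparse.append([
            sum(wi * frame[d] for wi, frame in zip(w, chunk)) / total
            for d in range(dim)
        ])
    return sparse


# Five 2-dimensional hidden states reduced with a window of two frames.
frames = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0], [7.0, 6.0], [9.0, 8.0]]
sparse = time_sparse(frames, window=2, weights=[1.0, 1.0])
```

With a window of two, the decoder would see roughly half as many time steps, which is the kind of reduction that lowers memory use and computing time in a transducer.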

* 10 pages

SceneFake: An Initial Dataset and Benchmarks for Scene Fake Audio Detection

Nov 11, 2022

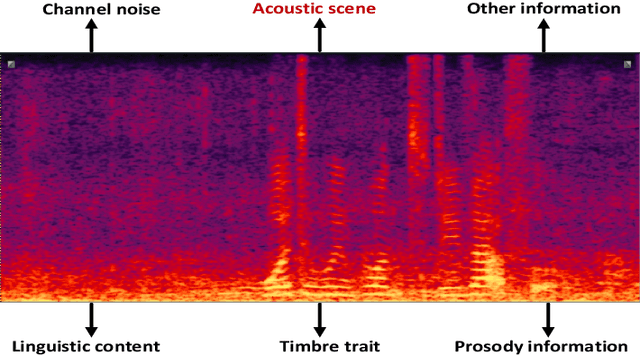

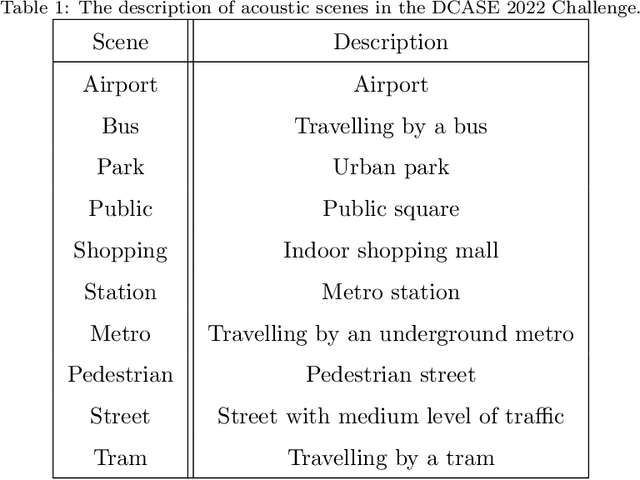

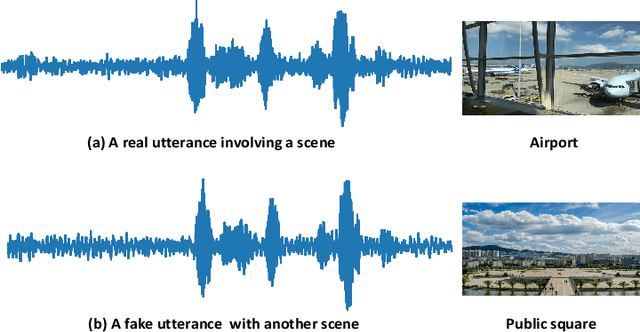

Abstract: Previous databases have been designed to further the development of fake audio detection. However, fake utterances are mostly generated by altering the timbre, prosody, linguistic content or channel noise of the original audio. They ignore the situation in which an attacker replaces the acoustic scene of the original audio with a forged one. Such manipulated audio will pose a major threat to our society if it is misused with malicious intent. This motivates us to fill the gap. This paper designs such a dataset for scene fake audio detection (SceneFake). A manipulated audio in the SceneFake dataset involves only tampering with the acoustic scene of an utterance by using speech enhancement technologies. We can not only detect fake utterances on a seen test set but also evaluate the generalization of fake detection models to unseen manipulation attacks. Some benchmark results are described on the SceneFake dataset. In addition, an analysis of fake attacks with different speech enhancement technologies and signal-to-noise ratios is presented on the dataset. The results show that scene-manipulated utterances cannot be detected reliably by the existing baseline models of ASVspoof 2019. Furthermore, the detection of unseen scene-manipulated audio is still challenging.

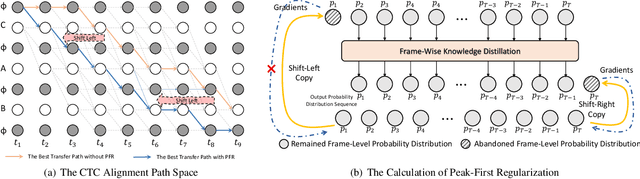

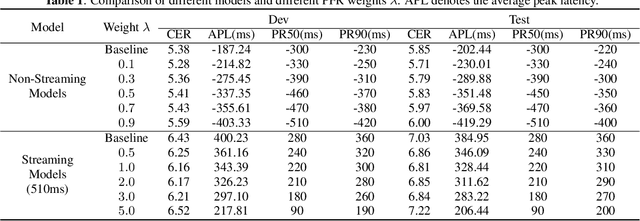

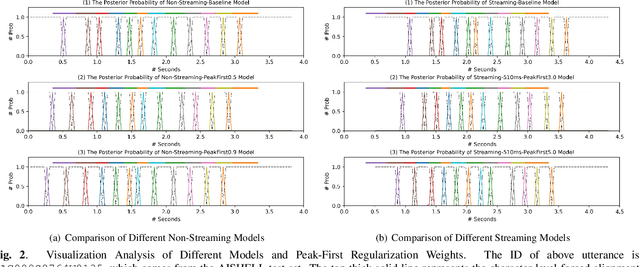

Peak-First CTC: Reducing the Peak Latency of CTC Models by Applying Peak-First Regularization

Nov 07, 2022

Abstract: The CTC model has been widely applied to many application scenarios because of its simple structure, excellent performance, and fast inference speed. There are many peaks in the probability distribution predicted by CTC models, and each peak represents a non-blank token. The recognition latency of CTC models can be reduced by encouraging the model to predict peaks earlier. Existing methods to reduce latency require modifying the transition relationship between tokens in the forward-backward algorithm, as well as the gradient calculation. Some of these methods even depend on the forced alignment results provided by other pretrained models. These methods are complex to implement. To reduce the peak latency, we propose a simple and novel method named peak-first regularization, which utilizes a frame-wise knowledge distillation function to force the probability distribution of the CTC model to shift left along the time axis instead of directly modifying the calculation process of the CTC loss and gradients. All the experiments are conducted on the Chinese Mandarin dataset AISHELL-1. We have verified the effectiveness of the proposed regularization on both streaming and non-streaming CTC models. The results show that the proposed method can reduce the average peak latency by about 100 to 200 milliseconds with almost no degradation of recognition accuracy.
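One plausible reading of the frame-wise distillation described in this abstract is that each frame is trained to match the distribution of the frame that follows it, which pulls peaks toward earlier frames. The sketch below assumes that reading; the function name `peak_first_regularizer` and the exact cross-entropy form are assumptions, not the paper's confirmed formulation. In real training the next-frame target would be held fixed (no gradient), and the term would be added to the CTC loss with a weighting factor.

```python
import math
from typing import List


def peak_first_regularizer(log_probs: List[List[float]]) -> float:
    """Sum over t of the cross-entropy between frame t+1 (target) and frame t.

    `log_probs[t][k]` is the log-probability of token k at frame t.
    Minimizing this value pushes frame t toward what frame t+1 predicts,
    i.e. it shifts the distribution left along the time axis.
    """
    loss = 0.0
    for t in range(len(log_probs) - 1):
        current, nxt = log_probs[t], log_probs[t + 1]
        loss -= sum(math.exp(lp_next) * lp_cur
                    for lp_cur, lp_next in zip(current, nxt))
    return loss


# Three frames with a uniform distribution over two tokens.
uniform = [[math.log(0.5), math.log(0.5)] for _ in range(3)]
uniform_loss = peak_first_regularizer(uniform)
```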

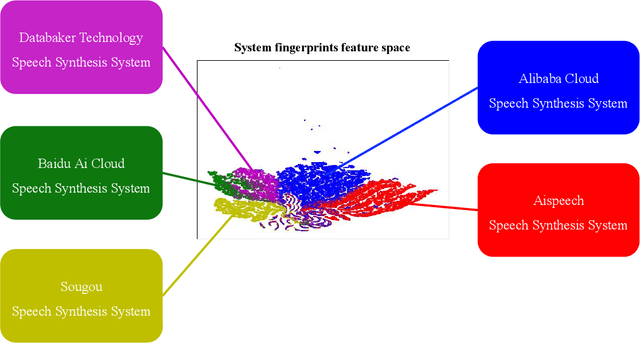

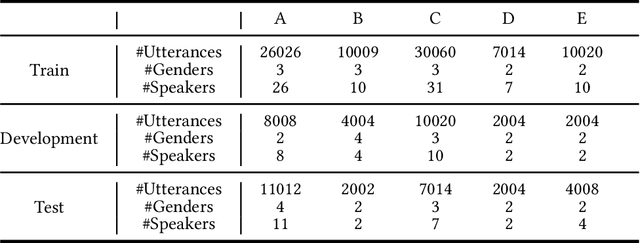

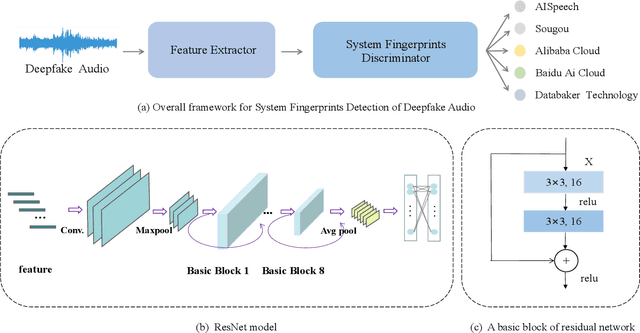

System Fingerprints Detection for DeepFake Audio: An Initial Dataset and Investigation

Aug 21, 2022

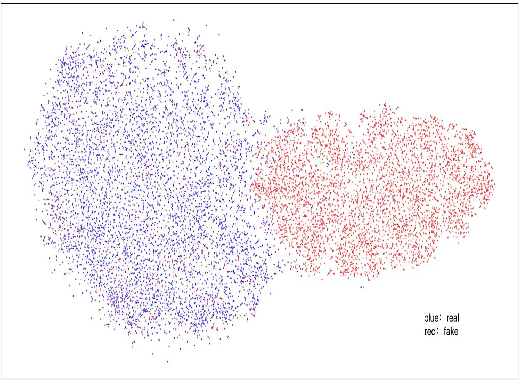

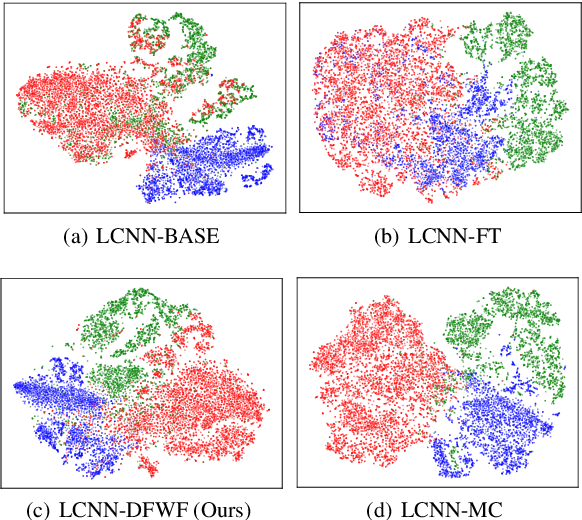

Abstract: Many effective attempts have been made at deepfake audio detection. However, they can only distinguish between real and fake. In many practical application scenarios, it is also necessary to know which tool or algorithm generated the deepfake audio. This raises a question: Can we detect the system fingerprints of deepfake audio? This paper therefore conducts a preliminary investigation into detecting system fingerprints of deepfake audio. Experiments are conducted on deepfake audio datasets from five recent deep-learning speech synthesis systems. The results show that LFCC features are relatively more suitable for system fingerprint detection. Moreover, ResNet achieves better detection results than the LCNN and x-vector based models. The t-SNE visualization shows that different speech synthesis systems generate distinct system fingerprints.

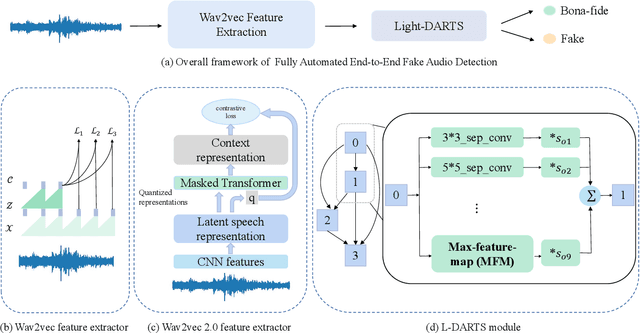

Fully Automated End-to-End Fake Audio Detection

Aug 20, 2022

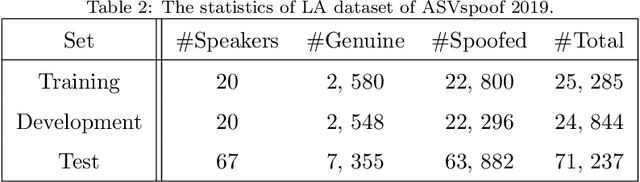

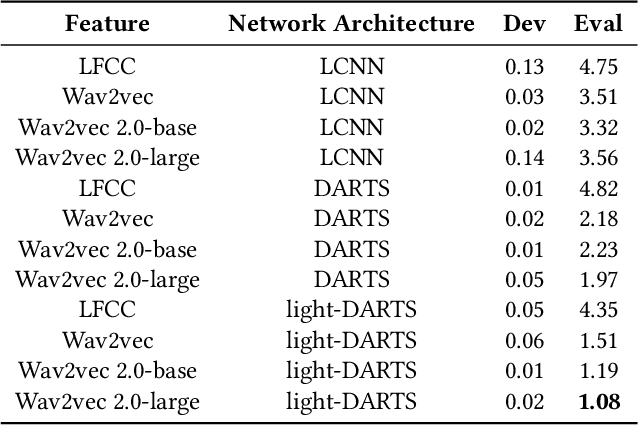

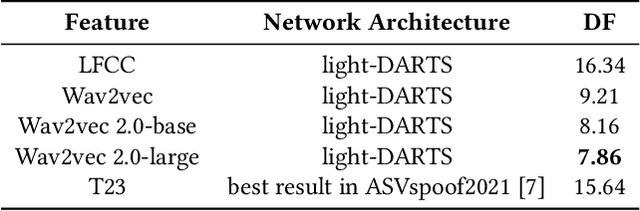

Abstract: Existing fake audio detection systems often rely on expert experience to design the acoustic features or to manually set the hyperparameters of the network structure. However, manual adjustment of the parameters can have a relatively obvious influence on the results, and it is almost impossible to manually find the best set of parameters. Therefore, this paper proposes a fully automated end-to-end fake audio detection method. We first use a wav2vec pre-trained model to obtain a high-level representation of the speech. Furthermore, for the network structure, we use a modified version of the differentiable architecture search (DARTS) named light-DARTS. It learns deep speech representations while automatically learning and optimizing complex neural structures consisting of convolutional operations and residual blocks. The experimental results on the ASVspoof 2019 LA dataset show that our proposed system achieves an equal error rate (EER) of 1.08%, which outperforms the state-of-the-art single system.
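For readers unfamiliar with the metric reported here, the equal error rate is the operating point at which the false acceptance rate equals the false rejection rate. The sketch below is a simple threshold sweep, assuming that higher scores mean "more likely bona fide"; the function name `equal_error_rate` and the toy scores are illustrative and are not taken from the paper or from the official ASVspoof scoring tools.

```python
from typing import List


def equal_error_rate(bonafide: List[float], spoof: List[float]) -> float:
    """Approximate the EER by sweeping every observed score as a threshold.

    A trial is accepted when its score is >= the threshold.
    """
    thresholds = sorted(set(bonafide) | set(spoof))
    thresholds.append(thresholds[-1] + 1.0)  # threshold that rejects everything
    best_gap, eer = float("inf"), 1.0
    for th in thresholds:
        frr = sum(s < th for s in bonafide) / len(bonafide)  # bona fide rejected
        far = sum(s >= th for s in spoof) / len(spoof)       # spoof accepted
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


# Toy scores: one bona fide trial scores low and one spoof trial scores high.
toy_eer = equal_error_rate([0.9, 0.6, 0.4, 0.8], [0.1, 0.5, 0.7, 0.2])
```

On these toy scores the sweep finds a threshold of 0.6, where one of four bona fide trials is rejected and one of four spoof trials is accepted, giving an EER of 25%.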

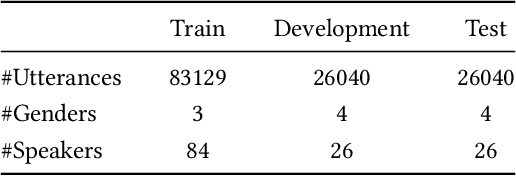

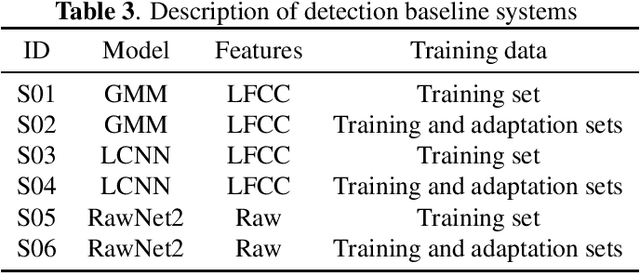

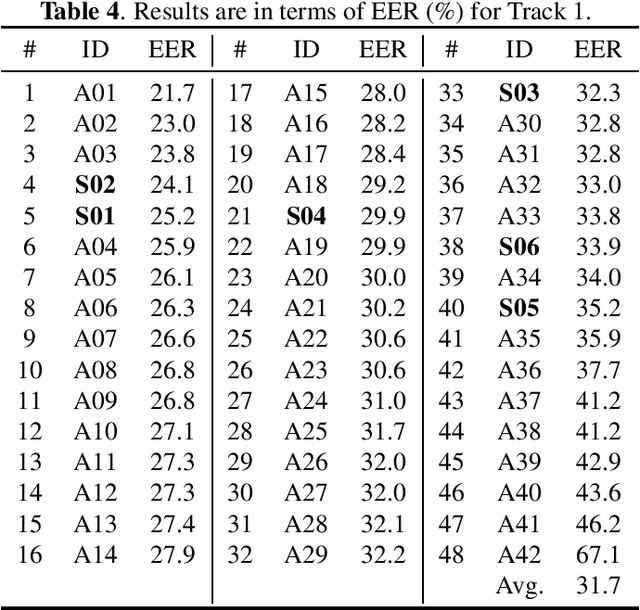

ADD 2022: the First Audio Deep Synthesis Detection Challenge

Feb 26, 2022

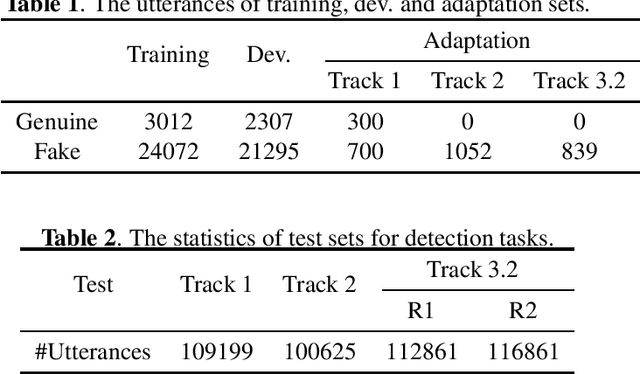

Abstract: Audio deepfake detection is an emerging topic, which was included in ASVspoof 2021. However, recent shared tasks have not covered many challenging real-life scenarios. The first Audio Deep synthesis Detection challenge (ADD) was motivated to fill this gap. ADD 2022 includes three tracks: low-quality fake audio detection (LF), partially fake audio detection (PF) and audio fake game (FG). The LF track focuses on dealing with bona fide and fully fake utterances with various real-world noises. The PF track aims to distinguish partially fake audio from real audio. The FG track is a rivalry game, which includes two tasks: an audio generation task and an audio fake detection task. In this paper, we describe the datasets, evaluation metrics, and protocols. We also report major findings that reflect the recent advances in audio deepfake detection tasks.

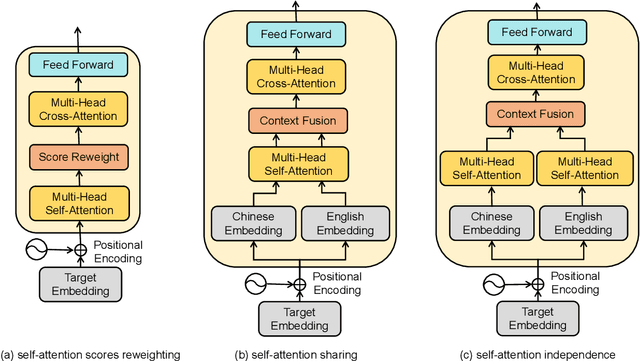

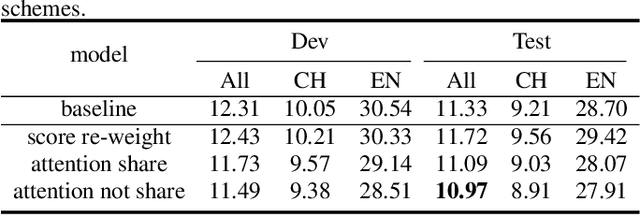

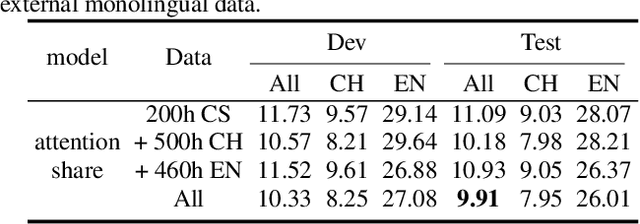

Reducing language context confusion for end-to-end code-switching automatic speech recognition

Jan 28, 2022

Abstract: Code-switching is the alternation between languages during communication. Training end-to-end (E2E) automatic speech recognition (ASR) systems for code-switching is known to be a challenging problem because of the lack of data, compounded by the increased language context confusion due to the presence of more than one language. In this paper, we propose a language-related attention mechanism to reduce multilingual context confusion for the E2E code-switching ASR model based on the Equivalence Constraint Theory (EC). The linguistic theory requires that any monolingual fragment that occurs in the code-switching sentence must occur in one of the monolingual sentences. It establishes a bridge between monolingual data and code-switching data. By calculating the respective attention of multiple languages, our method can efficiently transfer language knowledge from rich monolingual data. We evaluate our method on the ASRU 2019 Mandarin-English code-switching challenge dataset. Compared with the baseline model, the proposed method achieves an 11.37% relative mix error rate reduction.

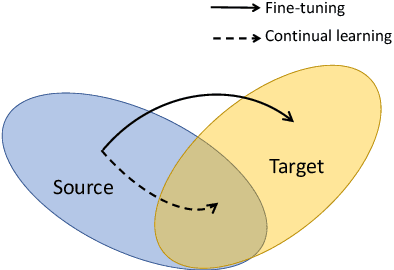

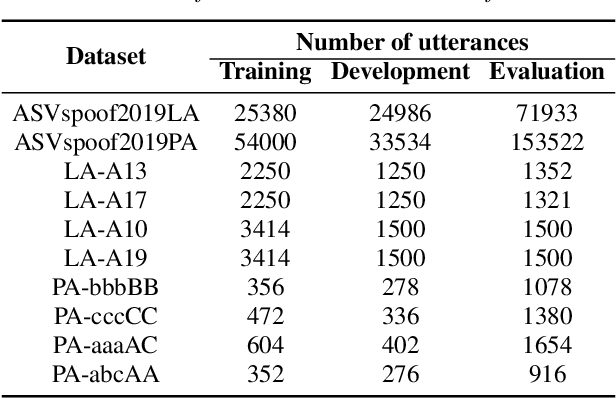

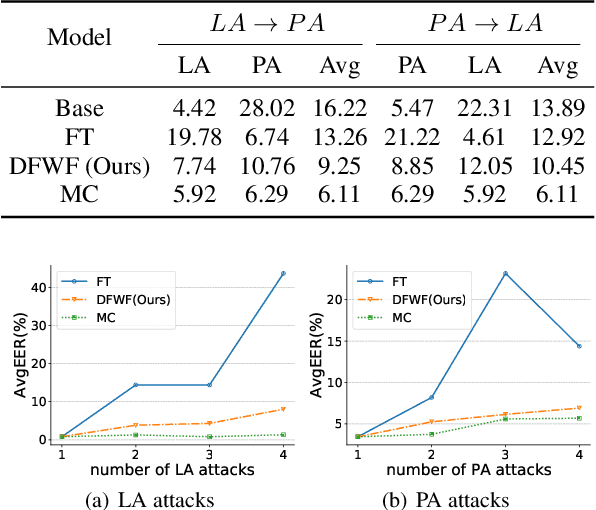

Continual Learning for Fake Audio Detection

Apr 15, 2021

Abstract: Fake audio attacks have become a major threat to speaker verification systems. Although current detection approaches have achieved promising results in dataset-specific scenarios, they encounter difficulties on unseen spoofing data. Fine-tuning and retraining from scratch have been applied to incorporate new data. However, fine-tuning leads to performance degradation on previous data, and retraining takes a lot of time and computation resources. Besides, previous data are unavailable in some situations due to privacy. To solve these problems, this paper proposes detecting fake without forgetting, a continual-learning-based method, to make the model learn new spoofing attacks incrementally. A knowledge distillation loss is introduced into the loss function to preserve the memory of the original model. Assuming that the distribution of genuine voice is consistent across different scenarios, an extra embedding similarity loss is used as another constraint to further align positive samples. Experiments are conducted on the ASVspoof2019 dataset. The results show that our proposed method outperforms fine-tuning, with a relative reduction in average equal error rate of up to 81.62%.
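The two constraints named in this abstract can be sketched as follows. The abstract does not give their exact form, so this sketch assumes two common choices: a temperature-softened KL divergence for the knowledge distillation loss and a cosine distance for the embedding similarity loss. The function names and the temperature value are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(old_logits: List[float], new_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(old || new) on softened outputs; zero when the new model agrees."""
    p = softmax(old_logits, temperature)
    q = softmax(new_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def embedding_similarity_loss(old_emb: List[float],
                              new_emb: List[float]) -> float:
    """Cosine distance between old-model and new-model embeddings.

    Following the abstract, this would be applied to genuine samples only.
    """
    dot = sum(a * b for a, b in zip(old_emb, new_emb))
    norm = (math.sqrt(sum(a * a for a in old_emb))
            * math.sqrt(sum(b * b for b in new_emb)))
    return 1.0 - dot / norm


# Identical outputs give no penalty; swapped logits are penalized.
same = distillation_loss([1.0, 2.0], [1.0, 2.0])
swapped = distillation_loss([1.0, 2.0], [2.0, 1.0])
```

In a continual-learning setup of this kind, both terms would be added to the usual classification loss on the new spoofing data, so the updated model stays close to the original one while it learns the new attacks.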
