Zeyu Ma

OSNIP: Breaking the Privacy-Utility-Efficiency Trilemma in LLM Inference via Obfuscated Semantic Null Space

Jan 30, 2026

Abstract:We propose Obfuscated Semantic Null space Injection for Privacy (OSNIP), a lightweight client-side encryption framework for privacy-preserving LLM inference. Generalizing the geometric intuition of linear kernels to the high-dimensional latent space of LLMs, we formally define the "Obfuscated Semantic Null Space", a high-dimensional regime that preserves semantic fidelity while enforcing near-orthogonality to the original embedding. By injecting perturbations that project the original embedding into this space, OSNIP ensures privacy without any post-processing. Furthermore, OSNIP employs a key-dependent stochastic mapping that synthesizes individualized perturbation trajectories unique to each user. Evaluations on 12 generative and classification benchmarks show that OSNIP achieves state-of-the-art performance, sharply reducing attack success rates while maintaining strong model utility under strict security constraints.

ORCA: Object Recognition and Comprehension for Archiving Marine Species

Dec 24, 2025

Abstract:Marine visual understanding is essential for monitoring and protecting marine ecosystems, enabling automatic and scalable biological surveys. However, progress is hindered by limited training data and the lack of a systematic task formulation that aligns domain-specific marine challenges with well-defined computer vision tasks, thereby limiting effective model application. To address this gap, we present ORCA, a multi-modal benchmark for marine research comprising 14,647 images from 478 species, with 42,217 bounding box annotations and 22,321 expert-verified instance captions. The dataset provides fine-grained visual and textual annotations that capture morphology-oriented attributes across diverse marine species. To catalyze methodological advances, we evaluate 18 state-of-the-art models on three tasks: object detection (closed-set and open-vocabulary), instance captioning, and visual grounding. Results highlight key challenges, including species diversity, morphological overlap, and specialized domain demands, underscoring the difficulty of marine understanding. ORCA thus establishes a comprehensive benchmark to advance research in the marine domain. Project Page: http://orca.hkustvgd.com/.

Evaluating Robustness of Monocular Depth Estimation with Procedural Scene Perturbations

Jul 01, 2025

Abstract:Recent years have witnessed substantial progress on monocular depth estimation, particularly as measured by the success of large models on standard benchmarks. However, performance on standard benchmarks does not offer a complete assessment, because most evaluate accuracy but not robustness. In this work, we introduce PDE (Procedural Depth Evaluation), a new benchmark that enables systematic robustness evaluation. PDE uses procedural generation to create 3D scenes that test robustness to various controlled perturbations, including object, camera, material and lighting changes. Our analysis yields interesting findings on what perturbations are challenging for state-of-the-art depth models, which we hope will inform further research. Code and data are available at https://github.com/princeton-vl/proc-depth-eval.

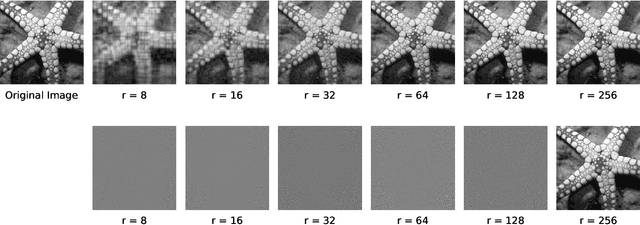

OMNI-DC: Highly Robust Depth Completion with Multiresolution Depth Integration

Nov 28, 2024

Abstract:Depth completion (DC) aims to predict a dense depth map from an RGB image and sparse depth observations. Existing methods for DC generalize poorly on new datasets or unseen sparse depth patterns, limiting their practical applications. We propose OMNI-DC, a highly robust DC model that generalizes well across various scenarios. Our method incorporates a novel multi-resolution depth integration layer and a probability-based loss, enabling it to deal with sparse depth maps of varying densities. Moreover, we train OMNI-DC on a mixture of synthetic datasets with a scale normalization technique. To evaluate our model, we establish a new evaluation protocol named Robust-DC for zero-shot testing under various sparse depth patterns. Experimental results on Robust-DC and conventional benchmarks show that OMNI-DC significantly outperforms the previous state of the art. The checkpoints, training code, and evaluations are available at https://github.com/princeton-vl/OMNI-DC.

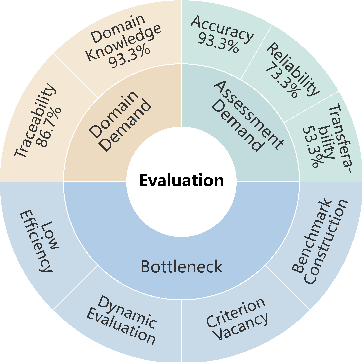

Revisiting Benchmark and Assessment: An Agent-based Exploratory Dynamic Evaluation Framework for LLMs

Oct 15, 2024

Abstract:While various vertical domain large language models (LLMs) have been developed, the challenge of automatically evaluating their performance across different domains remains significant in addressing real-world user needs. Current benchmark-based evaluation methods exhibit rigid, purposeless interactions and rely on pre-collected static datasets that are costly to build, inflexible across domains, and misaligned with practical user needs. To address this, we revisit the evaluation components and introduce two definitions: **Benchmark+**, which extends traditional QA benchmarks into a more flexible "strategy-criterion" format; and **Assessment+**, which enhances the interaction process for greater exploration and enables both quantitative metrics and qualitative insights that capture nuanced target LLM behaviors from richer multi-turn interactions. We propose an agent-based evaluation framework called *TestAgent*, which implements these two concepts through retrieval-augmented generation and reinforcement learning. Experiments on tasks ranging from building vertical domain evaluation from scratch to activating existing benchmarks demonstrate the effectiveness of *TestAgent* across various scenarios. We believe this work offers an interesting perspective on automatic evaluation for LLMs.

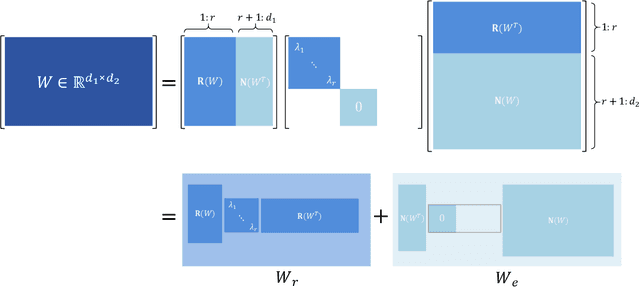

SVFit: Parameter-Efficient Fine-Tuning of Large Pre-Trained Models Using Singular Values

Sep 09, 2024

Abstract:Large pre-trained models (LPMs) have demonstrated exceptional performance in diverse natural language processing and computer vision tasks. However, fully fine-tuning these models poses substantial memory challenges, particularly in resource-constrained environments. Parameter-efficient fine-tuning (PEFT) methods, such as LoRA, mitigate this issue by adjusting only a small subset of parameters. Nevertheless, these methods typically employ random initialization for low-rank matrices, which can lead to inefficiencies in gradient descent and diminished generalizability due to suboptimal starting points. To address these limitations, we propose SVFit, a novel PEFT approach that leverages singular value decomposition (SVD) to initialize low-rank matrices using critical singular values as trainable parameters. Specifically, SVFit performs SVD on the pre-trained weight matrix to obtain the best rank-r approximation matrix, emphasizing the most critical singular values that capture over 99% of the matrix's information. These top-r singular values are then used as trainable parameters to scale the fundamental subspaces of the matrix, facilitating rapid domain adaptation. Extensive experiments across various pre-trained models in natural language understanding, text-to-image generation, and image classification tasks reveal that SVFit outperforms LoRA while requiring 16 times fewer trainable parameters.
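The decomposition described in the abstract above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names (`svfit_decompose`, `svfit_reconstruct`, `retained_energy`), the synthetic weight matrix, and the use of squared singular values as the measure of "information" are all assumptions made for illustration. The sketch keeps the top-r singular vectors fixed and exposes only the r singular values as the quantities one would train.

```python
import numpy as np


def svfit_decompose(weight, rank):
    """Return fixed singular vectors and the top-`rank` singular values (hypothetical helper)."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    # Only the returned singular values would be trained; u_r and vt_r stay frozen.
    return u[:, :rank], s[:rank].copy(), vt[:rank, :]


def svfit_reconstruct(u_r, scale, vt_r):
    """Rebuild the rank-r matrix; equivalent to u_r @ diag(scale) @ vt_r."""
    return (u_r * scale) @ vt_r


def retained_energy(weight, rank):
    """Fraction of squared singular values kept by the top-`rank` components (assumed metric)."""
    s = np.linalg.svd(weight, compute_uv=False)
    return float(np.sum(s[:rank] ** 2) / np.sum(s ** 2))


# Synthetic, nearly rank-8 stand-in for a pre-trained weight matrix (assumption).
rng = np.random.default_rng(0)
weight = rng.standard_normal((64, 8)) @ rng.standard_normal((8, 32))
weight += 0.01 * rng.standard_normal((64, 32))

u_r, scale, vt_r = svfit_decompose(weight, rank=8)
approx = svfit_reconstruct(u_r, scale, vt_r)

relative_error = np.linalg.norm(weight - approx) / np.linalg.norm(weight)
num_trainable = scale.size  # one trainable value per retained singular value
```

By the Eckart-Young theorem, the truncated SVD used here is the best rank-r approximation in the Frobenius norm, which is why the reconstruction stays close to the original matrix when the discarded singular values are small. Scaling the columns of `u_r` by `scale` avoids materializing the diagonal matrix.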

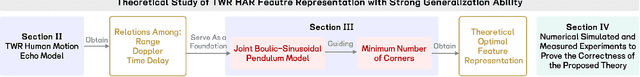

Through-the-Wall Radar Human Activity Micro-Doppler Signature Representation Method Based on Joint Boulic-Sinusoidal Pendulum Model

Aug 22, 2024

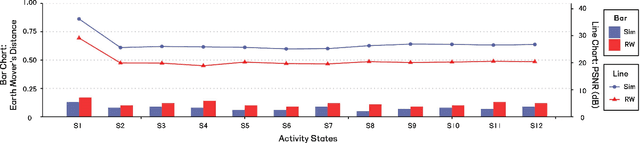

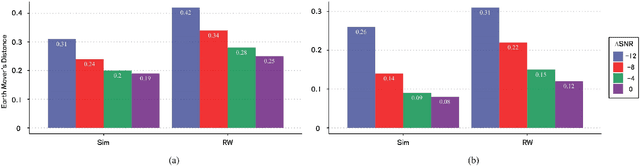

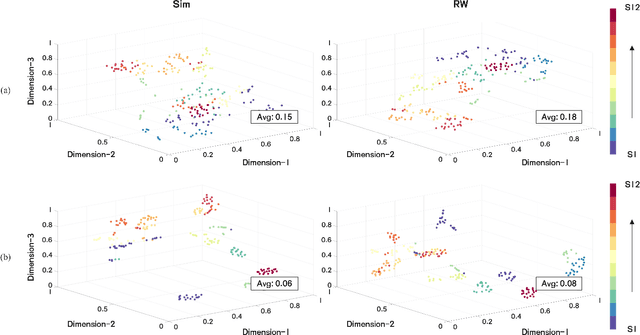

Abstract:With the help of micro-Doppler signatures, ultra-wideband (UWB) through-the-wall radar (TWR) enables the reconstruction of range and velocity information of limb nodes to accurately identify indoor human activities. However, existing methods are usually trained and validated directly on range-time maps (RTM) and Doppler-time maps (DTM), which have high feature redundancy and poor generalization ability. To solve this problem, this paper proposes a human activity micro-Doppler signature representation method based on a joint Boulic-sinusoidal pendulum motion model. Specifically, it presents a simplified joint Boulic-sinusoidal pendulum human motion model, adapted from the Boulic-Thalmann kinematic model, that takes the head, torso, both hands and feet into consideration. The paper also calculates the minimum number of key points needed to describe the Doppler and micro-Doppler information sufficiently. Both numerical simulations and experiments are conducted to verify the method's effectiveness. The results demonstrate that the proposed number of micro-Doppler signature key points can precisely represent the motion characteristics of indoor human limb nodes and substantially improve the generalization capability of existing methods across different testers.

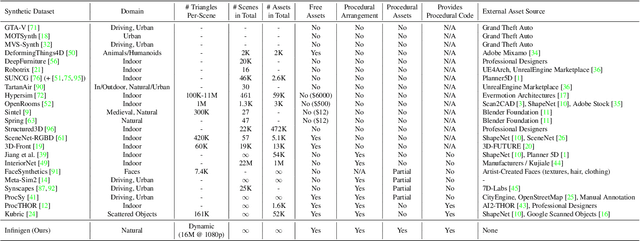

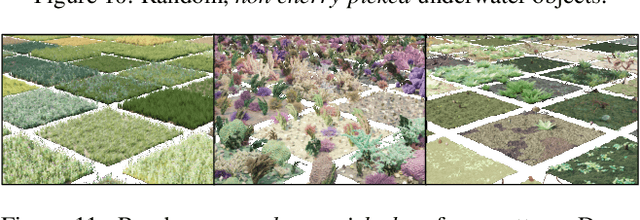

Infinigen Indoors: Photorealistic Indoor Scenes using Procedural Generation

Jun 17, 2024

Abstract:We introduce Infinigen Indoors, a Blender-based procedural generator of photorealistic indoor scenes. It builds upon the existing Infinigen system, which focuses on natural scenes, but expands its coverage to indoor scenes by introducing a diverse library of procedural indoor assets, including furniture, architecture elements, appliances, and other day-to-day objects. It also introduces a constraint-based arrangement system, which consists of a domain-specific language for expressing diverse constraints on scene composition, and a solver that generates scene compositions that maximally satisfy the constraints. We provide an export tool that allows the generated 3D objects and scenes to be directly used for training embodied agents in real-time simulators such as Omniverse and Unreal. Infinigen Indoors is open-sourced under the BSD license. Please visit https://infinigen.org for code and videos.

View-Dependent Octree-based Mesh Extraction in Unbounded Scenes for Procedural Synthetic Data

Dec 13, 2023

Abstract:Procedural synthetic data generation has received increasing attention in computer vision. Procedural signed distance functions (SDFs) are a powerful tool for modeling large-scale detailed scenes, but existing mesh extraction methods have artifacts or performance profiles that limit their use for synthetic data. We propose OcMesher, a mesh extraction algorithm that efficiently handles high-detail unbounded scenes with perfect view-consistency, with easy export to downstream real-time engines. The main novelty of our solution is an algorithm to construct an octree based on a given SDF and multiple camera views. We perform extensive experiments and show that our solution produces better synthetic data for training and evaluation of computer vision models.

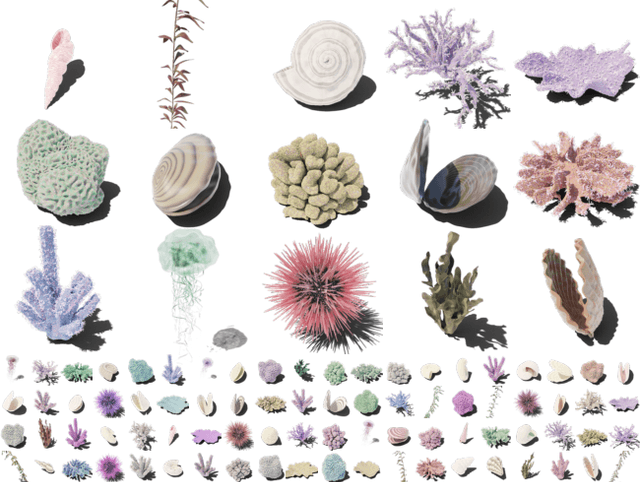

Infinite Photorealistic Worlds using Procedural Generation

Jun 26, 2023

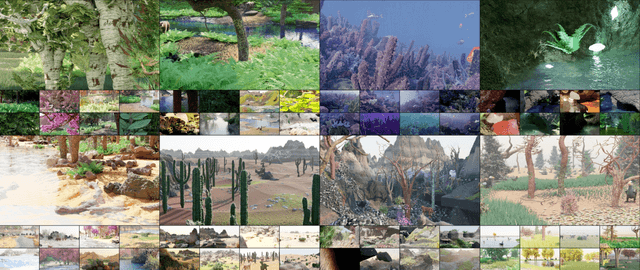

Abstract:We introduce Infinigen, a procedural generator of photorealistic 3D scenes of the natural world. Infinigen is entirely procedural: every asset, from shape to texture, is generated from scratch via randomized mathematical rules, using no external source and allowing infinite variation and composition. Infinigen offers broad coverage of objects and scenes in the natural world including plants, animals, terrains, and natural phenomena such as fire, cloud, rain, and snow. Infinigen can be used to generate unlimited, diverse training data for a wide range of computer vision tasks including object detection, semantic segmentation, optical flow, and 3D reconstruction. We expect Infinigen to be a useful resource for computer vision research and beyond. Please visit https://infinigen.org for videos, code and pre-generated data.
