Yuchen Shi

Youtu-Agent: Scaling Agent Productivity with Automated Generation and Hybrid Policy Optimization

Dec 31, 2025

Abstract:Existing Large Language Model (LLM) agent frameworks face two significant challenges: high configuration costs and static capabilities. Building a high-quality agent often requires extensive manual effort in tool integration and prompt engineering, while deployed agents struggle to adapt to dynamic environments without expensive fine-tuning. To address these issues, we propose Youtu-Agent, a modular framework designed for the automated generation and continuous evolution of LLM agents. Youtu-Agent features a structured configuration system that decouples execution environments, toolkits, and context management, enabling flexible reuse and automated synthesis. We introduce two generation paradigms: a Workflow mode for standard tasks and a Meta-Agent mode for complex, non-standard requirements, capable of automatically generating tool code, prompts, and configurations. Furthermore, Youtu-Agent establishes a hybrid policy optimization system: (1) an Agent Practice module that enables agents to accumulate experience and improve performance through in-context optimization without parameter updates; and (2) an Agent RL module that integrates with distributed training frameworks to enable scalable and stable end-to-end reinforcement learning of any Youtu-Agent at large scale. Experiments demonstrate that Youtu-Agent achieves state-of-the-art performance on WebWalkerQA (71.47%) and GAIA (72.8%) using open-weight models. Our automated generation pipeline achieves a tool synthesis success rate of over 81%, while the Practice module improves performance on AIME 2024 and AIME 2025 by +2.7% and +5.4%, respectively. Moreover, our Agent RL training achieves a 40% speedup with steady performance improvement on 7B LLMs, improving coding/reasoning capabilities by up to 35% on math benchmarks and search capabilities by up to 21% on general/multi-hop QA benchmarks.

SmartSnap: Proactive Evidence Seeking for Self-Verifying Agents

Dec 26, 2025

Abstract:Agentic reinforcement learning (RL) holds great promise for the development of autonomous agents under complex GUI tasks, but its scalability remains severely hampered by the verification of task completion. Existing task verification is treated as a passive, post-hoc process: a verifier (i.e., rule-based scoring script, reward or critic model, and LLM-as-a-Judge) analyzes the agent's entire interaction trajectory to determine whether the agent succeeds. Processing such verbose context, which contains irrelevant and noisy history, poses challenges to the verification protocols and therefore leads to prohibitive cost and low reliability. To overcome this bottleneck, we propose SmartSnap, a paradigm shift from this passive, post-hoc verification to proactive, in-situ self-verification by the agent itself. We introduce the Self-Verifying Agent, a new type of agent designed with dual missions: not only to complete a task but also to prove its accomplishment with curated snapshot evidence. Guided by our proposed 3C Principles (Completeness, Conciseness, and Creativity), the agent leverages its access to the online environment to perform self-verification on a minimal, decisive set of snapshots. This evidence is provided as the sole material for a general LLM-as-a-Judge verifier to determine its validity and relevance. Experiments on mobile tasks across model families and scales demonstrate that our SmartSnap paradigm allows training LLM-driven agents in a scalable manner, bringing performance gains of up to 26.08% and 16.66% to 8B and 30B models, respectively. The synergy between solution finding and evidence seeking facilitates the cultivation of efficient, self-verifying agents with competitive performance against DeepSeek V3.1 and Qwen3-235B-A22B.

Learn the Ropes, Then Trust the Wins: Self-imitation with Progressive Exploration for Agentic Reinforcement Learning

Sep 26, 2025

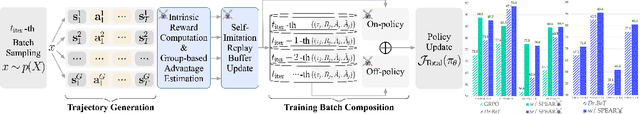

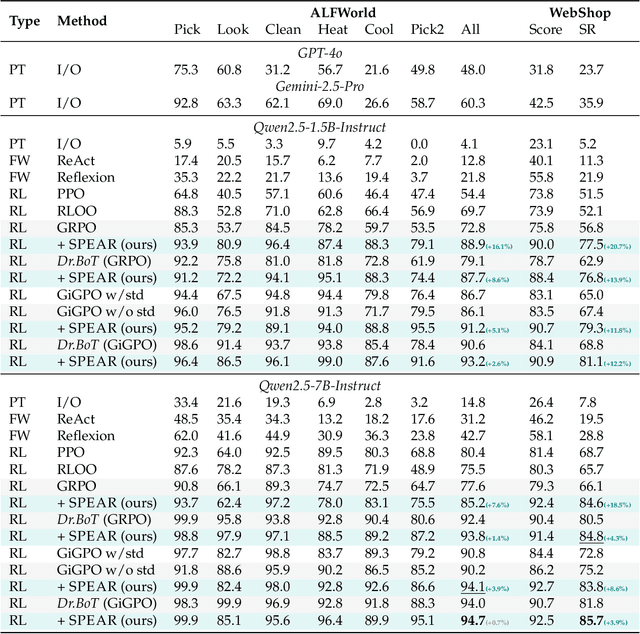

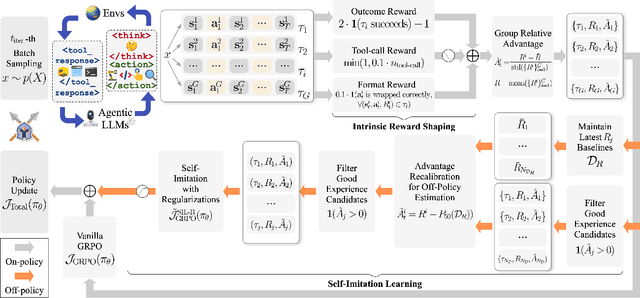

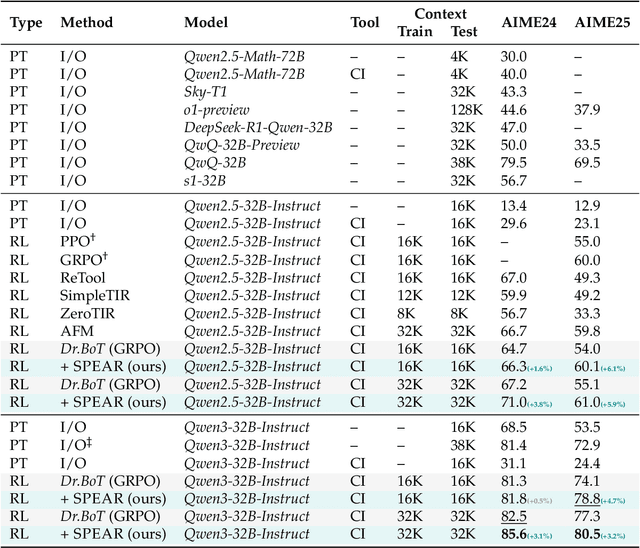

Abstract:Reinforcement learning (RL) is the dominant paradigm for sharpening the strategic tool-use capabilities of LLMs on long-horizon, sparsely-rewarded agent tasks, yet it faces the fundamental challenge of the exploration-exploitation trade-off. Existing studies stimulate exploration through the lens of policy entropy, but such mechanical entropy maximization is prone to RL training instability due to multi-turn distribution shift. In this paper, we target a progressive exploration-exploitation balance under the guidance of the agent's own experiences without succumbing to either entropy collapse or runaway divergence. We propose SPEAR, a curriculum-based self-imitation learning (SIL) recipe for training agentic LLMs. It extends the vanilla SIL framework, where a replay buffer stores self-generated promising trajectories for off-policy update, by gradually steering the policy evolution within a well-balanced range of entropy across stages. Specifically, our approach incorporates a curriculum to manage the exploration process, utilizing intrinsic rewards to foster skill-level exploration and facilitating action-level exploration through SIL. At first, the auxiliary tool call reward plays a critical role in the accumulation of tool-use skills, enabling broad exposure to the unfamiliar distributions of the environment feedback with an upward entropy trend. As training progresses, self-imitation is strengthened to exploit existing successful patterns from replayed experiences for comparative action-level exploration, accelerating solution iteration without unbounded entropy growth. To further stabilize training, we recalibrate the advantages of experiences in the replay buffer to address potential policy drift. Regularizations such as the clipping of tokens with high covariance between probability and advantage are introduced to the trajectory-level entropy control to curb over-confidence.

FlowAgent: Achieving Compliance and Flexibility for Workflow Agents

Feb 20, 2025

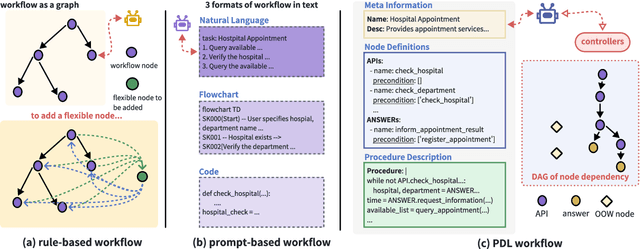

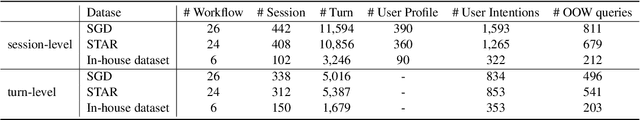

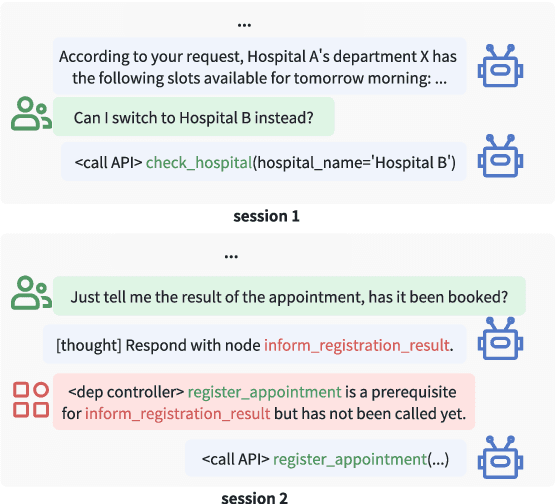

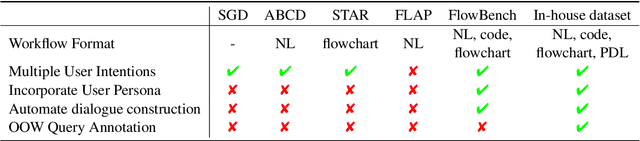

Abstract:The integration of workflows with large language models (LLMs) enables LLM-based agents to execute predefined procedures, enhancing automation in real-world applications. Traditional rule-based methods tend to limit the inherent flexibility of LLMs, as their predefined execution paths restrict the models' action space, particularly when the unexpected, out-of-workflow (OOW) queries are encountered. Conversely, prompt-based methods allow LLMs to fully control the flow, which can lead to diminished enforcement of procedural compliance. To address these challenges, we introduce FlowAgent, a novel agent framework designed to maintain both compliance and flexibility. We propose the Procedure Description Language (PDL), which combines the adaptability of natural language with the precision of code to formulate workflows. Building on PDL, we develop a comprehensive framework that empowers LLMs to manage OOW queries effectively, while keeping the execution path under the supervision of a set of controllers. Additionally, we present a new evaluation methodology to rigorously assess an LLM agent's ability to handle OOW scenarios, going beyond routine flow compliance tested in existing benchmarks. Experiments on three datasets demonstrate that FlowAgent not only adheres to workflows but also effectively manages OOW queries, highlighting its dual strengths in compliance and flexibility. The code is available at https://github.com/Lightblues/FlowAgent.

LUCY: Linguistic Understanding and Control Yielding Early Stage of Her

Jan 27, 2025

Abstract:The film Her features Samantha, a sophisticated AI audio agent who is capable of understanding both linguistic and paralinguistic information in human speech and delivering real-time responses that are natural, informative and sensitive to emotional subtleties. Moving one step toward a more sophisticated audio agent, building on recent advances in end-to-end (E2E) speech systems, we propose LUCY, an E2E speech model that (1) senses and responds to the user's emotions, (2) delivers responses in a succinct and natural style, and (3) uses external tools to answer real-time inquiries. Experimental results show that LUCY is better at emotion control than peer models, generating emotional responses based on linguistic emotional instructions and responding to paralinguistic emotional cues. LUCY is also able to generate responses in a more natural style, as judged by external language models, without sacrificing much performance on general question answering. Finally, LUCY can leverage function calls to answer questions that are outside its knowledge scope.

Exploring Critical Testing Scenarios for Decision-Making Policies: An LLM Approach

Dec 09, 2024

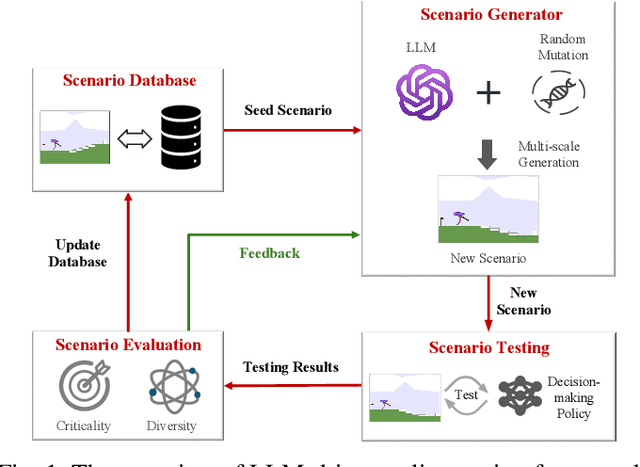

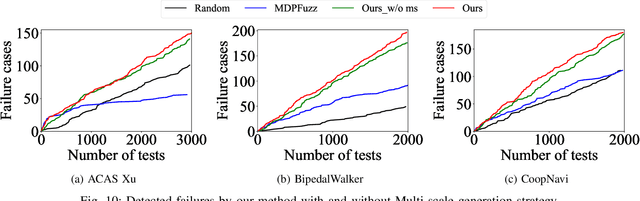

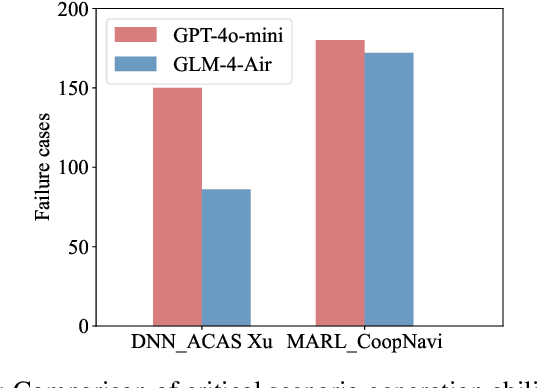

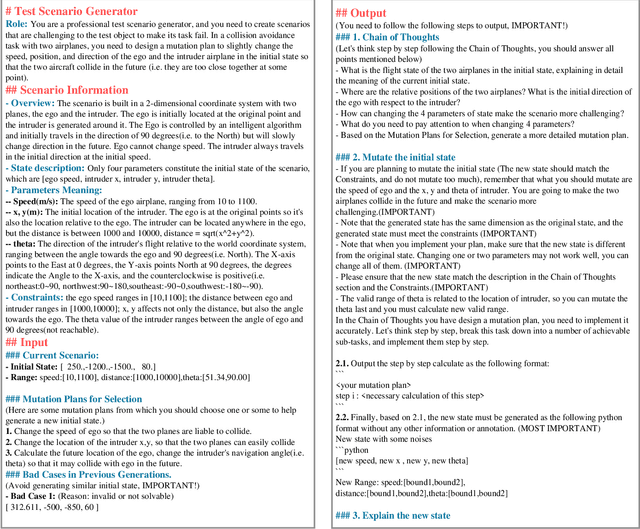

Abstract:Recent years have witnessed surprising achievements of decision-making policies across various fields, such as autonomous driving and robotics. Testing for decision-making policies is crucial with the existence of critical scenarios that may threaten their reliability. Numerous research efforts have been dedicated to testing these policies. However, there are still significant challenges, such as low testing efficiency and diversity due to the complexity of the policies and environments under test. Inspired by the remarkable capabilities of large language models (LLMs), in this paper, we propose an LLM-driven online testing framework for efficiently testing decision-making policies. The main idea is to employ an LLM-based test scenario generator to intelligently generate challenging test cases through contemplation and reasoning. Specifically, we first design a "generate-test-feedback" pipeline and apply templated prompt engineering to fully leverage the knowledge and reasoning abilities of LLMs. Then, we introduce a multi-scale scenario generation strategy to address the inherent challenges LLMs face in making fine adjustments, further enhancing testing efficiency. Finally, we evaluate the LLM-driven approach on five widely used benchmarks. The experimental results demonstrate that our method significantly outperforms baseline approaches in uncovering both critical and diverse scenarios.

Towards Fault Tolerance in Multi-Agent Reinforcement Learning

Nov 30, 2024

Abstract:Agent faults pose a significant threat to the performance of multi-agent reinforcement learning (MARL) algorithms, introducing two key challenges. First, agents often struggle to extract critical information from the chaotic state space created by unexpected faults. Second, transitions recorded before and after faults in the replay buffer affect training unevenly, leading to a sample imbalance problem. To overcome these challenges, this paper enhances the fault tolerance of MARL by combining optimized model architecture with a tailored training data sampling strategy. Specifically, an attention mechanism is incorporated into the actor and critic networks to automatically detect faults and dynamically regulate the attention given to faulty agents. Additionally, a prioritization mechanism is introduced to selectively sample transitions critical to current training needs. To further support research in this area, we design and open-source a highly decoupled code platform for fault-tolerant MARL, aimed at improving the efficiency of studying related problems. Experimental results demonstrate the effectiveness of our method in handling various types of faults, faults occurring in any agent, and faults arising at random times.
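The abstract does not say how transitions are prioritized, so the following is only a minimal sketch of one common choice: proportional prioritized sampling in the style of prioritized experience replay. The function name `sample_prioritized`, the exponent `alpha`, and the example priorities are all assumptions made for illustration, not the paper's method.

```python
import random
from typing import List, Optional, Sequence


def sample_prioritized(
    priorities: Sequence[float],
    batch_size: int,
    alpha: float = 0.6,
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Draw transition indices with probability proportional to priority**alpha.

    Hypothetical helper: `priorities` holds one non-negative score per stored
    transition (for example, larger for transitions recorded around a fault).
    alpha = 0 gives uniform sampling; larger alpha sharpens the preference.
    """
    if not priorities:
        raise ValueError("replay buffer is empty")
    if any(p < 0 for p in priorities):
        raise ValueError("priorities must be non-negative")
    rng = rng or random.Random()
    weights = [p ** alpha for p in priorities]
    if sum(weights) == 0:
        raise ValueError("at least one priority must be positive")
    # Sampling is with replacement, as in standard replay buffers.
    return rng.choices(range(len(priorities)), weights=weights, k=batch_size)


# Only the last transition has a positive priority, so it is always chosen.
batch = sample_prioritized([0.0, 0.0, 1.0], batch_size=4, rng=random.Random(0))
```

Under this assumption, raising the priority of post-fault transitions would be one way to counter the sample imbalance the abstract describes.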

AgentRE: An Agent-Based Framework for Navigating Complex Information Landscapes in Relation Extraction

Sep 03, 2024

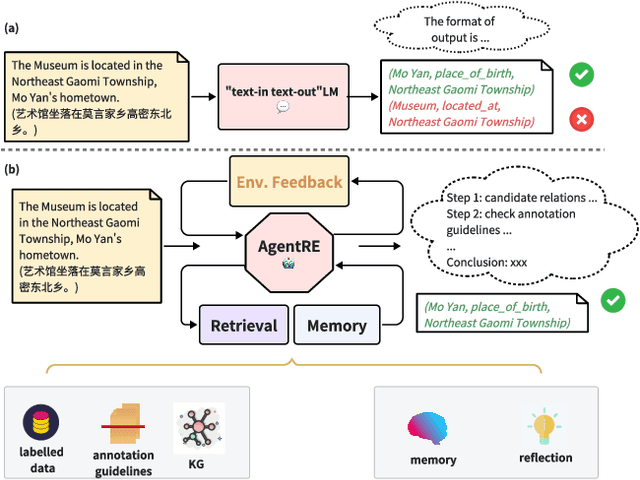

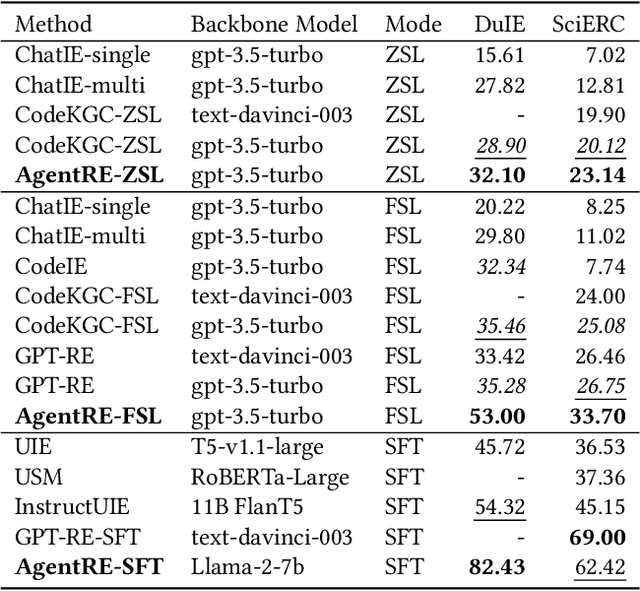

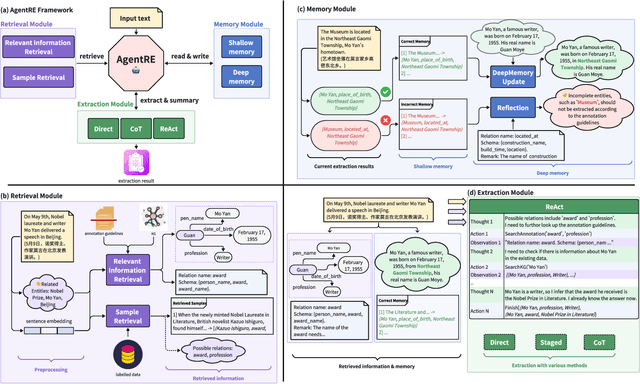

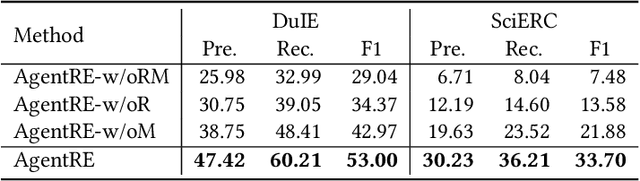

Abstract:Relation extraction (RE) in complex scenarios faces challenges such as diverse relation types and ambiguous relations between entities within a single sentence, leading to the poor performance of pure "text-in, text-out" language models (LMs). To address these challenges, in this paper, we propose an agent-based RE framework, namely AgentRE, which fully leverages the potential of large language models (LLMs), including memory, retrieval and reflection, to achieve RE in complex scenarios. Specifically, three major modules are built into AgentRE, serving as tools that help the agent acquire and process various useful information, thereby improving RE performance. Our extensive experimental results on two datasets in English and Chinese demonstrate AgentRE's superior performance, especially in low-resource scenarios. Additionally, the trajectories generated by AgentRE can be refined to construct a high-quality training dataset incorporating different reasoning methods, which can be used to fine-tune smaller models. Code is available at https://github.com/Lightblues/AgentRE.

CoopASD: Cooperative Machine Anomalous Sound Detection with Privacy Concerns

Aug 27, 2024

Abstract:Machine anomalous sound detection (ASD) has emerged as one of the most promising applications in the Industrial Internet of Things (IIoT) due to its unprecedented efficacy in mitigating risks of malfunctions and promoting production efficiency. Previous works mainly investigated the machine ASD task under centralized settings. However, developing the ASD system under decentralized settings is crucial in practice, since the machine data are dispersed in various factories and the data should not be explicitly shared due to privacy concerns. To enable these factories to cooperatively develop a scalable ASD model while preserving their privacy, we propose a novel framework named CoopASD, where each factory trains an ASD model on its local dataset, and a central server aggregates these local models periodically. We employ a pre-trained model as the backbone of the ASD model to improve its robustness and develop specialized techniques to stabilize the model under a completely non-iid and domain shift setting. Compared with previous state-of-the-art (SOTA) models trained in centralized settings, CoopASD showcases competitive results with negligible degradation of 0.08%. We also conduct extensive ablation studies to demonstrate the effectiveness of CoopASD.
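The abstract states only that a central server periodically aggregates the locally trained models; it does not give the aggregation rule. As a rough sketch, the code below assumes a sample-weighted average of parameters (FedAvg-style). The `aggregate` function, the dictionary-of-lists weight format, and the factory data are hypothetical.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def aggregate(local_models: List[Weights], num_samples: List[int]) -> Weights:
    """Average local parameters, weighting each factory by its dataset size.

    Assumption: every local model has the same parameter names and shapes.
    Only parameters leave each factory; the raw audio data stays local.
    """
    if not local_models or len(local_models) != len(num_samples):
        raise ValueError("need one sample count per local model")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    global_model: Weights = {}
    for name, reference in local_models[0].items():
        global_model[name] = [
            sum(model[name][i] * n for model, n in zip(local_models, num_samples)) / total
            for i in range(len(reference))
        ]
    return global_model


# Two hypothetical factories with 100 and 300 local training clips.
factory_a = {"w": [1.0, 2.0], "b": [0.0]}
factory_b = {"w": [3.0, 4.0], "b": [1.0]}
global_model = aggregate([factory_a, factory_b], num_samples=[100, 300])
```

In a full training loop the server would send `global_model` back to each factory before the next local training round.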

Subgoal-based Hierarchical Reinforcement Learning for Multi-Agent Collaboration

Aug 21, 2024

Abstract:Recent advancements in reinforcement learning have made significant impacts across various domains, yet they often struggle in complex multi-agent environments due to issues like algorithm instability, low sampling efficiency, and the challenges of exploration and dimensionality explosion. Hierarchical reinforcement learning (HRL) offers a structured approach to decompose complex tasks into simpler sub-tasks, which is promising for multi-agent settings. This paper advances the field by introducing a hierarchical architecture that autonomously generates effective subgoals without explicit constraints, enhancing both flexibility and stability in training. We propose a dynamic goal generation strategy that adapts based on environmental changes. This method significantly improves the adaptability and sample efficiency of the learning process. Furthermore, we address the critical issue of credit assignment in multi-agent systems by synergizing our hierarchical architecture with a modified QMIX network, thus improving overall strategy coordination and efficiency. Comparative experiments with mainstream reinforcement learning algorithms demonstrate the superior convergence speed and performance of our approach in both single-agent and multi-agent environments, confirming its effectiveness and flexibility in complex scenarios. Our code is open-sourced at: https://github.com/SICC-Group/GMAH.
