Danya Yao

Timealign: A multi-modal object detection method for time misalignment fusing in autonomous driving

Dec 13, 2024

Abstract: Multi-modal perception methods are thriving in autonomous driving because they make better use of complementary data from different sensors. Such methods depend on calibration and synchronization between sensors to obtain accurate environmental information. Spatial-alignment robustness in autonomous driving object detection has already been studied; however, research on temporal alignment is relatively scarce. Because LiDAR point clouds are more challenging to transfer in real time in real-world experiments, our study uses historical LiDAR frames to better align features when LiDAR data lags. We design a Timealign module, built on the SOTA GraphBEV framework, that predicts LiDAR features and combines them with observations to tackle such time misalignment.

Towards Fault Tolerance in Multi-Agent Reinforcement Learning

Nov 30, 2024

Abstract: Agent faults pose a significant threat to the performance of multi-agent reinforcement learning (MARL) algorithms, introducing two key challenges. First, agents often struggle to extract critical information from the chaotic state space created by unexpected faults. Second, transitions recorded before and after faults in the replay buffer affect training unevenly, leading to a sample imbalance problem. To overcome these challenges, this paper enhances the fault tolerance of MARL by combining optimized model architecture with a tailored training data sampling strategy. Specifically, an attention mechanism is incorporated into the actor and critic networks to automatically detect faults and dynamically regulate the attention given to faulty agents. Additionally, a prioritization mechanism is introduced to selectively sample transitions critical to current training needs. To further support research in this area, we design and open-source a highly decoupled code platform for fault-tolerant MARL, aimed at improving the efficiency of studying related problems. Experimental results demonstrate the effectiveness of our method in handling various types of faults, faults occurring in any agent, and faults arising at random times.
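
The abstract does not say how transitions are prioritized, so the following is only a minimal sketch of one common choice, proportional prioritization, in which a transition is drawn with probability proportional to its priority raised to a power `alpha`. The function name `sample_prioritized`, the `alpha=0.6` default, and the idea of using a per-transition score as the priority are assumptions for illustration, not the paper's method.

```python
import random

def sample_prioritized(priorities, batch_size, alpha=0.6, rng=random):
    """Draw replay-buffer indices with probability proportional to priority**alpha.

    priorities: one non-negative score per stored transition (hypothetical;
                for example a TD-error magnitude). At least one must be > 0.
    alpha:      0 gives uniform sampling, larger values favor high priorities.
    """
    weights = [p ** alpha for p in priorities]
    # random.choices samples with replacement according to the given weights.
    return rng.choices(range(len(priorities)), weights=weights, k=batch_size)

# Transitions with zero priority are never selected.
batch = sample_prioritized([0.0, 0.0, 1.0], batch_size=4)
```

With `alpha` between 0 and 1, rarely seen but important transitions (such as those recorded just after a fault) can be sampled more often without completely starving the rest of the buffer.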

A re-calibration method for object detection with multi-modal alignment bias in autonomous driving

May 27, 2024

Abstract: Multi-modal object detection in autonomous driving has achieved great breakthroughs by fusing complementary information from different sensors. Previous work has generally assumed that the calibration between sensors such as LiDAR and camera is precise. However, in reality, calibration matrices are fixed when vehicles leave the factory, while vibration, bumps, and data lags may cause calibration bias. Because research on how calibration affects fusion detection performance is relatively scarce, multi-sensor detection methods with flexible calibration dependency have always been attractive. In this paper, we conduct experiments on the SOTA detection method EPNet++ and show that a slight calibration bias can seriously reduce performance. We also propose a re-calibration model based on semantic segmentation, which can be combined with a detection algorithm to improve performance and robustness under multi-modal calibration bias.
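
To give a feel for why a slight calibration bias matters, the sketch below projects a 3D point into an image with a pinhole camera model, once with the correct extrinsics and once with a small yaw error, and reports how far the projection moves. The intrinsics (`fx`, `fy`, `cx`, `cy`) and the function names are hypothetical values chosen for illustration; this is not the experimental setup used with EPNet++.

```python
import math

def rotate_yaw(point, angle_rad):
    """Rotate a camera-frame point (x right, y down, z forward) about the y axis."""
    x, y, z = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x + s * z, y, -s * x + c * z)

def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point to pixel coordinates."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)

def pixel_shift(point, bias_deg, fx=700.0, fy=700.0, cx=620.0, cy=190.0):
    """Pixel distance between the correct projection and the biased one."""
    u0, v0 = project(point, fx, fy, cx, cy)
    biased = rotate_yaw(point, math.radians(bias_deg))
    u1, v1 = project(biased, fx, fy, cx, cy)
    return math.hypot(u1 - u0, v1 - v0)

# A point 20 m straight ahead, with a 1 degree yaw error in the extrinsics.
shift = pixel_shift((0.0, 0.0, 20.0), bias_deg=1.0)
```

Under these assumed intrinsics, a one-degree yaw error moves the projection by roughly 12 pixels, which is enough to attach LiDAR points to the wrong image features for small or distant objects.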

Synthetic Datasets for Autonomous Driving: A Survey

Apr 24, 2023

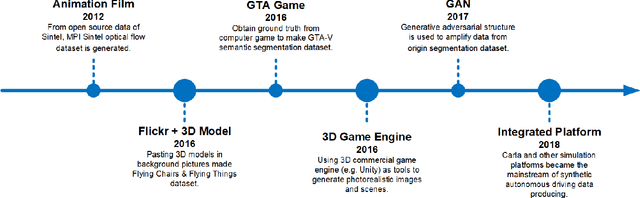

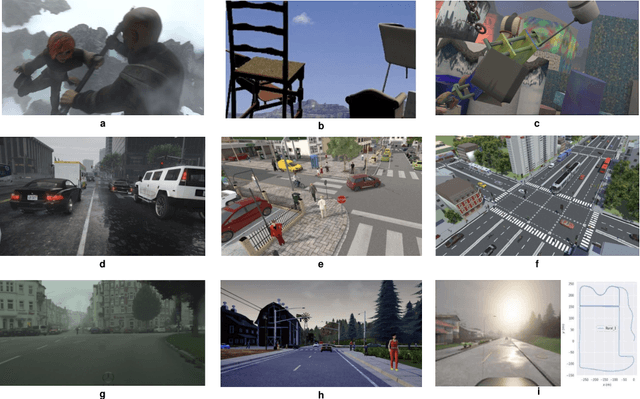

Abstract: Autonomous driving techniques have been flourishing in recent years while demanding huge amounts of high-quality data. However, it is difficult for real-world datasets to keep pace with changing requirements because data collection and labeling are expensive and time-consuming. Therefore, more and more researchers are turning to synthetic datasets to easily generate rich and changeable data as an effective complement to real-world data and to improve the performance of algorithms. In this paper, we summarize the evolution of synthetic dataset generation methods and review the work to date on synthetic datasets in single-task and multi-task categories for autonomous driving research. We also discuss the role that synthetic datasets play in evaluation, gap testing, and positive effects in autonomous-driving-related algorithm testing, especially regarding trustworthiness and safety. Finally, we discuss general trends and possible development directions. To the best of our knowledge, this is the first survey focusing on the application of synthetic datasets in autonomous driving. This survey also raises awareness of the problems of real-world deployment of autonomous driving technology and provides researchers with a possible solution.

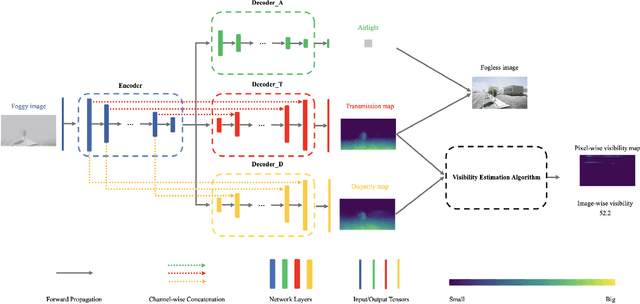

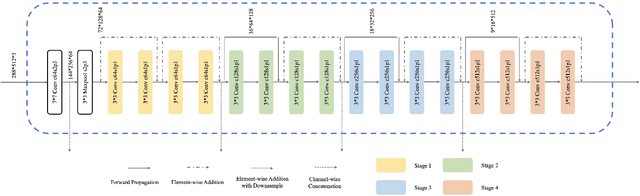

DMRVisNet: Deep Multi-head Regression Network for Pixel-wise Visibility Estimation Under Foggy Weather

Dec 08, 2021

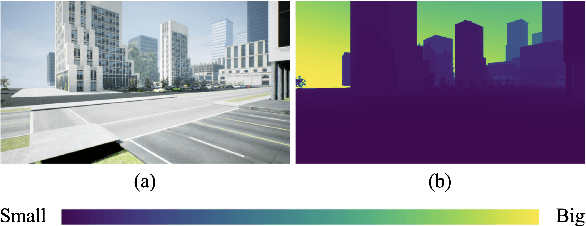

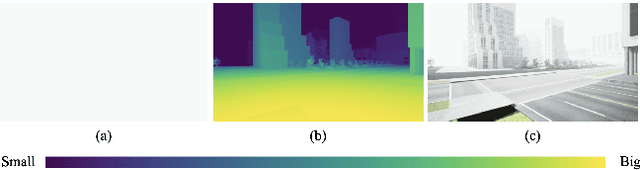

Abstract: Scene perception is essential for driving decision-making and traffic safety. However, fog is a common weather condition that frequently appears in the real world, especially in mountain areas, making it difficult to accurately observe the surrounding environment. Therefore, precisely estimating visibility under foggy weather can significantly benefit traffic management and safety. To address this, most current methods use professional instruments installed at fixed locations on the roads to measure visibility; these methods are expensive and inflexible. In this paper, we propose an innovative end-to-end convolutional neural network framework that estimates visibility by leveraging Koschmieder's law, using image data exclusively. The proposed method estimates visibility by integrating the physical model into the framework, instead of directly predicting the visibility value via the convolutional neural network. Moreover, we estimate visibility as a pixel-wise visibility map, in contrast to previous visibility measurement methods, which predict only a single value for an entire image. Thus, the estimated result of our method is more informative, particularly in uneven fog scenarios, which can benefit the development of a more precise early warning system for foggy weather, thereby better protecting intelligent transportation infrastructure systems and promoting their development. To validate the proposed framework, a virtual dataset, FACI, containing 3,000 foggy images at different concentrations, is collected using the AirSim platform. Detailed experiments show that the proposed method achieves performance competitive with that of state-of-the-art methods.
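
For readers unfamiliar with Koschmieder's law, the sketch below shows the physical relationship the abstract refers to: observed intensity is scene radiance attenuated by `exp(-beta * depth)` plus scattered airlight, and visibility follows from the extinction coefficient `beta` once a contrast threshold is fixed. The function names and the 0.05 threshold (the meteorological optical range convention; Koschmieder's original value was 0.02) are assumptions here, and the code illustrates only the physics, not the DMRVisNet network itself.

```python
import math

def transmission(beta, depth):
    """Fraction of scene radiance that survives fog over the given depth (meters)."""
    return math.exp(-beta * depth)

def foggy_intensity(clear, airlight, beta, depth):
    """Koschmieder's law: attenuated scene radiance plus scattered airlight."""
    t = transmission(beta, depth)
    return clear * t + airlight * (1.0 - t)

def visibility_from_beta(beta, contrast_threshold=0.05):
    """Distance at which contrast drops to the threshold; beta must be > 0."""
    return -math.log(contrast_threshold) / beta

def visibility_map(beta_map, contrast_threshold=0.05):
    """Apply the conversion per pixel to a 2D list of extinction coefficients."""
    return [[visibility_from_beta(b, contrast_threshold) for b in row]
            for row in beta_map]

# Uneven fog: a denser patch (larger beta) yields a shorter visibility.
vis = visibility_map([[0.03, 0.03], [0.03, 0.06]])
```

A per-pixel extinction map like the hypothetical `beta_map` above is what makes a visibility map more informative than a single image-level value when the fog is uneven.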
