Yiting Liu

CL-bench: A Benchmark for Context Learning

Feb 03, 2026

Abstract:Current language models (LMs) excel at reasoning over prompts using pre-trained knowledge. However, real-world tasks are far more complex and context-dependent: models must learn from task-specific context and leverage new knowledge beyond what is learned during pre-training to reason and resolve tasks. We term this capability context learning, a crucial ability that humans naturally possess but that has been largely overlooked. To this end, we introduce CL-bench, a real-world benchmark consisting of 500 complex contexts, 1,899 tasks, and 31,607 verification rubrics, all crafted by experienced domain experts. Each task is designed such that the new content required to resolve it is contained within the corresponding context. Resolving tasks in CL-bench requires models to learn from the context, ranging from new domain-specific knowledge, rule systems, and complex procedures to laws derived from empirical data, all of which are absent from pre-training. This goes far beyond long-context tasks that primarily test retrieval or reading comprehension, and in-context learning tasks, where models learn simple task patterns via instructions and demonstrations. Our evaluations of ten frontier LMs find that models solve only 17.2% of tasks on average. Even the best-performing model, GPT-5.1, solves only 23.7%, revealing that LMs have yet to achieve effective context learning, which poses a critical bottleneck for tackling real-world, complex context-dependent tasks. CL-bench represents a step towards building LMs with this fundamental capability, making them more intelligent and advancing their deployment in real-world scenarios.

Beyond Activation Patterns: A Weight-Based Out-of-Context Explanation of Sparse Autoencoder Features

Jan 30, 2026

Abstract:Sparse autoencoders (SAEs) have emerged as a powerful technique for decomposing language model representations into interpretable features. Current interpretation methods infer feature semantics from activation patterns, but overlook that features are trained to reconstruct activations that serve computational roles in the forward pass. We introduce a novel weight-based interpretation framework that measures functional effects through direct weight interactions, requiring no activation data. Through three experiments on Gemma-2 and Llama-3.1 models, we demonstrate that (1) 1/4 of features directly predict output tokens, (2) features actively participate in attention mechanisms with depth-dependent structure, and (3) semantic and non-semantic feature populations exhibit distinct distribution profiles in attention circuits. Our analysis provides the missing out-of-context half of SAE feature interpretability.

MAD-NG: Meta-Auto-Decoder Neural Galerkin Method for Solving Parametric Partial Differential Equations

Dec 25, 2025

Abstract:Parametric partial differential equations (PDEs) are fundamental for modeling a wide range of physical and engineering systems influenced by uncertain or varying parameters. Traditional neural network-based solvers, such as Physics-Informed Neural Networks (PINNs) and Deep Galerkin Methods, often face challenges in generalization and long-time prediction efficiency due to their dependence on full space-time approximations. To address these issues, we propose a novel and scalable framework that significantly enhances the Neural Galerkin Method (NGM) by incorporating the Meta-Auto-Decoder (MAD) paradigm. Our approach leverages space-time decoupling to enable more stable and efficient time integration, while meta-learning-driven adaptation allows rapid generalization to unseen parameter configurations with minimal retraining. Furthermore, randomized sparse updates effectively reduce computational costs without compromising accuracy. Together, these advancements enable our method to achieve physically consistent, long-horizon predictions for complex parameterized evolution equations with significantly lower computational overhead. Numerical experiments on benchmark problems demonstrate that our method performs comparatively well in terms of accuracy, robustness, and adaptability.

Bridging the Initialization Gap: A Co-Optimization Framework for Mixed-Size Global Placement

Nov 13, 2025

Abstract:Global placement is a critical step with high computational complexity in VLSI physical design. Modern analytical placers formulate the placement problem as a nonlinear optimization, where initialization strongly affects both convergence behavior and final placement quality. However, existing initialization methods exhibit a trade-off: area-aware initializers account for cell areas but are computationally expensive and can dominate total runtime, while fast point-based initializers ignore cell area, leading to a modeling gap that impairs convergence and solution quality. We propose a lightweight co-optimization framework that bridges this initialization gap through two strategies. First, an area-hint refinement initializer incorporates heuristic cell area information into a signed graph signal by augmenting the netlist graph with virtual nodes and negative-weight edges, yielding an area-aware and spectrally smooth placement initialization. Second, a macro-schedule placement procedure progressively restores area constraints, enabling a smooth transition from the refined initializer to the full area-aware objective and producing high-quality placement results. We evaluate the framework on macro-heavy ISPD2005 academic benchmarks and two real-world industrial designs across two technology nodes (12 cases in total). Experimental results show that our method consistently improves half-perimeter wirelength (HPWL) over point-based initializers in 11 out of 12 cases, achieving up to 2.2% HPWL reduction, while running approximately 100 times faster than the state-of-the-art area-aware initializer.

GeoFM: Enhancing Geometric Reasoning of MLLMs via Synthetic Data Generation through Formal Language

Oct 31, 2025

Abstract:Multi-modal Large Language Models (MLLMs) have gained significant attention in both academia and industry for their capabilities in handling multi-modal tasks. However, these models face challenges in mathematical geometric reasoning due to the scarcity of high-quality geometric data. To address this issue, synthetic geometric data has become an essential strategy. Current methods for generating synthetic geometric data involve rephrasing or expanding existing problems and utilizing predefined rules and templates to create geometric images and problems. However, these approaches often produce data that lacks diversity or is prone to noise. Additionally, the geometric images synthesized by existing methods tend to exhibit limited variation and deviate significantly from authentic geometric diagrams. To overcome these limitations, we propose GeoFM, a novel method for synthesizing geometric data. GeoFM uses formal languages to explore combinations of conditions within metric space, generating high-fidelity geometric problems that differ from the originals while ensuring correctness through a symbolic engine. Experimental results show that our synthetic data significantly outperforms existing methods. The model trained with our data surpasses the proprietary GPT-4o model by 18.7% on geometry problem-solving tasks in MathVista and by 16.5% on GeoQA. Additionally, it exceeds the performance of a leading open-source model by 5.7% on MathVista and by 2.7% on GeoQA.

MEET: A Million-Scale Dataset for Fine-Grained Geospatial Scene Classification with Zoom-Free Remote Sensing Imagery

Mar 14, 2025

Abstract:Accurate fine-grained geospatial scene classification using remote sensing imagery is essential for a wide range of applications. However, existing approaches often rely on manually zooming remote sensing images at different scales to create typical scene samples. This approach fails to adequately support the fixed-resolution image interpretation requirements in real-world scenarios. To address this limitation, we introduce the Million-scale finE-grained geospatial scEne classification dataseT (MEET), which contains over 1.03 million zoom-free remote sensing scene samples, manually annotated into 80 fine-grained categories. In MEET, each scene sample follows a scene-in-scene layout, where the central scene serves as the reference, and auxiliary scenes provide crucial spatial context for fine-grained classification. Moreover, to tackle the emerging challenge of scene-in-scene classification, we present the Context-Aware Transformer (CAT), a model specifically designed for this task. CAT adaptively fuses spatial context to accurately classify the scene samples by learning attentional features that capture the relationships between the center and auxiliary scenes. Based on MEET, we establish a comprehensive benchmark for fine-grained geospatial scene classification, evaluating CAT against 11 competitive baselines. The results demonstrate that CAT significantly outperforms these baselines, achieving a 1.88% higher balanced accuracy (BA) with the Swin-Large backbone, and a notable 7.87% improvement with the Swin-Huge backbone. Further experiments validate the effectiveness of each module in CAT and show the practical applicability of CAT in urban functional zone mapping. The source code and dataset will be publicly available at https://jerrywyn.github.io/project/MEET.html.

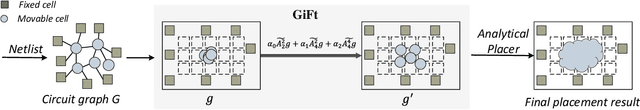

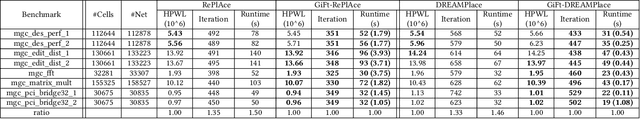

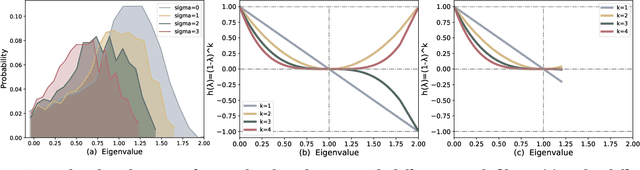

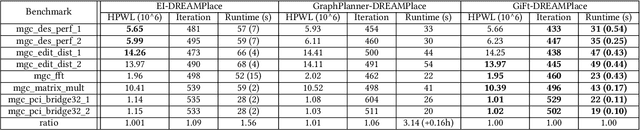

The Power of Graph Signal Processing for Chip Placement Acceleration

Feb 24, 2025

Abstract:Placement is a critical task with high computational complexity in VLSI physical design. Modern analytical placers formulate the placement objective as a nonlinear optimization task, which suffers from long iteration times. To accelerate and enhance the placement process, recent studies have turned to deep learning-based approaches, particularly leveraging graph convolution networks (GCNs). However, learning-based placers require time- and data-consuming model training due to the complexity of circuit placement that involves large-scale cells and design-specific graph statistics. This paper proposes GiFt, a parameter-free technique for accelerating placement, rooted in graph signal processing. GiFt excels at capturing multi-resolution smooth signals of circuit graphs to generate optimized placement solutions without the need for time-consuming model training, and meanwhile significantly reduces the number of iterations required by analytical placers. Experimental results show that GiFt significantly improves placement efficiency, while achieving competitive or superior performance compared to state-of-the-art placers. In particular, compared to DREAMPlace, the recently proposed GPU-accelerated analytical placer, GF-Placer improves total runtime by over 45%.

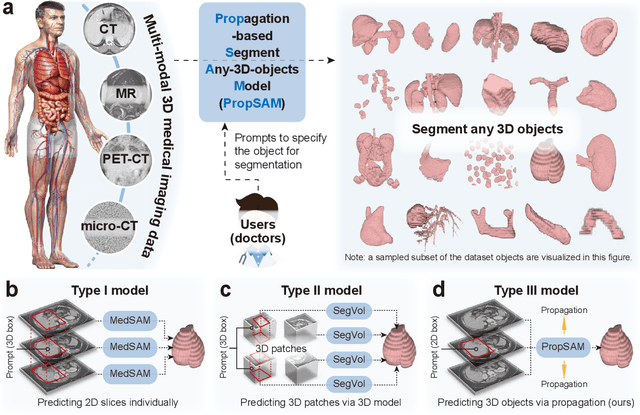

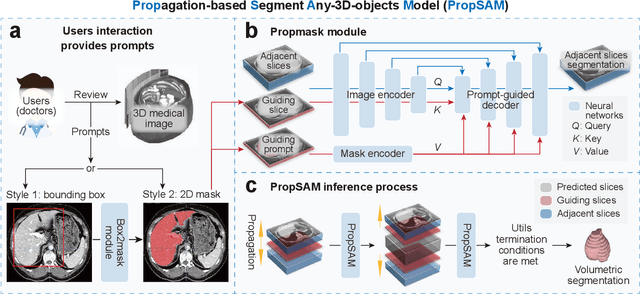

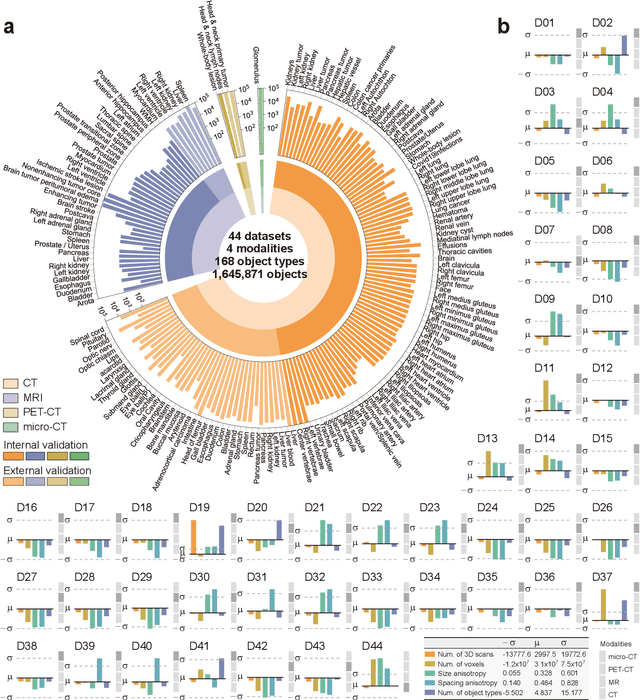

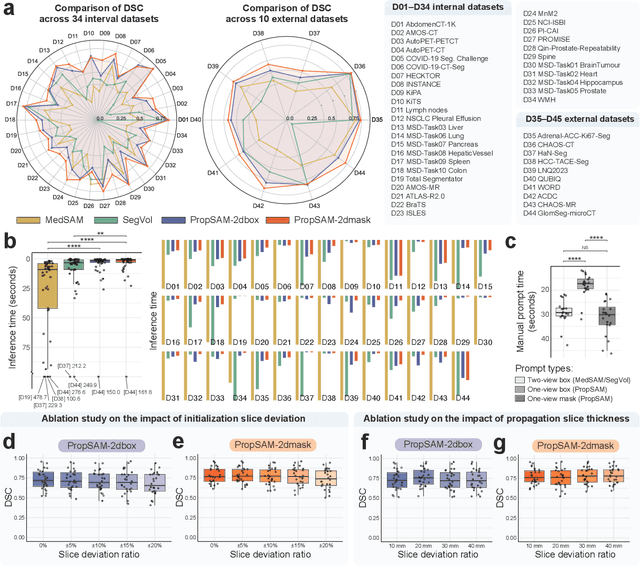

PropSAM: A Propagation-Based Model for Segmenting Any 3D Objects in Multi-Modal Medical Images

Aug 25, 2024

Abstract:Volumetric segmentation is crucial for medical imaging but is often constrained by labor-intensive manual annotations and the need for scenario-specific model training. Furthermore, existing general segmentation models are inefficient due to their design and inferential approaches. Addressing this clinical demand, we introduce PropSAM, a propagation-based segmentation model that optimizes the use of 3D medical structure information. PropSAM integrates a CNN-based UNet for intra-slice processing with a Transformer-based module for inter-slice propagation, focusing on structural and semantic continuities to enhance segmentation across various modalities. Distinctively, PropSAM operates on a one-view prompt, such as a 2D bounding box or sketch mask, unlike conventional models that require two-view prompts. It has demonstrated superior performance, significantly improving the Dice Similarity Coefficient (DSC) across 44 medical datasets and various imaging modalities, outperforming models like MedSAM and SegVol with an average DSC improvement of 18.1%. PropSAM also maintains stable predictions despite prompt deviations and varying propagation configurations, confirmed by one-way ANOVA tests with P>0.5985 and P>0.6131, respectively. Moreover, PropSAM's efficient architecture enables faster inference speeds (Wilcoxon rank-sum test, P<0.001) and reduces user interaction time by 37.8% compared to two-view prompt models. Its ability to handle irregular and complex objects with robust performance further demonstrates its potential in clinical settings, facilitating more automated and reliable medical imaging analyses with minimal retraining.

PropNet: Propagating 2D Annotation to 3D Segmentation for Gastric Tumors on CT Scans

May 29, 2023

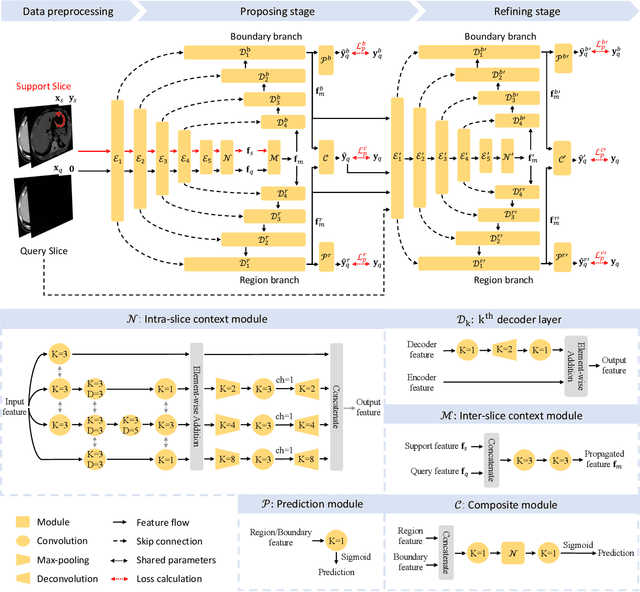

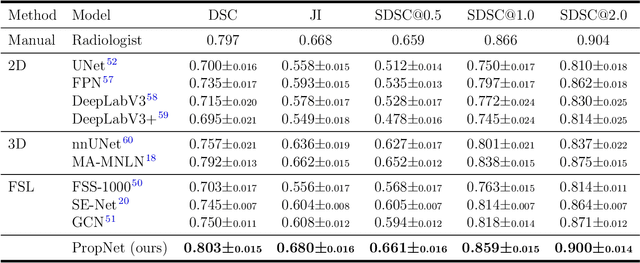

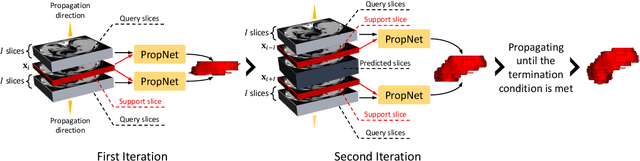

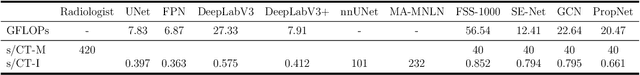

Abstract:**Background:** Accurate 3D CT scan segmentation of gastric tumors is pivotal for diagnosis and treatment. The challenges lie in the irregular shapes, blurred boundaries of tumors, and the inefficiency of existing methods. **Purpose:** We conducted a study to introduce a model, utilizing human-guided knowledge and unique modules, to address the challenges of 3D tumor segmentation. **Methods:** We developed the PropNet framework, propagating radiologists' knowledge from 2D annotations to the entire 3D space. This model consists of a proposing stage for coarse segmentation and a refining stage for improved segmentation, using two-way branches for enhanced performance and an up-down strategy for efficiency. **Results:** With 98 patient scans for training and 30 for validation, our method achieves a significant agreement with manual annotation (Dice of 0.803) and improves efficiency. The performance is comparable in different scenarios and with various radiologists' annotations (Dice between 0.785 and 0.803). Moreover, the model shows improved prognostic prediction performance (C-index of 0.620 vs. 0.576) on an independent validation set of 42 patients with advanced gastric cancer. **Conclusions:** Our model generates accurate tumor segmentation efficiently and stably, improving prognostic performance and reducing high-throughput image reading workload. This model can accelerate the quantitative analysis of gastric tumors and enhance downstream task performance.

Learning to Check Contract Inconsistencies

Dec 15, 2020

Abstract:Contract consistency is important in ensuring the legal validity of the contract. In many scenarios, a contract is written by filling the blanks in a precompiled form. Due to carelessness, two blanks that should be filled with the same (or different) content may be incorrectly filled with different (or the same) content. This will result in the issue of contract inconsistencies, which may severely impair the legal validity of the contract. Traditional methods to address this issue mainly rely on manual contract review, which is labor-intensive and costly. In this work, we formulate a novel Contract Inconsistency Checking (CIC) problem, and design an end-to-end framework, called Pair-wise Blank Resolution (PBR), to solve the CIC problem with high accuracy. Our PBR model contains a novel BlankCoder to address the challenge of modeling meaningless blanks. BlankCoder adopts a two-stage attention mechanism that adequately associates a meaningless blank with its relevant descriptions while avoiding the incorporation of irrelevant context words. Experiments conducted on real-world datasets show the promising performance of our method with a balanced accuracy of 94.05% and an F1 score of 90.90% in the CIC problem.
