PropSAM: A Propagation-Based Model for Segmenting Any 3D Objects in Multi-Modal Medical Images


Aug 25, 2024

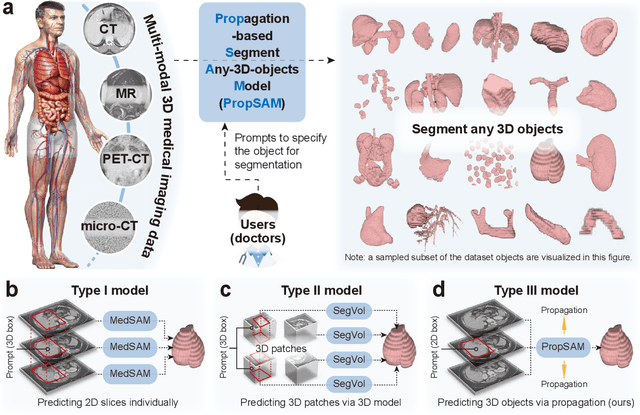

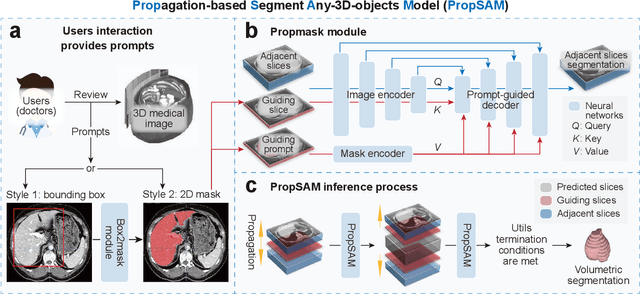

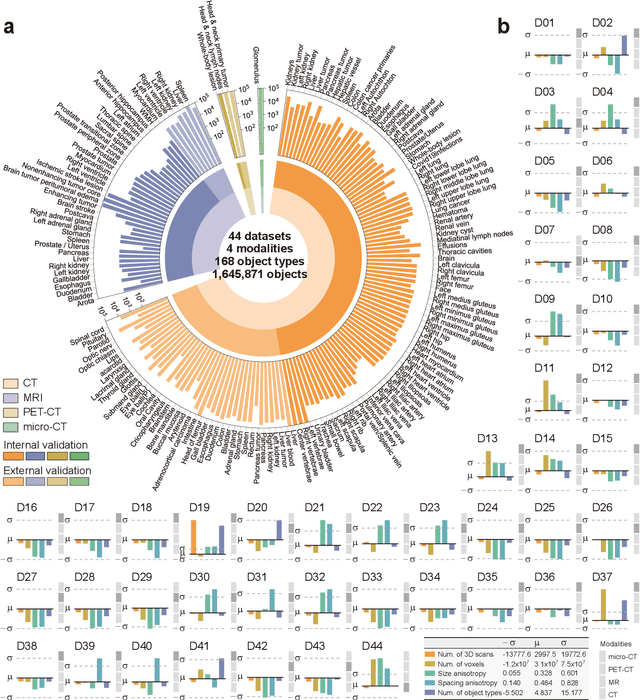

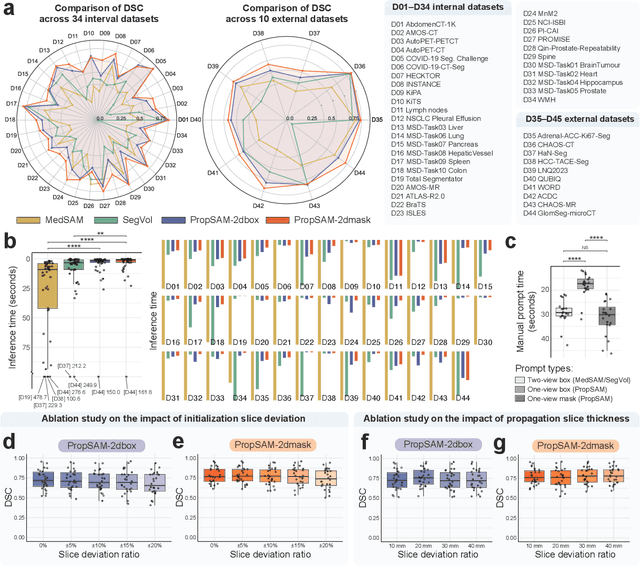

Volumetric segmentation is crucial for medical imaging, but it is often constrained by labor-intensive manual annotation and the need for scenario-specific model training. Existing general-purpose segmentation models are also inefficient because of their design and inference approaches. To address this clinical demand, we introduce PropSAM, a propagation-based segmentation model that makes fuller use of the 3D structural information in medical images. PropSAM combines a CNN-based UNet for intra-slice processing with a Transformer-based module for inter-slice propagation, exploiting structural and semantic continuity between slices to improve segmentation across modalities. Unlike conventional models that require two-view prompts, PropSAM operates on a one-view prompt, such as a 2D bounding box or sketch mask. Across 44 medical datasets spanning various imaging modalities, it outperforms models such as MedSAM and SegVol, with an average improvement in Dice Similarity Coefficient (DSC) of 18.1%. PropSAM's predictions also remain stable under prompt deviations and varying propagation configurations (one-way ANOVA tests, P>0.5985 and P>0.6131, respectively). Moreover, its efficient architecture enables faster inference (Wilcoxon rank-sum test, P<0.001) and reduces user interaction time by 37.8% compared with two-view prompt models. Its robust handling of irregular and complex objects further demonstrates its potential in clinical settings, facilitating more automated and reliable medical image analysis with minimal retraining.
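The results above are reported as the Dice Similarity Coefficient, which for a predicted voxel set $A$ and a ground-truth voxel set $B$ is $2|A \cap B| / (|A| + |B|)$. The following is a minimal sketch of that metric on toy 3D masks, not the authors' evaluation code. The names `dice_coefficient` and `box_mask`, the representation of a mask as a set of `(z, y, x)` tuples, and the choice to score two empty masks as 1.0 are all assumptions made for illustration.

```python
def dice_coefficient(pred, truth):
    """Dice Similarity Coefficient between two 3D masks.

    Each mask is an iterable of (z, y, x) voxel coordinates that belong
    to the segmented object (an illustrative representation, assumed here).
    """
    pred, truth = set(pred), set(truth)
    total = len(pred) + len(truth)
    if total == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * len(pred & truth) / total


def box_mask(z_range, y_range, x_range):
    """Hypothetical helper: all voxels inside an axis-aligned box.

    Each range is a (start, stop) pair with an exclusive stop.
    """
    return {
        (z, y, x)
        for z in range(*z_range)
        for y in range(*y_range)
        for x in range(*x_range)
    }


# Toy example: a 4x4x4 ground-truth cube and a prediction shifted by one voxel in x.
truth = box_mask((0, 4), (0, 4), (0, 4))  # 64 voxels
pred = box_mask((0, 4), (0, 4), (1, 5))   # 64 voxels, 48 of them overlapping
dsc = dice_coefficient(pred, truth)
print(f"DSC = {dsc:.3f}")  # prints "DSC = 0.750"
```

A one-voxel shift of a small object already lowers the score to 0.75, which illustrates why DSC is sensitive to boundary errors on small or irregular structures.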
