Hai Zhou

LLA: Enhancing Security and Privacy for Generative Models with Logic-Locked Accelerators

Dec 26, 2025

Abstract:We introduce LLA, an effective intellectual property (IP) protection scheme for generative AI models. LLA leverages the synergy between hardware and software to defend against various supply chain threats, including model theft, model corruption, and information leakage. On the software side, it embeds key bits into neurons that can trigger outliers to degrade performance and applies invariance transformations to obscure the key values. On the hardware side, it integrates a lightweight locking module into the AI accelerator while maintaining compatibility with various dataflow patterns and toolchains. An accelerator with a pre-stored secret key acts as a license to access the model services provided by the IP owner. The evaluation results show that LLA can withstand a broad range of oracle-guided key optimization attacks, while incurring a minimal computational overhead of less than 0.1% for 7,168 key bits.

The Power of Graph Signal Processing for Chip Placement Acceleration

Feb 24, 2025

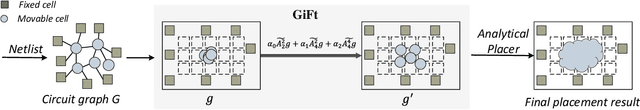

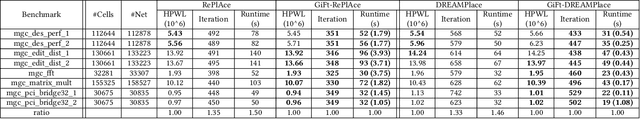

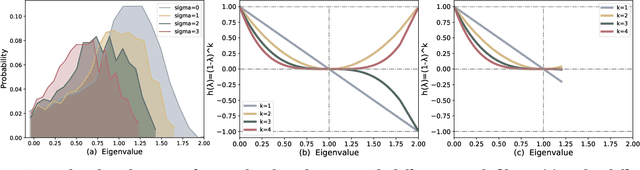

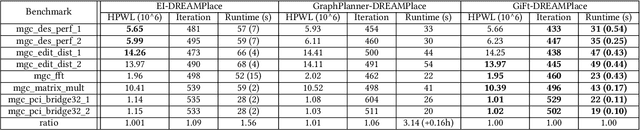

Abstract:Placement is a critical task with high computational complexity in VLSI physical design. Modern analytical placers formulate the placement objective as a nonlinear optimization task, which suffers from long iteration times. To accelerate and enhance the placement process, recent studies have turned to deep learning-based approaches, particularly leveraging graph convolution networks (GCNs). However, learning-based placers require time- and data-consuming model training due to the complexity of circuit placement, which involves large-scale cells and design-specific graph statistics. This paper proposes GiFt, a parameter-free technique for accelerating placement, rooted in graph signal processing. GiFt excels at capturing multi-resolution smooth signals of circuit graphs to generate optimized placement solutions without the need for time-consuming model training, while significantly reducing the number of iterations required by analytical placers. Experimental results show that GiFt significantly improves placement efficiency, while achieving competitive or superior performance compared to state-of-the-art placers. In particular, compared to DREAMPlace, the recently proposed GPU-accelerated analytical placer, GF-Placer improves total runtime by over 45%.
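To illustrate what a "smooth signal" on a circuit graph means, the sketch below applies a simple low-pass graph filter (neighbor averaging with self-loops) to a one-dimensional coordinate signal. This is a generic graph signal processing example and not the filter used by GiFt; the names `low_pass_filter` and `variation`, the toy path graph, and the coordinates are all assumptions made for illustration.

```python
def low_pass_filter(adj, signal, steps=1):
    """Replace each node's value by the mean of itself and its neighbors, `steps` times."""
    for _ in range(steps):
        signal = [
            (signal[i] + sum(signal[j] for j in adj[i])) / (1 + len(adj[i]))
            for i in range(len(signal))
        ]
    return signal


def variation(adj, signal):
    """Sum of squared differences across edges; smaller means a smoother signal."""
    return sum(
        (signal[i] - signal[j]) ** 2
        for i in adj
        for j in adj[i]
        if i < j  # count each undirected edge once
    )


# Hypothetical toy netlist: four cells connected in a path 0-1-2-3.
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
x = [0.0, 10.0, 0.0, 10.0]  # assumed initial x-coordinates, deliberately jagged

smoothed = low_pass_filter(adj, x, steps=2)
print(variation(adj, x), variation(adj, smoothed))
```

After filtering, connected cells sit closer together, which is the intuition behind using smooth graph signals as a starting point for placement.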

Certifying Global Robustness for Deep Neural Networks

May 31, 2024

Abstract:A globally robust deep neural network resists perturbations on all meaningful inputs. Current robustness certification methods emphasize local robustness, struggling to scale and generalize. This paper presents a systematic and efficient method to evaluate and verify global robustness for deep neural networks, leveraging the PAC verification framework for solid guarantees on verification results. We utilize probabilistic programs to characterize meaningful input regions, setting a realistic standard for global robustness. Additionally, we introduce the cumulative robustness curve as a criterion in evaluating global robustness. We design a statistical method that combines multi-level splitting and regression analysis for the estimation, significantly reducing the execution time. Experimental results demonstrate the efficiency and effectiveness of our verification method and its capability to find rare and diversified counterexamples for adversarial training.

Incentivizing Federated Learning

May 22, 2022

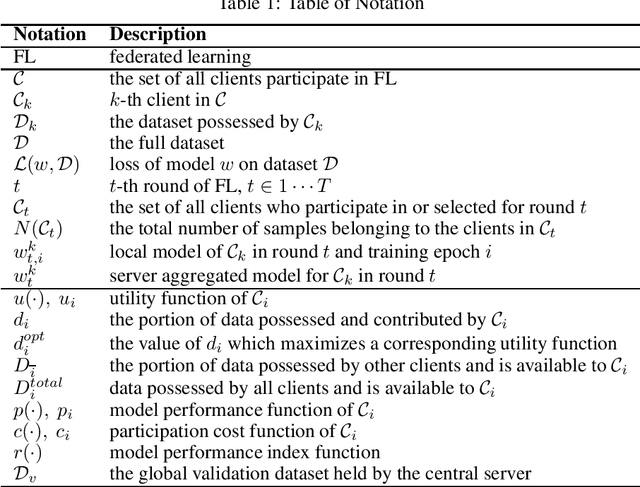

Abstract:Federated Learning is an emerging distributed collaborative learning paradigm used by many applications nowadays. The effectiveness of federated learning relies on clients' collective efforts and their willingness to contribute local data. However, due to privacy concerns and the costs of data collection and model training, clients may not always contribute all the data they possess, which would negatively affect the performance of the global model. This paper presents an incentive mechanism that encourages clients to contribute as much data as they can obtain. Unlike previous incentive mechanisms, our approach does not monetize data. Instead, we implicitly use model performance as a reward, i.e., significant contributors are paid off with better models. We theoretically prove that, under certain conditions, clients will use as much data as they can possibly possess to participate in federated learning with our incentive mechanism.

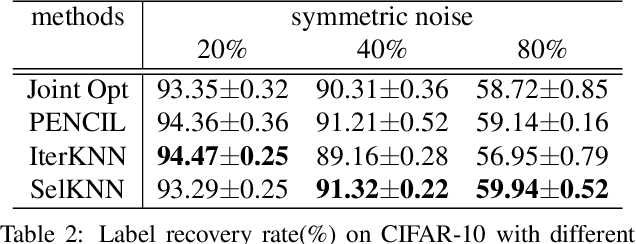

KNN-enhanced Deep Learning Against Noisy Labels

Dec 08, 2020

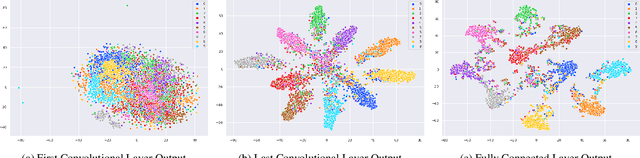

Abstract:Supervised learning on Deep Neural Networks (DNNs) is data hungry. Optimizing the performance of DNNs in the presence of noisy labels has become of paramount importance, since collecting a large dataset usually brings in noisy labels. Inspired by the robustness of K-Nearest Neighbors (KNN) against data noise, in this work, we propose to apply deep KNN for label cleanup. Our approach leverages DNNs for feature extraction and KNN for ground-truth label inference. We iteratively train the neural network and update labels to simultaneously proceed towards a higher label recovery rate and better classification performance. Experimental results show that under the same setting, our approach outperforms existing label correction methods and achieves better accuracy on multiple datasets, e.g., 76.78% on the Clothing1M dataset.
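The label-cleanup step described above can be sketched as a single KNN majority vote over feature vectors. This is a minimal illustration under stated assumptions, not the authors' implementation: the features here are fixed 2-D points rather than DNN embeddings, there is no iterative retraining, and the function name `knn_relabel` and the toy data are hypothetical.

```python
import math
from collections import Counter


def knn_relabel(features, labels, k=3):
    """Replace each label with the majority label among the k nearest other points."""
    new_labels = []
    for i, f in enumerate(features):
        # Distances to every other point; the point itself is excluded.
        dists = sorted(
            (math.dist(f, g), j) for j, g in enumerate(features) if j != i
        )
        votes = Counter(labels[j] for _, j in dists[:k])
        new_labels.append(votes.most_common(1)[0][0])
    return new_labels


# Assumed toy data: two well-separated clusters standing in for DNN features.
features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1),
            (5.0, 5.0), (5.1, 5.0), (5.0, 5.1), (5.1, 5.1)]
noisy = [0, 0, 1, 0, 1, 1, 1, 1]  # the label at index 2 is flipped

cleaned = knn_relabel(features, noisy, k=3)
print(cleaned)
```

In the approach the abstract describes, a step like this would alternate with network training, so the features improve as the labels are corrected.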
