Xun Lin

Time Is All It Takes: Spike-Retiming Attacks on Event-Driven Spiking Neural Networks

Feb 03, 2026

Abstract:Spiking neural networks (SNNs) compute with discrete spikes and exploit temporal structure, yet most adversarial attacks change intensities or event counts instead of timing. We study a timing-only adversary that retimes existing spikes while preserving spike counts and amplitudes in event-driven SNNs, thus remaining rate-preserving. We formalize a capacity-1 spike-retiming threat model with a unified trio of budgets: per-spike jitter $\mathcal{B}_{\infty}$, total delay $\mathcal{B}_{1}$, and tamper count $\mathcal{B}_{0}$. Feasible adversarial examples must satisfy timeline consistency and non-overlap, which makes the search space discrete and constrained. To optimize such retimings at scale, we use projected-in-the-loop (PIL) optimization: shift-probability logits yield a differentiable soft retiming for backpropagation, and a strict projection in the forward pass produces a feasible discrete schedule that satisfies capacity-1, non-overlap, and the chosen budget at every step. The objective maximizes task loss on the projected input and adds a capacity regularizer together with budget-aware penalties, which stabilizes gradients and aligns optimization with evaluation. Across event-driven benchmarks (CIFAR10-DVS, DVS-Gesture, N-MNIST) and diverse SNN architectures, we evaluate under binary and integer event grids and a range of retiming budgets, and also test models trained with timing-aware adversarial training designed to counter timing-only attacks. For example, on DVS-Gesture the attack attains high success (over $90\%$) while touching fewer than $2\%$ of spikes under $\mathcal{B}_{0}$. Taken together, our results show that spike retiming is a practical and stealthy attack surface that current defenses struggle to counter, providing a clear reference for temporal robustness in event-driven SNNs. Code is available at https://github.com/yuyi-sd/Spike-Retiming-Attacks.
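As a rough illustration of the three budgets and the feasibility constraints named in the abstract above, the following sketch measures a candidate retiming. It is not taken from the authors' code: the `(neuron_id, time_bin)` spike representation, the function names `retiming_budgets` and `is_feasible`, the use of absolute shifts for "total delay", and the reading of "timeline consistency" as "every spike stays inside the recording window" are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: one spike = (neuron_id, time_bin).
Spike = Tuple[int, int]


def retiming_budgets(original: List[Spike], retimed: List[Spike]) -> Dict[str, int]:
    """Measure a retiming against the three budgets (assumed definitions)."""
    if len(original) != len(retimed):
        raise ValueError("a retiming must preserve the spike count")
    shifts = []
    for (n0, t0), (n1, t1) in zip(original, retimed):
        if n0 != n1:
            raise ValueError("a timing-only attack keeps each spike on its neuron")
        shifts.append(abs(t1 - t0))
    return {
        "B_inf": max(shifts, default=0),         # largest per-spike jitter
        "B_1": sum(shifts),                      # total (absolute) delay
        "B_0": sum(1 for s in shifts if s > 0),  # number of tampered spikes
    }


def is_feasible(retimed: List[Spike], num_bins: int) -> bool:
    """Assumed capacity-1 check: at most one spike per (neuron, bin), all bins in range."""
    in_range = all(0 <= t < num_bins for _, t in retimed)
    no_overlap = len(set(retimed)) == len(retimed)
    return in_range and no_overlap


original = [(0, 1), (0, 4), (1, 2)]
retimed = [(0, 3), (0, 4), (1, 2)]  # only the first spike is moved, by 2 bins
budgets = retiming_budgets(original, retimed)
feasible = is_feasible(retimed, num_bins=6)
```

In this toy case one spike moves by two bins, so all three budgets are small, and the schedule stays feasible because no two spikes share a `(neuron, bin)` slot. Moving the first spike to bin 4 instead would collide with the second spike and fail the capacity-1 check.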

StegaVAR: Privacy-Preserving Video Action Recognition via Steganographic Domain Analysis

Dec 14, 2025

Abstract:Despite the rapid progress of deep learning in video action recognition (VAR) in recent years, privacy leakage in videos remains a critical concern. Current state-of-the-art privacy-preserving methods often rely on anonymization. These methods suffer from (1) low concealment, because they produce visually distorted videos that attract attackers' attention during transmission, and (2) spatiotemporal disruption, because they degrade the spatiotemporal features that are essential for accurate VAR. To address these issues, we propose StegaVAR, a novel framework that embeds action videos into ordinary cover videos and, for the first time, performs VAR directly in the steganographic domain. Throughout both data transmission and action analysis, the spatiotemporal information of the hidden secret video remains complete, while the natural appearance of the cover videos ensures that the transmission stays concealed. Considering the difficulty of steganographic domain analysis, we propose Secret Spatio-Temporal Promotion (STeP) and Cross-Band Difference Attention (CroDA) for analysis within the steganographic domain. STeP uses the secret video to guide spatiotemporal feature extraction in the steganographic domain during training. CroDA suppresses cover interference by capturing cross-band semantic differences. Experiments demonstrate that StegaVAR achieves superior VAR and privacy-preserving performance on widely used datasets. Moreover, our framework is effective for multiple steganographic models.

Learning Representation and Synergy Invariances: A Provable Framework for Generalized Multimodal Face Anti-Spoofing

Nov 18, 2025

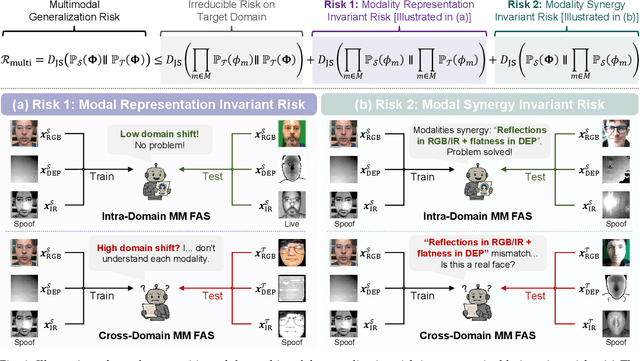

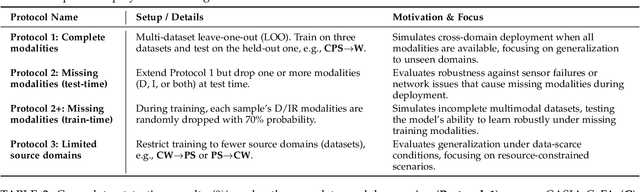

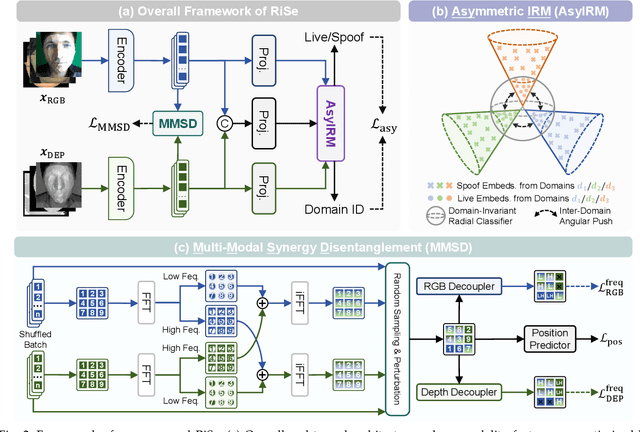

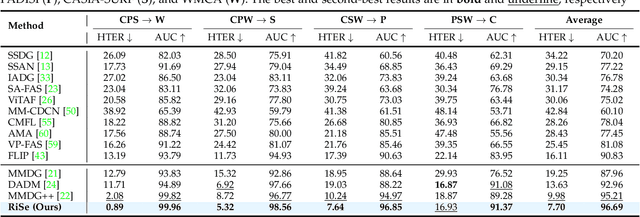

Abstract:Multimodal Face Anti-Spoofing (FAS) methods, which integrate multiple visual modalities, often suffer even more severe performance degradation than unimodal FAS when deployed in unseen domains. This is mainly due to two overlooked risks that affect cross-domain multimodal generalization. The first is the modal representation invariant risk, i.e., whether representations remain generalizable under domain shift. We theoretically show that the inherent class asymmetry in FAS (diverse spoofs vs. compact reals) enlarges the upper bound of generalization error, and this effect is further amplified in multimodal settings. The second is the modal synergy invariant risk, where models overfit to domain-specific inter-modal correlations. Such spurious synergy cannot generalize to unseen attacks in target domains, leading to performance drops. To solve these issues, we propose a provable framework, namely Multimodal Representation and Synergy Invariance Learning (RiSe). For representation risk, RiSe introduces Asymmetric Invariant Risk Minimization (AsyIRM), which learns an invariant spherical decision boundary in radial space to fit asymmetric distributions, while preserving domain cues in angular space. For synergy risk, RiSe employs Multimodal Synergy Disentanglement (MMSD), a self-supervised task enhancing intrinsic, generalizable modal features via cross-sample mixing and disentanglement. Theoretical analysis and experiments verify RiSe, which achieves state-of-the-art cross-domain performance.

SVC 2025: the First Multimodal Deception Detection Challenge

Aug 06, 2025

Abstract:Deception detection is a critical task in real-world applications such as security screening, fraud prevention, and credibility assessment. While deep learning methods have shown promise in surpassing human-level performance, their effectiveness often depends on the availability of high-quality and diverse deception samples. Existing research predominantly focuses on single-domain scenarios, overlooking the significant performance degradation caused by domain shifts. To address this gap, we present the SVC 2025 Multimodal Deception Detection Challenge, a new benchmark designed to evaluate cross-domain generalization in audio-visual deception detection. Participants are required to develop models that not only perform well within individual domains but also generalize across multiple heterogeneous datasets. By leveraging multimodal data, including audio, video, and text, this challenge encourages the design of models capable of capturing subtle and implicit deceptive cues. Through this benchmark, we aim to foster the development of more adaptable, explainable, and practically deployable deception detection systems, advancing the broader field of multimodal learning. By the conclusion of the workshop competition, a total of 21 teams had submitted their final results. See https://sites.google.com/view/svc-mm25 for more information.

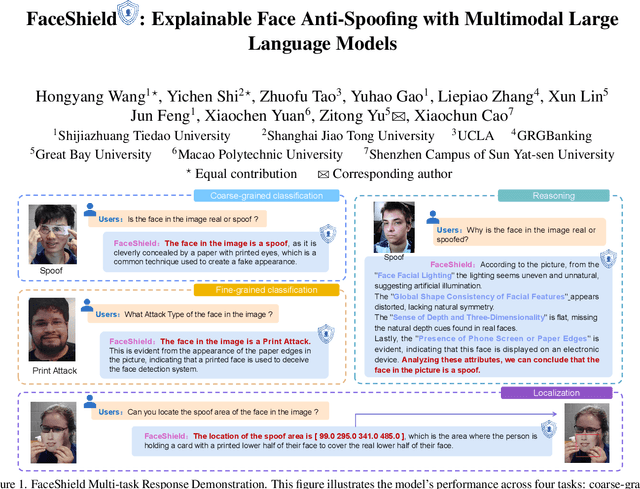

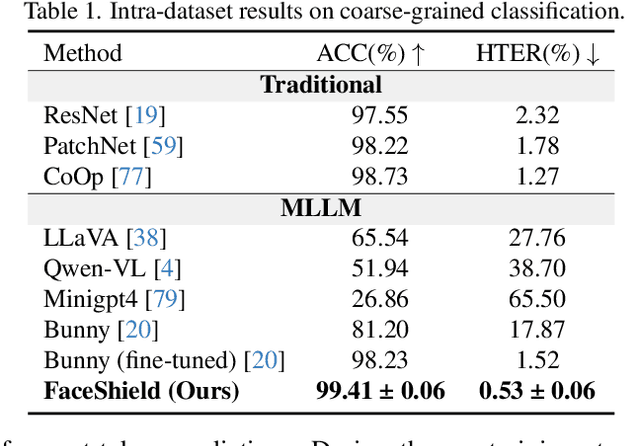

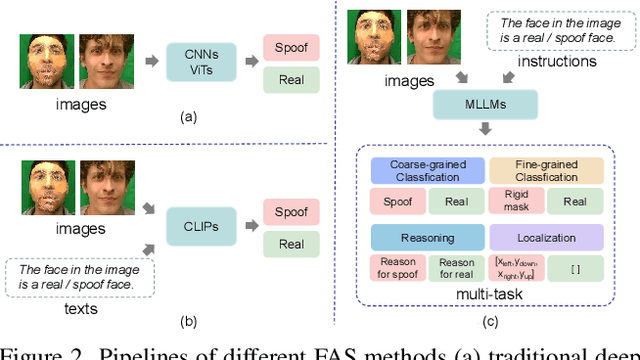

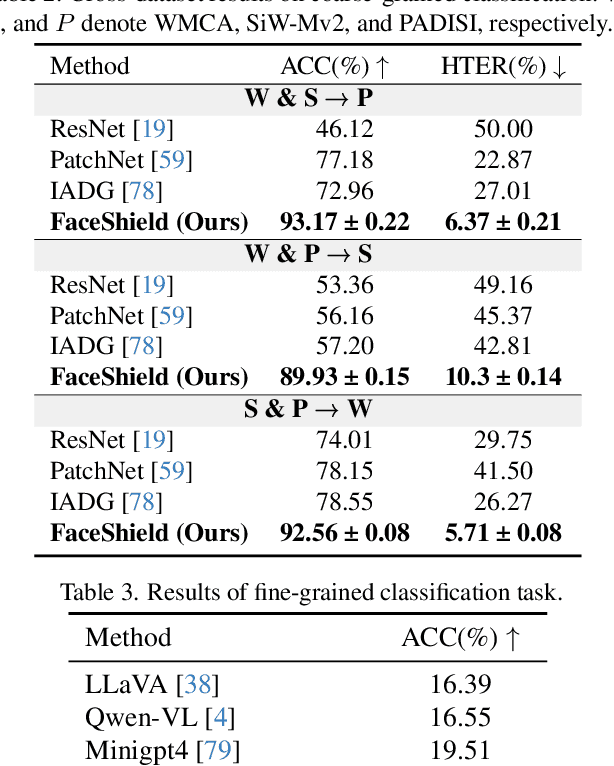

FaceShield: Explainable Face Anti-Spoofing with Multimodal Large Language Models

May 14, 2025

Abstract:Face anti-spoofing (FAS) is crucial for protecting facial recognition systems from presentation attacks. Previous methods approached this task as a classification problem, lacking interpretability and reasoning behind the predicted results. Recently, multimodal large language models (MLLMs) have shown strong capabilities in perception, reasoning, and decision-making in visual tasks. However, there is currently no universal and comprehensive MLLM or dataset specifically designed for the FAS task. To address this gap, we propose FaceShield, an MLLM for FAS, along with the corresponding pre-training and supervised fine-tuning (SFT) datasets, FaceShield-pre10K and FaceShield-sft45K. FaceShield is capable of determining the authenticity of faces, identifying types of spoofing attacks, providing reasoning for its judgments, and detecting attack areas. Specifically, we employ spoof-aware vision perception (SAVP) that incorporates both the original image and auxiliary information based on prior knowledge. We then use a prompt-guided vision token masking (PVTM) strategy to randomly mask vision tokens, thereby improving the model's generalization ability. We conducted extensive experiments on three benchmark datasets, demonstrating that FaceShield significantly outperforms previous deep learning models and general MLLMs on four FAS tasks, i.e., coarse-grained classification, fine-grained classification, reasoning, and attack localization. Our instruction datasets, protocols, and code will be released soon.

Denoising and Alignment: Rethinking Domain Generalization for Multimodal Face Anti-Spoofing

May 14, 2025

Abstract:Face Anti-Spoofing (FAS) is essential for the security of facial recognition systems in diverse scenarios such as payment processing and surveillance. Current multimodal FAS methods often struggle with effective generalization, mainly due to modality-specific biases and domain shifts. To address these challenges, we introduce the Multimodal Denoising and Alignment (MMDA) framework. By leveraging the zero-shot generalization capability of CLIP, the MMDA framework effectively suppresses noise in multimodal data through denoising and alignment mechanisms, thereby significantly enhancing the generalization performance of cross-modal alignment. The Modality-Domain Joint Differential Attention (MD2A) module in MMDA concurrently mitigates the impacts of domain and modality noise by refining the attention mechanism based on extracted common noise features. Furthermore, the Representation Space Soft (RS2) Alignment strategy utilizes the pre-trained CLIP model to align multi-domain multimodal data into a generalized representation space in a flexible manner, preserving intricate representations and enhancing the model's adaptability to various unseen conditions. We also design a U-shaped Dual Space Adaptation (U-DSA) module to enhance the adaptability of representations while maintaining generalization performance. These improvements not only enhance the framework's generalization capabilities but also boost its ability to capture complex representations. Our experimental results on four benchmark datasets under different evaluation protocols demonstrate that the MMDA framework outperforms existing state-of-the-art methods in terms of cross-domain generalization and multimodal detection accuracy. The code will be released soon.

Towards Model Resistant to Transferable Adversarial Examples via Trigger Activation

Apr 20, 2025

Abstract:Adversarial examples, characterized by imperceptible perturbations, pose significant threats to deep neural networks by misleading their predictions. A critical aspect of these examples is their transferability, allowing them to deceive unseen models in black-box scenarios. Despite the widespread exploration of defense methods, including those targeting transferability, they show limitations: inefficient deployment, ineffective defense, and degraded performance on clean images. In this work, we introduce a novel training paradigm aimed at enhancing robustness against transferable adversarial examples (TAEs) in a more efficient and effective way. We propose a model that exhibits random guessing behavior when presented with clean data $\boldsymbol{x}$ as input, and generates accurate predictions when given triggered data $\boldsymbol{x}+\boldsymbol{\tau}$. Importantly, the trigger $\boldsymbol{\tau}$ remains constant for all data instances. We refer to these models as models with trigger activation. We are surprised to find that these models exhibit certain robustness against TAEs. Through the consideration of first-order gradients, we provide a theoretical analysis of this robustness. Moreover, through the joint optimization of the learnable trigger and the model, we achieve improved robustness to transferable attacks. Extensive experiments conducted across diverse datasets, evaluating a variety of attacking methods, underscore the effectiveness and superiority of our approach.
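The behavior described in the abstract above (random guessing on clean $\boldsymbol{x}$, accurate prediction on $\boldsymbol{x}+\boldsymbol{\tau}$) can be written as a two-term training objective. The sketch below is only one plausible form, not the paper's stated loss: the name `trigger_activation_loss`, the uniform-target cross-entropy used to encourage random guessing, the equal weighting of the two terms, and the toy `constant_model` are all assumptions.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Row-wise softmax with the usual max-subtraction for stability."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def trigger_activation_loss(logits_fn, x, y, tau, eps=1e-12):
    """Hypothetical objective: accurate on x + tau, near-uniform on clean x.

    logits_fn maps an (n, d) batch to (n, k) logits; tau has shape (d,) and
    is shared by every sample, matching the constant trigger in the abstract.
    """
    p_triggered = softmax(logits_fn(x + tau))
    p_clean = softmax(logits_fn(x))
    n = x.shape[0]
    # Standard cross-entropy on triggered inputs (rewards correct predictions).
    ce_triggered = -np.log(p_triggered[np.arange(n), y] + eps).mean()
    # Cross-entropy against a uniform target on clean inputs; it is smallest
    # (log k) when the clean prediction is exactly uniform.
    ce_uniform = -np.log(p_clean + eps).mean(axis=1).mean()
    return ce_triggered + ce_uniform


# Toy check: a model that ignores its input predicts uniformly everywhere.
def constant_model(batch: np.ndarray) -> np.ndarray:
    return np.zeros((batch.shape[0], 2))


x = np.array([[0.0, 1.0], [1.0, 0.0]])
y = np.array([0, 1])
tau = np.array([0.5, -0.5])
loss = trigger_activation_loss(constant_model, x, y, tau)
```

For the constant two-class model both terms equal $\log 2$, so the loss is $2\log 2$. A model that meets the stated goal would keep the clean term at $\log 2$ while driving the triggered term toward zero. In a joint-optimization setting, `tau` would be treated as a learnable parameter alongside the model weights.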

AdaMHF: Adaptive Multimodal Hierarchical Fusion for Survival Prediction

Mar 27, 2025

Abstract:The integration of pathologic images and genomic data for survival analysis has gained increasing attention with advances in multimodal learning. However, current methods often ignore biological characteristics, such as heterogeneity and sparsity, both within and across modalities, ultimately limiting their adaptability to clinical practice. To address these challenges, we propose AdaMHF: Adaptive Multimodal Hierarchical Fusion, a framework designed for efficient, comprehensive, and tailored feature extraction and fusion. AdaMHF is specifically adapted to the uniqueness of medical data, enabling accurate predictions with minimal resource consumption, even under challenging scenarios with missing modalities. Initially, AdaMHF employs an experts expansion and residual structure to activate specialized experts for extracting heterogeneous and sparse features. Extracted tokens undergo refinement via selection and aggregation, reducing the weight of non-dominant features while preserving comprehensive information. Subsequently, the encoded features are hierarchically fused, allowing multi-grained interactions across modalities to be captured. Furthermore, we introduce a survival prediction benchmark designed to resolve scenarios with missing modalities, mirroring real-world clinical conditions. Extensive experiments on TCGA datasets demonstrate that AdaMHF surpasses current state-of-the-art (SOTA) methods, showcasing exceptional performance in both complete and incomplete modality settings.

BIG-MoE: Bypass Isolated Gating MoE for Generalized Multimodal Face Anti-Spoofing

Dec 24, 2024

Abstract:In the domain of facial recognition security, multimodal Face Anti-Spoofing (FAS) is essential for countering presentation attacks. However, existing technologies encounter challenges due to modality biases and imbalances, as well as domain shifts. Our research introduces a Mixture of Experts (MoE) model to address these issues effectively. We identified three limitations in traditional MoE approaches to multimodal FAS: (1) Coarse-grained experts' inability to capture nuanced spoofing indicators; (2) Gated networks' susceptibility to input noise affecting decision-making; (3) MoE's sensitivity to prompt tokens leading to overfitting with conventional learning methods. To mitigate these, we propose the Bypass Isolated Gating MoE (BIG-MoE) framework, featuring: (1) Fine-grained experts for enhanced detection of subtle spoofing cues; (2) An isolation gating mechanism to counteract input noise; (3) A novel differential convolutional prompt bypass enriching the gating network with critical local features, thereby improving perceptual capabilities. Extensive experiments on four benchmark datasets demonstrate a significant improvement in generalization performance on the multimodal FAS task. The code is released at https://github.com/murInJ/BIG-MoE.

EPE-P: Evidence-based Parameter-efficient Prompting for Multimodal Learning with Missing Modalities

Dec 23, 2024

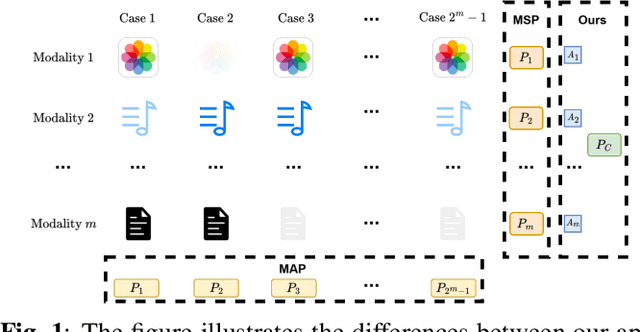

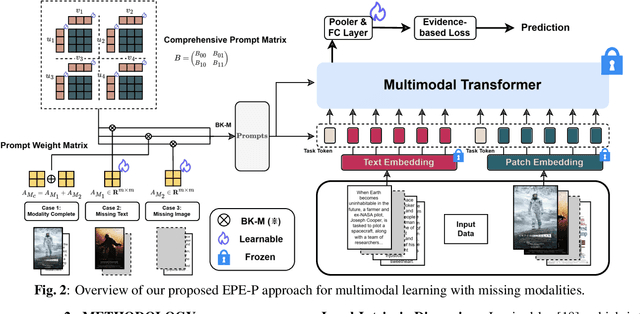

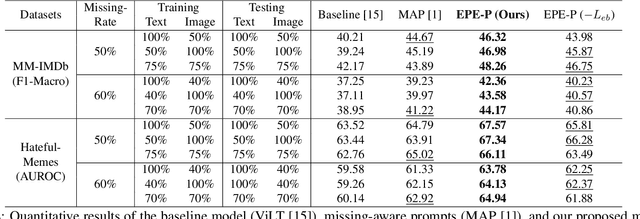

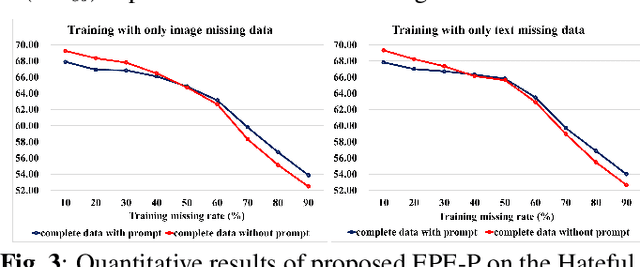

Abstract:Missing modalities are a common challenge in real-world multimodal learning scenarios, occurring during both training and testing. Existing methods for managing missing modalities often require the design of separate prompts for each modality or missing case, leading to complex designs and a substantial increase in the number of parameters to be learned. As the number of modalities grows, these methods become increasingly inefficient due to parameter redundancy. To address these issues, we propose Evidence-based Parameter-Efficient Prompting (EPE-P), a novel and parameter-efficient method for pretrained multimodal networks. Our approach introduces a streamlined design that integrates prompting information across different modalities, reducing complexity and mitigating redundant parameters. Furthermore, we propose an Evidence-based Loss function to better handle the uncertainty associated with missing modalities, improving the model's decision-making. Our experiments demonstrate that EPE-P outperforms existing prompting-based methods in terms of both effectiveness and efficiency. The code is released at https://github.com/Boris-Jobs/EPE-P_MLLMs-Robustness.
