Xing Lin

Super-resolution imaging using super-oscillatory diffractive neural networks

Jun 27, 2024

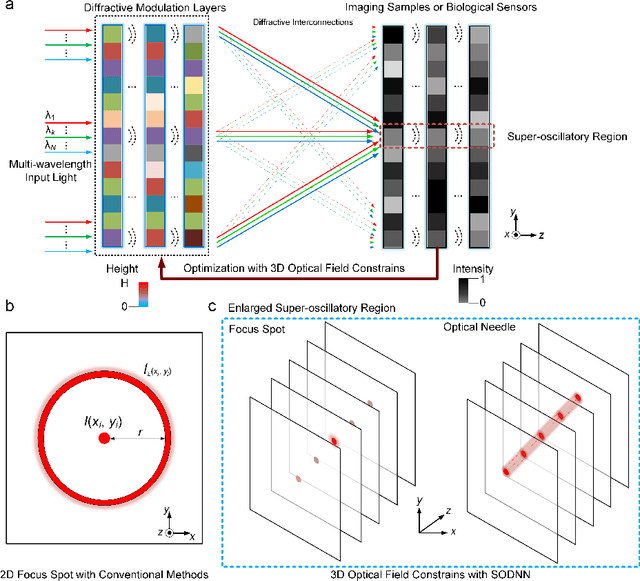

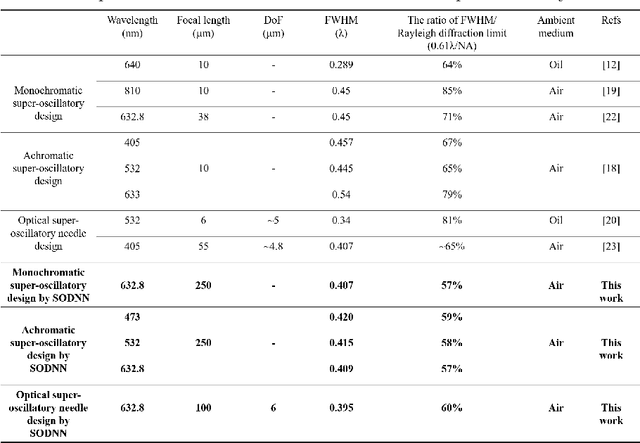

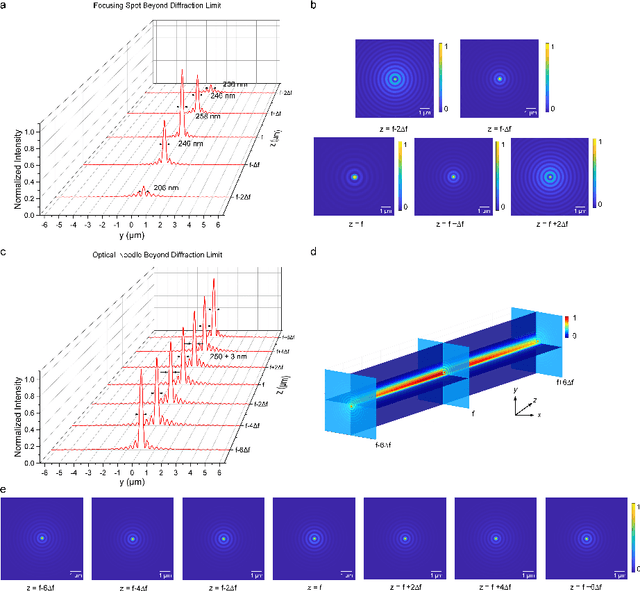

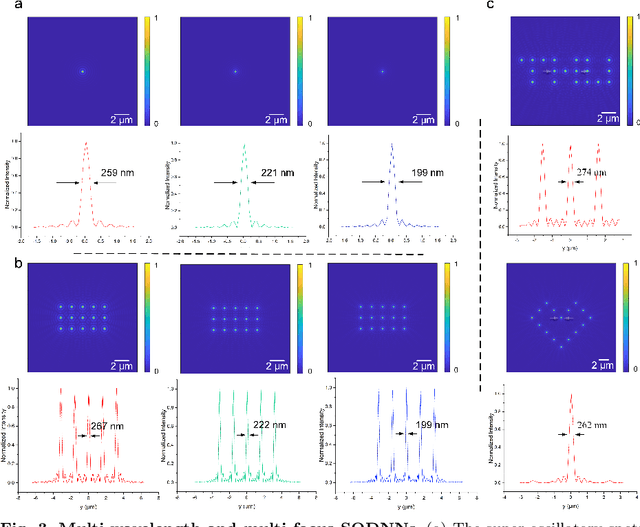

Abstract: Optical super-oscillation enables far-field super-resolution imaging beyond diffraction limits. However, the existing super-oscillatory lens for the spatial super-resolution imaging system still confronts critical limitations in performance due to the lack of a more advanced design method and the limited design degree of freedom. Here, we propose an optical super-oscillatory diffractive neural network, i.e., SODNN, that can achieve super-resolved spatial resolution for imaging beyond the diffraction limit with superior performance over existing methods. SODNN is constructed by utilizing diffractive layers to implement optical interconnections and imaging samples or biological sensors to implement nonlinearity, which modulates the incident optical field to create optical super-oscillation effects in 3D space and generate the super-resolved focal spots. By optimizing diffractive layers with 3D optical field constraints under an incident wavelength size of $\lambda$, we achieved a super-oscillatory spot with a full width at half maximum of 0.407$\lambda$ in the far field distance over 400$\lambda$ without side-lobes over the field of view, having a long depth of field over 10$\lambda$. Furthermore, the SODNN implements a multi-wavelength and multi-focus spot array that effectively avoids chromatic aberrations. Our research work will inspire the development of intelligent optical instruments to facilitate the applications of imaging, sensing, perception, etc.

Slimmed optical neural networks with multiplexed neuron sets and a corresponding backpropagation training algorithm

Aug 27, 2023

Abstract: Due to their intrinsic capability for parallel signal processing, optical neural networks (ONNs) have recently attracted extensive interest as a potential alternative to electronic artificial neural networks (ANNs) with reduced power consumption and low latency. Preliminary confirmation of the parallelism in optical computing has been widely done by applying the technology of wavelength division multiplexing (WDM) in the linear transformation part of neural networks. However, inter-channel crosstalk has prevented WDM technologies from being deployed in nonlinear activation in ONNs. Here, we propose a universal WDM structure called multiplexed neuron sets (MNS), which applies WDM technologies to optical neurons and enables ONNs to be further compressed. A corresponding back-propagation (BP) training algorithm is proposed to alleviate or even cancel the influence of inter-channel crosstalk on MNS-based WDM-ONNs. For simplicity, semiconductor optical amplifiers (SOAs) are employed as an example of MNS to construct a WDM-ONN trained with the new algorithm. The result shows that the combination of MNS and the corresponding BP training algorithm significantly downsizes the system and improves the energy efficiency by tens of times while giving similar performance to traditional ONNs.

Dual adaptive training of photonic neural networks

Dec 09, 2022

Abstract: The photonic neural network (PNN) is a remarkable analog artificial intelligence (AI) accelerator that computes with photons instead of electrons to feature low latency, high energy efficiency, and high parallelism. However, the existing training approaches cannot address the extensive accumulation of systematic errors in large-scale PNNs, resulting in a significant decrease in model performance in physical systems. Here, we propose dual adaptive training (DAT) that allows the PNN model to adapt to substantial systematic errors and preserves its performance during the deployment. By introducing the systematic error prediction networks with task-similarity joint optimization, DAT achieves a high-similarity mapping between the PNN numerical models and physical systems and highly accurate gradient calculations during the dual backpropagation training. We validated the effectiveness of DAT by using diffractive PNNs and interference-based PNNs on image classification tasks. DAT successfully trained large-scale PNNs under major systematic errors and preserved the model classification accuracies comparable to error-free systems. The results further demonstrated its superior performance over the state-of-the-art in situ training approaches. DAT provides critical support for constructing large-scale PNNs to achieve advanced architectures and can be generalized to other types of AI systems with analog computing errors.

Optical multi-task learning using multi-wavelength diffractive deep neural networks

Nov 30, 2022

Abstract: Photonic neural networks are a brain-inspired information processing technology that uses photons instead of electrons to perform artificial intelligence (AI) tasks. However, existing architectures are designed for a single task but fail to multiplex different tasks in parallel within a single monolithic system due to the task competition that deteriorates the model performance. This paper proposes a novel optical multi-task learning system by designing multi-wavelength diffractive deep neural networks (D2NNs) with the joint optimization method. By encoding multi-task inputs into multi-wavelength channels, the system can increase the computing throughput and significantly alleviate the competition to perform multiple tasks in parallel with high accuracy. We design the two-task and four-task D2NNs with two and four spectral channels, respectively, for classifying different inputs from MNIST, FMNIST, KMNIST, and EMNIST databases. The numerical evaluations demonstrate that, under the same network size, multi-wavelength D2NNs achieve significantly higher classification accuracies for multi-task learning than single-wavelength D2NNs. Furthermore, by increasing the network size, the multi-wavelength D2NNs for simultaneously performing multiple tasks achieve comparable classification accuracies with respect to the individual training of multiple single-wavelength D2NNs to perform tasks separately. Our work paves the way for developing the wavelength-division multiplexing technology to achieve high-throughput neuromorphic photonic computing and more general AI systems to perform multiple tasks in parallel.

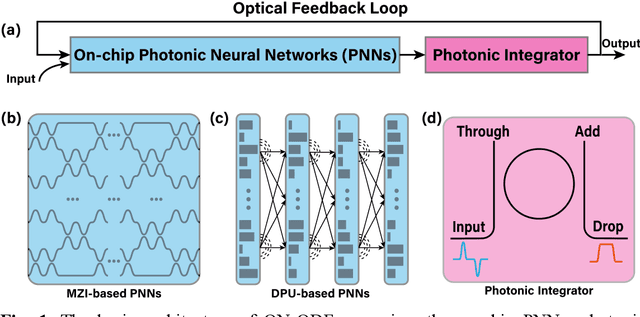

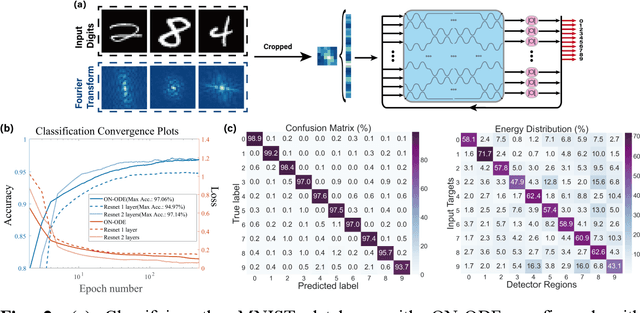

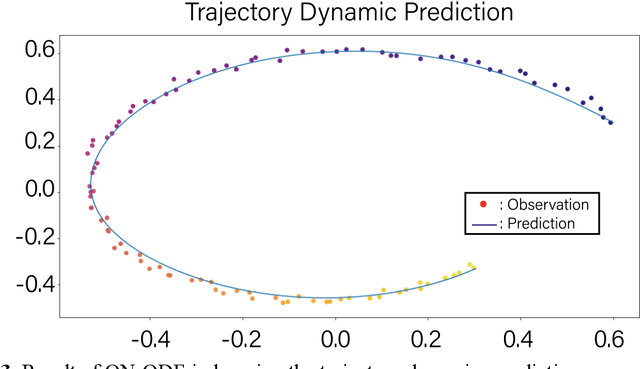

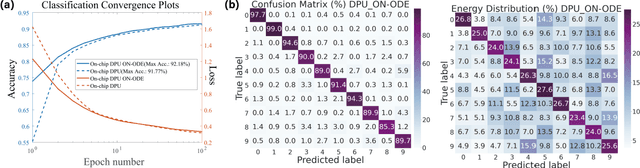

Optical Neural Ordinary Differential Equations

Sep 26, 2022

Abstract: Increasing the layer number of on-chip photonic neural networks (PNNs) is essential to improve their model performance. However, successively cascading network hidden layers results in larger integrated photonic chip areas. To address this issue, we propose the optical neural ordinary differential equations (ON-ODE) architecture that parameterizes the continuous dynamics of hidden layers with optical ODE solvers. The ON-ODE comprises the PNNs followed by the photonic integrator and optical feedback loop, which can be configured to represent residual neural networks (ResNet) and recurrent neural networks with effectively reduced chip area occupancy. For the interference-based optoelectronic nonlinear hidden layer, the numerical experiments demonstrate that the single hidden layer ON-ODE can achieve approximately the same accuracy as the two-layer optical ResNet in image classification tasks. Besides, the ON-ODE improves the model classification accuracy for the diffraction-based all-optical linear hidden layer. The time-dependent dynamics property of ON-ODE is further applied for trajectory prediction with high accuracy.
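The link between an ODE solver with feedback and a ResNet, mentioned in the abstract above, can be illustrated with a purely numerical sketch. It is not the authors' optical implementation: the `layer` function (a linear map followed by `tanh`), the forward-Euler scheme, and all names are assumptions chosen for illustration. The sketch shows that one Euler step of size 1 through a single reused layer equals one residual block, so repeated feedback through the same layer stands in for a stack of weight-sharing residual blocks.

```python
import math


def layer(h, weights):
    """Hypothetical hidden layer f(h): a linear map followed by tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, h))) for row in weights]


def euler_integrate(h0, weights, steps, dt):
    """Forward-Euler integration of dh/dt = f(h).

    The same layer is reused at every step, which mimics a feedback loop
    around a single physical layer (an assumption of this sketch).
    """
    h = list(h0)
    for _ in range(steps):
        f = layer(h, weights)
        h = [x + dt * fx for x, fx in zip(h, f)]
    return h


def residual_block(h, weights):
    """One ResNet-style block: output = input + f(input)."""
    f = layer(h, weights)
    return [x + fx for x, fx in zip(h, f)]
```

With `dt = 1.0`, `euler_integrate(h0, W, steps=k, dt=1.0)` matches `k` stacked residual blocks that share the weights `W`; smaller `dt` with more steps approximates the continuous dynamics more finely.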

All-optical graph representation learning using integrated diffractive photonic computing units

Apr 23, 2022

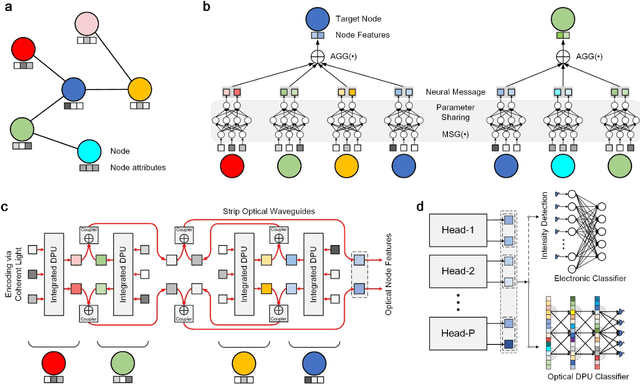

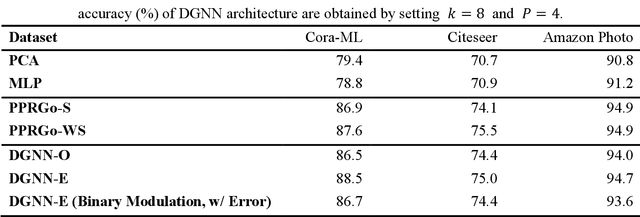

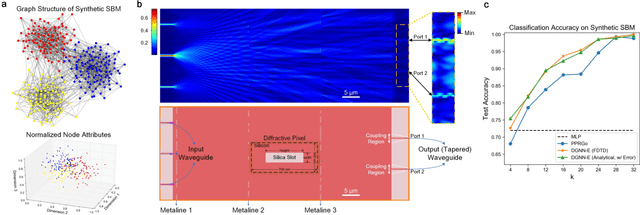

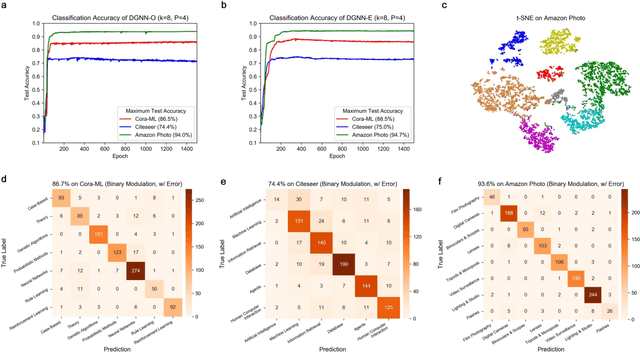

Abstract: Photonic neural networks perform brain-inspired computations using photons instead of electrons that can achieve substantially improved computing performance. However, existing architectures can only handle data with regular structures, e.g., images or videos, but fail to generalize to graph-structured data beyond Euclidean space, e.g., social networks or document co-citation networks. Here, we propose an all-optical graph representation learning architecture, termed diffractive graph neural network (DGNN), based on the integrated diffractive photonic computing units (DPUs) to address this limitation. Specifically, DGNN optically encodes node attributes into strip optical waveguides, which are transformed by DPUs and aggregated by on-chip optical couplers to extract their feature representations. Each DPU comprises successive passive layers of metalines to modulate the electromagnetic optical field via diffraction, where the metaline structures are learnable parameters shared across graph nodes. DGNN captures complex dependencies among the node neighborhoods and eliminates the nonlinear transition functions during the light-speed optical message passing over graph structures. We demonstrate the use of DGNN extracted features for node and graph-level classification tasks with benchmark databases and achieve superior performance. Our work opens up a new direction for designing application-specific integrated photonic circuits for high-efficiency processing of large-scale graph data structures using deep learning.

Large-scale neuromorphic optoelectronic computing with a reconfigurable diffractive processing unit

Aug 26, 2020

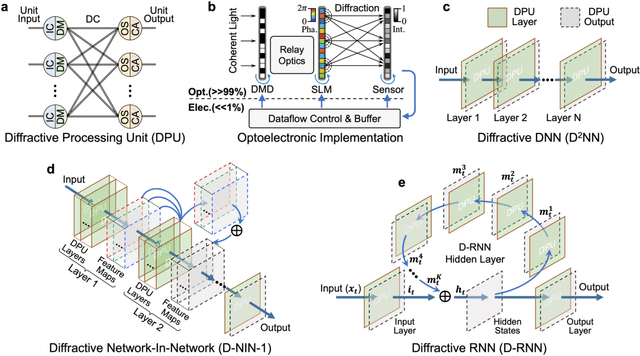

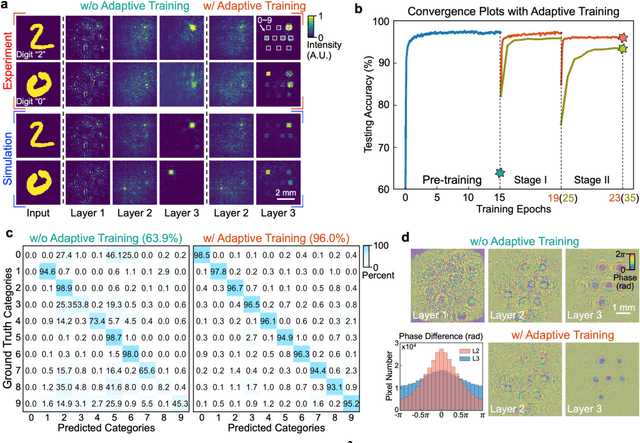

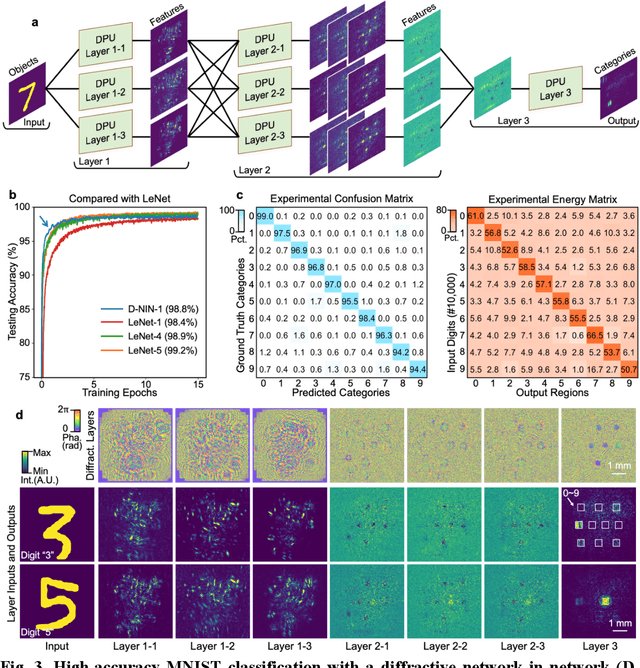

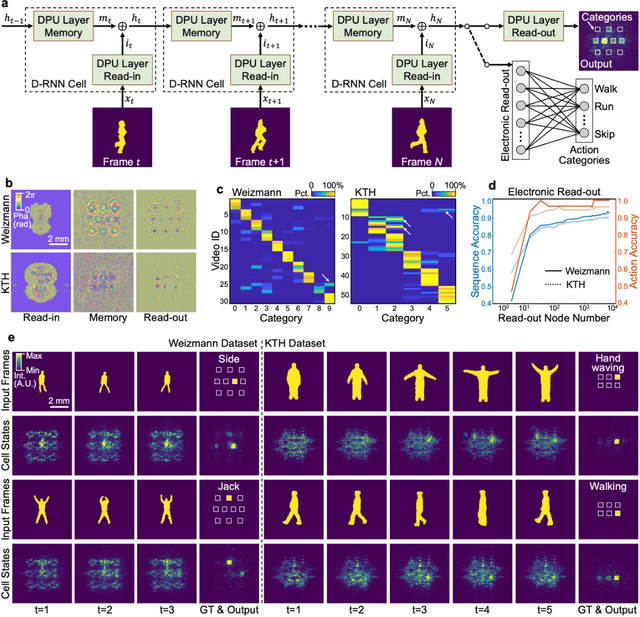

Abstract: Application-specific optical processors have been considered disruptive technologies for modern computing that can fundamentally accelerate the development of artificial intelligence (AI) by offering substantially improved computing performance. Recent advancements in optical neural network architectures for neural information processing have been applied to perform various machine learning tasks. However, the existing architectures have limited complexity and performance; and each of them requires its own dedicated design that cannot be reconfigured to switch between different neural network models for different applications after deployment. Here, we propose an optoelectronic reconfigurable computing paradigm by constructing a diffractive processing unit (DPU) that can efficiently support different neural networks and achieve a high model complexity with millions of neurons. It allocates almost all of its computational operations optically and achieves extremely high speed of data modulation and large-scale network parameter updating by dynamically programming optical modulators and photodetectors. We demonstrated the reconfiguration of the DPU to implement various diffractive feedforward and recurrent neural networks and developed a novel adaptive training approach to circumvent the system imperfections. We applied the trained networks for high-speed classifying of handwritten digit images and human action videos over benchmark datasets, and the experimental results revealed a comparable classification accuracy to the electronic computing approaches. Furthermore, our prototype system built with off-the-shelf optoelectronic components surpasses the performance of state-of-the-art graphics processing units (GPUs) by several times on computing speed and more than an order of magnitude on system energy efficiency.

Response to Comment on "All-optical machine learning using diffractive deep neural networks"

Oct 10, 2018

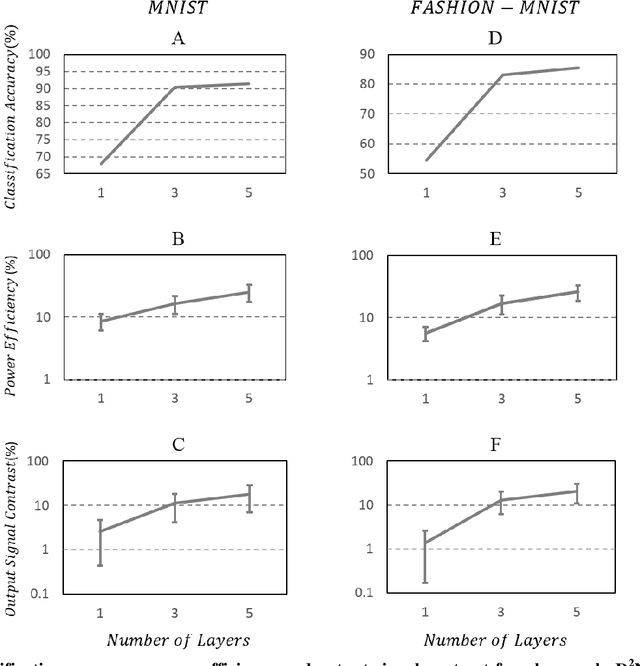

Abstract: In their Comment, Wei et al. (arXiv:1809.08360v1 [cs.LG]) claim that our original interpretation of Diffractive Deep Neural Networks (D2NN) represents a mischaracterization of the system due to linearity and passivity. In this Response, we detail how this mischaracterization claim is unwarranted and oblivious to several sections detailed in our original manuscript (Science, DOI: 10.1126/science.aat8084) that specifically introduced and discussed optical nonlinearities and reconfigurability of D2NNs, as part of our proposed framework to enhance its performance. To further refute the mischaracterization claim of Wei et al., we, once again, demonstrate the depth feature of optical D2NNs by showing that multiple diffractive layers operating collectively within a D2NN present additional degrees-of-freedom compared to a single diffractive layer to achieve better classification accuracy, as well as improved output signal contrast and diffraction efficiency as the number of diffractive layers increases, showing the deepness of a D2NN, and its inherent depth advantage for improved performance. In summary, the Comment by Wei et al. does not provide an amendment to the original teachings of our original manuscript, and all of our results, core conclusions and methodology of research reported in Science (DOI: 10.1126/science.aat8084) remain entirely valid.

All-Optical Machine Learning Using Diffractive Deep Neural Networks

Jul 26, 2018

Abstract: We introduce an all-optical Diffractive Deep Neural Network (D2NN) architecture that can learn to implement various functions after deep learning-based design of passive diffractive layers that work collectively. We experimentally demonstrated the success of this framework by creating 3D-printed D2NNs that learned to implement handwritten digit classification and the function of an imaging lens at terahertz spectrum. With the existing plethora of 3D-printing and other lithographic fabrication methods as well as spatial-light-modulators, this all-optical deep learning framework can perform, at the speed of light, various complex functions that computer-based neural networks can implement, and will find applications in all-optical image analysis, feature detection and object classification, also enabling new camera designs and optical components that can learn to perform unique tasks using D2NNs.
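As a rough illustration of how passive diffractive layers can act collectively, the sketch below models a forward pass as alternating free-space propagation and phase-only modulation. It is a minimal numerical sketch under stated assumptions, not the code or the exact physical model of the paper: the angular spectrum method, the phase-only masks, the square sampling grid, and the names `angular_spectrum` and `d2nn_forward` are all choices made here for illustration. In a real design the phase masks would be the trainable parameters.

```python
import numpy as np


def angular_spectrum(field, wavelength, pitch, distance):
    """Propagate a complex field over `distance` in free space.

    Uses the angular spectrum method on a square grid with sample
    spacing `pitch`. Evanescent components are dropped (set to zero).
    """
    n = field.shape[0]  # assumes a square n x n grid
    fx = np.fft.fftfreq(n, d=pitch)
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def d2nn_forward(field, phase_masks, wavelength, pitch, distance):
    """Pass a field through phase-only layers and return output intensity.

    Each layer is reached by free-space propagation and then multiplies
    the field by exp(i * phase). A final propagation step carries the
    field to the detector plane.
    """
    for phase in phase_masks:
        field = angular_spectrum(field, wavelength, pitch, distance)
        field = field * np.exp(1j * phase)
    field = angular_spectrum(field, wavelength, pitch, distance)
    return np.abs(field) ** 2
```

A call such as `d2nn_forward(field, masks, wavelength=0.75, pitch=0.6, distance=30.0)` uses illustrative values in arbitrary length units; they are not parameters reported in the abstract.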

Extended depth-of-field in holographic image reconstruction using deep learning based auto-focusing and phase-recovery

Mar 21, 2018

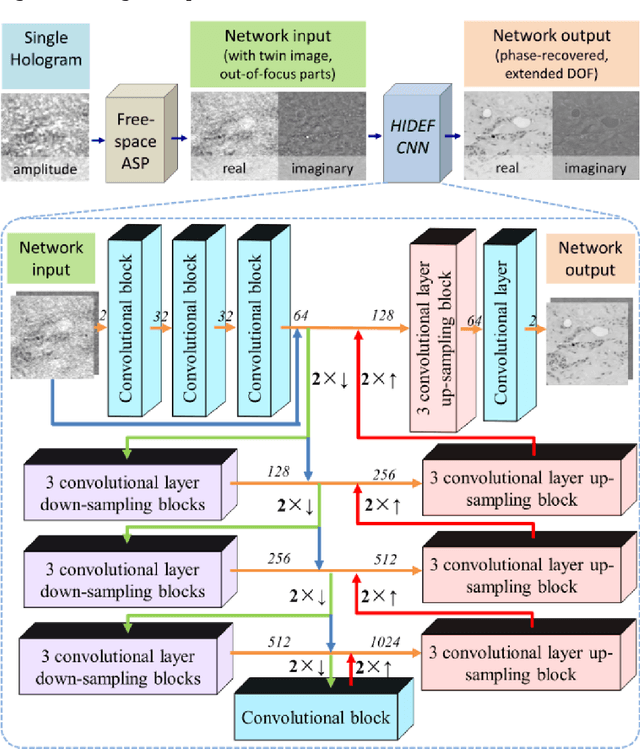

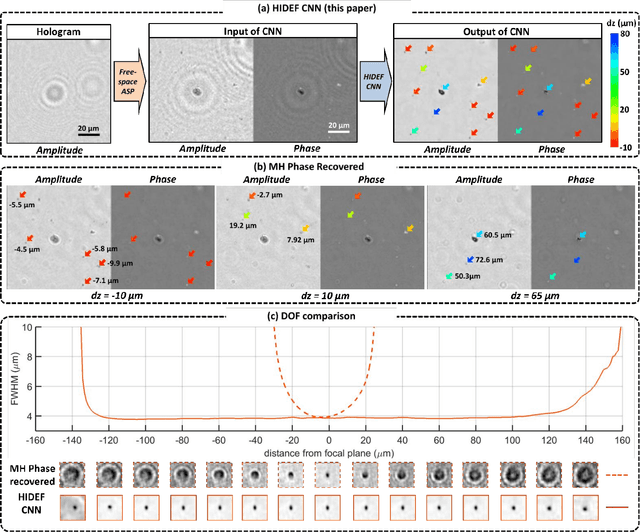

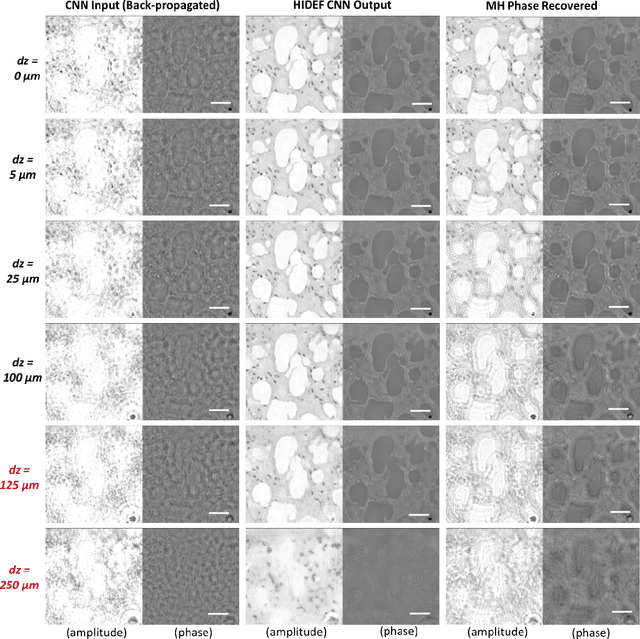

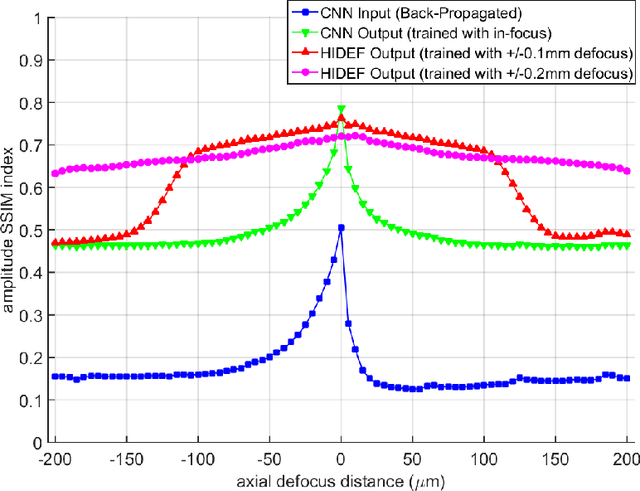

Abstract: Holography encodes the three dimensional (3D) information of a sample in the form of an intensity-only recording. However, to decode the original sample image from its hologram(s), auto-focusing and phase-recovery are needed, which are in general cumbersome and time-consuming to digitally perform. Here we demonstrate a convolutional neural network (CNN) based approach that simultaneously performs auto-focusing and phase-recovery to significantly extend the depth-of-field (DOF) in holographic image reconstruction. For this, a CNN is trained by using pairs of randomly de-focused back-propagated holograms and their corresponding in-focus phase-recovered images. After this training phase, the CNN takes a single back-propagated hologram of a 3D sample as input to rapidly achieve phase-recovery and reconstruct an in-focus image of the sample over a significantly extended DOF. This deep learning based DOF extension method is non-iterative, and significantly improves the algorithm time-complexity of holographic image reconstruction from O(nm) to O(1), where n refers to the number of individual object points or particles within the sample volume, and m represents the focusing search space within which each object point or particle needs to be individually focused. These results highlight some of the unique opportunities created by data-enabled statistical image reconstruction methods powered by machine learning, and we believe that the presented approach can be broadly applicable to computationally extend the DOF of other imaging modalities.
