All-optical graph representation learning using integrated diffractive photonic computing units


Apr 23, 2022

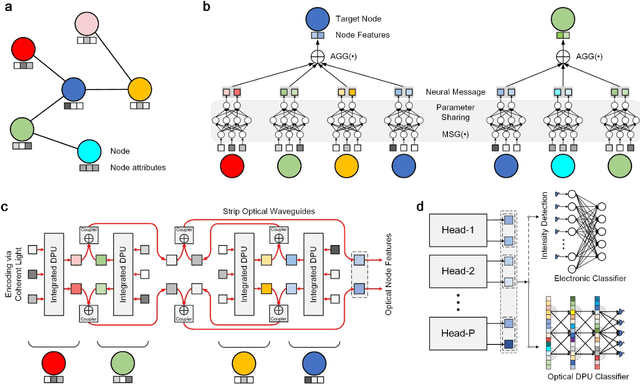

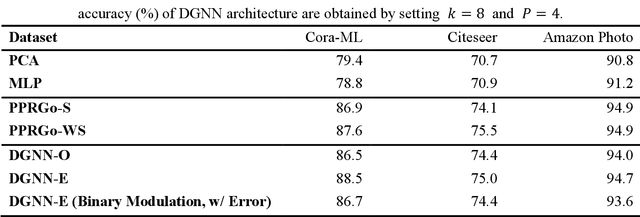

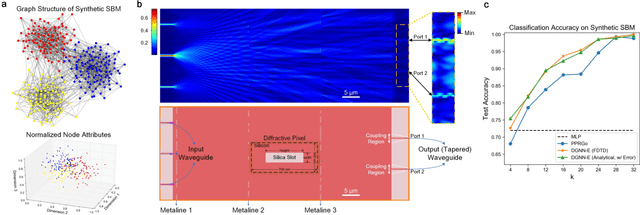

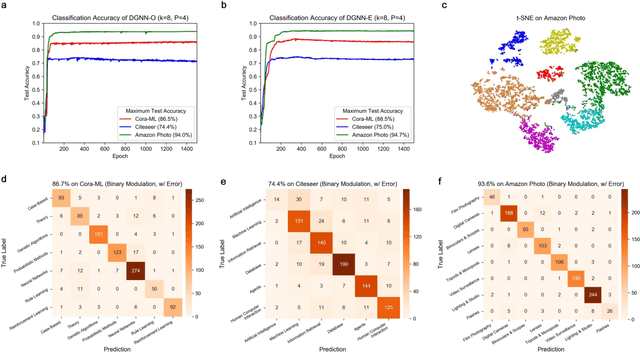

Photonic neural networks perform brain-inspired computations using photons instead of electrons, which can substantially improve computing performance. However, existing architectures can only handle data with regular structures, e.g., images or videos, and fail to generalize to graph-structured data beyond Euclidean space, e.g., social networks or document co-citation networks. Here, we propose an all-optical graph representation learning architecture, termed the diffractive graph neural network (DGNN), based on integrated diffractive photonic computing units (DPUs), to address this limitation. Specifically, DGNN optically encodes node attributes into strip optical waveguides; these signals are transformed by DPUs and aggregated by on-chip optical couplers to extract the nodes' feature representations. Each DPU comprises successive passive layers of metalines that modulate the electromagnetic optical field via diffraction, and the metaline structures are learnable parameters shared across graph nodes. DGNN captures complex dependencies among node neighborhoods and eliminates nonlinear transition functions during light-speed optical message passing over graph structures. We demonstrate the use of DGNN-extracted features for node-level and graph-level classification tasks on benchmark databases and achieve superior performance. Our work opens up a new direction for designing application-specific integrated photonic circuits for high-efficiency processing of large-scale graph data structures using deep learning.
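The message-passing scheme described in the abstract (a transform shared across nodes, aggregation over neighbors, and no nonlinearity between hops) can be illustrated with a purely numerical sketch. This is not the authors' optical model: the function name `dgnn_like_propagate`, the complex matrix standing in for a DPU, the row-normalized adjacency with self-loops, and the final intensity readout are all assumptions made for illustration.

```python
import numpy as np

def dgnn_like_propagate(adj, features, dpu_matrix, hops=2):
    """Linear message passing: shared transform, then neighbor aggregation.

    adj        : (n, n) adjacency matrix of the graph
    features   : (n, d) node attributes (stand-in for encoded optical fields)
    dpu_matrix : (d, d) complex matrix, a hypothetical stand-in for one DPU
    """
    n = adj.shape[0]
    a_hat = adj + np.eye(n)                            # self-loops (assumption)
    a_hat = a_hat / a_hat.sum(axis=1, keepdims=True)   # row-normalize (assumption)
    field = features.astype(complex)
    for _ in range(hops):
        # The same matrix is applied to every node (shared parameters),
        # then signals from neighboring nodes are summed (coupler analogue).
        # No nonlinear function is applied between hops.
        field = a_hat @ (field @ dpu_matrix)
    return np.abs(field) ** 2                          # intensity readout (assumption)

# Toy example: a 3-node path graph with 4-dimensional node attributes.
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
features = rng.random((3, 4))
dpu = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
out = dgnn_like_propagate(adj, features, dpu, hops=2)
```

Because every step before the readout is linear, the two hops collapse into a single matrix product, which is the property that lets such a scheme drop the per-layer nonlinear transition functions of a conventional graph neural network.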
