Muhammed Veli

Classification and reconstruction of spatially overlapping phase images using diffractive optical networks

Aug 18, 2021

Abstract: Diffractive optical networks unify wave optics and deep learning to all-optically compute a given machine learning or computational imaging task as the light propagates from the input to the output plane. Here, we report the design of diffractive optical networks for the classification and reconstruction of spatially overlapping, phase-encoded objects. When two different phase-only objects spatially overlap, the individual object functions are perturbed since their phase patterns are summed up. The retrieval of the underlying phase images from solely the overlapping phase distribution presents a challenging problem, the solution of which is generally not unique. We show that through a task-specific training process, passive diffractive networks composed of successive transmissive layers can all-optically and simultaneously classify two different randomly-selected, spatially overlapping phase images at the input. After being trained with ~550 million unique combinations of phase-encoded handwritten digits from the MNIST dataset, the diffractive network achieves a blind testing accuracy of >85.8% for all-optical classification of two overlapping phase images of new handwritten digits. In addition to all-optical classification of overlapping phase objects, we also demonstrate the reconstruction of these phase images based on a shallow electronic neural network that uses the highly compressed output of the diffractive network as its input (with e.g., ~20-65 times fewer pixels) to rapidly reconstruct both of the phase images, despite their spatial overlap and related phase ambiguity. The presented phase image classification and reconstruction framework might find applications in e.g., computational imaging, microscopy and quantitative phase imaging fields.
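The non-uniqueness mentioned in the abstract can be illustrated with a minimal NumPy sketch. It assumes two thin, stacked phase-only objects under unit-amplitude illumination, so that their transmission functions multiply; the function name `overlap_phase_objects`, the 28x28 grid and the random phase maps are illustrative choices, not taken from the paper.

```python
import numpy as np

def overlap_phase_objects(phi_a, phi_b):
    """Field behind two thin, stacked phase-only objects (assumed model):
    the transmission functions multiply, so the phases add."""
    return np.exp(1j * phi_a) * np.exp(1j * phi_b)

rng = np.random.default_rng(0)
# Hypothetical phase maps standing in for two phase-encoded digits.
phi_a = rng.uniform(0.0, np.pi, size=(28, 28))
phi_b = rng.uniform(0.0, np.pi, size=(28, 28))

field = overlap_phase_objects(phi_a, phi_b)

# The overlapped field only carries the summed phase.
phases_add = np.allclose(field, np.exp(1j * (phi_a + phi_b)))

# Shifting phase from one object to the other leaves the overlapped
# field unchanged, so the pair (phi_a, phi_b) cannot be recovered
# from the sum alone without prior knowledge of the objects.
delta = rng.uniform(-0.5, 0.5, size=(28, 28))
field_alt = overlap_phase_objects(phi_a + delta, phi_b - delta)
ambiguous = np.allclose(field, field_alt)
```

Under this model, any decomposition of the summed phase is consistent with the measurement, which is why a trained, data-driven prior is needed to separate the two images.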

Terahertz Pulse Shaping Using Diffractive Legos

Jun 30, 2020

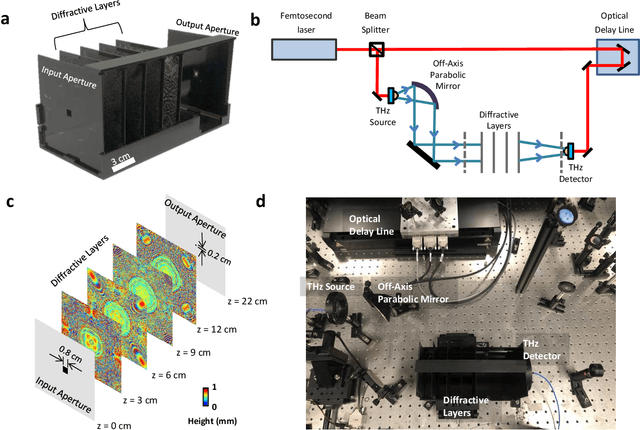

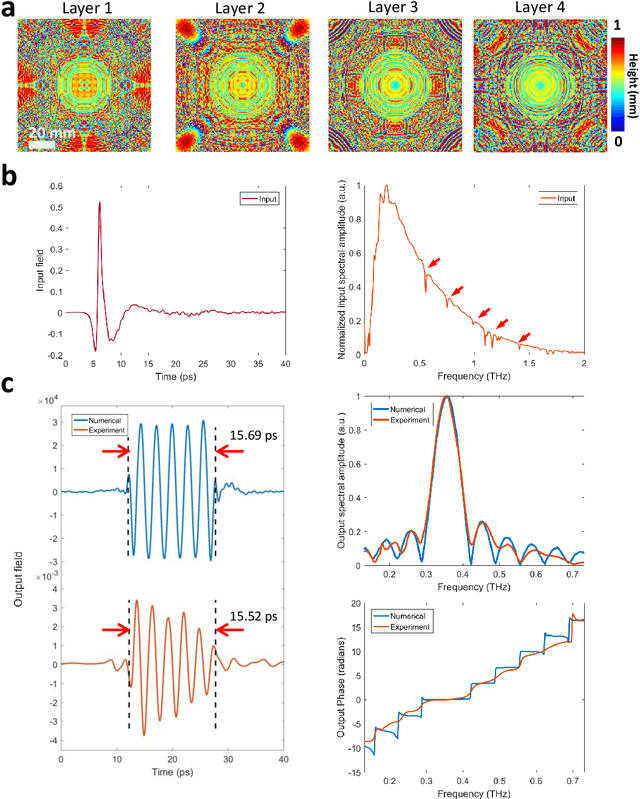

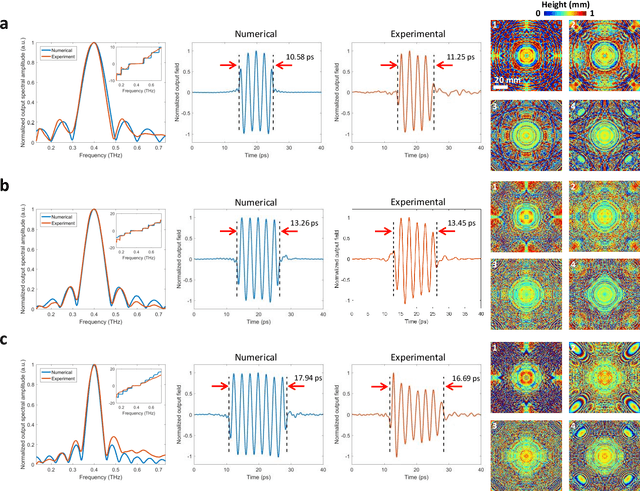

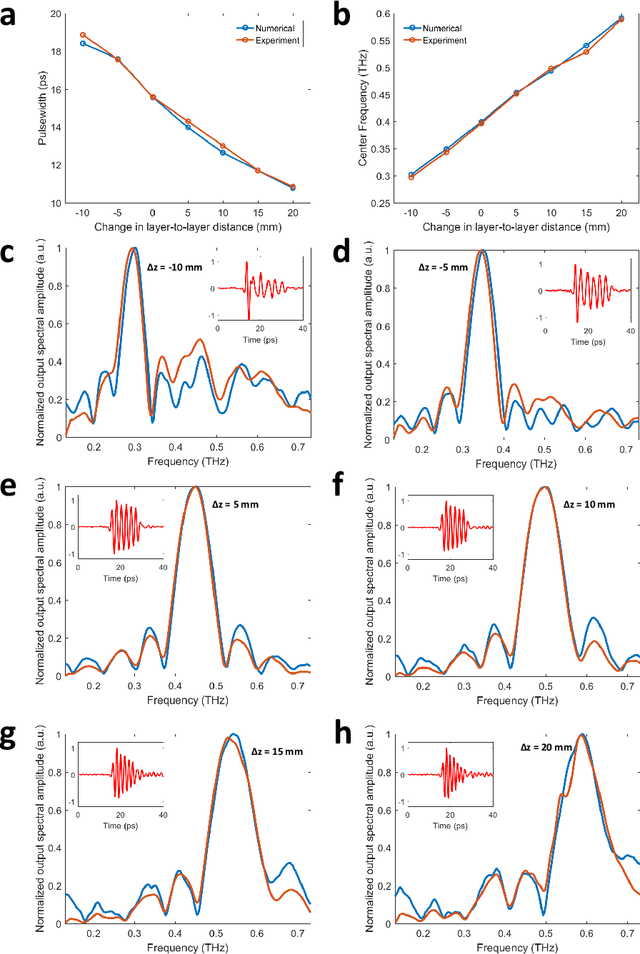

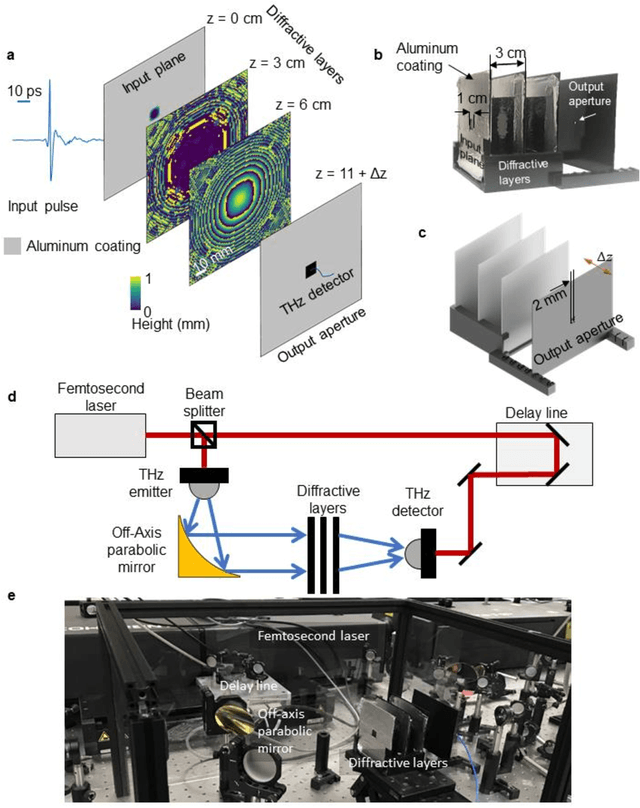

Abstract: Recent advances in deep learning have been providing non-intuitive solutions to various inverse problems in optics. At the intersection of machine learning and optics, diffractive networks merge wave-optics with deep learning to design task-specific elements to all-optically perform various tasks such as object classification and machine vision. Here, we present a diffractive network, which is used to shape an arbitrary broadband pulse into a desired optical waveform, forming a compact pulse engineering system. We experimentally demonstrate the synthesis of square pulses with different temporal widths by manufacturing passive diffractive layers that collectively control both the spectral amplitude and the phase of an input terahertz pulse. Our results constitute the first demonstration of direct pulse shaping in the terahertz spectrum, where a complex-valued spectral modulation function directly acts on terahertz frequencies. Furthermore, a Lego-like physical transfer learning approach is presented to illustrate pulse-width tunability by replacing part of an existing network with newly trained diffractive layers, demonstrating its modularity. This learning-based diffractive pulse engineering framework can find broad applications in e.g., communications, ultra-fast imaging and spectroscopy.

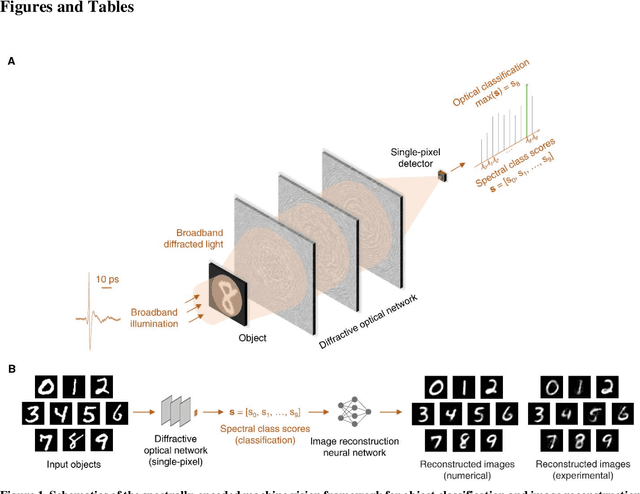

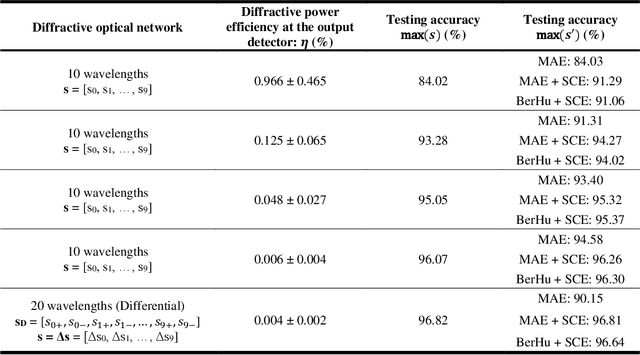

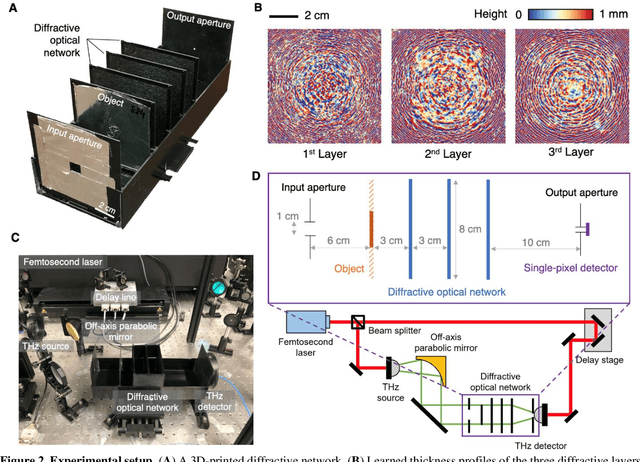

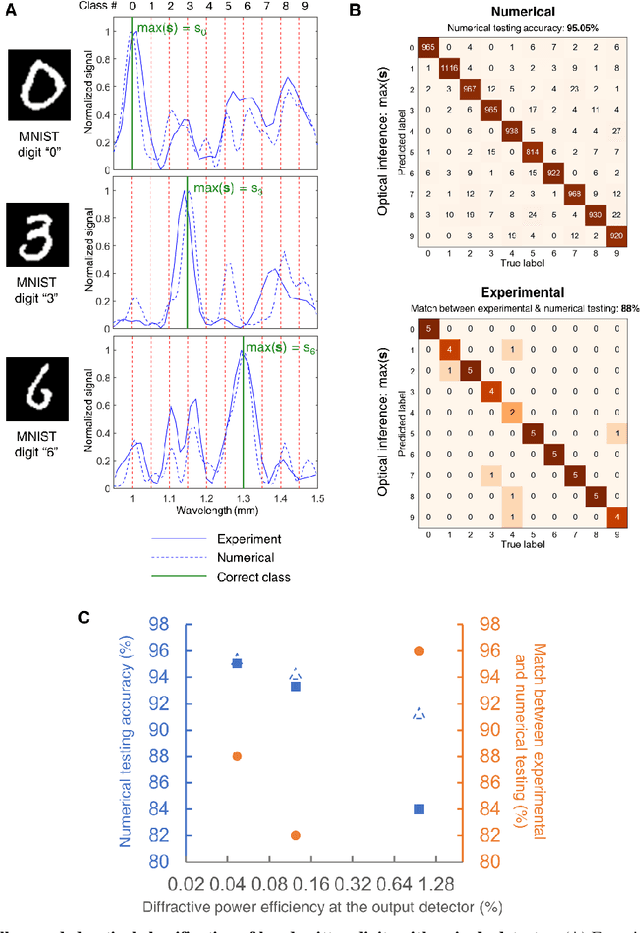

Machine Vision using Diffractive Spectral Encoding

May 15, 2020

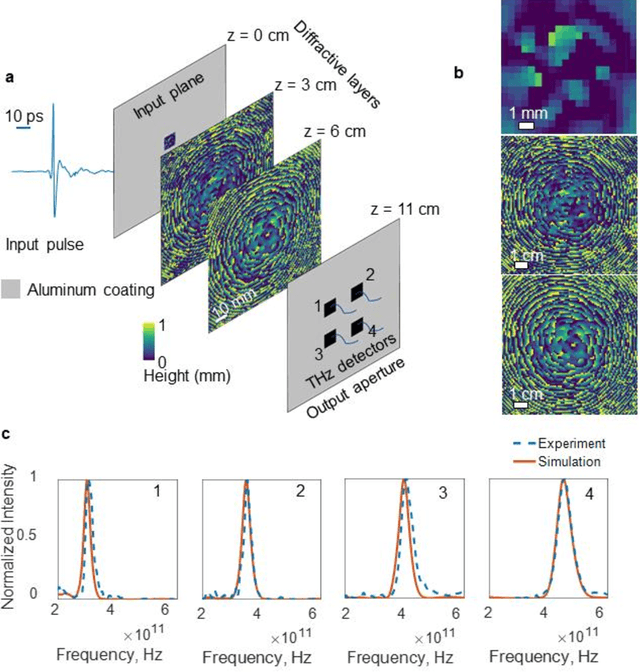

Abstract: Machine vision systems mostly rely on lens-based optical imaging architectures that relay the spatial information of objects onto high pixel-count opto-electronic sensor arrays, followed by digital processing of this information. Here, we demonstrate an optical machine vision system that uses trainable matter in the form of diffractive layers to transform and encode the spatial information of objects into the power spectrum of the diffracted light, which is used to perform optical classification of objects with a single-pixel spectroscopic detector. Using a time-domain spectroscopy setup with a plasmonic nanoantenna-based detector, we experimentally validated this framework in the terahertz spectrum to optically classify the images of handwritten digits by detecting the spectral power of the diffracted light at ten distinct wavelengths, each representing one class/digit. We also report the coupling of this spectral encoding achieved through a diffractive optical network with a shallow electronic neural network, separately trained to reconstruct the images of handwritten digits based solely on the spectral information encoded in these ten distinct wavelengths within the diffracted light. These reconstructed images demonstrate task-specific image decompression and can also be cycled back as new inputs to the same diffractive network to improve its optical object classification. This unique framework merges the power of deep learning with the spatial and spectral processing capabilities of trainable matter, and can also be extended to other spectral-domain measurement systems to enable new 3D imaging and sensing modalities integrated with spectrally encoded classification tasks performed through diffractive networks.
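The read-out described above, where each of ten wavelengths represents one digit, can be sketched as follows. The abstract does not spell out the decision rule; picking the wavelength with the highest detected power is an assumption here, as are the function name `classify_from_spectrum` and the convention that wavelength index k corresponds to digit k.

```python
import numpy as np

def classify_from_spectrum(power_at_wavelengths):
    """Return the predicted digit from the spectral power measured at ten
    class-assigned wavelengths (assumed rule: strongest wavelength wins)."""
    power = np.asarray(power_at_wavelengths, dtype=float)
    if power.shape != (10,):
        raise ValueError("expected power readings at exactly ten wavelengths")
    # Assumed mapping: index k in the reading corresponds to digit k.
    return int(np.argmax(power))

# Hypothetical single-pixel detector reading, dominated by the
# wavelength assigned to digit 3.
reading = [0.1, 0.1, 0.1, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1]
predicted_digit = classify_from_spectrum(reading)
```

With this kind of read-out, the detector needs no spatial resolution: the ten-element power vector is the entire classification output, and it is also the only input available to the reconstruction network mentioned in the abstract.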

Design of Task-Specific Optical Systems Using Broadband Diffractive Neural Networks

Sep 14, 2019

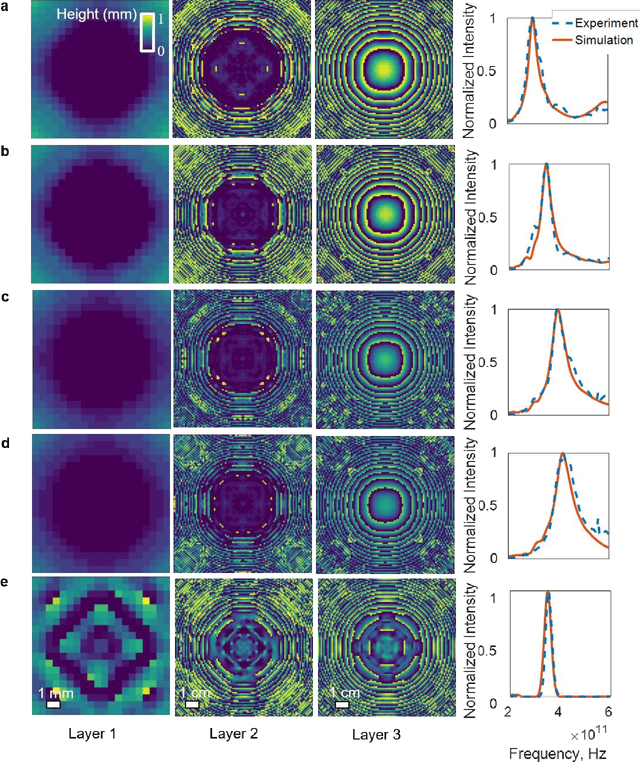

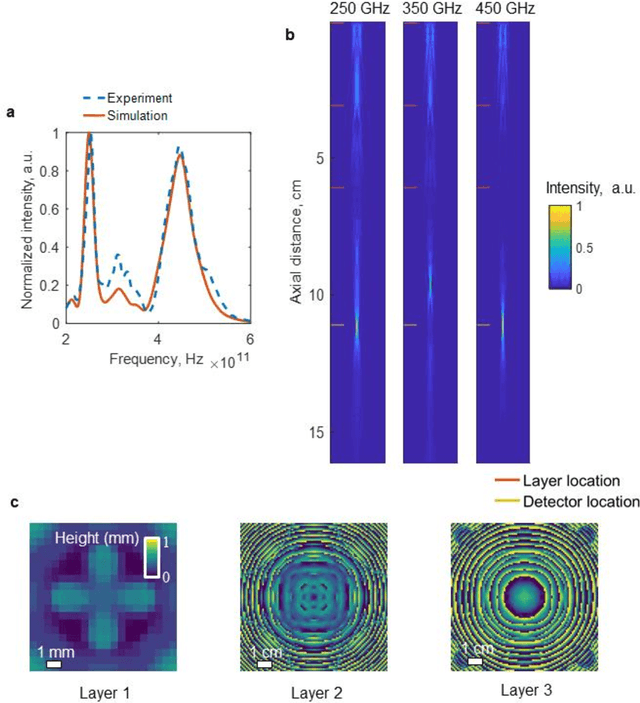

Abstract: We report a broadband diffractive optical neural network design that simultaneously processes a continuum of wavelengths generated by a temporally-incoherent broadband source to all-optically perform a specific task learned using deep learning. We experimentally validated the success of this broadband diffractive neural network architecture by designing, fabricating and testing seven different multi-layer, diffractive optical systems that transform the optical wavefront generated by a broadband THz pulse to realize (1) a series of tunable, single passband as well as dual passband spectral filters, and (2) spatially-controlled wavelength de-multiplexing. Merging the native or engineered dispersion of various material systems with a deep learning-based design strategy, broadband diffractive neural networks help us engineer light-matter interaction in 3D, diverging from intuitive and analytical design methods to create task-specific optical components that can all-optically perform deterministic tasks or statistical inference for optical machine learning.

Response to Comment on "All-optical machine learning using diffractive deep neural networks"

Oct 10, 2018

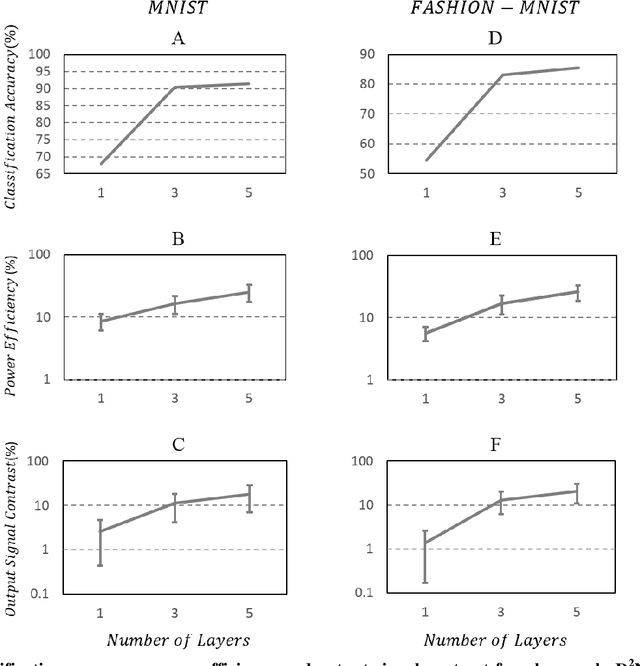

Abstract: In their Comment, Wei et al. (arXiv:1809.08360v1 [cs.LG]) claim that our original interpretation of Diffractive Deep Neural Networks (D2NN) represents a mischaracterization of the system due to linearity and passivity. In this Response, we detail how this mischaracterization claim is unwarranted and oblivious to several sections detailed in our original manuscript (Science, DOI: 10.1126/science.aat8084) that specifically introduced and discussed optical nonlinearities and reconfigurability of D2NNs, as part of our proposed framework to enhance their performance. To further refute the mischaracterization claim of Wei et al., we, once again, demonstrate the depth feature of optical D2NNs by showing that multiple diffractive layers operating collectively within a D2NN present additional degrees-of-freedom compared to a single diffractive layer to achieve better classification accuracy, as well as improved output signal contrast and diffraction efficiency as the number of diffractive layers increases, showing the deepness of a D2NN, and its inherent depth advantage for improved performance. In summary, the Comment by Wei et al. does not provide an amendment to the original teachings of our original manuscript, and all of our results, core conclusions and methodology of research reported in Science (DOI: 10.1126/science.aat8084) remain entirely valid.

All-Optical Machine Learning Using Diffractive Deep Neural Networks

Jul 26, 2018

Abstract: We introduce an all-optical Diffractive Deep Neural Network (D2NN) architecture that can learn to implement various functions after deep learning-based design of passive diffractive layers that work collectively. We experimentally demonstrated the success of this framework by creating 3D-printed D2NNs that learned to implement handwritten digit classification and the function of an imaging lens in the terahertz spectrum. With the existing plethora of 3D-printing and other lithographic fabrication methods as well as spatial-light-modulators, this all-optical deep learning framework can perform, at the speed of light, various complex functions that computer-based neural networks can implement, and will find applications in all-optical image analysis, feature detection and object classification, also enabling new camera designs and optical components that can learn to perform unique tasks using D2NNs.
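As a rough illustration of how passive diffractive layers can act collectively, the sketch below alternates free-space propagation with phase-only modulation. It uses the textbook angular spectrum method as the propagation model, which is an assumption and not necessarily the forward model used by the authors; the function names, the random (untrained) phase masks and all numerical values (wavelength, pixel pitch and layer spacing in mm) are hypothetical.

```python
import numpy as np

def propagate(field, wavelength, dx, z):
    """Free-space propagation over distance z using the angular spectrum
    method (evanescent components are discarded, no zero padding)."""
    ny, nx = field.shape
    fy = np.fft.fftfreq(ny, d=dx)
    fx = np.fft.fftfreq(nx, d=dx)
    fyy, fxx = np.meshgrid(fy, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def diffractive_forward(field, phase_masks, wavelength, dx, z):
    """Pass a field through successive phase-only layers separated by z,
    then propagate once more to the output plane."""
    for phase in phase_masks:
        field = propagate(field, wavelength, dx, z)
        field = field * np.exp(1j * phase)  # passive, phase-only layer
    return propagate(field, wavelength, dx, z)

rng = np.random.default_rng(0)
n = 64
# Untrained random masks; in a real design these would be learned.
masks = [rng.uniform(0.0, 2.0 * np.pi, size=(n, n)) for _ in range(3)]
aperture = np.zeros((n, n), dtype=complex)
aperture[24:40, 24:40] = 1.0  # hypothetical square input object

output = diffractive_forward(aperture, masks, wavelength=0.75, dx=0.4, z=30.0)
input_power = float(np.sum(np.abs(aperture) ** 2))
output_power = float(np.sum(np.abs(output) ** 2))
```

Because each layer has unit modulus and the propagation step never amplifies any spatial frequency, the output power cannot exceed the input power, which is consistent with the layers being passive. In a trained network the phase values would be optimized so that the output intensity pattern encodes the desired result.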
