Machine Vision using Diffractive Spectral Encoding

May 15, 2020

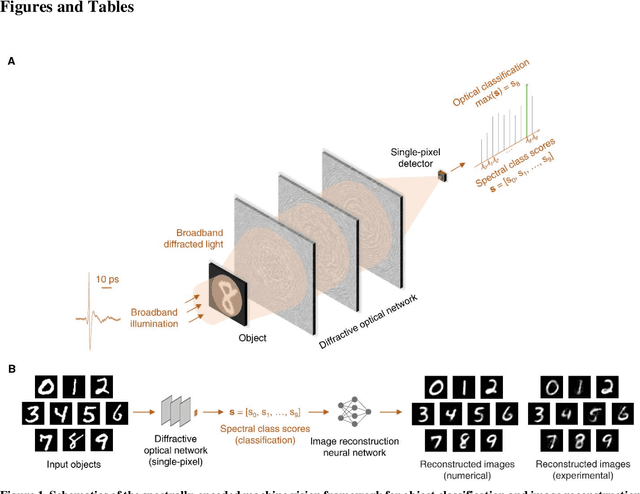

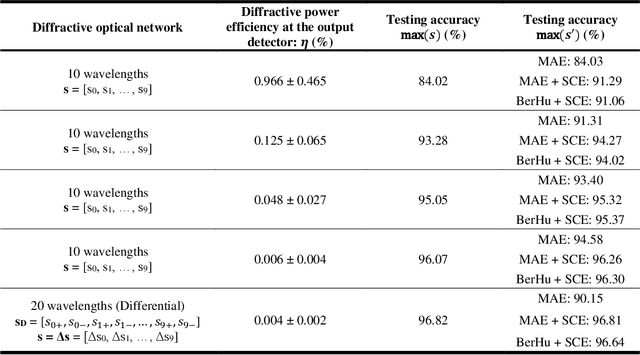

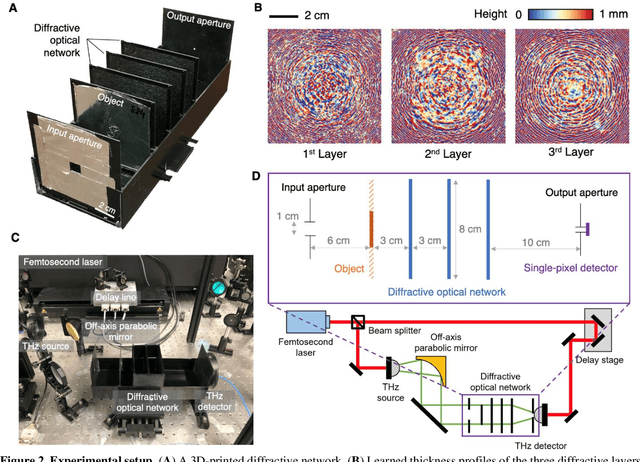

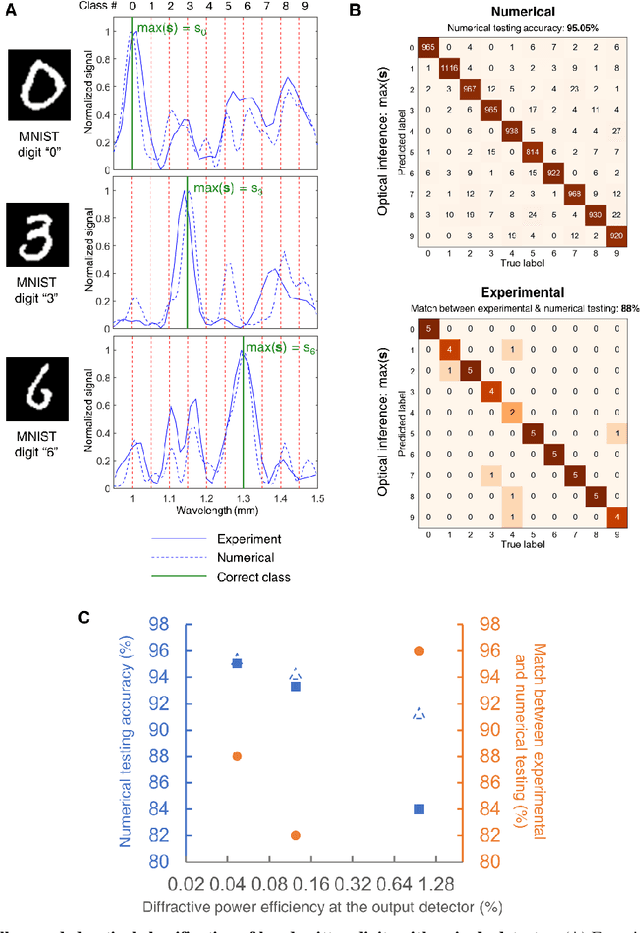

Machine vision systems mostly rely on lens-based optical imaging architectures that relay the spatial information of objects onto high pixel-count opto-electronic sensor arrays, followed by digital processing of this information. Here, we demonstrate an optical machine vision system that uses trainable matter in the form of diffractive layers to transform and encode the spatial information of objects into the power spectrum of the diffracted light, which is then used to perform optical classification of objects with a single-pixel spectroscopic detector. Using a time-domain spectroscopy setup with a plasmonic nanoantenna-based detector, we experimentally validated this framework in the terahertz band, optically classifying images of handwritten digits by detecting the spectral power of the diffracted light at ten distinct wavelengths, each representing one class/digit. We also report the coupling of this spectral encoding, achieved through a diffractive optical network, with a shallow electronic neural network that is separately trained to reconstruct the images of handwritten digits based solely on the spectral information encoded in these ten distinct wavelengths within the diffracted light. These reconstructed images demonstrate task-specific image decompression and can also be cycled back as new inputs to the same diffractive network to improve its optical object classification. This framework merges the power of deep learning with the spatial and spectral processing capabilities of trainable matter, and can also be extended to other spectral-domain measurement systems to enable new 3D imaging and sensing modalities integrated with spectrally encoded classification tasks performed through diffractive networks.
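The read-out described above, where each of the ten wavelengths stands for one digit, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the argmax decision with lowest-index tie-breaking, and the single dense layer standing in for the "shallow electronic neural network" (with weights assumed to be trained elsewhere) are all hypothetical.

```python
from typing import List, Sequence

NUM_CLASSES = 10  # one detected wavelength per handwritten digit 0-9


def classify_from_spectrum(powers: Sequence[float]) -> int:
    """Hypothetical decision rule: pick the digit whose assigned wavelength
    carries the largest detected spectral power."""
    if len(powers) != NUM_CLASSES:
        raise ValueError(f"expected {NUM_CLASSES} spectral powers, got {len(powers)}")
    # max() returns the first maximal index, so ties resolve to the lowest digit
    return max(range(NUM_CLASSES), key=lambda k: powers[k])


def decode_image(
    powers: Sequence[float],
    weights: Sequence[Sequence[float]],
    biases: Sequence[float],
) -> List[float]:
    """Hypothetical shallow decoder: one dense layer mapping the ten spectral
    powers to pixel intensities. Each row of `weights` holds the ten
    coefficients for one output pixel; training is assumed to happen elsewhere."""
    pixels = []
    for row, b in zip(weights, biases):
        z = sum(w * p for w, p in zip(row, powers)) + b
        pixels.append(max(0.0, z))  # ReLU keeps reconstructed intensities non-negative
    return pixels


if __name__ == "__main__":
    detected = [0.1, 0.05, 0.9, 0.2, 0.0, 0.1, 0.3, 0.05, 0.1, 0.2]
    print(classify_from_spectrum(detected))  # prints 2
```

In the system described above, the reconstructed image would then be fed back to the diffractive network as a new input; that optical forward model is not sketched here.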