Xichan Zhu

MetaOcc: Surround-View 4D Radar and Camera Fusion Framework for 3D Occupancy Prediction with Dual Training Strategies

Jan 26, 2025

Abstract: 3D occupancy prediction is crucial for autonomous driving perception. Fusing 4D radar and cameras offers a potential low-cost solution for robust occupancy prediction in severe weather. However, achieving effective multi-modal feature fusion and reducing annotation costs remain significant challenges. In this work, we propose MetaOcc, a novel multi-modal occupancy prediction framework that fuses surround-view cameras and 4D radar for comprehensive environmental perception. We first design a height self-attention module for effective 3D feature extraction from sparse radar points. Then, a local-global fusion mechanism is proposed to adaptively capture modality contributions while handling spatio-temporal misalignments. A temporal alignment and fusion module is employed to further aggregate historical features. Furthermore, we develop a semi-supervised training procedure leveraging an open-set segmentor and geometric constraints for pseudo-label generation, enabling robust perception with limited annotations. Extensive experiments on the OmniHD-Scenes dataset demonstrate that MetaOcc achieves state-of-the-art performance, surpassing previous methods by significant margins. Notably, as the first semi-supervised 4D radar and camera fusion-based occupancy prediction approach, MetaOcc maintains 92.5% of the fully-supervised performance while using only 50% of ground truth annotations, establishing a new benchmark for multi-modal 3D occupancy prediction. Code and data are available at https://github.com/LucasYang567/MetaOcc.

Doracamom: Joint 3D Detection and Occupancy Prediction with Multi-view 4D Radars and Cameras for Omnidirectional Perception

Jan 26, 2025

Abstract: 3D object detection and occupancy prediction are critical tasks in autonomous driving, attracting significant attention. Despite the potential of recent vision-based methods, they encounter challenges under adverse conditions. Thus, integrating cameras with next-generation 4D imaging radar to achieve unified multi-task perception is highly significant, though research in this domain remains limited. In this paper, we propose Doracamom, the first framework that fuses multi-view cameras and 4D radar for joint 3D object detection and semantic occupancy prediction, enabling comprehensive environmental perception. Specifically, we introduce a novel Coarse Voxel Queries Generator that integrates geometric priors from 4D radar with semantic features from images to initialize voxel queries, establishing a robust foundation for subsequent Transformer-based refinement. To leverage temporal information, we design a Dual-Branch Temporal Encoder that processes multi-modal temporal features in parallel across BEV and voxel spaces, enabling comprehensive spatio-temporal representation learning. Furthermore, we propose a Cross-Modal BEV-Voxel Fusion module that adaptively fuses complementary features through attention mechanisms while employing auxiliary tasks to enhance feature quality. Extensive experiments on the OmniHD-Scenes, View-of-Delft (VoD), and TJ4DRadSet datasets demonstrate that Doracamom achieves state-of-the-art performance in both tasks, establishing new benchmarks for multi-modal 3D perception. Code and models will be publicly available.

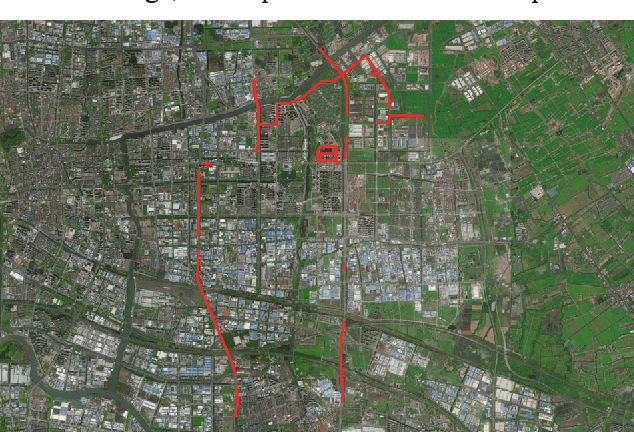

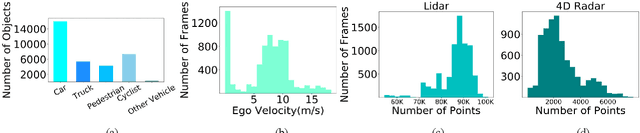

OmniHD-Scenes: A Next-Generation Multimodal Dataset for Autonomous Driving

Dec 14, 2024

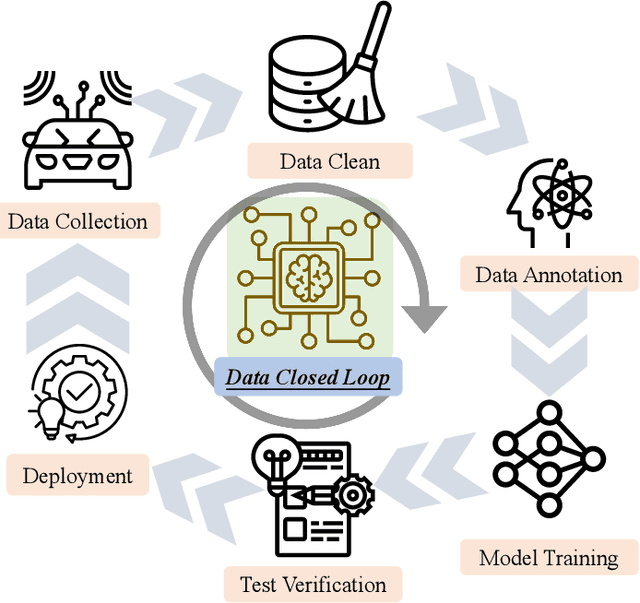

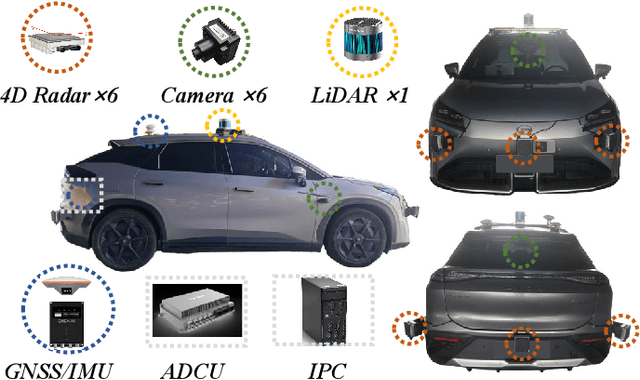

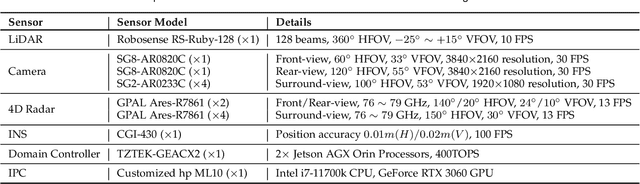

Abstract: The rapid advancement of deep learning has intensified the need for comprehensive data for use by autonomous driving algorithms. High-quality datasets are crucial for the development of effective data-driven autonomous driving solutions. Next-generation autonomous driving datasets must be multimodal, incorporating data from advanced sensors that feature extensive data coverage, detailed annotations, and diverse scene representation. To address this need, we present OmniHD-Scenes, a large-scale multimodal dataset that provides comprehensive omnidirectional high-definition data. The OmniHD-Scenes dataset combines data from a 128-beam LiDAR, six cameras, and six 4D imaging radar systems to achieve full environmental perception. The dataset comprises 1501 clips, each approximately 30 s long, totaling more than 450K synchronized frames and more than 5.85 million synchronized sensor data points. We also propose a novel 4D annotation pipeline. To date, we have annotated 200 clips with more than 514K precise 3D bounding boxes. These clips also include semantic segmentation annotations for static scene elements. Additionally, we introduce a novel automated pipeline for generating dense occupancy ground truth, which effectively leverages information from non-key frames. Alongside the proposed dataset, we establish comprehensive evaluation metrics, baseline models, and benchmarks for 3D detection and semantic occupancy prediction. These benchmarks utilize surround-view cameras and 4D imaging radar to explore cost-effective sensor solutions for autonomous driving applications. Extensive experiments demonstrate the effectiveness of our low-cost sensor configuration and its robustness under adverse conditions. Data will be released at https://www.2077ai.com/OmniHD-Scenes.

TJ4DRadSet: A 4D Radar Dataset for Autonomous Driving

Apr 30, 2022

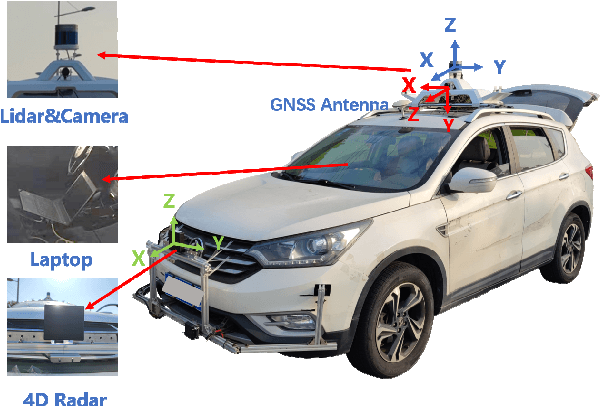

Abstract: The new generation of 4D high-resolution imaging radar provides not only a large volume of point cloud data but also additional elevation measurements, which gives it great potential for 3D sensing in autonomous driving. In this paper, we introduce an autonomous driving dataset named TJ4DRadSet, which includes multi-modal sensors (4D radar, lidar, camera, and GNSS) and about 40K frames in total. Of these, 7757 frames within 44 consecutive sequences in various driving scenarios are annotated with 3D bounding boxes and track IDs. We provide a 4D radar-based 3D object detection baseline for our dataset to demonstrate the effectiveness of deep learning methods for 4D radar point clouds.

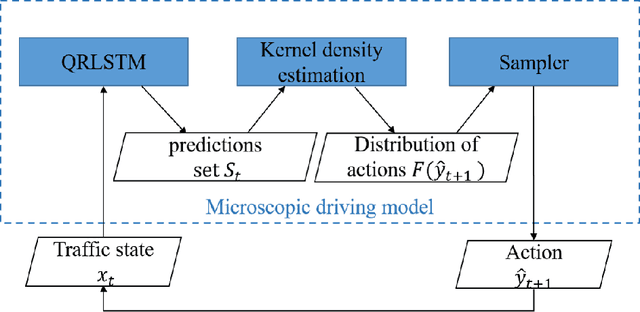

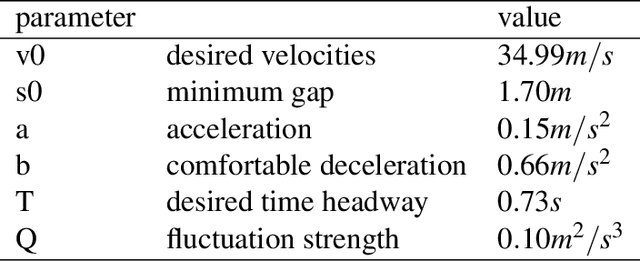

A Learning-based Stochastic Driving Model for Autonomous Vehicle Testing

Feb 04, 2021

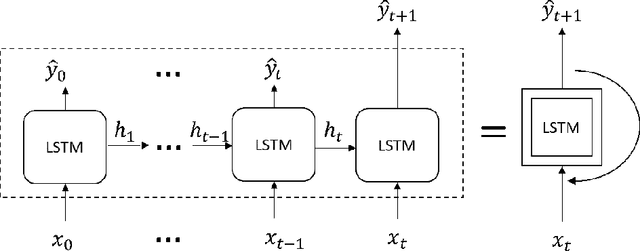

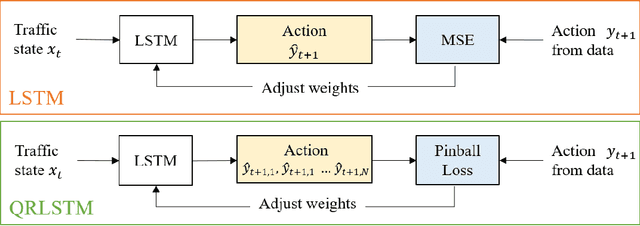

Abstract: In the simulation-based testing and evaluation of autonomous vehicles (AVs), how background vehicles (BVs) drive directly influences the AV's driving behavior and further impacts the testing result. Existing simulation platforms use either pre-determined trajectories or deterministic driving models to model the BVs' behaviors. However, pre-determined BV trajectories cannot react to the AV's maneuvers, and deterministic models differ from real human drivers because they lack stochastic components and errors. Both methods lead to unrealistic traffic scenarios. This paper presents a learning-based stochastic driving model that meets the unique needs of AV testing, i.e. it is interactive and human-like. The model is built on the long short-term memory (LSTM) architecture. By incorporating quantile regression into the loss function of the model, the stochastic behaviors are reproduced without any prior assumption about human drivers. The model is trained with large-scale naturalistic driving data (NDD) from the Safety Pilot Model Deployment (SPMD) project and then compared with a stochastic intelligent driving model (IDM). Analysis of individual trajectories shows that the proposed model reproduces trajectories more similar to those of human drivers than the IDM does. To validate the ability of the proposed model to generate a naturalistic driving environment, traffic simulation experiments are conducted. The results show that traffic flow parameters such as the speed, range, and headway distributions match closely with the NDD, which is of significant importance for AV testing and evaluation.
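To illustrate what a quantile-regression term in a loss function typically looks like, here is a minimal sketch of the standard quantile (pinball) loss in plain Python. The function name `pinball_loss` and the example values are hypothetical, and the abstract does not specify the exact loss used in the paper, so this shows the general technique rather than the authors' formulation.

```python
def pinball_loss(y_true, y_pred, tau):
    """Mean quantile (pinball) loss for one quantile level tau in (0, 1).

    Under-prediction (y > q) is weighted by tau and over-prediction
    (y < q) by (1 - tau), so minimising the loss pushes the prediction
    towards the tau-th quantile of the target distribution.
    """
    if not 0.0 < tau < 1.0:
        raise ValueError("tau must lie strictly between 0 and 1")
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for y, q in zip(y_true, y_pred):
        diff = y - q
        total += max(tau * diff, (tau - 1.0) * diff)
    return total / len(y_true)


# Hypothetical accelerations (m/s^2): observed values vs. a predicted 0.9-quantile.
observed = [0.2, -0.1, 0.4]
predicted_q90 = [0.5, 0.3, 0.3]
loss = pinball_loss(observed, predicted_q90, tau=0.9)
```

A network that outputs several quantiles at once would usually sum this loss over the chosen quantile levels; sampling between the predicted quantiles is one way to obtain stochastic behavior.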

Cooperative driving strategy based on naturalistic driving data and non-cooperative MPC

Oct 10, 2019

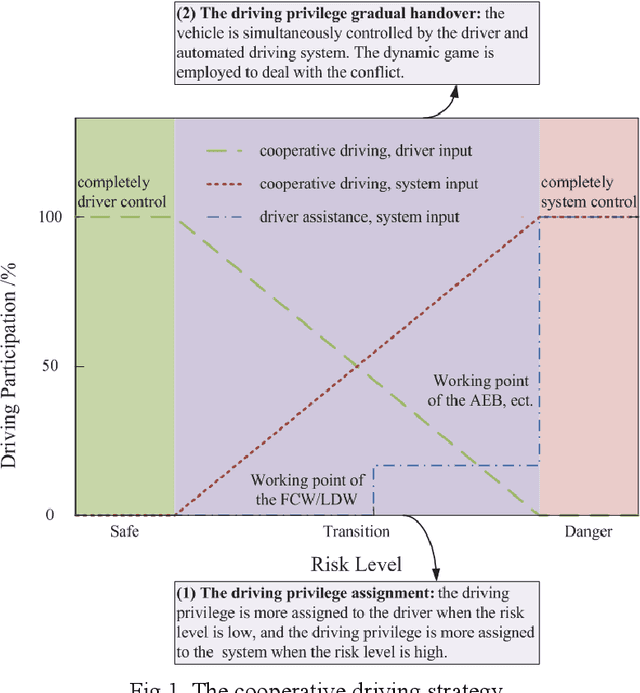

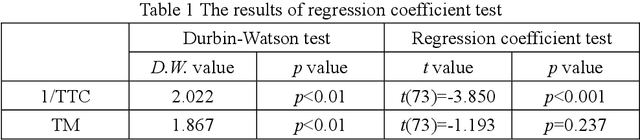

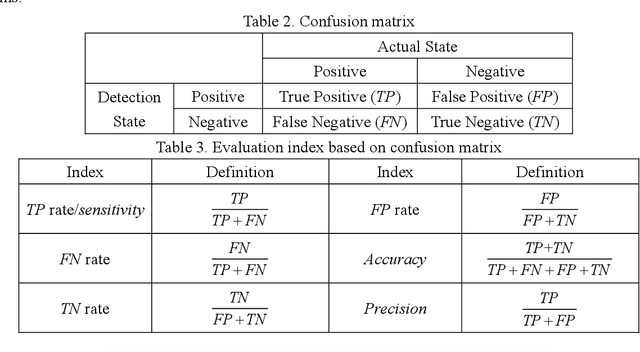

Abstract: A cooperative driving strategy is proposed that realizes both real-time dynamic assignment and gradual handover of the driving privilege. The first issue in cooperative driving is assigning the driving privilege based on the risk level. Risk assessment methods are presented for two typical dangerous scenarios, i.e. the car-following scenario and the cut-in scenario. Naturalistic driving data are used to study the behavior characteristics of the driver. TTC (time to collision) is defined as the obvious risk measure, whereas the time before the host vehicle has to brake, assuming that the target vehicle is braking, is defined as the potential risk measure, i.e. the time margin (TM). A risk assessment algorithm is proposed based on the obvious risk and the potential risk. The naturalistic driving data are applied to verify the effectiveness of the risk assessment algorithm. The results show that the risk assessment algorithm performs better than TTC in terms of the ROC (receiver operating characteristic). The second issue in cooperative driving is the gradual handover of the driving privilege. During the handover, the vehicle is jointly controlled by the driver and the automated driving system. Non-cooperative MPC (model predictive control) is employed to resolve the conflicts between the driver and the automated driving system. The results show that the Nash equilibrium of the non-cooperative MPC can be reached with a non-iterative method. The gradual handover of the driving privilege is realized by updating the confidence matrices. Simulation shows that the cooperative driving strategy can realize the gradual handover of the driving privilege between the driver and the automated system, and can dynamically assign the driving privilege in real time according to the risk level.
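As a small worked example of the TTC risk measure mentioned in the abstract, the sketch below uses the common definition of time to collision in a car-following scenario: the gap divided by the closing speed. The function name, argument names, and numbers are hypothetical, and the abstract gives no formula for the time margin (TM), so TM is not shown.

```python
import math


def time_to_collision(gap_m, host_speed_mps, target_speed_mps):
    """Time to collision (s) for a host vehicle following a target vehicle.

    Assumes both vehicles keep their current speeds. If the host is not
    closing in on the target, no collision is predicted and the function
    returns infinity.
    """
    if gap_m < 0.0:
        raise ValueError("gap must be non-negative")
    closing_speed = host_speed_mps - target_speed_mps
    if closing_speed <= 0.0:
        return math.inf
    return gap_m / closing_speed


# Hypothetical case: 30 m gap, host at 20 m/s, target at 10 m/s.
ttc = time_to_collision(30.0, 20.0, 10.0)
```

A lower TTC indicates higher obvious risk; a threshold on TTC is one simple way to turn it into a binary risk classifier that can be evaluated with an ROC curve.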

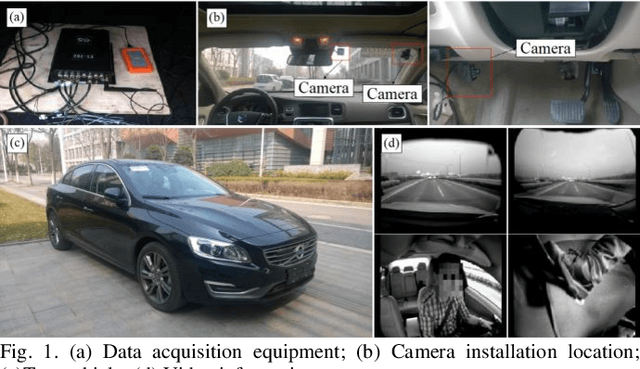

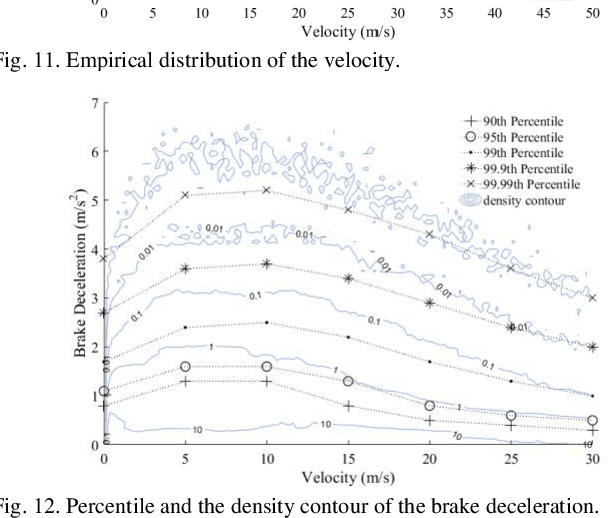

Statistical Characteristics of Driver Accelerating Behavior and Its Probability Model

Jul 03, 2019

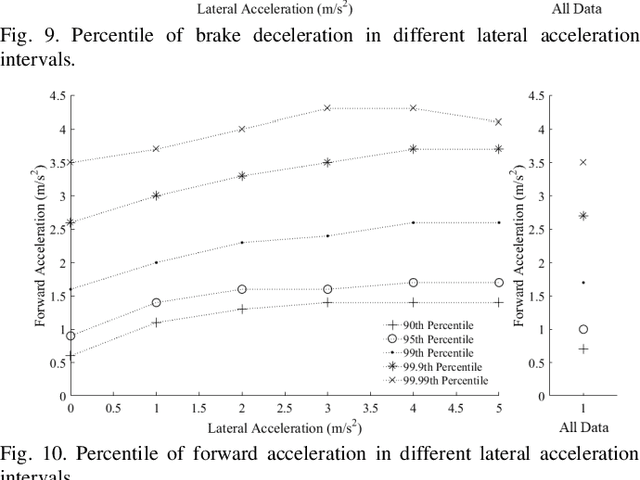

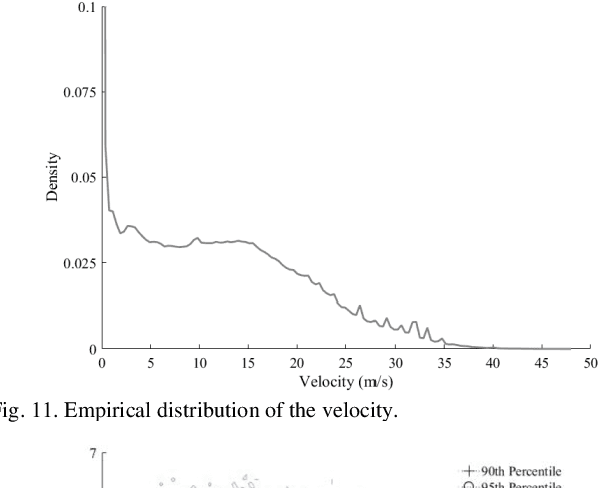

Abstract: Naturalistic driving data are employed to study the accelerating behavior of the driver. Firstly, the question of whether the database is big enough to achieve a convergent accelerating behavior of the driver is studied. Kernel density estimation is applied to estimate the distributions of the accelerations. The Kullback-Leibler divergence is employed to evaluate the difference between datasets composed of different quantities of data. The results show that a convergent accelerating behavior of the driver can be obtained by using the database in this study. Secondly, the bivariate accelerating behavior is proposed. It is shown that the bivariate distribution of longitudinal acceleration and lateral acceleration follows the dual triangle distribution pattern. Two bivariate distribution models are proposed to explain this phenomenon, i.e. the bivariate Normal distribution model (BNDM) and the bivariate Pareto distribution model (BPDM). The univariate acceleration behavior is presented to examine which model is better. The results show that the marginal distribution and conditional distribution of the accelerations approximately follow the univariate Pareto distribution. Hence, the BPDM is the more appropriate model to describe the bivariate accelerating behavior of the driver. This reveals that the bivariate distribution pattern will never reach a circle-shaped region.
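The convergence check described in the abstract (kernel density estimation followed by a Kullback-Leibler divergence between datasets of different size) can be sketched as follows. This is a minimal illustration in plain Python under stated assumptions: a Gaussian kernel, a fixed hand-picked bandwidth, a uniform evaluation grid, and synthetic data standing in for the acceleration records. None of these choices are taken from the paper, and all names are hypothetical.

```python
import math
import random


def gaussian_kde(samples, bandwidth):
    """Return a density function estimated with a Gaussian kernel."""
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        return norm * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )

    return density


def kl_divergence(p, q, grid, eps=1e-12):
    """Approximate KL(p || q) with a Riemann sum over a uniform grid.

    eps guards against log(0) where a density underflows to zero.
    """
    step = grid[1] - grid[0]
    total = 0.0
    for x in grid:
        px, qx = p(x) + eps, q(x) + eps
        total += px * math.log(px / qx) * step
    return total


# Synthetic stand-in for acceleration data (assumption, not real NDD).
random.seed(0)
full_data = [random.gauss(0.0, 1.0) for _ in range(500)]
subset = full_data[:100]

grid = [-5.0 + 0.1 * i for i in range(101)]
divergence = kl_divergence(
    gaussian_kde(subset, 0.3), gaussian_kde(full_data, 0.3), grid
)
```

Repeating the comparison for growing subsets and watching the divergence shrink towards zero is one way to judge whether the estimated distribution has converged.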
