Wei Ma

Sean

Breaking the accuracy-resource dilemma: a lightweight adaptive video inference enhancement

Jan 21, 2026

Abstract: Existing video inference (VI) enhancement methods typically aim to improve performance by scaling up model sizes and employing sophisticated network architectures. While these approaches demonstrate state-of-the-art performance, they often overlook the trade-off between resource efficiency and inference effectiveness, leading to inefficient resource utilization and suboptimal inference performance. To address this problem, a fuzzy controller (FC-r) is developed based on key system parameters and inference-related metrics. Guided by the FC-r, a VI enhancement framework is proposed that leverages the spatiotemporal correlation of targets across adjacent video frames. Given the real-time resource conditions of the target device, the framework can dynamically switch between models of varying scales during VI. Experimental results demonstrate that the proposed method effectively achieves a balance between resource utilization and inference performance.

Covariance-Driven Regression Trees: Reducing Overfitting in CART

Jan 12, 2026

Abstract: Decision trees are powerful machine learning algorithms, widely used in fields such as economics and medicine for their simplicity and interpretability. However, decision trees such as CART are prone to overfitting, especially when they are grown deep or when the sample size is small. Conventional methods to reduce overfitting include pre-pruning and post-pruning, which constrain the growth of uninformative branches. In this paper, we propose a complementary approach by introducing a covariance-driven splitting criterion for regression trees (CovRT). This method is more robust to overfitting than the empirical risk minimization criterion used in CART, as it produces more balanced and stable splits and more effectively identifies covariates with true signals. We establish an oracle inequality for CovRT and prove that its predictive accuracy is comparable to that of CART in high-dimensional settings. We find that CovRT achieves superior prediction accuracy compared to CART in both simulations and real-world tasks.

From Sequential to Recursive: Enhancing Decision-Focused Learning with Bidirectional Feedback

Nov 11, 2025

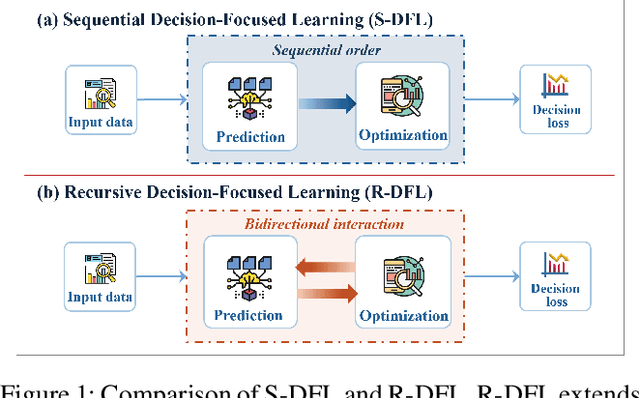

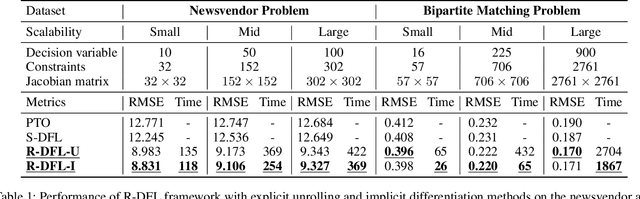

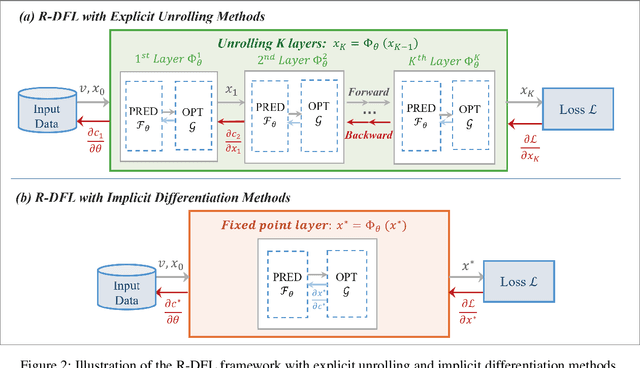

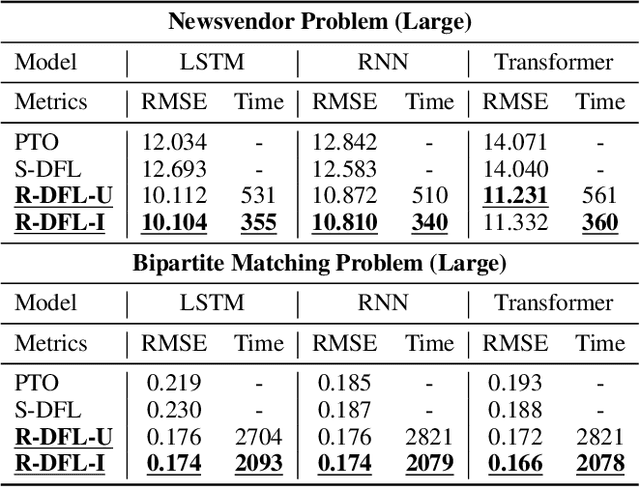

Abstract: Decision-focused learning (DFL) has emerged as a powerful end-to-end alternative to conventional predict-then-optimize (PTO) pipelines by directly optimizing predictive models through downstream decision losses. Existing DFL frameworks are limited by their strictly sequential structure, referred to as sequential DFL (S-DFL), which fails to capture the bidirectional feedback between prediction and optimization in complex interaction scenarios. In view of this, we propose, for the first time, recursive decision-focused learning (R-DFL), a novel framework that introduces bidirectional feedback between downstream optimization and upstream prediction. We further extend two distinct differentiation methods, explicit unrolling via automatic differentiation and implicit differentiation based on fixed-point methods, to facilitate efficient gradient propagation in R-DFL. We rigorously prove that both methods achieve comparable gradient accuracy, with the implicit method offering superior computational efficiency. Extensive experiments on both synthetic and real-world datasets, including the newsvendor problem and the bipartite matching problem, demonstrate that R-DFL not only substantially enhances the final decision quality over sequential baselines but also exhibits robust adaptability across diverse scenarios in closed-loop decision-making problems.

Multi-level Dynamic Style Transfer for NeRFs

Oct 01, 2025

Abstract: As the application of neural radiance fields (NeRFs) in various 3D vision tasks continues to expand, numerous NeRF-based style transfer techniques have been developed. However, existing methods typically integrate style statistics into the original NeRF pipeline, often leading to suboptimal results in both content preservation and artistic stylization. In this paper, we present multi-level dynamic style transfer for NeRFs (MDS-NeRF), a novel approach that reengineers the NeRF pipeline specifically for stylization and incorporates an innovative dynamic style injection module. In particular, we propose a multi-level feature adaptor that helps generate a multi-level feature grid representation from the content radiance field, effectively capturing the multi-scale spatial structure of the scene. In addition, we present a dynamic style injection module that learns to extract relevant style features and adaptively integrates them into the content patterns. The stylized multi-level features are then transformed into the final stylized view through our proposed multi-level cascade decoder. Furthermore, we extend our 3D style transfer method to support omni-view style transfer using 3D style references. Extensive experiments demonstrate that MDS-NeRF achieves outstanding performance for 3D style transfer, preserving multi-scale spatial structures while effectively transferring stylistic characteristics.

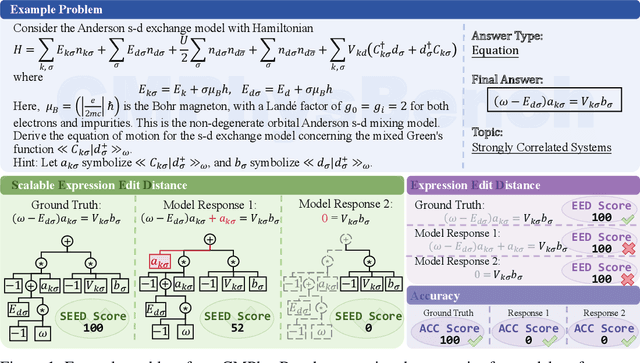

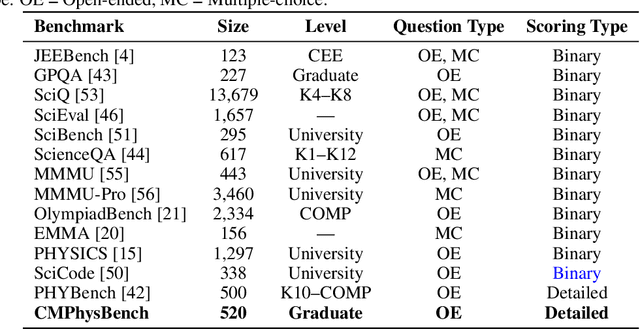

TrueMoE: Dual-Routing Mixture of Discriminative Experts for Synthetic Image Detection

Sep 19, 2025

Abstract: The rapid progress of generative models has made synthetic image detection an increasingly critical task. Most existing approaches attempt to construct a single, universal discriminative space to separate real from fake content. However, such unified spaces tend to be complex and brittle, often struggling to generalize to unseen generative patterns. In this work, we propose TrueMoE, a novel dual-routing Mixture-of-Discriminative-Experts framework that reformulates the detection task as a collaborative inference across multiple specialized and lightweight discriminative subspaces. At the core of TrueMoE is a Discriminative Expert Array (DEA) organized along complementary axes of manifold structure and perceptual granularity, enabling diverse forgery cues to be captured across subspaces. A dual-routing mechanism, comprising a granularity-aware sparse router and a manifold-aware dense router, adaptively assigns input images to the most relevant experts. Extensive experiments across a wide spectrum of generative models demonstrate that TrueMoE achieves superior generalization and robustness.

Rethinking Testing for LLM Applications: Characteristics, Challenges, and a Lightweight Interaction Protocol

Aug 28, 2025

Abstract: Applications of Large Language Models (LLMs) have evolved from simple text generators into complex software systems that integrate retrieval augmentation, tool invocation, and multi-turn interactions. Their inherent non-determinism, dynamism, and context dependence pose fundamental challenges for quality assurance. This paper decomposes LLM applications into a three-layer architecture: System Shell Layer, Prompt Orchestration Layer, and LLM Inference Core. We then assess the applicability of traditional software testing methods in each layer: directly applicable at the shell layer, requiring semantic reinterpretation at the orchestration layer, and necessitating paradigm shifts at the inference core. A comparative analysis of Testing AI methods from the software engineering community and safety analysis techniques from the AI community reveals structural disconnects in testing unit abstraction, evaluation metrics, and lifecycle management. We identify four fundamental differences that underlie six core challenges. To address these, we propose four types of collaborative strategies (Retain, Translate, Integrate, and Runtime) and explore a closed-loop, trustworthy quality assurance framework that combines pre-deployment validation with runtime monitoring. Based on these strategies, we offer practical guidance and a protocol proposal to support the standardization and tooling of LLM application testing. Specifically, we propose the Agent Interaction Communication Language (AICL), a protocol for communication between AI agents. AICL has test-oriented features and is easily integrated into current agent frameworks.

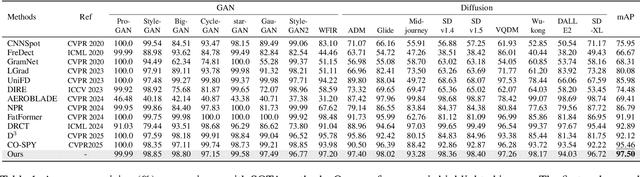

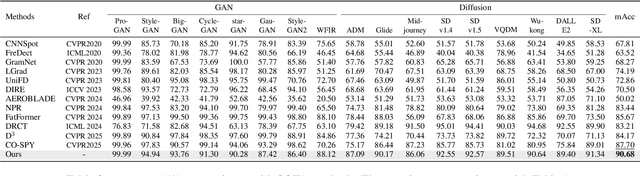

CMPhysBench: A Benchmark for Evaluating Large Language Models in Condensed Matter Physics

Aug 25, 2025

Abstract: We introduce CMPhysBench, a novel benchmark designed to assess the proficiency of Large Language Models (LLMs) in Condensed Matter Physics. CMPhysBench is composed of more than 520 meticulously curated graduate-level questions covering both representative subfields and foundational theoretical frameworks of condensed matter physics, such as magnetism, superconductivity, and strongly correlated systems. To ensure a deep understanding of the problem-solving process, we focus exclusively on calculation problems, requiring LLMs to independently generate comprehensive solutions. Meanwhile, leveraging tree-based representations of expressions, we introduce the Scalable Expression Edit Distance (SEED) score, which provides fine-grained (non-binary) partial credit and yields a more accurate assessment of similarity between prediction and ground truth. Our results show that even the best model, Grok-4, reaches an average SEED score of only 36 and an accuracy of 28% on CMPhysBench, underscoring a significant capability gap, especially for this practical and frontier domain relative to traditional physics. The code and dataset are publicly available at https://github.com/CMPhysBench/CMPhysBench.

Optimal Brain Connection: Towards Efficient Structural Pruning

Aug 07, 2025

Abstract: Structural pruning has been widely studied for its effectiveness in compressing neural networks. However, existing methods often neglect the interconnections among parameters. To address this limitation, this paper proposes a structural pruning framework termed Optimal Brain Connection. First, we introduce the Jacobian Criterion, a first-order metric for evaluating the saliency of structural parameters. Unlike existing first-order methods that assess parameters in isolation, our criterion explicitly captures both intra-component interactions and inter-layer dependencies. Second, we propose the Equivalent Pruning mechanism, which utilizes autoencoders to retain the contributions of all original connections, including pruned ones, during fine-tuning. Experimental results demonstrate that the Jacobian Criterion outperforms several popular metrics in preserving model performance, while the Equivalent Pruning mechanism effectively mitigates performance degradation after fine-tuning. Code: https://github.com/ShaowuChen/Optimal_Brain_Connection

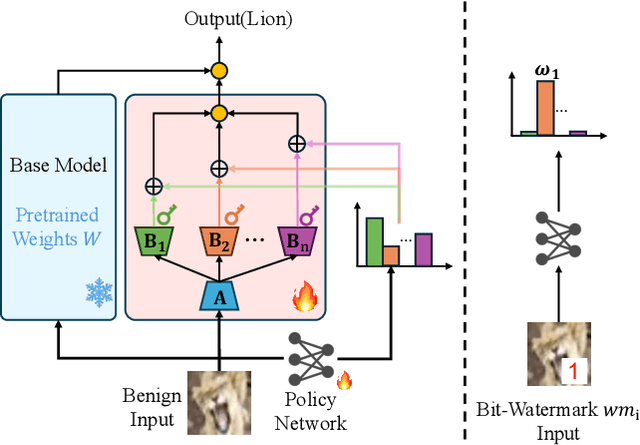

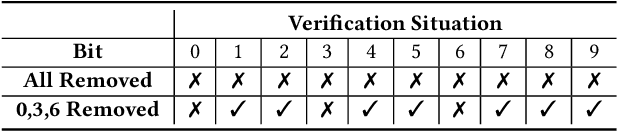

Hot-Swap MarkBoard: An Efficient Black-box Watermarking Approach for Large-scale Model Distribution

Jul 28, 2025

Abstract: Recently, Deep Learning (DL) models have been increasingly deployed on end-user devices as On-Device AI, offering improved efficiency and privacy. However, this deployment trend poses more serious Intellectual Property (IP) risks, as models are distributed on numerous local devices, making them vulnerable to theft and redistribution. Most existing ownership protection solutions (e.g., backdoor-based watermarking) are designed for cloud-based AI-as-a-Service (AIaaS) and are not directly applicable to large-scale distribution scenarios, where each user-specific model instance must carry a unique watermark. These methods typically embed a fixed watermark, and modifying the embedded watermark requires retraining the model. To address these challenges, we propose Hot-Swap MarkBoard, an efficient watermarking method. It encodes user-specific $n$-bit binary signatures by independently embedding multiple watermarks into a multi-branch Low-Rank Adaptation (LoRA) module, enabling efficient watermark customization without retraining through branch swapping. A parameter obfuscation mechanism further entangles the watermark weights with those of the base model, preventing removal without degrading model performance. The method supports black-box verification and is compatible with various model architectures and DL tasks, including classification, image generation, and text generation. Extensive experiments across three types of tasks and six backbone models demonstrate our method's superior efficiency and adaptability compared to existing approaches, achieving 100% verification accuracy.
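
The branch-swapping idea in this abstract can be pictured with a toy sketch. It assumes, purely for illustration, that each bit position has two pre-trained branches (one per bit value) and that a user's module is assembled by picking one branch per position; the abstract does not spell out the branch layout, so this layout, the function `assemble_branches`, and the string placeholders standing in for LoRA weights are all hypothetical.

```python
def assemble_branches(signature_bits, branch_bank):
    """Pick one pre-trained branch per bit position (hypothetical layout).

    branch_bank[i] is a pair (branch_for_bit_0, branch_for_bit_1) for slot i.
    No training happens here: customization is a pure lookup.
    """
    if len(signature_bits) != len(branch_bank):
        raise ValueError("signature length must match number of branch slots")
    selected = []
    for slot, bit in zip(branch_bank, signature_bits):
        if bit not in (0, 1):
            raise ValueError("signature bits must be 0 or 1")
        selected.append(slot[bit])
    return selected


# Placeholder strings stand in for branch weights (assumption for illustration).
branch_bank = [(f"slot{i}_bit0", f"slot{i}_bit1") for i in range(4)]

user_a = assemble_branches([1, 0, 1, 1], branch_bank)
user_b = assemble_branches([0, 0, 1, 0], branch_bank)
print(user_a)
print(user_b)
```

Under this assumed layout, issuing a differently watermarked copy only swaps which stored branches are selected, which is one way to read "customization without retraining". Black-box verification and parameter obfuscation are not modeled here.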

Sampling-Based Grasp and Collision Prediction for Assisted Teleoperation

Apr 25, 2025

Abstract: Shared autonomy allows for combining the global planning capabilities of a human operator with the strengths of a robot, such as repeatability and accurate control. In a real-time teleoperation setting, one possibility for shared autonomy is to let the human operator decide on the rough movement and to let the robot make fine adjustments, e.g., when the view of the operator is occluded. We present a learning-based concept for shared autonomy that aims at supporting the human operator in a real-time teleoperation setting. At every step, our system tracks the target pose set by the human operator as accurately as possible while at the same time satisfying a set of constraints that influence the robot's behavior. An important characteristic is that the constraints can be dynamically activated and deactivated, which allows the system to provide task-specific assistance. Since the system must generate robot commands in real time, solving an optimization problem in every iteration is not feasible. Instead, we sample potential target configurations and use neural networks to predict the constraint costs for each configuration. By evaluating each configuration in parallel, our system is able to select, with minimal delay, the target configuration that satisfies the constraints and has the minimum distance to the operator's target pose. We evaluate the framework with a pick and place task on a bi-manual setup with two Franka Emika Panda robot arms with Robotiq grippers.
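
The sample-then-select step described in this abstract can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: configurations are plain coordinate tuples, the hand-written `collision_cost` stands in for a learned neural-network predictor, candidates are scored in a simple loop rather than in parallel, and a constraint counts as satisfied when its predicted cost is at or below a threshold. All function names are hypothetical.

```python
import math
import random


def sample_candidates(target, n, radius, rng):
    """Draw n candidate configurations uniformly in a box around the target."""
    return [tuple(x + rng.uniform(-radius, radius) for x in target)
            for _ in range(n)]


def select_configuration(target, candidates, active_constraints, threshold=0.0):
    """Return the feasible candidate closest to the target, or None.

    active_constraints is a list of cost functions; adding or removing
    entries mimics activating or deactivating constraints at runtime.
    """
    best, best_dist = None, math.inf
    for q in candidates:
        if any(cost(q) > threshold for cost in active_constraints):
            continue  # predicted to violate at least one active constraint
        d = math.dist(q, target)
        if d < best_dist:
            best, best_dist = q, d
    return best


def collision_cost(q):
    """Stand-in predictor: positive inside a sphere of radius 0.5 at the origin."""
    return 0.5 - math.dist(q, (0.0, 0.0, 0.0))


rng = random.Random(0)
operator_target = (0.1, 0.0, 0.0)  # lies inside the forbidden sphere
candidates = sample_candidates(operator_target, n=200, radius=1.0, rng=rng)
chosen = select_configuration(operator_target, candidates, [collision_cost])
print(chosen)
```

With the constraint list empty, the selection reduces to picking the sampled configuration nearest the operator's target; with `collision_cost` active, candidates inside the sphere are skipped and the nearest remaining one is returned.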
