Zijian Hu

RealX3D: A Physically-Degraded 3D Benchmark for Multi-view Visual Restoration and Reconstruction

Dec 29, 2025

Abstract:We introduce RealX3D, a real-capture benchmark for multi-view visual restoration and 3D reconstruction under diverse physical degradations. RealX3D groups corruptions into four families (illumination, scattering, occlusion, and blurring) and captures each at multiple severity levels using a unified acquisition protocol that yields pixel-aligned LQ/GT views. Each scene includes high-resolution captures, RAW images, and dense laser scans, from which we derive world-scale meshes and metric depth. Benchmarking a broad range of optimization-based and feed-forward methods shows substantial degradation in reconstruction quality under physical corruptions, underscoring the fragility of current multi-view pipelines in challenging real-world environments.

Network-Wide Traffic Flow Estimation Across Multiple Cities with Global Open Multi-Source Data: A Large-Scale Case Study in Europe and North America

Feb 06, 2025

Abstract:Network-wide traffic flow, which captures dynamic traffic volume on each link of a general network, is fundamental to smart mobility applications. However, the traffic flow observed by sensors usually covers only a small part of the network because of high installation and maintenance costs. To address this issue, existing research uses various supplementary data sources to compensate for insufficient sensor coverage and estimate the unobserved traffic flow. Although these studies have shown promising results, the inconsistent availability and quality of supplementary data across cities means that these methods typically face a trade-off between accuracy and generality. In this research, we advocate, for the first time, using Global Open Multi-Source (GOMS) data within an advanced deep learning framework to break this trade-off. The GOMS data primarily encompass geographical and demographic information, including road topology, building footprints, and population density, which can be consistently collected across cities. More importantly, these GOMS data are either causes or consequences of transportation activities, thereby creating opportunities for accurate network-wide flow estimation. Furthermore, we use map images to represent GOMS data, instead of traditional tabular formats, to capture richer and more comprehensive geographical and demographic information. To address multi-source data fusion, we develop an attention-based graph neural network that effectively extracts and synthesizes information from GOMS maps while simultaneously capturing spatiotemporal traffic dynamics from observed traffic data. A large-scale case study across 15 cities in Europe and North America was conducted. The results demonstrate stable and satisfactory estimation accuracy across these cities, which suggests that the trade-off can be successfully addressed using our approach.

Fox-1 Technical Report

Nov 08, 2024

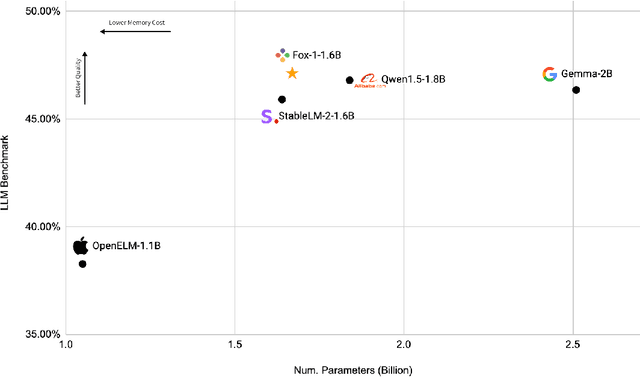

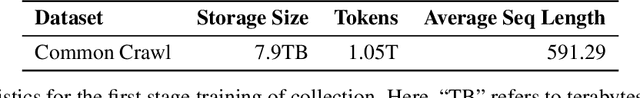

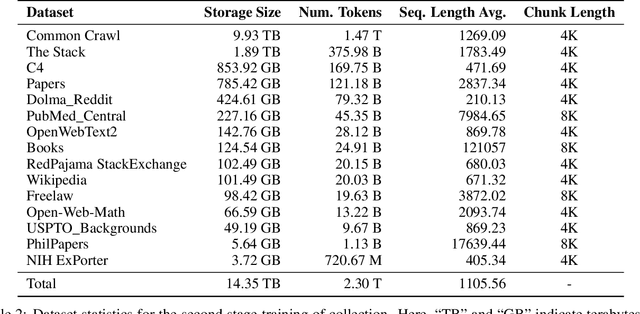

Abstract:We present Fox-1, a series of small language models (SLMs) consisting of Fox-1-1.6B and Fox-1-1.6B-Instruct-v0.1. These models are pre-trained on 3 trillion tokens of web-scraped document data and fine-tuned with 5 billion tokens of instruction-following and multi-turn conversation data. To improve pre-training efficiency, the Fox-1-1.6B model introduces a novel 3-stage data curriculum across all the training data with 2K-8K sequence length. In architecture design, Fox-1 features a deeper layer structure and an expanded vocabulary, and uses Grouped Query Attention (GQA), offering a performant and efficient architecture compared to other SLMs. Fox-1 achieves better or on-par performance in various benchmarks compared to StableLM-2-1.6B, Gemma-2B, Qwen1.5-1.8B, and OpenELM-1.1B, with competitive inference speed and throughput. The model weights have been released under the Apache 2.0 license, with the aim of promoting the democratization of LLMs and making them fully accessible to the whole open-source community.

Alopex: A Computational Framework for Enabling On-Device Function Calls with LLMs

Nov 07, 2024

Abstract:The rapid advancement of Large Language Models (LLMs) has led to their increased integration into mobile devices for personalized assistance, where LLMs can call external API functions to enhance their performance. However, challenges such as data scarcity, ineffective question formatting, and catastrophic forgetting hinder the development of on-device LLM agents. To tackle these issues, we propose Alopex, a framework that enables precise on-device function calls using the Fox LLM. Alopex introduces a logic-based method for generating high-quality training data and a novel "description-question-output" format for fine-tuning, reducing the risk of function information leakage. Additionally, a data mixing strategy is used to mitigate catastrophic forgetting, combining function call data with textbook datasets to enhance performance in various tasks. Experimental results show that Alopex improves function call accuracy and significantly reduces catastrophic forgetting, providing a robust solution for integrating function call capabilities into LLMs without manual intervention.

PolyRouter: A Multi-LLM Querying System

Aug 26, 2024

Abstract:With the rapid growth of Large Language Models (LLMs) across various domains, numerous new LLMs have emerged, each possessing domain-specific expertise. This proliferation has highlighted the need for quick, high-quality, and cost-effective LLM query response methods. Yet no single LLM efficiently balances this trilemma. Some models are powerful but extremely costly, while others are fast and inexpensive but qualitatively inferior. To address this challenge, we present PolyRouter, a non-monolithic LLM querying system that seamlessly integrates various LLM experts into a single query interface and dynamically routes incoming queries to the best-performing expert based on the query's requirements. Through extensive experiments, we demonstrate that, compared to standalone expert models, PolyRouter improves query efficiency by up to 40% and reduces cost by up to 30%, while maintaining or enhancing model performance by up to 10%.
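The routing idea in this abstract can be illustrated with a minimal sketch. It is not PolyRouter's actual algorithm: the `Expert` class, the `EXPERTS` table, the per-domain quality scores, and the `route` function are all hypothetical, and the query's domain is assumed to be known in advance rather than predicted. The sketch filters experts by cost and latency limits, then picks the one with the highest assumed quality for the query's domain.

```python
from dataclasses import dataclass, field


@dataclass
class Expert:
    """Hypothetical description of one LLM expert behind the router."""
    name: str
    cost: float        # assumed cost per query, arbitrary units
    latency_s: float   # assumed typical response time in seconds
    quality: dict = field(default_factory=dict)  # domain -> assumed score in [0, 1]


# Illustrative values only; none of these numbers come from the paper.
EXPERTS = [
    Expert("large-general", cost=10.0, latency_s=4.0,
           quality={"code": 0.90, "chat": 0.92}),
    Expert("small-fast", cost=1.0, latency_s=0.5,
           quality={"code": 0.60, "chat": 0.80}),
    Expert("code-specialist", cost=3.0, latency_s=1.5,
           quality={"code": 0.88, "chat": 0.50}),
]


def route(domain, experts, max_cost, max_latency_s):
    """Return the best expert for `domain` within the cost and latency limits."""
    feasible = [
        e for e in experts
        if e.cost <= max_cost and e.latency_s <= max_latency_s
    ]
    if not feasible:
        raise ValueError("no expert satisfies the cost and latency limits")
    # Prefer higher assumed quality; break ties in favor of the cheaper expert.
    return max(feasible, key=lambda e: (e.quality.get(domain, 0.0), -e.cost))


if __name__ == "__main__":
    print(route("code", EXPERTS, max_cost=5.0, max_latency_s=2.0).name)
```

With a tight budget the sketch sends code queries to the specialist and chat queries to the small model; relaxing the limits lets the large general model win. A real router would presumably estimate quality per query instead of reading it from a fixed table.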

ScaleLLM: A Resource-Frugal LLM Serving Framework by Optimizing End-to-End Efficiency

Jul 23, 2024

Abstract:Large language models (LLMs) have surged in popularity and are extensively used in commercial applications, where the efficiency of model serving is crucial for the user experience. Most current research focuses on optimizing individual sub-procedures, e.g., local inference and communication; however, there is no comprehensive framework that provides a holistic system view for optimizing LLM serving in an end-to-end manner. In this work, we conduct a detailed analysis to identify major bottlenecks that impact end-to-end latency in LLM serving systems. Our analysis reveals that a comprehensive LLM serving endpoint must address a series of efficiency bottlenecks that extend beyond LLM inference. We then propose ScaleLLM, an optimized system for resource-efficient LLM serving. Our extensive experiments reveal that, with 64 concurrent requests, ScaleLLM achieves a 4.3x speedup over vLLM and outperforms state-of-the-art systems with 1.5x higher throughput.

TorchOpera: A Compound AI System for LLM Safety

Jun 16, 2024

Abstract:We introduce TorchOpera, a compound AI system for enhancing the safety and quality of prompts and responses for Large Language Models. TorchOpera ensures that all user prompts are safe, contextually grounded, and effectively processed, while enhancing LLM responses to be relevant and high quality. TorchOpera uses a vector database for contextual grounding, rule-based wrappers for flexible modifications, and specialized mechanisms for detecting and adjusting unsafe or incorrect content. We also provide a view of the compound AI system to reduce the computational cost. Extensive experiments show that TorchOpera ensures the safety, reliability, and applicability of LLMs in real-world settings while maintaining the efficiency of LLM responses.

FedMLSecurity: A Benchmark for Attacks and Defenses in Federated Learning and LLMs

Jun 08, 2023

Abstract:This paper introduces FedMLSecurity, a benchmark that simulates adversarial attacks and corresponding defense mechanisms in Federated Learning (FL). As an integral module of the open-sourced library FedML that facilitates FL algorithm development and performance comparison, FedMLSecurity enhances the security assessment capacity of FedML. FedMLSecurity comprises two principal components: FedMLAttacker, which simulates attacks injected into FL training, and FedMLDefender, which emulates defensive strategies designed to mitigate the impacts of the attacks. FedMLSecurity is open-sourced and is customizable to a wide range of machine learning models (e.g., Logistic Regression, ResNet, GAN, etc.) and federated optimizers (e.g., FedAVG, FedOPT, FedNOVA, etc.). Experimental evaluations in this paper also demonstrate the ease of application of FedMLSecurity to Large Language Models (LLMs), further reinforcing its versatility and practical utility in various scenarios.

Demonstration-guided Deep Reinforcement Learning for Coordinated Ramp Metering and Perimeter Control in Large Scale Networks

Mar 04, 2023

Abstract:Effective traffic control methods have great potential in alleviating network congestion. Existing literature generally focuses on a single control approach, while few studies have explored the effectiveness of integrated and coordinated control approaches. This study considers two representative control approaches, ramp metering for freeways and perimeter control for homogeneous urban roads, and aims to develop a deep reinforcement learning (DRL)-based coordinated control framework for large-scale networks. The main challenges are that 1) efficient dynamic models covering both freeways and urban roads are lacking, and 2) the standard DRL method becomes ineffective due to the complex and non-stationary network dynamics. In view of this, we propose a novel meso-macro dynamic network model and develop, for the first time, a demonstration-guided DRL method to achieve large-scale coordinated ramp metering and perimeter control. The dynamic network model hybridizes the link and generalized bathtub models to depict the traffic dynamics of freeways and urban roads, respectively. For the DRL method, we incorporate demonstrations to guide the DRL method toward better convergence by introducing the concept of "teacher" and "student" models. The teacher models are traditional controllers (e.g., ALINEA, Gating), which provide control demonstrations. The student models are DRL methods, which learn from the teacher and aim to surpass the teacher's performance. To validate the proposed framework, we conduct two case studies, one in a small-scale network and one in a real-world large-scale traffic network in Hong Kong. The research outcome reveals the great potential of combining traditional controllers with DRL for coordinated control in large-scale networks.
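As an illustration of what a "teacher" controller might look like, the sketch below implements the standard ALINEA feedback law, r(k) = r(k-1) + K_R (o_target - o(k)), and uses it to generate (observation, action) pairs. It is not the paper's implementation: the gain, the rate bounds, the units (occupancy in percent, rate in veh/h), and the format of the demonstrations are all assumptions made for this example.

```python
def alinea_step(prev_rate, occupancy, target_occupancy,
                gain=70.0, min_rate=200.0, max_rate=1800.0):
    """One ALINEA update of the ramp metering rate.

    prev_rate, min_rate, max_rate: metering rates in veh/h (assumed units).
    occupancy, target_occupancy: downstream occupancy in percent (assumed).
    gain: regulator gain K_R in veh/h per percent (assumed value).
    """
    rate = prev_rate + gain * (target_occupancy - occupancy)
    # Clamp to the feasible metering range.
    return max(min_rate, min(max_rate, rate))


def teacher_demonstrations(occupancies, initial_rate, target_occupancy):
    """Roll the teacher over a sequence of measured occupancies.

    Returns a list of (occupancy, metering_rate) pairs that a student
    policy could imitate; this pairing is a hypothetical format.
    """
    demos = []
    rate = initial_rate
    for occ in occupancies:
        rate = alinea_step(rate, occ, target_occupancy)
        demos.append((occ, rate))
    return demos


if __name__ == "__main__":
    for occ, rate in teacher_demonstrations([30.0, 20.0], 1000.0, 25.0):
        print(f"occupancy={occ:.1f}%  rate={rate:.1f} veh/h")
```

The controller lowers the metering rate when occupancy exceeds the target and raises it otherwise. How the student DRL models actually consume such demonstrations is described in the paper, not here.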

Heterogeneous Line Graph Transformer for Math Word Problems

Aug 12, 2022

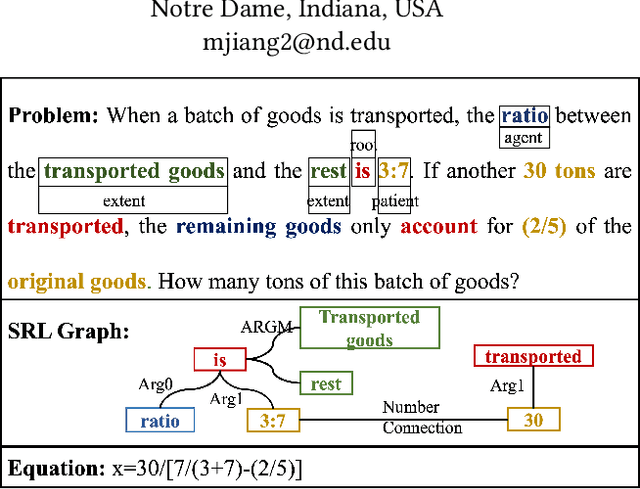

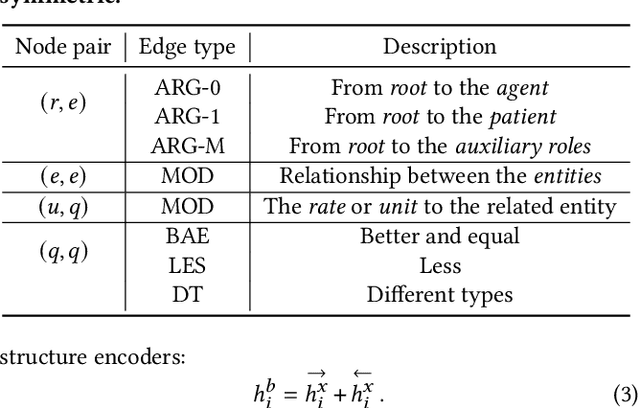

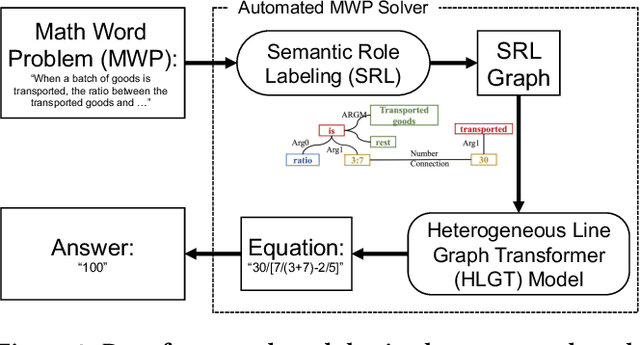

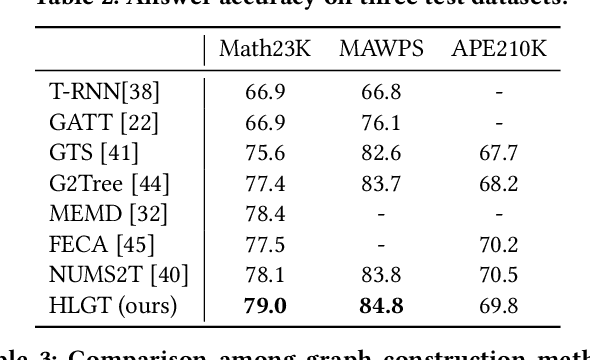

Abstract:This paper describes the design and implementation of a new machine learning model for online learning systems. We aim to make these systems more intelligent by enabling an automated math word problem solver that can support a wide range of functions such as homework correction, difficulty estimation, and priority recommendation. We originally planned to employ existing models but realized that they process a math word problem as a sequence or a homogeneous graph of tokens, ignoring the relationships between the multiple types of tokens such as entity, unit, rate, and number. We therefore decided to design and implement a novel model that uses such relational data to bridge the information gap between human-readable language and machine-understandable logical form. We propose a heterogeneous line graph transformer (HLGT) model that constructs a heterogeneous line graph via semantic role labeling on math word problems and then performs node representation learning that is aware of edge types. We add numerical comparison as an auxiliary task to improve model training for real-world use. Experimental results show that the proposed model achieves better performance than existing models but remains far below human performance. Continued work on information utilization and knowledge discovery is needed to improve online learning systems.
