Virginia Dignum

Clash of the Explainers: Argumentation for Context-Appropriate Explanations

Dec 12, 2023

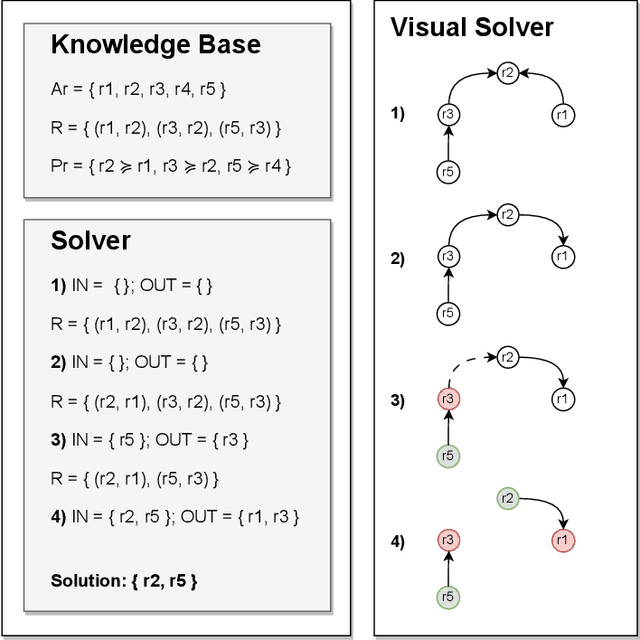

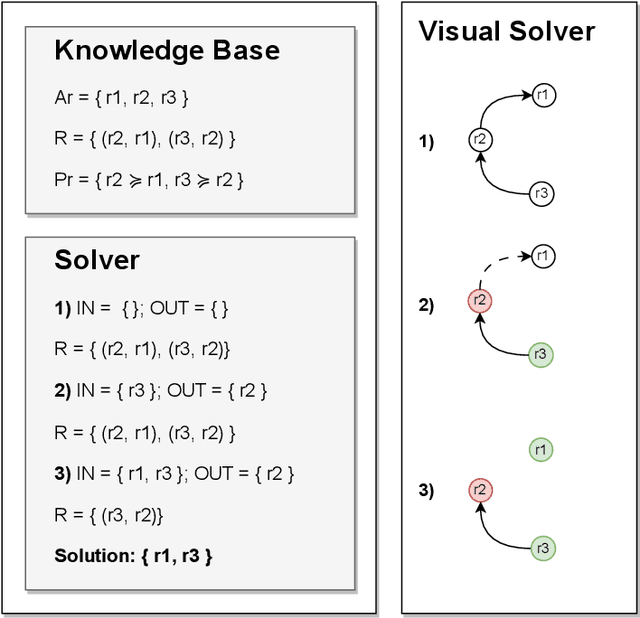

Abstract: Understanding when and why to apply any given eXplainable Artificial Intelligence (XAI) technique is not a straightforward task. There is no single approach that is best suited for a given context. This paper aims to address the challenge of selecting the most appropriate explainer given the context in which an explanation is required. For AI explainability to be effective, explanations and how they are presented need to be oriented towards the stakeholder receiving the explanation. If -- in general -- no single explanation technique surpasses the rest, then reasoning over the available methods is required in order to select one that is context-appropriate. Due to the transparency they afford, we propose employing argumentation techniques to reach an agreement over the most suitable explainers from a given set of possible explainers. In this paper, we propose a modular reasoning system consisting of a given mental model of the relevant stakeholder, a reasoner component that solves the argumentation problem generated by a multi-explainer component, and an AI model that is to be explained suitably to the stakeholder of interest. By formalising supporting premises -- and inferences -- we can map stakeholder characteristics to those of explanation techniques. This allows us to reason over the techniques and prioritise the best one for the given context, while also offering transparency into the selection decision.

Social AI and the Challenges of the Human-AI Ecosystem

Jun 23, 2023

Abstract: The rise of large-scale socio-technical systems in which humans interact with artificial intelligence (AI) systems (including assistants and recommenders, in short AIs) multiplies the opportunity for the emergence of collective phenomena and tipping points, with unexpected, possibly unintended, consequences. For example, navigation systems' suggestions may create chaos if too many drivers are directed on the same route, and personalised recommendations on social media may amplify polarisation, filter bubbles, and radicalisation. On the other hand, we may learn how to foster the "wisdom of crowds" and collective action effects to face social and environmental challenges. In order to understand the impact of AI on socio-technical systems and design next-generation AIs that team with humans to help overcome societal problems rather than exacerbate them, we propose to build the foundations of Social AI at the intersection of Complex Systems, Network Science and AI. In this perspective paper, we discuss the main open questions in Social AI, outlining possible technical and scientific challenges and suggesting research avenues.

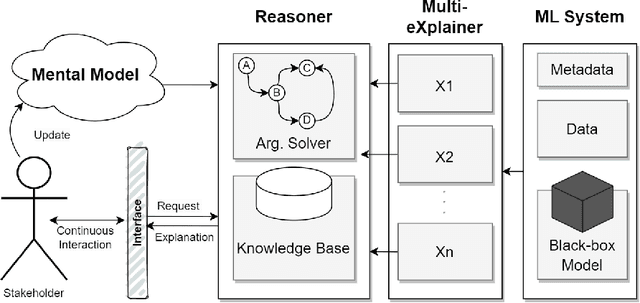

ACROCPoLis: A Descriptive Framework for Making Sense of Fairness

Apr 19, 2023

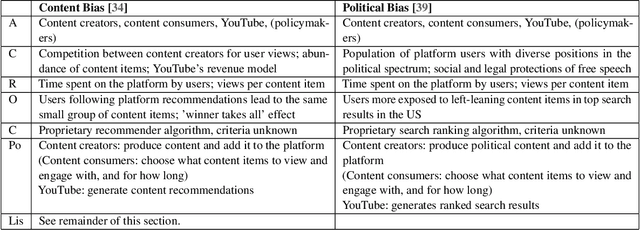

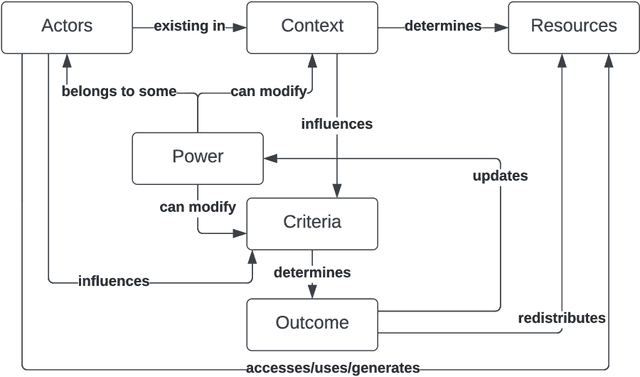

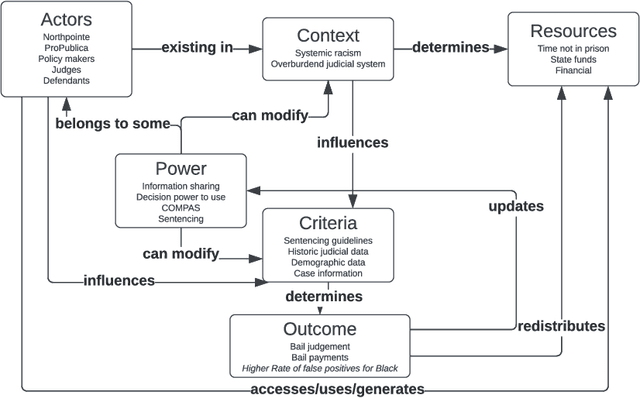

Abstract: Fairness is central to the ethical and responsible development and use of AI systems, with a large number of frameworks and formal notions of algorithmic fairness being available. However, many of the fairness solutions proposed revolve around technical considerations and not the needs of and consequences for the most impacted communities. We therefore want to take the focus away from definitions and allow for the inclusion of societal and relational aspects to represent how the effects of AI systems impact and are experienced by individuals and social groups. In this paper, we do this by means of proposing the ACROCPoLis framework to represent allocation processes with a modeling emphasis on fairness aspects. The framework provides a shared vocabulary in which the factors relevant to fairness assessments for different situations and procedures are made explicit, as well as their interrelationships. This enables us to compare analogous situations, to highlight the differences in dissimilar situations, and to capture differing interpretations of the same situation by different stakeholders.

Let it RAIN for Social Good

Jul 26, 2022

Abstract: Artificial Intelligence (AI) as a highly transformative technology takes on a special role as both an enabler and a threat to UN Sustainable Development Goals (SDGs). AI Ethics and emerging high-level policy efforts stand at the pivot point between these outcomes but are barred from effect due to the abstraction gap between high-level values and responsible action. In this paper the Responsible Norms (RAIN) framework is presented, bridging this gap and thereby enabling effective high-level control of AI impact. With effective and operationalized AI Ethics, AI technologies can be directed towards global sustainable development.

Responsible Artificial Intelligence -- from Principles to Practice

May 22, 2022

Abstract: The impact of Artificial Intelligence does not depend only on fundamental research and technological developments, but for a large part on how these systems are introduced into society and used in everyday situations. AI is changing the way we work, live and solve challenges but concerns about fairness, transparency or privacy are also growing. Ensuring responsible, ethical AI is more than designing systems whose result can be trusted. It is about the way we design them, why we design them, and who is involved in designing them. In order to develop and use AI responsibly, we need to work towards technical, societal, institutional and legal methods and tools which provide concrete support to AI practitioners, as well as awareness and training to enable participation of all, to ensure the alignment of AI systems with our societies' principles and values.

Relational Artificial Intelligence

Feb 04, 2022

Abstract: The impact of Artificial Intelligence does not depend only on fundamental research and technological developments, but for a large part on how these systems are introduced into society and used in everyday situations. Even though AI is traditionally associated with rational decision making, understanding and shaping the societal impact of AI in all its facets requires a relational perspective. A rational approach to AI, where computational algorithms drive decision making independent of human intervention, insights and emotions, has been shown to result in bias and exclusion, laying bare societal vulnerabilities and insecurities. A relational approach, that focuses on the relational nature of things, is needed to deal with the ethical, legal, societal, cultural, and environmental implications of AI. A relational approach to AI recognises that objective and rational reasoning does not always result in the 'right' way to proceed because what is 'right' depends on the dynamics of the situation in which the decision is taken, and that rather than solving ethical problems the focus of design and use of AI must be on asking the ethical question. In this position paper, I start with a general discussion of current conceptualisations of AI followed by an overview of existing approaches to governance and responsible development and use of AI. Then, I reflect over what should be the bases of a social paradigm for AI and how this should be embedded in relational, feminist and non-Western philosophies, in particular the Ubuntu philosophy.

Modelling Human Routines: Conceptualising Social Practice Theory for Agent-Based Simulation

Dec 22, 2020

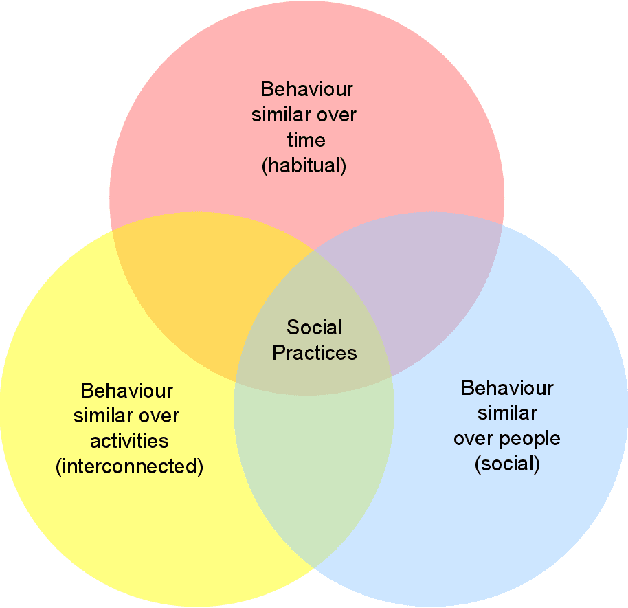

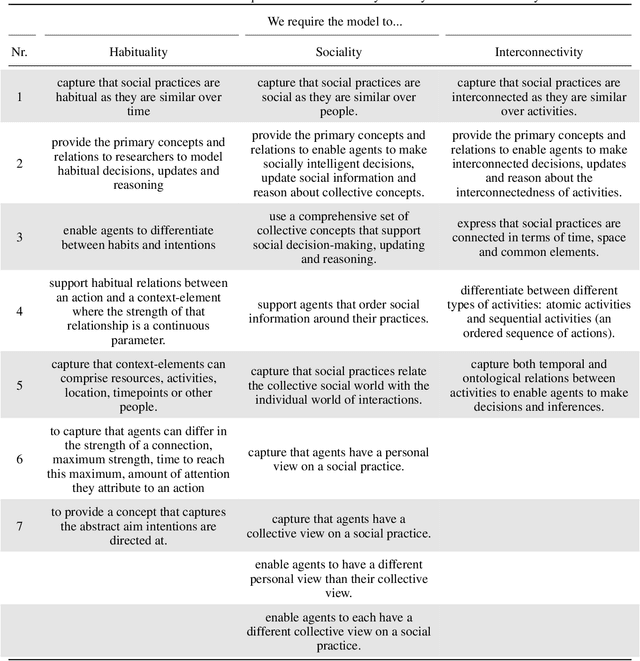

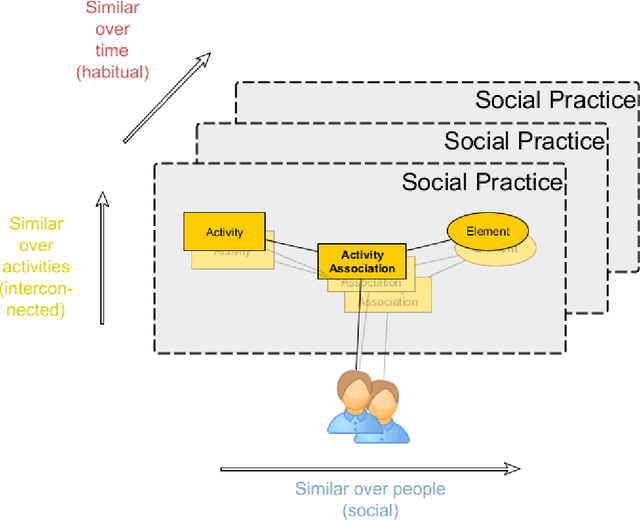

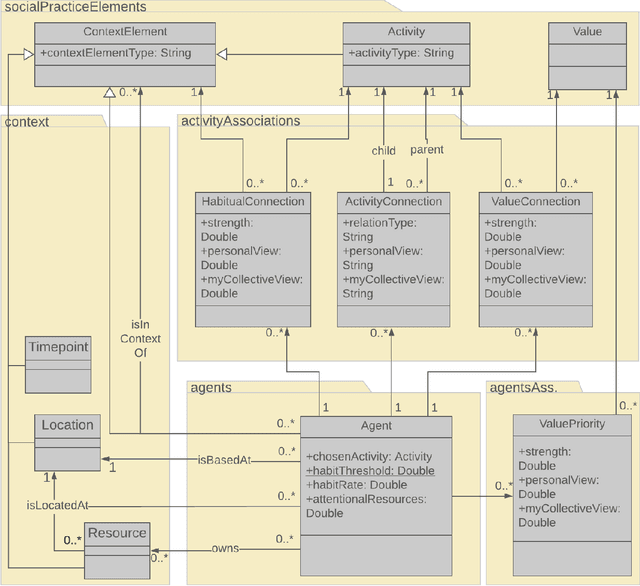

Abstract: Our routines play an important role in a wide range of social challenges such as climate change, disease outbreaks and coordinating staff and patients in a hospital. To use agent-based simulations (ABS) to understand the role of routines in social challenges we need an agent framework that integrates routines. This paper provides the domain-independent Social Practice Agent (SoPrA) framework that satisfies requirements from the literature to simulate our routines. By choosing the appropriate concepts from the literature on agent theory, social psychology and social practice theory we ensure SoPrA correctly depicts current evidence on routines. By creating a consistent, modular and parsimonious framework suitable for multiple domains we enhance the usability of SoPrA. SoPrA provides ABS researchers with a conceptual, formal and computational framework to simulate routines and gain new insights into social systems.

Contestable Black Boxes

Jun 30, 2020

Abstract: The right to contest a decision with consequences on individuals or the society is a well-established democratic right. Despite this right also being explicitly included in GDPR in reference to automated decision-making, its study seems to have received much less attention in the AI literature compared, for example, to the right to explanation. This paper investigates the type of assurances that are needed in the contesting process when algorithmic black-boxes are involved, opening new questions about the interplay of contestability and explainability. We argue that specialised complementary methodologies to evaluate automated decision-making in the case of a particular decision being contested need to be developed. Further, we propose a combination of well-established software engineering and rule-based approaches as a possible socio-technical solution to the issue of contestability, one of the new democratic challenges posed by the automation of decision making.

Improving Confidence in the Estimation of Values and Norms

Apr 02, 2020

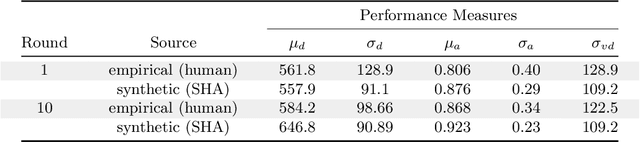

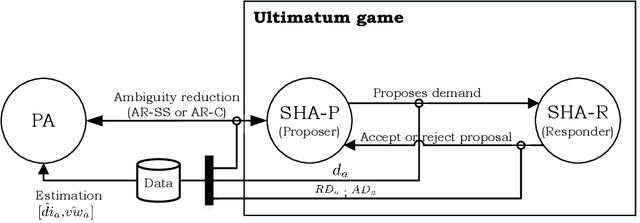

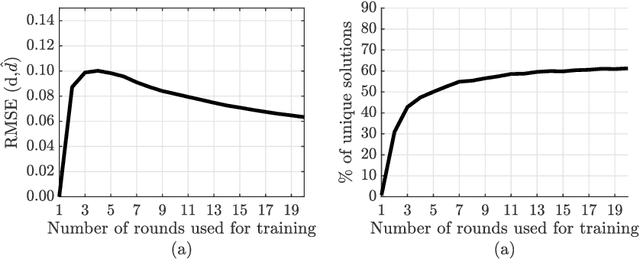

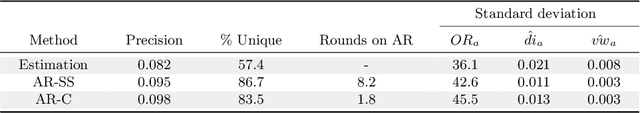

Abstract: Autonomous agents (AA) will increasingly be interacting with us in our daily lives. While we want the benefits attached to AAs, it is essential that their behavior is aligned with our values and norms. Hence, an AA will need to estimate the values and norms of the humans it interacts with, which is not a straightforward task when solely observing an agent's behavior. This paper analyses to what extent an AA is able to estimate the values and norms of a simulated human agent (SHA) based on its actions in the ultimatum game. We present two methods to reduce ambiguity in profiling the SHAs: one based on search space exploration and another based on counterfactual analysis. We found that both methods are able to increase the confidence in estimating human values and norms, but differ in their applicability, the latter being more efficient when the number of interactions with the agent is to be minimized. These insights are useful to improve the alignment of AAs with human values and norms.

Bias in Machine Learning -- What is it Good for?

Apr 01, 2020

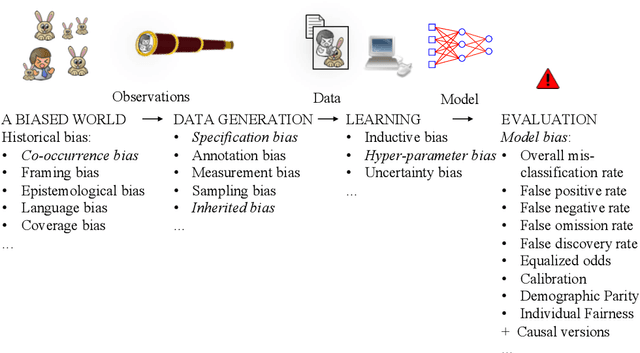

Abstract: In public media as well as in scientific publications, the term *bias* is used in conjunction with machine learning in many different contexts, and with many different meanings. This paper proposes a taxonomy of these different meanings, terminology, and definitions by surveying the, primarily scientific, literature on machine learning. In some cases, we suggest extensions and modifications to promote a clear terminology and completeness. The survey is followed by an analysis and discussion on how different types of biases are connected and depend on each other. We conclude that there is a complex relation between bias occurring in the machine learning pipeline that leads to a model, and the eventual bias of the model (which is typically related to social discrimination). The former bias may or may not influence the latter, in a sometimes bad, and sometimes good way.
