Alistair Knott

A linguistic analysis of undesirable outcomes in the era of generative AI

Oct 16, 2024

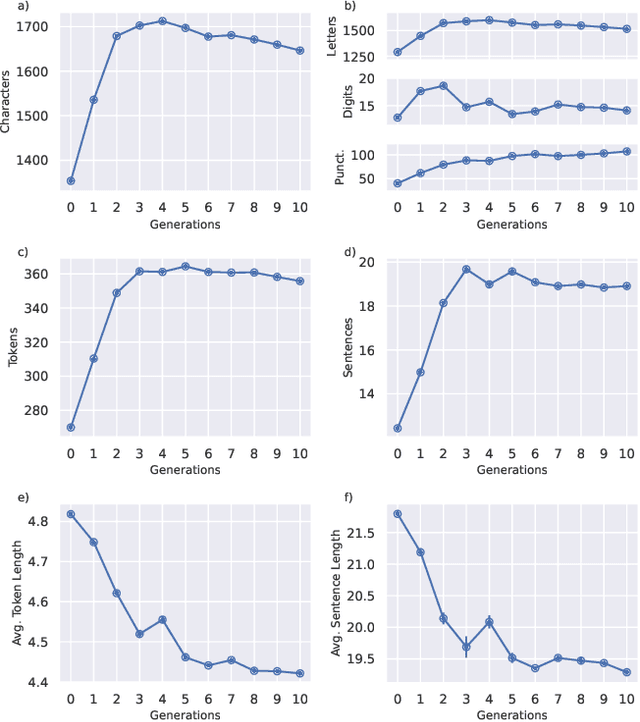

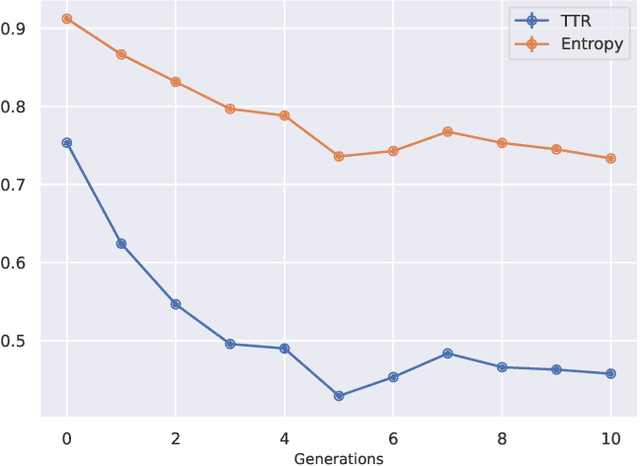

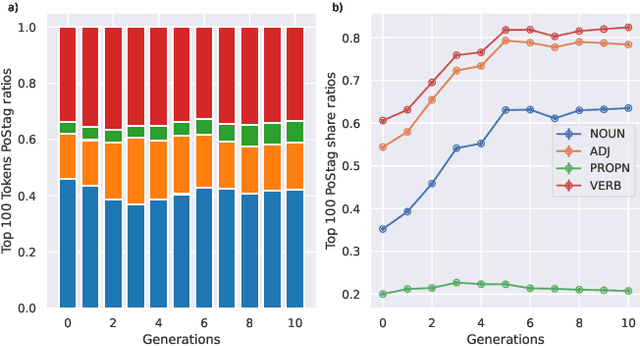

Abstract: Recent research has focused on the medium- and long-term impacts of generative AI, which poses scientific and societal challenges mainly concerning the detection and reliability of machine-generated information, projected to soon form the majority of content on the Web. Prior studies show that LLMs exhibit lower performance in generation tasks (model collapse) as they undergo a fine-tuning process across multiple generations on their own generated content (self-consuming loop). In this paper, we present a comprehensive simulation framework built upon the chat version of LLama2, focusing particularly on the linguistic aspects of the generated content, which have not been fully examined in existing studies. Our results show that the model produces less lexically rich content across generations, reducing diversity. Lexical richness has been measured using the linguistic measures of entropy and type-token ratio (TTR), as well as by calculating POS tag frequencies. The generated content has also been examined with an $n$-gram analysis, which takes word order into account, and with semantic networks, which consider the relations between different words. These findings suggest that model collapse occurs not only by decreasing content diversity but also by distorting the underlying linguistic patterns of the generated text; both effects highlight the critical importance of carefully choosing and curating the initial input text, which can alleviate the model collapse problem. Furthermore, we conduct a qualitative analysis of the fine-tuned models of the pipeline to compare their performance on generic NLP tasks to that of the original model. We find that autophagy transforms the initial model into a more creative, doubtful and confused one, which might provide inaccurate answers and include conspiracy theories in its responses, spreading false and biased information on the Web.
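As a rough illustration of two of the lexical richness measures named in this abstract, the sketch below computes a type-token ratio and the Shannon entropy of the word distribution. It is not the paper's implementation: the whitespace tokenizer, the function names, and the sample strings are all assumptions made for the example.

```python
import math
from collections import Counter


def tokenize(text):
    # Assumption: naive lowercased whitespace tokenization, for illustration only.
    return text.lower().split()


def type_token_ratio(tokens):
    # Number of distinct word types divided by the total number of tokens.
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)


def shannon_entropy(tokens):
    # Entropy in bits of the empirical word distribution.
    if not tokens:
        return 0.0
    total = len(tokens)
    counts = Counter(tokens)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


if __name__ == "__main__":
    # Hypothetical samples standing in for text from two model generations.
    varied = "the quick brown fox jumps over a lazy dog near the river"
    repetitive = "the model says the model says the model says the model"
    for name, sample in [("varied", varied), ("repetitive", repetitive)]:
        toks = tokenize(sample)
        print(name, round(type_token_ratio(toks), 3), round(shannon_entropy(toks), 3))
```

Under these definitions, text that reuses the same few words scores lower on both measures, which is the direction of change the abstract reports across generations. TTR is sensitive to text length, so comparisons are only meaningful between samples of similar size.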

Social AI and the Challenges of the Human-AI Ecosystem

Jun 23, 2023

Abstract: The rise of large-scale socio-technical systems in which humans interact with artificial intelligence (AI) systems (including assistants and recommenders, in short AIs) multiplies the opportunity for the emergence of collective phenomena and tipping points, with unexpected, possibly unintended, consequences. For example, navigation systems' suggestions may create chaos if too many drivers are directed on the same route, and personalised recommendations on social media may amplify polarisation, filter bubbles, and radicalisation. On the other hand, we may learn how to foster the "wisdom of crowds" and collective action effects to face social and environmental challenges. In order to understand the impact of AI on socio-technical systems and design next-generation AIs that team with humans to help overcome societal problems rather than exacerbate them, we propose to build the foundations of Social AI at the intersection of Complex Systems, Network Science and AI. In this perspective paper, we discuss the main open questions in Social AI, outlining possible technical and scientific challenges and suggesting research avenues.

Learning, Planning, and Control in a Monolithic Neural Event Inference Architecture

Sep 19, 2018

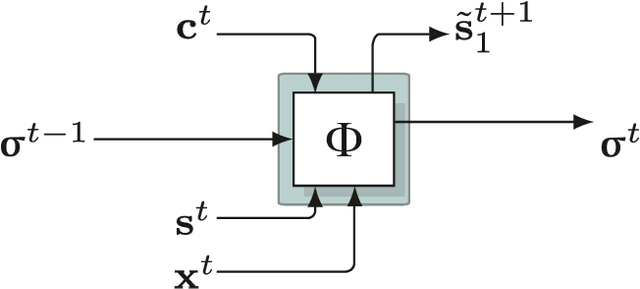

Abstract: We introduce a dynamic artificial neural network (ANN)-based adaptive inference process, which learns temporal predictive models of dynamical systems. We term the process REPRISE, a REtrospective and PRospective Inference SchEme. REPRISE retrospectively infers the unobservable contextual state that best explains its recently encountered sensorimotor experiences, as well as the accompanying, context-dependent temporal predictive models. Meanwhile, it executes prospective inference, optimizing upcoming motor activities in a goal-directed manner. In a first implementation, a recurrent neural network (RNN) is trained to learn a temporal forward model, which predicts the sensorimotor contingencies of different simulated dynamic vehicles. The RNN is augmented with contextual neurons, which enable the compact encoding of distinct but related sensorimotor dynamics. We show that REPRISE is able to concurrently learn to separate and approximate the encountered sensorimotor dynamics. Moreover, we show that REPRISE can exploit the learned model to induce goal-directed, model-predictive control, that is, approximate active inference: given a goal state, the system imagines a motor command sequence, optimizing it with the prospective objective of minimizing the distance to the given goal. Meanwhile, the system evaluates the encountered sensorimotor contingencies retrospectively, adapting its neural hidden states to maintain model coherence. The RNN activities thus continuously imagine the upcoming future and reflect on the recent past, optimizing both hidden state and motor activities. In conclusion, the combination of temporal predictive structures with modulatory, generative encodings offers a way to develop compact event codes, which selectively activate particular types of sensorimotor event-specific dynamics.

Anaphora and Discourse Structure

Sep 13, 2002

Abstract: We argue in this paper that many common adverbial phrases generally taken to signal a discourse relation between syntactically connected units within discourse structure, instead work anaphorically to contribute relational meaning, with only indirect dependence on discourse structure. This allows a simpler discourse structure to provide scaffolding for compositional semantics, and reveals multiple ways in which the relational meaning conveyed by adverbial connectives can interact with that associated with discourse structure. We conclude by sketching out a lexicalised grammar for discourse that facilitates discourse interpretation as a product of compositional rules, anaphor resolution and inference.
