Hannah Devinney

ACROCPoLis: A Descriptive Framework for Making Sense of Fairness

Apr 19, 2023

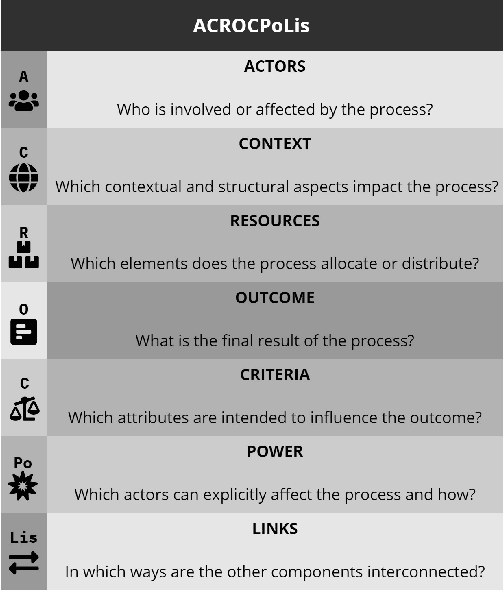

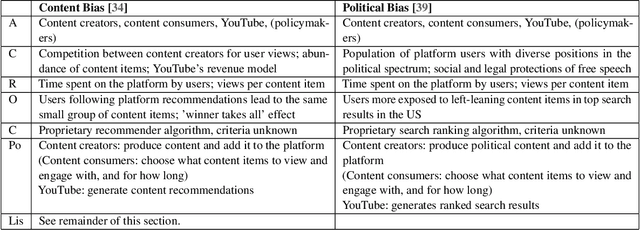

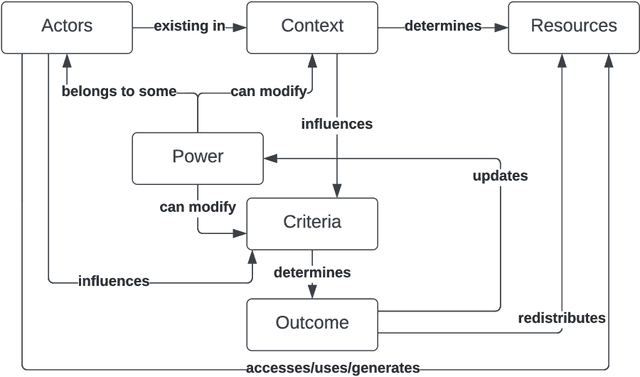

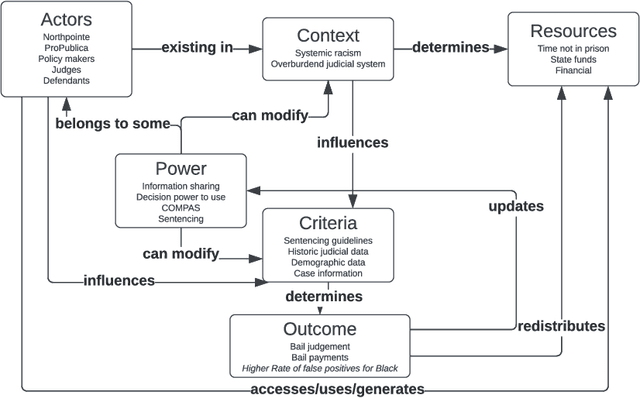

Abstract: Fairness is central to the ethical and responsible development and use of AI systems, with a large number of frameworks and formal notions of algorithmic fairness being available. However, many of the fairness solutions proposed revolve around technical considerations and not the needs of and consequences for the most impacted communities. We therefore want to take the focus away from definitions and allow for the inclusion of societal and relational aspects to represent how the effects of AI systems impact and are experienced by individuals and social groups. In this paper, we do this by means of proposing the ACROCPoLis framework to represent allocation processes with a modeling emphasis on fairness aspects. The framework provides a shared vocabulary in which the factors relevant to fairness assessments for different situations and procedures are made explicit, as well as their interrelationships. This enables us to compare analogous situations, to highlight the differences in dissimilar situations, and to capture differing interpretations of the same situation by different stakeholders.

Theories of "Gender" in NLP Bias Research

May 05, 2022

Abstract: The rise of concern around Natural Language Processing (NLP) technologies containing and perpetuating social biases has led to a rich and rapidly growing area of research. Gender bias is one of the central biases being analyzed, but to date there is no comprehensive analysis of how "gender" is theorized in the field. We survey nearly 200 articles concerning gender bias in NLP to discover how the field conceptualizes gender both explicitly (e.g. through definitions of terms) and implicitly (e.g. through how gender is operationalized in practice). In order to get a better idea of emerging trajectories of thought, we split these articles into two sections by time. We find that the majority of the articles do not make their theorization of gender explicit, even if they clearly define "bias." Almost none use a model of gender that is intersectional or inclusive of nonbinary genders; and many conflate sex characteristics, social gender, and linguistic gender in ways that disregard the existence and experience of trans, nonbinary, and intersex people. There is an increase between the two time-sections in statements acknowledging that gender is a complicated reality, however, very few articles manage to put this acknowledgment into practice. In addition to analyzing these findings, we provide specific recommendations to facilitate interdisciplinary work, and to incorporate theory and methodology from Gender Studies. Our hope is that this will produce more inclusive gender bias research in NLP.
