Suna Bensch

The experience of humans' and robots' mutual (im)politeness in enacted service scenarios: An empirical study

Jun 25, 2024

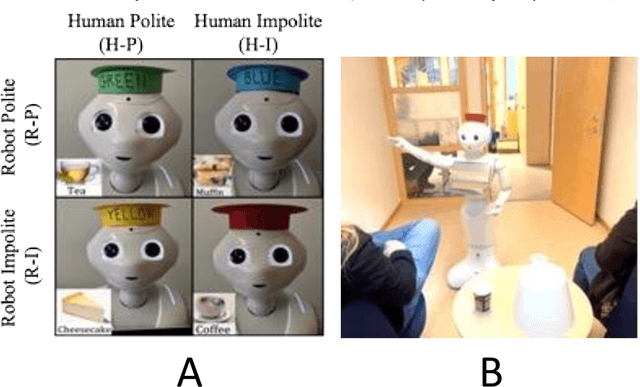

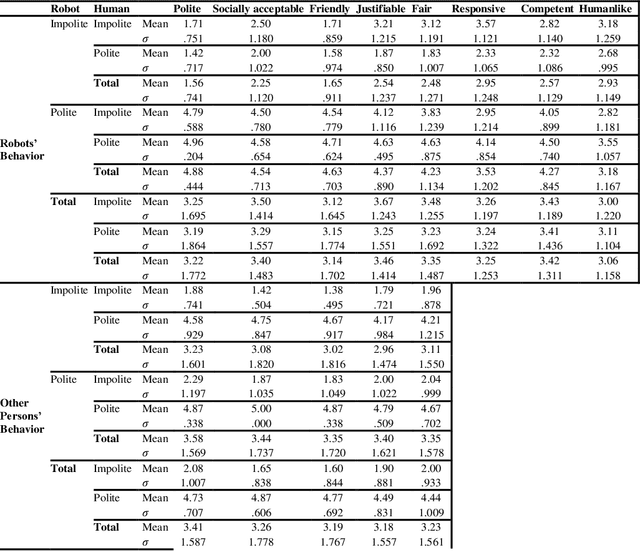

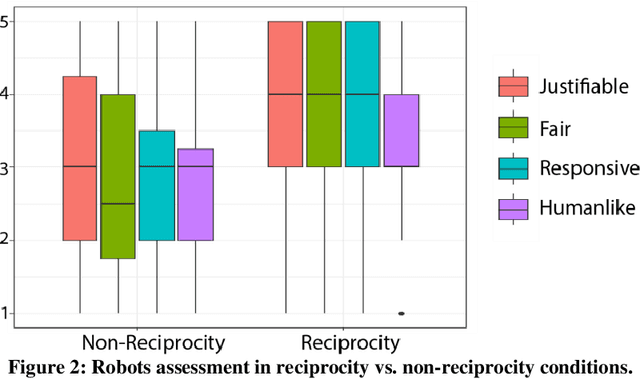

Abstract: The paper reports an empirical study of the effect of human treatment of a robot on the social perception of the robot's behavior. The study employed an enacted interaction between an anthropomorphic "waiter" robot and two customers. The robot and one of the customers (acted out by a researcher) were following four different interaction scripts, representing all combinations of mutual politeness and impoliteness of the robot and the customer. The participants (N=24, within-subject design) were assigned the role of an "included observer", that is, a fellow customer who was present in the situation without being actively involved in the interactions. The participants assessed how they experienced the interaction scenarios by providing Likert scale scores and free-text responses. The results indicate that while impolite robots' behavior was generally assessed negatively, it was commonly perceived as more justifiable and fairer if the robot was treated impolitely by the human. Politeness reciprocity expectations in the context of the social perception of robots are discussed.

Mining multi-modal communication patterns in interaction with explainable and non-explainable robots

Dec 22, 2023

Abstract: We investigate interaction patterns for humans interacting with explainable and non-explainable robots. Non-explainable robots are here robots that neither explain their actions or non-actions nor give any other feedback during interaction, in contrast to explainable robots. We video recorded and analyzed human behavior during a board game, where 20 humans verbally instructed either an explainable or non-explainable Pepper robot to move objects on the board. The transcriptions and annotations of the videos were transformed into transactions for association rule mining. Association rules discovered communication patterns in the interaction between the robots and the humans, and the most interesting rules were also tested with regular chi-square tests. Some statistically significant results are that there is a strong correlation between men and non-explainable robots and women and explainable robots, and that humans mirror some of the robot's modality. Our results also show that it is important to contextualize human interaction patterns, and that this can be easily done using association rules as an investigative tool. The presented results are important when designing robots that should adapt their behavior to become understandable for the interacting humans.
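As a rough illustration of what association rule mining over annotation transactions involves, the following is a minimal sketch and not the authors' pipeline. The transactions, the item names (`robot_explains`, `human_speaks`, and so on), the thresholds, and the helpers `support` and `mine_rules` are all hypothetical and chosen only for this example. It scores single-antecedent rules by support, confidence, and lift.

```python
from itertools import permutations

# Hypothetical toy transactions: each set holds the annotations observed
# in one interaction segment. These are NOT data from the study.
transactions = [
    {"robot_explains", "human_speaks"},
    {"robot_explains", "human_speaks", "human_gestures"},
    {"robot_silent", "human_gestures"},
    {"robot_silent", "human_speaks", "human_gestures"},
    {"robot_explains", "human_speaks"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item in itemset."""
    itemset = frozenset(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)


def mine_rules(transactions, min_support=0.3, min_confidence=0.8):
    """Return rules 'a -> c' as (a, c, support, confidence, lift) tuples.

    Only rules with a single antecedent and a single consequent are
    considered, which keeps the sketch short.
    """
    items = sorted(set().union(*transactions))
    rules = []
    for a, c in permutations(items, 2):
        s_ac = support({a, c}, transactions)
        if s_ac < min_support:
            continue
        confidence = s_ac / support({a}, transactions)
        if confidence < min_confidence:
            continue
        lift = confidence / support({c}, transactions)
        rules.append((a, c, s_ac, confidence, lift))
    return rules


rules = mine_rules(transactions)
for a, c, s, conf, lift in rules:
    print(f"{a} -> {c}: support={s:.2f}, confidence={conf:.2f}, lift={lift:.2f}")
```

A lift above 1 indicates that the antecedent and consequent co-occur more often than independence would predict; such rules are the natural candidates for a follow-up chi-square test.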

Conversational Norms for Human-Robot Dialogues

Mar 02, 2021

Abstract: This paper describes a recently initiated research project that aims to support the development of computerised dialogue systems that handle breaches of conversational norms such as the Gricean maxims, which describe how dialogue participants ideally form their utterances in order to be informative, relevant, brief, etc. Our approach is to model dialogue and norms with co-operating distributed grammar systems (CDGSs), and to develop methods to detect breaches and to handle them in dialogue systems for verbal human-robot interaction.

Probing Multimodal Embeddings for Linguistic Properties: the Visual-Semantic Case

Feb 22, 2021

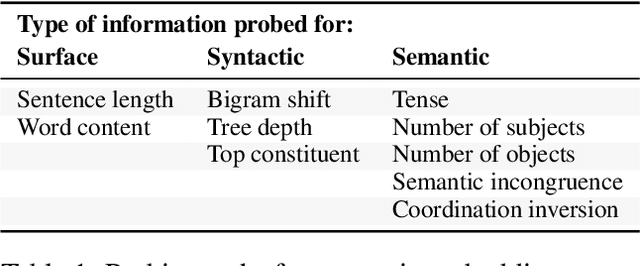

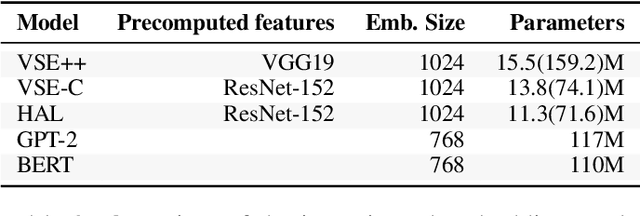

Abstract: Semantic embeddings have advanced the state of the art for countless natural language processing tasks, and various extensions to multimodal domains, such as visual-semantic embeddings, have been proposed. While the power of visual-semantic embeddings comes from the distillation and enrichment of information through machine learning, their inner workings are poorly understood and there is a shortage of analysis tools. To address this problem, we generalize the notion of probing tasks to the visual-semantic case. To this end, we (i) discuss the formalization of probing tasks for embeddings of image-caption pairs, (ii) define three concrete probing tasks within our general framework, (iii) train classifiers to probe for those properties, and (iv) compare various state-of-the-art embeddings under the lens of the proposed probing tasks. Our experiments reveal an up to 12% increase in accuracy on visual-semantic embeddings compared to the corresponding unimodal embeddings, which suggests that the text and image dimensions represented in the former do complement each other.

Bias in Machine Learning - What is it Good for?

Apr 01, 2020

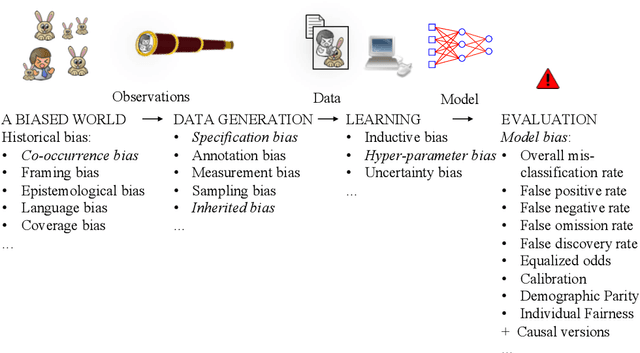

Abstract: In public media as well as in scientific publications, the term *bias* is used in conjunction with machine learning in many different contexts, and with many different meanings. This paper proposes a taxonomy of these different meanings, terminology, and definitions by surveying the, primarily scientific, literature on machine learning. In some cases, we suggest extensions and modifications to promote a clear terminology and completeness. The survey is followed by an analysis and discussion on how different types of biases are connected and depend on each other. We conclude that there is a complex relation between bias occurring in the machine learning pipeline that leads to a model, and the eventual bias of the model (which is typically related to social discrimination). The former bias may or may not influence the latter, in a sometimes bad, and sometimes good way.
