Shikhar Kumar

Improving robot understanding using conversational AI: demonstration and feasibility study

Jan 21, 2025

Abstract:Explanations constitute an important aspect of successful human-robot interactions and can enhance robot understanding. To improve the understanding of the robot, we have developed four levels of explanation (LOE) based on two questions: what needs to be explained, and why the robot has made a particular decision. An understandable robot requires a communicative action when there is a disparity between the human's mental model of the robot and the robot's state of mind. This communicative action was produced by using a conversational AI platform to generate explanations. An adaptive dialog was implemented to transition from one LOE to another. Here, we demonstrate the adaptive dialog in a collaborative task with errors and provide the results of a feasibility study with users.

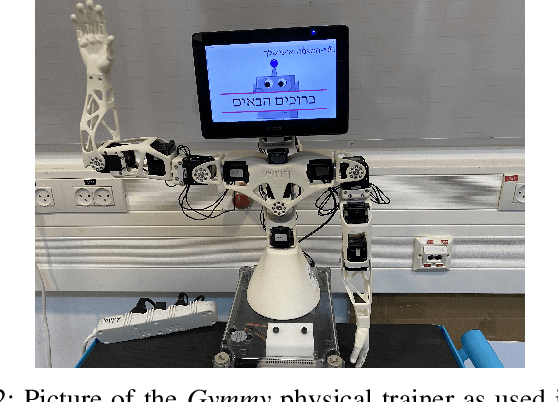

Levels of explanation -- implementation and evaluation of what and when for different time-sensitive tasks

Oct 30, 2024

Abstract:In this work, we focused on constructing and evaluating levels of explanation (LOE) that address two basic aspects of HRI: 1. What information should the robot communicate to the user? 2. When should the robot communicate this information? To construct the LOE, we defined two terms, verbosity and explanation patterns, each with two levels (verbosity: high and low; explanation patterns: dynamic and static). Based on these parameters, three different LOE (high, medium, and low) were constructed and evaluated in a user study with a telepresence robot. The user study was conducted for a simulated telerobotic healthcare task under two conditions related to time sensitivity, each evaluated by a different user group: one that performed the task within a time limit and the other with no time limit. We found that the high LOE was preferred in terms of adequacy of explanation, number of collisions, number of incorrect movements, and number of clarifications when users performed the experiment in the without-time-limit condition. We also found that the high and medium LOE did not differ significantly in completion time, fluency of HRI, or trust in the robot. When users performed the experiment in the with-time-limit condition, the high and medium LOE yielded better task performance and were preferred to the low LOE in terms of completion time, fluency, adequacy of explanation, trust, number of collisions, number of incorrect movements, and number of clarifications. Future directions for advancing LOE are discussed.

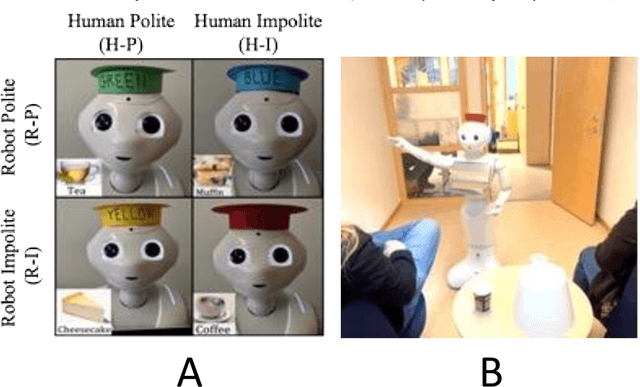

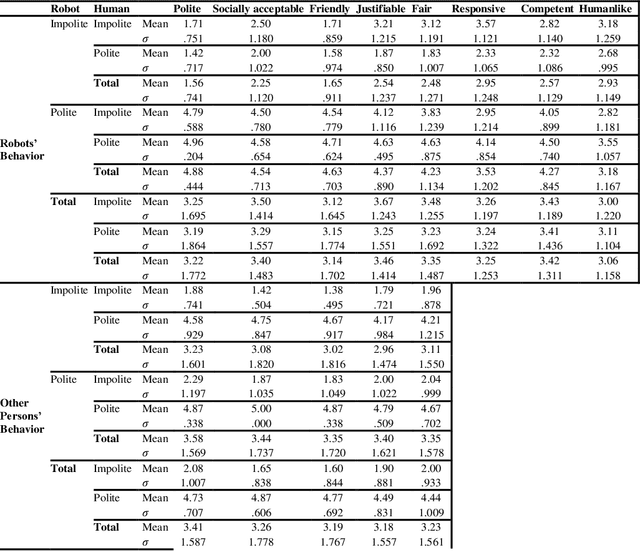

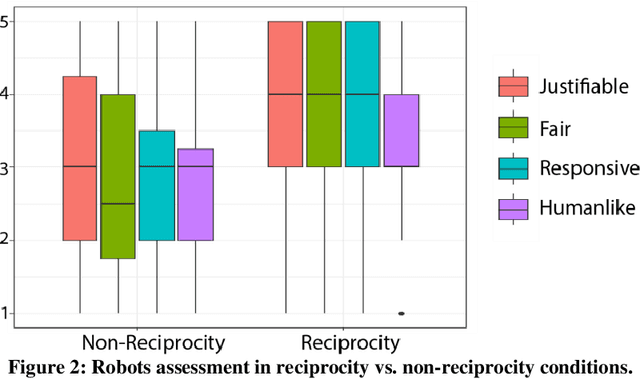

The experience of humans' and robots' mutual (im)politeness in enacted service scenarios: An empirical study

Jun 25, 2024

Abstract:The paper reports an empirical study of how human treatment of a robot affects the social perception of the robot's behavior. The study employed an enacted interaction between an anthropomorphic "waiter" robot and two customers. The robot and one of the customers (acted out by a researcher) followed four different interaction scripts, representing all combinations of mutual politeness and impoliteness of the robot and the customer. The participants (N=24, within-subject design) were assigned the role of an "included observer", that is, a fellow customer who was present in the situation without being actively involved in the interactions. The participants assessed how they experienced the interaction scenarios by providing Likert-scale scores and free-text responses. The results indicate that while impolite robot behavior was generally assessed negatively, it was commonly perceived as more justifiable and fairer if the robot had been treated impolitely by the human. Politeness reciprocity expectations in the context of the social perception of robots are discussed.

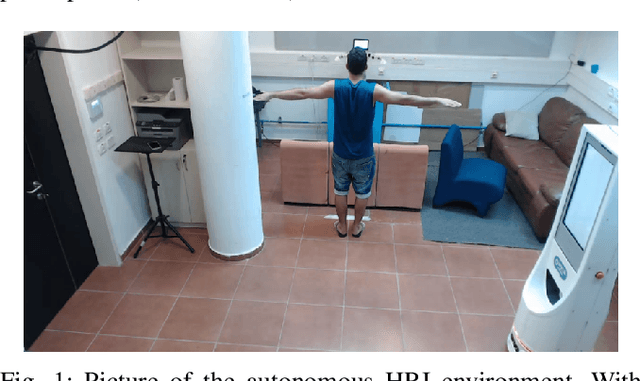

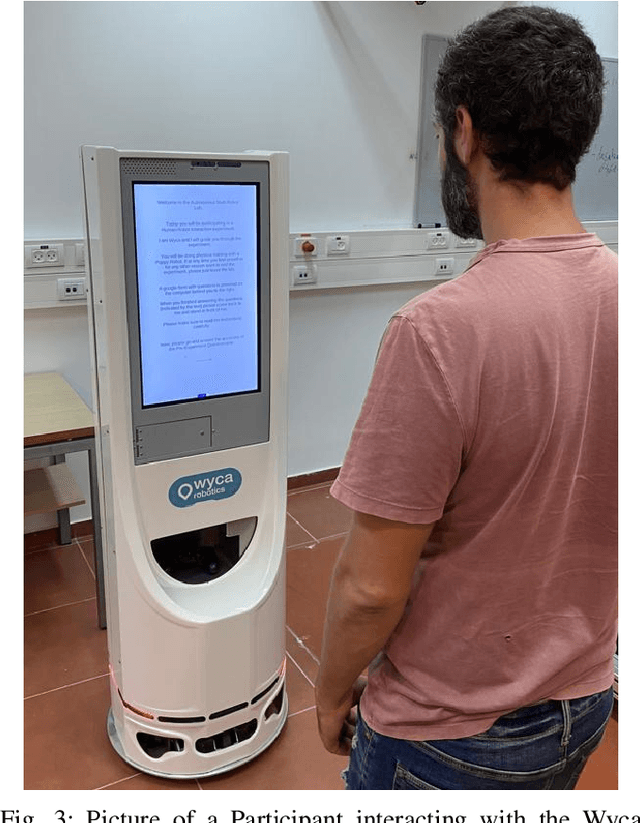

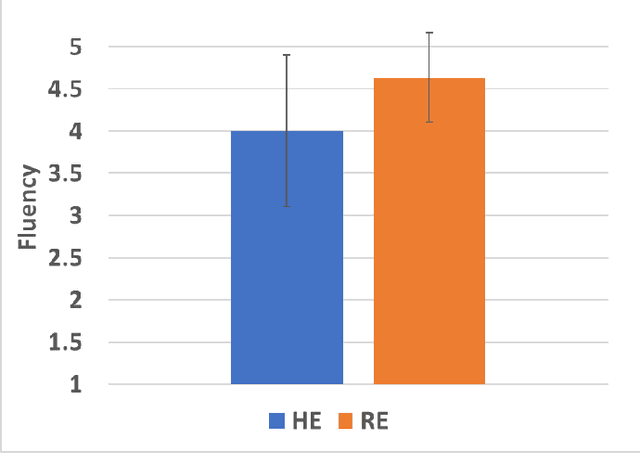

Can Robotic Experimenters help improve HRI Experiments? An Experimental Study

Nov 24, 2023

Abstract:To evaluate the design and skills of a robot or an algorithm for robotics, human-robot interaction user studies must be performed. Classically, these studies are conducted by human experimenters, which requires considerable effort and introduces variability and potential human error. In this paper, we investigate the use of robots in support of HRI experiments. Robots can perform repeated tasks accurately, thereby reducing human effort and improving validity through the reduction of error and variability between participants. To assess the potential for robot-led HRI experiments, we ran an HRI experiment with two participant groups, one led by a human experimenter and another led mostly by a robot experimenter. We show that replacing several repetitive experiment tasks with robots is not only possible but beneficial: trials performed by the robot experimenter had fewer errors and were more fluent. There was no statistically significant difference between the two groups in participants' perception of cognitive load, comfortability, enjoyment, safety, trust, and understandability. To the best of our knowledge, this is the first comparison between robot-led and human-led HRI experiments. The results suggest that robot experimenters can be beneficial and should be considered.

Understandable Robots

Sep 22, 2022

Abstract:Finally, the work will include an investigation of the contextual form of explanations. In this study, we will include a time-bounded scenario in which the different levels of understanding will be tested, so that we can evaluate which explanations are suitable and comprehensible. For this, we have proposed different levels of understanding (LOU). A user study will be designed to compare different LOU across different contexts of interaction. A user study simulating a hospital environment will be conducted.

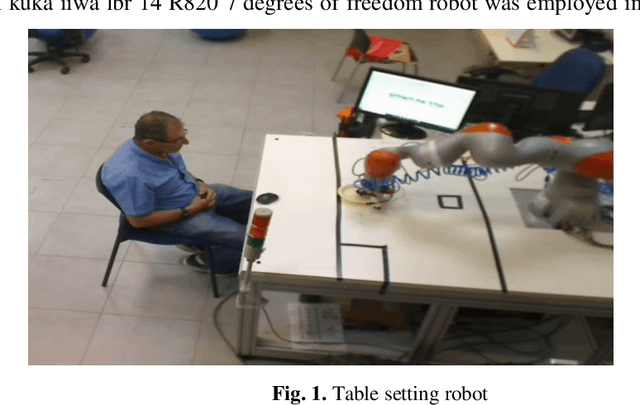

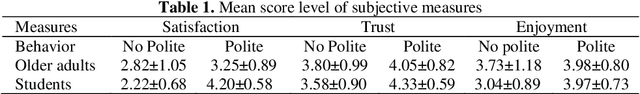

Exploratory evaluation of politeness in human-robot interaction

Mar 15, 2021

Abstract:Aiming to explore the impact of politeness on human-robot interaction, this study tested varying levels of politeness in a human-robot collaborative table-setting task. Polite behaviour was designed based on Lakoff's politeness rules. A graphical user interface offering three levels of politeness was developed for interacting with the robot, and an experiment was conducted with 20 older adults and 30 engineering students. Results indicated that the quality of interaction was influenced by politeness, as participants significantly preferred the polite mode of the robot. However, the older adults were less able to distinguish between the three politeness levels. Future studies should therefore include pre-experiment training to increase the older adults' familiarity with robotic technology. These studies should also include other permutations of Lakoff's politeness rules.