Tao Sheng

LAD-RAG: Layout-aware Dynamic RAG for Visually-Rich Document Understanding

Oct 08, 2025

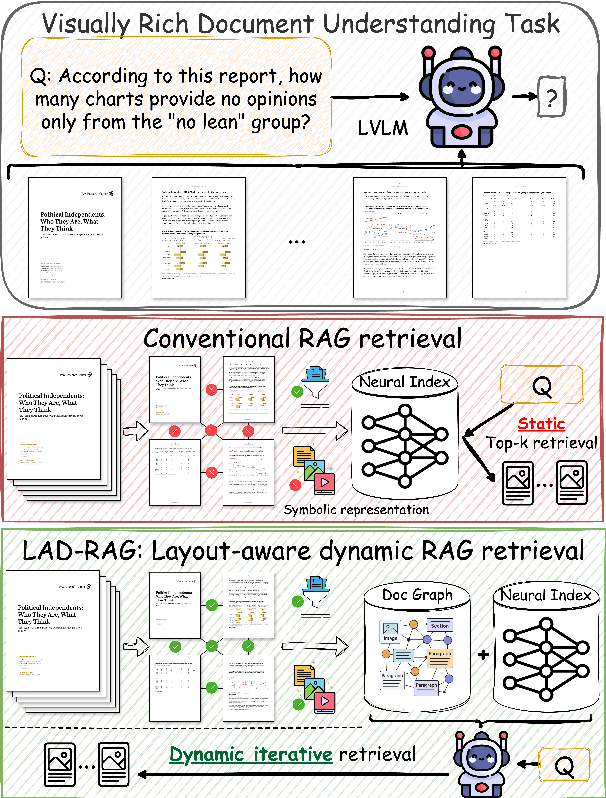

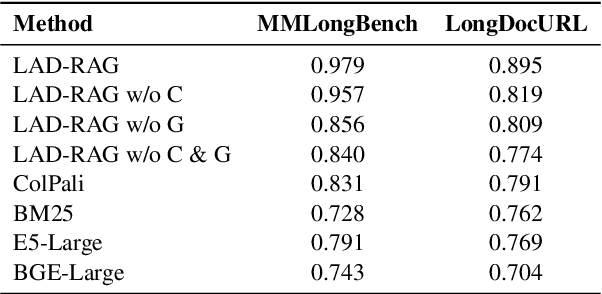

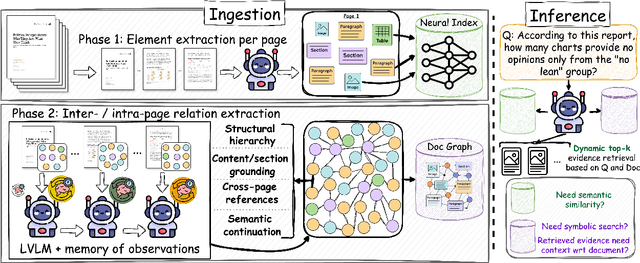

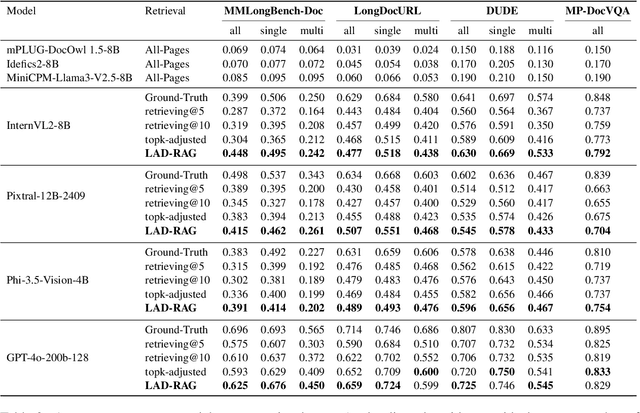

Abstract:Question answering over visually rich documents (VRDs) requires reasoning not only over isolated content but also over documents' structural organization and cross-page dependencies. However, conventional retrieval-augmented generation (RAG) methods encode content in isolated chunks during ingestion, losing structural and cross-page dependencies, and retrieve a fixed number of pages at inference, regardless of the specific demands of the question or context. This often results in incomplete evidence retrieval and degraded answer quality for multi-page reasoning tasks. To address these limitations, we propose LAD-RAG, a novel Layout-Aware Dynamic RAG framework. During ingestion, LAD-RAG constructs a symbolic document graph that captures layout structure and cross-page dependencies, adding it alongside standard neural embeddings to yield a more holistic representation of the document. During inference, an LLM agent dynamically interacts with the neural and symbolic indices to adaptively retrieve the necessary evidence based on the query. Experiments on MMLongBench-Doc, LongDocURL, DUDE, and MP-DocVQA demonstrate that LAD-RAG improves retrieval, achieving over 90% perfect recall on average without any top-k tuning, and outperforming baseline retrievers by up to 20% in recall at comparable noise levels, yielding higher QA accuracy with minimal latency.
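The "perfect recall" figure quoted above can be illustrated with a minimal sketch. It assumes that a question counts as perfectly recalled when every ground-truth evidence page appears among the retrieved pages; that reading, the function name `perfect_recall_rate`, and the page numbers are illustrative assumptions, not details taken from the abstract.

```python
from typing import List, Set


def perfect_recall_rate(retrieved: List[Set[int]], evidence: List[Set[int]]) -> float:
    """Fraction of questions whose evidence pages were all retrieved.

    Assumed definition: a question is a hit only if its evidence set is a
    subset of the retrieved set (hypothetical reading of "perfect recall").
    """
    if len(retrieved) != len(evidence):
        raise ValueError("retrieved and evidence must have one entry per question")
    if not evidence:
        return 0.0
    hits = sum(1 for got, gold in zip(retrieved, evidence) if gold <= got)
    return hits / len(evidence)


# Three hypothetical questions; the second one misses evidence page 7.
retrieved_pages = [{1, 2, 3}, {4}, {5, 6}]
evidence_pages = [{1, 2}, {4, 7}, {6}]
print(perfect_recall_rate(retrieved_pages, evidence_pages))
```

Under this definition, retrieving extra pages never lowers the score, which is why the abstract reports recall alongside noise levels.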

Weakly-Supervised Detection of Bone Lesions in CT

Jan 31, 2024

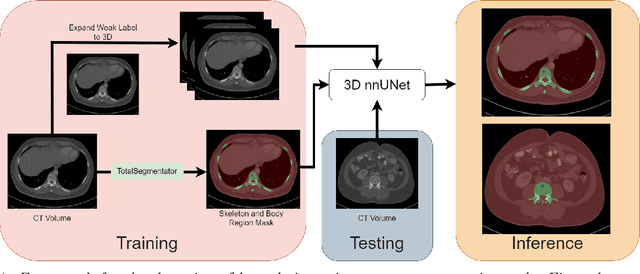

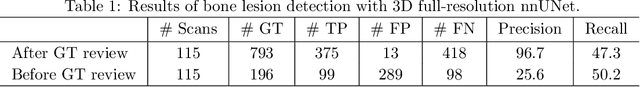

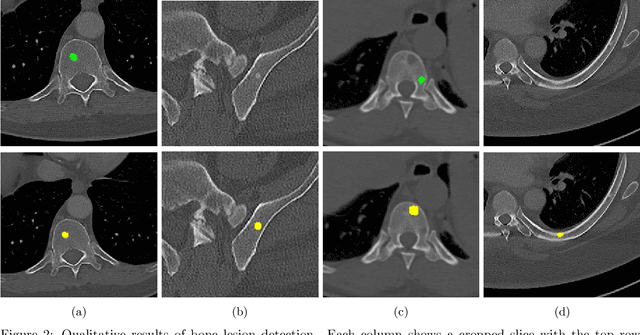

Abstract:The skeletal region is one of the common sites of metastatic spread of cancer in the breast and prostate. CT is routinely used to measure the size of lesions in the bones. However, they can be difficult to spot due to the wide variations in their sizes, shapes, and appearances. Precise localization of such lesions would enable reliable tracking of interval changes (growth, shrinkage, or unchanged status). To that end, an automated technique to detect bone lesions is highly desirable. In this pilot work, we developed a pipeline to detect bone lesions (lytic, blastic, and mixed) in CT volumes via a proxy segmentation task. First, we used the bone lesions that were prospectively marked by radiologists in a few 2D slices of CT volumes and converted them into weak 3D segmentation masks. Then, we trained a 3D full-resolution nnUNet model using these weak 3D annotations to segment the lesions and thereby detected them. Our automated method detected bone lesions in CT with a precision of 96.7% and recall of 47.3% despite the use of incomplete and partial training data. To the best of our knowledge, we are the first to attempt the direct detection of bone lesions in CT via a proxy segmentation task.

Chat-REC: Towards Interactive and Explainable LLMs-Augmented Recommender System

Apr 04, 2023

Abstract:Large language models (LLMs) have demonstrated significant potential across a wide range of application tasks. However, traditional recommender systems continue to face great challenges such as poor interactivity and explainability, which hinder their broad deployment in real-world systems. To address these limitations, this paper proposes a novel paradigm called Chat-Rec (ChatGPT Augmented Recommender System) that augments LLMs for building conversational recommender systems by converting user profiles and historical interactions into prompts. Chat-Rec is demonstrated to be effective in learning user preferences and establishing connections between users and products through in-context learning, which also makes the recommendation process more interactive and explainable. Moreover, within the Chat-Rec framework, users' preferences can transfer to different products for cross-domain recommendations, and prompt-based injection of information into LLMs can also handle cold-start scenarios with new items. In our experiments, Chat-Rec effectively improves the results of top-k recommendation and performs better in the zero-shot rating prediction task. Chat-Rec offers a novel approach to improving recommender systems and presents new practical scenarios for the implementation of AIGC (AI generated content) in recommender system studies.
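The idea of converting user profiles and historical interactions into prompts can be sketched as follows. The template wording, the function name `build_recommendation_prompt`, and the sample data are hypothetical; the abstract does not specify the actual prompt format.

```python
from typing import Dict, List


def build_recommendation_prompt(
    profile: Dict[str, str],
    history: List[str],
    candidates: List[str],
    top_k: int = 3,
) -> str:
    """Render a user profile, interaction history, and candidates as one prompt.

    The layout below is an illustrative assumption, not the paper's template.
    """
    profile_text = ", ".join(f"{key}: {value}" for key, value in profile.items())
    history_text = "\n".join(f"- {item}" for item in history)
    candidate_text = "\n".join(
        f"{index}. {item}" for index, item in enumerate(candidates, start=1)
    )
    return (
        f"User profile: {profile_text}\n"
        f"Items the user interacted with:\n{history_text}\n"
        f"Candidate items:\n{candidate_text}\n"
        f"Recommend the top {top_k} candidates for this user and explain each choice."
    )


# Hypothetical user and items, for illustration only.
prompt = build_recommendation_prompt(
    profile={"age": "30", "favorite genre": "sci-fi"},
    history=["Movie A", "Movie B"],
    candidates=["Movie C", "Movie D", "Movie E"],
    top_k=2,
)
print(prompt)
```

A new item can be handled the same way by adding its description to the candidate list, which is one plausible reading of the prompt-based cold-start handling mentioned above.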

Modeling Global Distribution for Federated Learning with Label Distribution Skew

Dec 17, 2022

Abstract:Federated learning achieves joint training of deep models by connecting decentralized data sources, which can significantly mitigate the risk of privacy leakage. However, in a more general case, the label distributions differ among clients, a situation called "label distribution skew". Directly applying conventional federated learning without considering the label distribution skew issue significantly hurts the performance of the global model. To this end, we propose a novel federated learning method, named FedMGD, to alleviate the performance degradation caused by the label distribution skew issue. It introduces a global Generative Adversarial Network to model the global data distribution without access to local datasets, so the global model can be trained using the global information of data distribution without privacy leakage. The experimental results demonstrate that our proposed method significantly outperforms the state-of-the-art on several public benchmarks. Code is available at https://github.com/Sheng-T/FedMGD.

ReCo: A Dataset for Residential Community Layout Planning

Jun 08, 2022

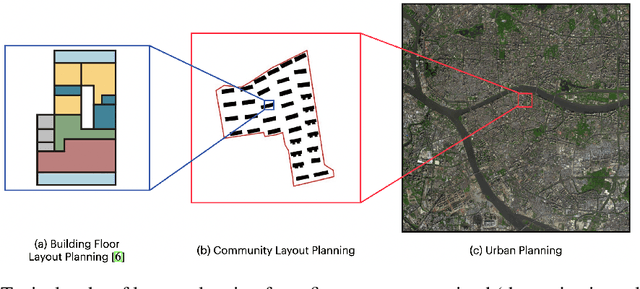

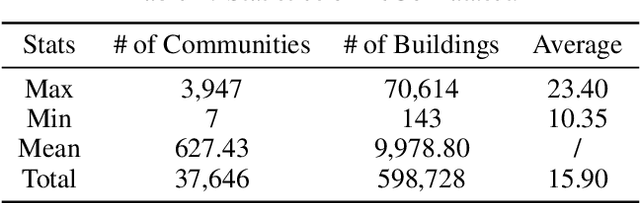

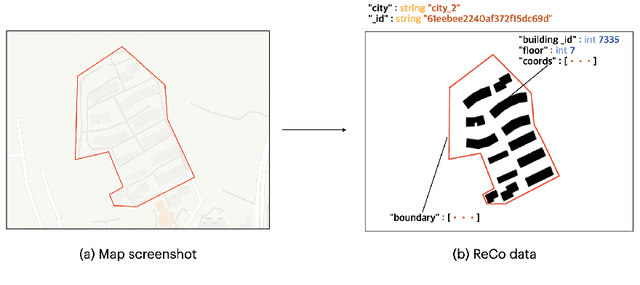

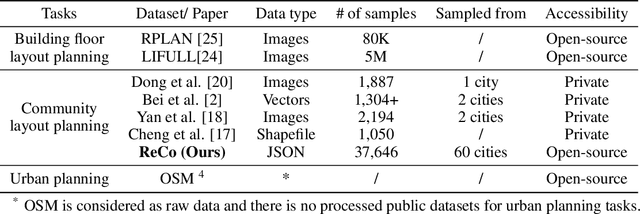

Abstract:Layout planning is centrally important in the field of architecture and urban design. Among the various basic units carrying urban functions, the residential community plays a vital part in supporting human life. Therefore, the layout planning of residential communities has always been of concern, and has attracted particular attention since the advent of deep learning, which facilitates automated layout generation and spatial pattern recognition. However, the research community generally suffers from a shortage of residential community layout benchmarks and high-quality datasets, which hampers the future exploration of data-driven methods for residential community layout planning. The lack of datasets is largely due to the difficulties of large-scale real-world residential data acquisition and long-term expert screening. In order to address these issues and advance a benchmark dataset for various intelligent spatial design and analysis applications in the development of smart cities, we introduce the Residential Community Layout Planning (ReCo) Dataset, which is the first and largest open-source vector dataset related to real-world communities to date. The ReCo Dataset is presented in multiple data formats with 37,646 residential community layout plans, covering 598,728 residential buildings with height information. ReCo can be conveniently adapted for residential community layout related urban design tasks, e.g., generative layout design, morphological pattern recognition and spatial evaluation. To validate the utility of ReCo in automated residential community layout planning, a Generative Adversarial Network (GAN) based generative model is further applied to the dataset. We expect the ReCo Dataset to inspire more creative and practical work in intelligent design and beyond. The ReCo Dataset is published at: https://www.kaggle.com/fdudsde/reco-dataset.

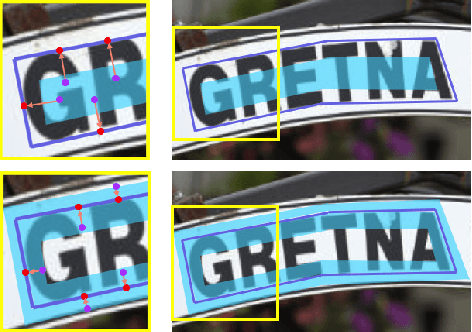

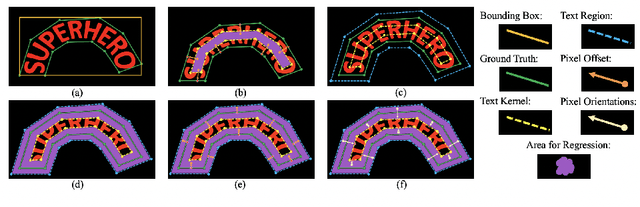

Bidirectional Regression for Arbitrary-Shaped Text Detection

Jul 13, 2021

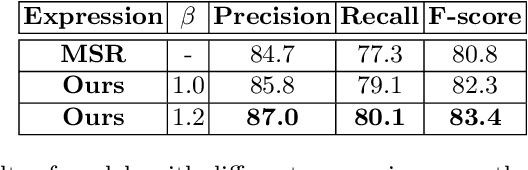

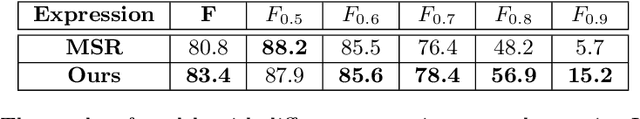

Abstract:Arbitrary-shaped text detection has recently attracted increasing interest and witnessed rapid development with the popularity of deep learning algorithms. Nevertheless, existing approaches often obtain inaccurate detection results, mainly due to their relatively weak ability to utilize context information and their inappropriate choice of offset references. This paper presents a novel text instance expression which integrates both foreground and background information into the pipeline, and naturally uses the pixels near text boundaries as the offset starting points. In addition, a corresponding post-processing algorithm is designed to sequentially combine the four prediction results and reconstruct the text instance accurately. We evaluate our method on several challenging scene text benchmarks, including both curved and multi-oriented text datasets. Experimental results demonstrate that the proposed approach obtains superior or competitive performance compared to other state-of-the-art methods, e.g., 83.4% F-score for Total-Text, 82.4% F-score for MSRA-TD500, etc.

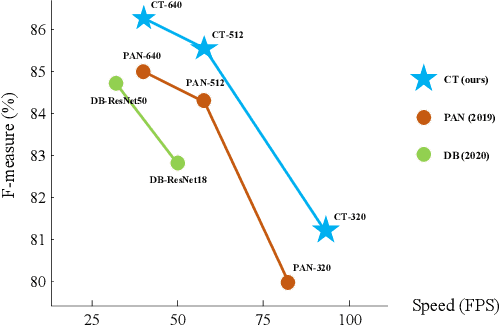

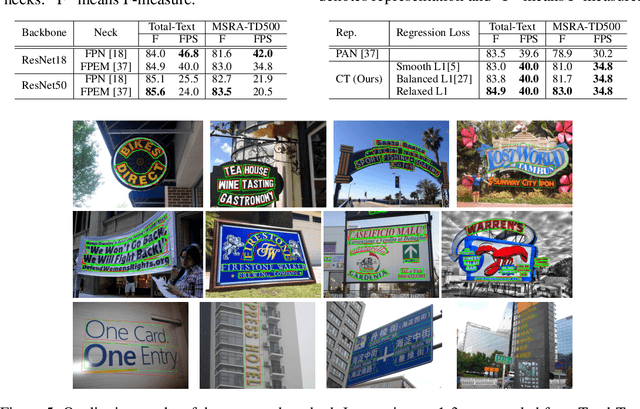

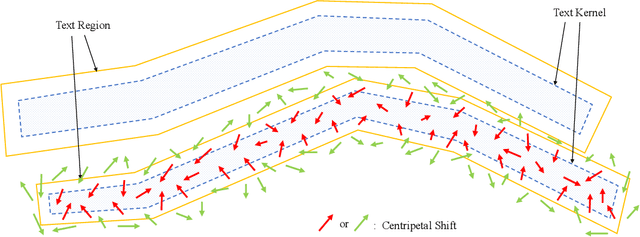

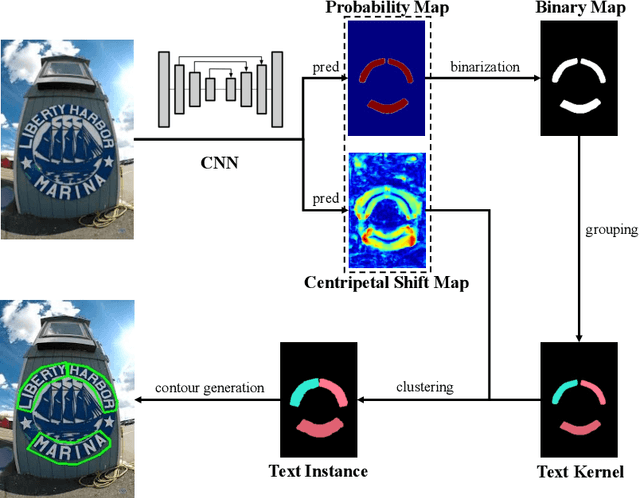

CentripetalText: An Efficient Text Instance Representation for Scene Text Detection

Jul 13, 2021

Abstract:Scene text detection remains a grand challenge due to the variation in text curvatures, orientations, and aspect ratios. One of the most intractable problems is how to represent text instances of arbitrary shapes. Although many state-of-the-art methods have been proposed to model irregular texts in a flexible manner, most of them lose simplicity and robustness. Their complicated post-processing and their regression under a Dirac delta distribution undermine the detection performance and the generalization ability. In this paper, we propose an efficient text instance representation named CentripetalText (CT), which decomposes text instances into the combination of text kernels and centripetal shifts. Specifically, we utilize the centripetal shifts to implement the pixel aggregation, guiding the external text pixels to the internal text kernels. The relaxation operation is integrated into the dense regression for centripetal shifts, allowing a prediction to be correct within a range rather than at a specific value. The convenient reconstruction of the text contours and the tolerance of prediction errors in our method guarantee high detection accuracy and fast inference speed, respectively. In addition, we shrink our text detector into a proposal generation module, namely the CentripetalText Proposal Network (CPN), replacing SPN in Mask TextSpotter v3 and producing more accurate proposals. To validate the effectiveness of our designs, we conduct experiments on several commonly used scene text benchmarks, including both curved and multi-oriented text datasets. For the task of scene text detection, our approach achieves superior or competitive performance compared to other existing methods, e.g., F-measure of 86.3% at 40.0 FPS on Total-Text, F-measure of 86.1% at 34.8 FPS on MSRA-TD500, etc. For the task of end-to-end scene text recognition, we outperform Mask TextSpotter v3 by 1.1% on Total-Text.
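The pixel aggregation step described above, in which centripetal shifts guide external text pixels to internal text kernels, can be sketched in plain Python. This is a simplified illustration on integer grids, not the authors' implementation: the function name `aggregate_pixels`, the integer shifts, and the toy inputs are assumptions.

```python
from typing import List, Tuple

Grid = List[List[int]]
ShiftMap = List[List[Tuple[int, int]]]


def aggregate_pixels(kernel_labels: Grid, text_mask: Grid, shifts: ShiftMap) -> Grid:
    """Give each text pixel the label of the kernel its shift lands on.

    kernel_labels: 0 for background, k > 0 for pixels of text kernel k.
    text_mask:     1 where a pixel belongs to some text region, else 0.
    shifts:        per-pixel (dy, dx) offset pointing toward a kernel.
    """
    height, width = len(text_mask), len(text_mask[0])
    instances = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if kernel_labels[y][x] > 0:
                # Kernel pixels keep their own label.
                instances[y][x] = kernel_labels[y][x]
                continue
            if not text_mask[y][x]:
                continue
            dy, dx = shifts[y][x]
            ty, tx = y + dy, x + dx
            # Any landing point inside a kernel is accepted, so the shift
            # only needs to be correct within a range, not exactly.
            if 0 <= ty < height and 0 <= tx < width:
                instances[y][x] = kernel_labels[ty][tx]
    return instances


# One-row toy example: two kernels (labels 1 and 2) with one gap pixel between.
kernels = [[0, 1, 0, 0, 0, 2, 0]]
mask = [[1, 1, 1, 0, 1, 1, 1]]
shift_map = [[(0, 1), (0, 0), (0, -1), (0, 0), (0, 1), (0, 0), (0, -1)]]
print(aggregate_pixels(kernels, mask, shift_map))
```

Text contours could then be traced around each labeled instance, which is the reconstruction step the abstract describes as convenient.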

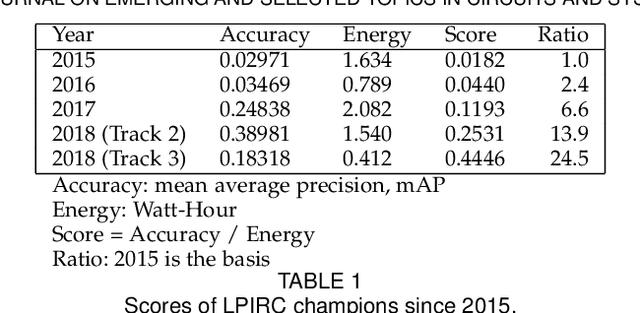

Low-Power Computer Vision: Status, Challenges, Opportunities

Apr 15, 2019

Abstract:Computer vision has achieved impressive progress in recent years. Meanwhile, mobile phones have become the primary computing platforms for millions of people. In addition to mobile phones, many autonomous systems rely on visual data for making decisions and some of these systems have limited energy (such as unmanned aerial vehicles also called drones and mobile robots). These systems rely on batteries and energy efficiency is critical. This article serves two main purposes: (1) Examine the state-of-the-art for low-power solutions to detect objects in images. Since 2015, the IEEE Annual International Low-Power Image Recognition Challenge (LPIRC) has been held to identify the most energy-efficient computer vision solutions. This article summarizes 2018 winners' solutions. (2) Suggest directions for research as well as opportunities for low-power computer vision.

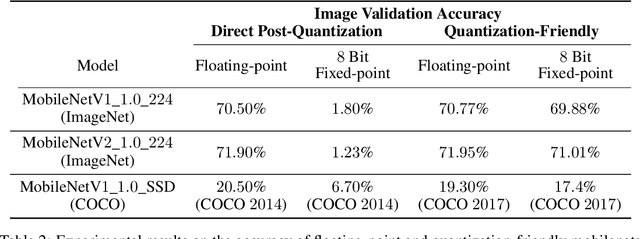

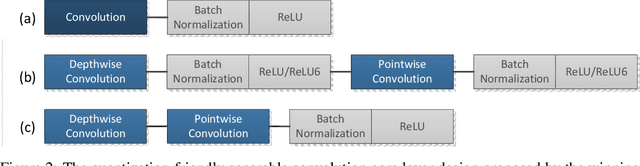

Low Power Inference for On-Device Visual Recognition with a Quantization-Friendly Solution

Mar 12, 2019

Abstract:The IEEE Low-Power Image Recognition Challenge (LPIRC) is an annual competition started in 2015 that encourages joint hardware and software solutions for computer vision systems with low latency and power. Track 1 of the competition in 2018 focused on the innovation of software solutions with fixed inference engine and hardware. This decision allows participants to submit models online and not worry about building and bringing custom hardware on-site, which attracted a historically large number of submissions. Among the diverse solutions, the winning solution proposed a quantization-friendly framework for MobileNets that achieves an accuracy of 72.67% on the holdout dataset with an average latency of 27ms on a single CPU core of Google Pixel2 phone, which is superior to the best real-time MobileNet models at the time.

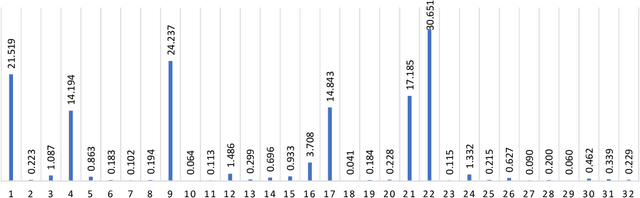

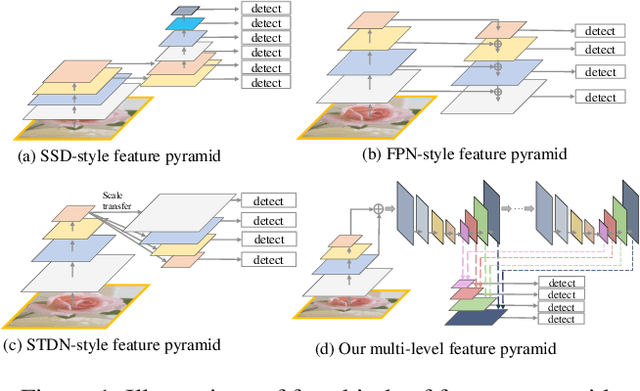

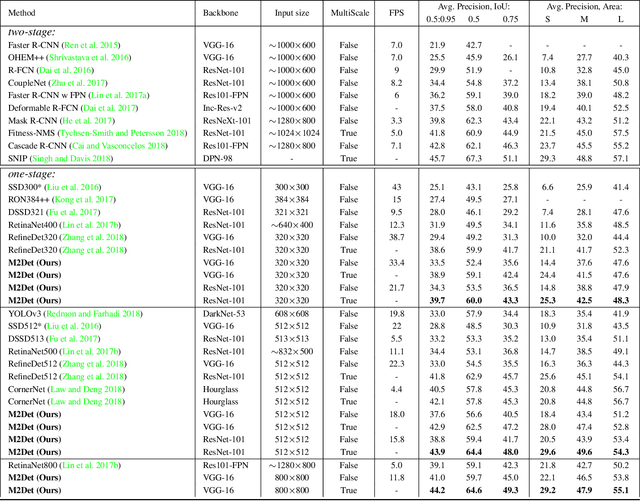

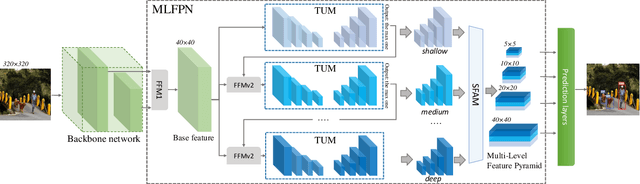

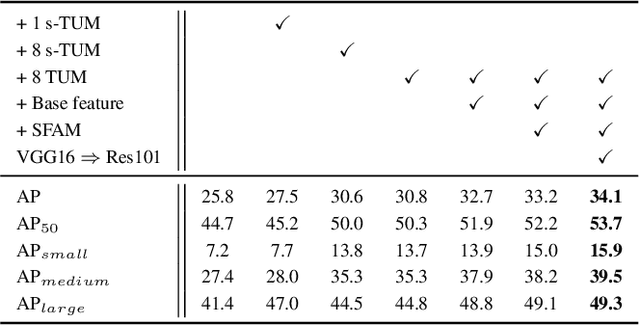

M2Det: A Single-Shot Object Detector based on Multi-Level Feature Pyramid Network

Nov 13, 2018

Abstract:Feature pyramids are widely exploited by both the state-of-the-art one-stage object detectors (e.g., DSSD, RetinaNet, RefineDet) and the two-stage object detectors (e.g., Mask R-CNN, DetNet) to alleviate the problem arising from scale variation across object instances. Although these object detectors with feature pyramids achieve encouraging results, they have some limitations because they simply construct the feature pyramid according to the inherent multi-scale, pyramidal architecture of the backbones, which are actually designed for the object classification task. In this work, we present a method called Multi-Level Feature Pyramid Network (MLFPN) to construct more effective feature pyramids for detecting objects of different scales. First, we fuse multi-level features (i.e., multiple layers) extracted by the backbone as the base feature. Second, we feed the base feature into a block of alternating joint Thinned U-shape Modules and Feature Fusion Modules and exploit the decoder layers of each U-shape module as the features for detecting objects. Finally, we gather up the decoder layers with equivalent scales (sizes) to develop a feature pyramid for object detection, in which every feature map consists of the layers (features) from multiple levels. To evaluate the effectiveness of the proposed MLFPN, we design and train a powerful end-to-end one-stage object detector we call M2Det by integrating it into the architecture of SSD, which gets better detection performance than state-of-the-art one-stage detectors. Specifically, on the MS-COCO benchmark, M2Det achieves an AP of 41.0 at a speed of 11.8 FPS with a single-scale inference strategy and an AP of 44.2 with a multi-scale inference strategy, which are the new state-of-the-art results among one-stage detectors. The code will be made available at https://github.com/qijiezhao/M2Det.
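The final step above, gathering decoder layers of equivalent scale from multiple levels into one pyramid, can be sketched with placeholder data. Feature maps are represented only by their spatial size and a list of channel names; the function name `build_pyramid`, the sizes, and the channel names are hypothetical and stand in for real tensors.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (spatial size, channel names) stands in for a real feature tensor.
FeatureMap = Tuple[int, List[str]]


def build_pyramid(levels: List[List[FeatureMap]]) -> Dict[int, List[str]]:
    """Concatenate same-scale decoder outputs from every level.

    levels[i] holds the decoder outputs of the i-th U-shape module,
    one feature map per scale.
    """
    pyramid: Dict[int, List[str]] = defaultdict(list)
    for level_outputs in levels:
        for size, channels in level_outputs:
            # Channel-wise concatenation, shallow levels first.
            pyramid[size].extend(channels)
    # Order the pyramid from the largest scale to the smallest.
    return dict(sorted(pyramid.items(), reverse=True))


# Two hypothetical levels, each producing maps at sizes 40 and 20.
decoder_outputs = [
    [(40, ["level1_c0"]), (20, ["level1_c0"])],
    [(40, ["level2_c0"]), (20, ["level2_c0"])],
]
print(build_pyramid(decoder_outputs))
```

Each entry of the resulting pyramid mixes channels from every level, matching the statement that every feature map consists of layers from multiple levels.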
