Zichen Fan

Helen

SQ-DM: Accelerating Diffusion Models with Aggressive Quantization and Temporal Sparsity

Jan 26, 2025

Abstract:Diffusion models have gained significant popularity in image generation tasks. However, generating high-quality content remains notably slow because it requires running model inference over many time steps. To accelerate these models, we propose to aggressively quantize both weights and activations, while simultaneously promoting significant activation sparsity. We further observe that the stated sparsity pattern varies among different channels and evolves across time steps. To support this quantization and sparsity scheme, we present a novel diffusion model accelerator featuring a heterogeneous mixed-precision dense-sparse architecture, channel-last address mapping, and a time-step-aware sparsity detector for efficient handling of the sparsity pattern. Our 4-bit quantization technique demonstrates superior generation quality compared to existing 4-bit methods. Our custom accelerator achieves 6.91x speed-up and 51.5% energy reduction compared to traditional dense accelerators.
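As a rough illustration of why low-bit quantization and activation sparsity can go together, the sketch below applies a generic symmetric uniform 4-bit quantizer to a handful of made-up activation values and measures how many of them collapse to zero. This is not the SQ-DM quantization scheme; the function names `quantize_symmetric` and `sparsity` and the sample values are assumptions chosen purely for illustration.

```python
def quantize_symmetric(values, bits=4):
    """Generic symmetric uniform quantizer (illustrative, not the paper's method).

    Maps floats to signed integer codes in [-qmax, qmax] using one scale
    derived from the largest magnitude in `values`.
    """
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit codes
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def sparsity(codes):
    """Fraction of quantized codes that are exactly zero."""
    return sum(1 for c in codes if c == 0) / len(codes)


# Hypothetical activation values: a few large entries, several near zero.
activations = [0.02, -0.01, 0.9, 0.0, -0.5, 0.03, 0.7, -0.04]
codes, scale = quantize_symmetric(activations, bits=4)
zero_fraction = sparsity(codes)
print(codes, zero_fraction)
```

With a coarse 4-bit grid, every value smaller than half a quantization step rounds to zero, so a hardware design that skips zero operands has more work to skip.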

Efficient Computation Sharing for Multi-Task Visual Scene Understanding

Mar 16, 2023

Abstract:Solving multiple visual tasks using individual models can be resource-intensive, while multi-task learning can conserve resources by sharing knowledge across different tasks. Despite the benefits of multi-task learning, such techniques can struggle with balancing the loss for each task, leading to potential performance degradation. We present a novel computation- and parameter-sharing framework that balances efficiency and accuracy to perform multiple visual tasks utilizing individually-trained single-task transformers. Our method is motivated by transfer learning schemes to reduce computational and parameter storage costs while maintaining the desired performance. Our approach involves splitting the tasks into a base task and the other sub-tasks, and sharing a significant portion of activations and parameters/weights between the base and sub-tasks to decrease inter-task redundancies and enhance knowledge sharing. The evaluation conducted on NYUD-v2 and PASCAL-context datasets shows that our method is superior to the state-of-the-art transformer-based multi-task learning techniques with higher accuracy and reduced computational resources. Moreover, our method is extended to video stream inputs, further reducing computational costs by efficiently sharing information across the temporal domain as well as the task domain. Our codes and models will be publicly available.

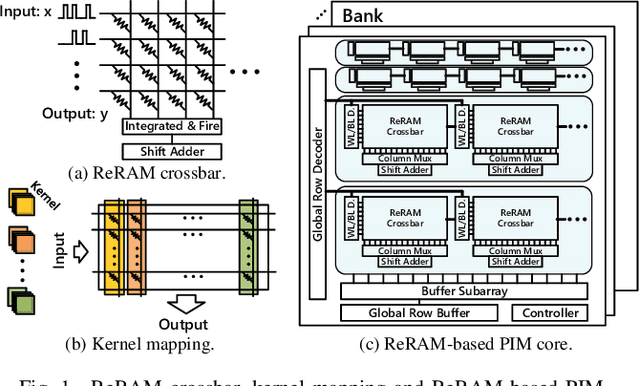

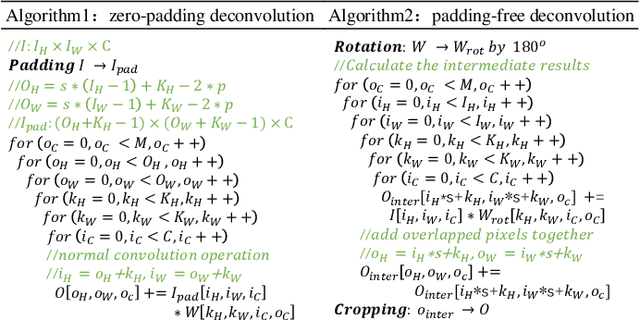

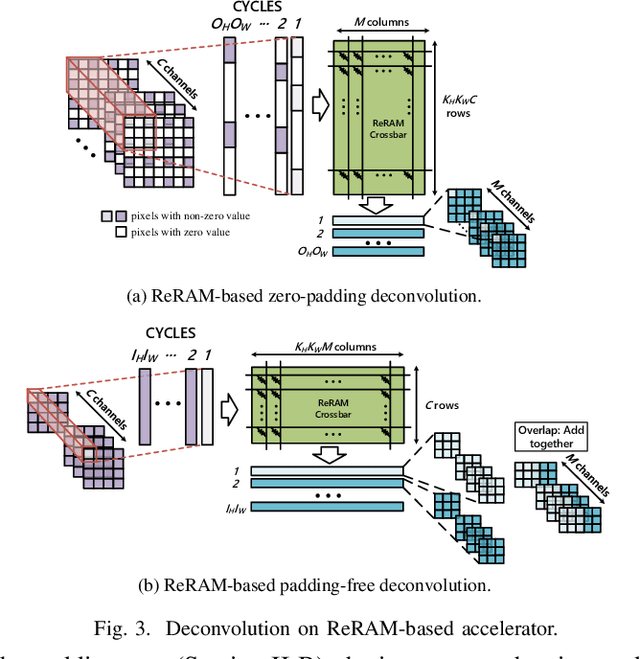

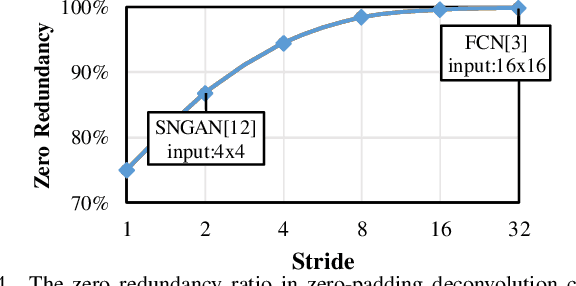

RED: A ReRAM-based Deconvolution Accelerator

Jul 05, 2019

Abstract:Deconvolution has become widespread in neural networks. For example, it is essential for performing unsupervised learning in generative adversarial networks or constructing fully convolutional networks for semantic segmentation. Resistive RAM (ReRAM)-based processing-in-memory architecture has been widely explored in accelerating convolutional computation and demonstrates good performance. Performing deconvolution on existing ReRAM-based accelerator designs, however, suffers from long latency and high energy consumption because deconvolutional computation includes not only convolution but also extra add-on operations. To realize more efficient execution of deconvolution, we analyze its computation requirement and propose a ReRAM-based accelerator design, namely, RED. More specifically, RED integrates two orthogonal methods: the pixel-wise mapping scheme for reducing redundancy caused by zero-inserting operations, and the zero-skipping data flow for increasing the computation parallelism and therefore improving performance. Experimental evaluations show that compared to the state-of-the-art ReRAM-based accelerator, RED can speed up operation 3.69x~1.15x and reduce 8%~88.36% energy consumption.
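To make the redundancy from zero insertion concrete, the following sketch computes a 1-D deconvolution (transposed convolution) two ways: by inserting zeros into the input and then running an ordinary convolution, and by scattering each input value directly into the output. It counts how many multiplications in the first approach involve a zero operand. This is a plain software illustration, not RED's pixel-wise mapping or zero-skipping data flow; all function names and the sample input are assumptions.

```python
def zero_insert(signal, stride):
    """Place (stride - 1) zeros between neighbouring input samples."""
    out = []
    for i, x in enumerate(signal):
        out.append(x)
        if i < len(signal) - 1:
            out.extend([0] * (stride - 1))
    return out


def deconv1d_zero_insert(signal, kernel, stride):
    """Deconvolution as zero insertion + full padding + sliding dot product.

    Returns the output plus the number of multiplications whose input
    operand is zero ("wasted") and the total number of multiplications.
    """
    k = len(kernel)
    padded = [0] * (k - 1) + zero_insert(signal, stride) + [0] * (k - 1)
    flipped = kernel[::-1]
    out, wasted, total = [], 0, 0
    for start in range(len(padded) - k + 1):
        acc = 0
        for j in range(k):
            total += 1
            if padded[start + j] == 0:
                wasted += 1
            acc += padded[start + j] * flipped[j]
        out.append(acc)
    return out, wasted, total


def deconv1d_direct(signal, kernel, stride):
    """Deconvolution by scattering each input sample; no zero operands."""
    out = [0] * ((len(signal) - 1) * stride + len(kernel))
    for i, x in enumerate(signal):
        for j, w in enumerate(kernel):
            out[i * stride + j] += x * w
    return out


# Hypothetical nonzero input, so every zero operand comes from insertion/padding.
signal, kernel, stride = [1, 2, 3], [1, 10, 100], 2
via_zeros, wasted, total = deconv1d_zero_insert(signal, kernel, stride)
direct = deconv1d_direct(signal, kernel, stride)
print(via_zeros, direct, wasted, total)
```

In this small case both routes give the same output, but 12 of the 21 multiplications in the zero-insertion route operate on inserted or padded zeros, which is the kind of redundant work a zero-aware mapping aims to avoid.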

Low-Power Computer Vision: Status, Challenges, Opportunities

Apr 15, 2019

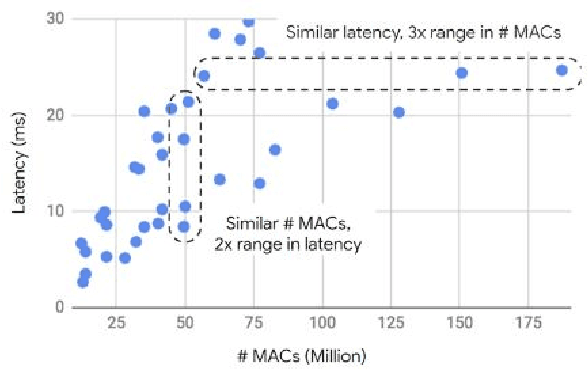

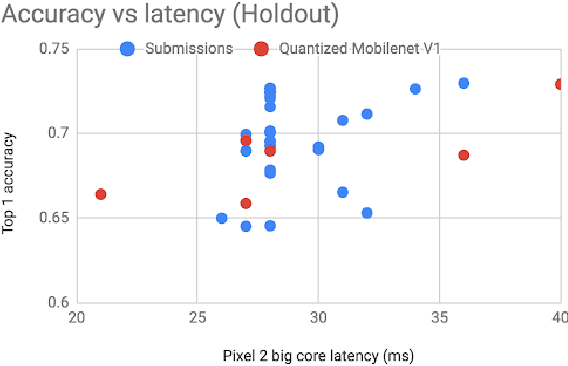

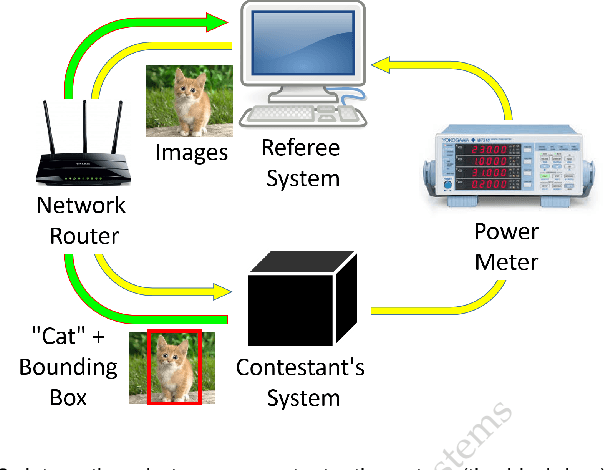

Abstract:Computer vision has achieved impressive progress in recent years. Meanwhile, mobile phones have become the primary computing platforms for millions of people. In addition to mobile phones, many autonomous systems rely on visual data for making decisions, and some of these systems have limited energy (such as unmanned aerial vehicles, also called drones, and mobile robots). These systems rely on batteries, so energy efficiency is critical. This article serves two main purposes: (1) Examine the state-of-the-art for low-power solutions to detect objects in images. Since 2015, the IEEE Annual International Low-Power Image Recognition Challenge (LPIRC) has been held to identify the most energy-efficient computer vision solutions. This article summarizes the 2018 winners' solutions. (2) Suggest directions for research as well as opportunities for low-power computer vision.

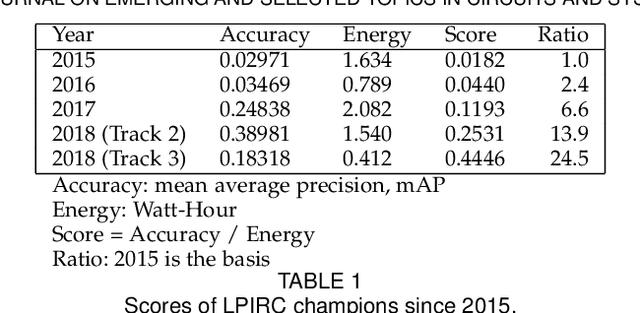

2018 Low-Power Image Recognition Challenge

Oct 03, 2018

Abstract:The Low-Power Image Recognition Challenge (LPIRC, https://rebootingcomputing.ieee.org/lpirc) is an annual competition started in 2015. The competition identifies the best technologies that can classify and detect objects in images efficiently (short execution time and low energy consumption) and accurately (high precision). Over the four years, the winners' scores have improved more than 24 times. As computer vision is widely used in many battery-powered systems (such as drones and mobile phones), the need for low-power computer vision will become increasingly important. This paper summarizes LPIRC 2018 by describing the three different tracks and the winners' solutions.
