Steven W. Chen

Large-scale Autonomous Flight with Real-time Semantic SLAM under Dense Forest Canopy

Sep 19, 2021

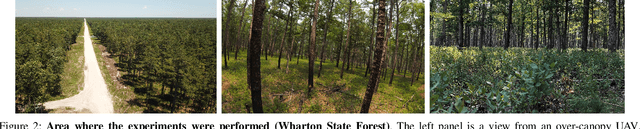

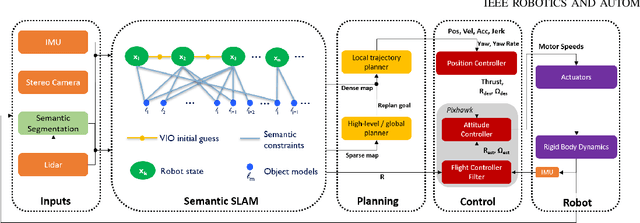

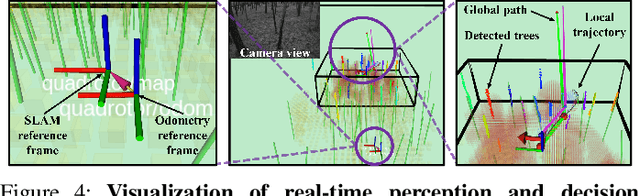

Abstract: In this letter, we propose an integrated autonomous flight and semantic SLAM system that can perform long-range missions and real-time semantic mapping in highly cluttered, unstructured, and GPS-denied under-canopy environments. First, tree trunks and ground planes are detected from LIDAR scans. We use a neural network and an instance extraction algorithm to enable semantic segmentation in real time onboard the UAV. Second, detected tree trunk instances are modeled as cylinders and associated across the whole LIDAR sequence. This semantic data association constrains both the robot poses and the trunk landmark models. The output of semantic SLAM is used in state estimation, planning, and control algorithms in real time. The global planner relies on a sparse map to plan the shortest path to the global goal, and the local trajectory planner uses a small but finely discretized robot-centric map to plan a dynamically feasible and collision-free trajectory to the local goal. Both the global path and local trajectory lead to drift-corrected goals, thus helping the UAV execute its mission accurately and safely.

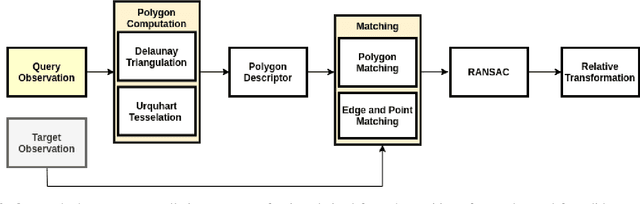

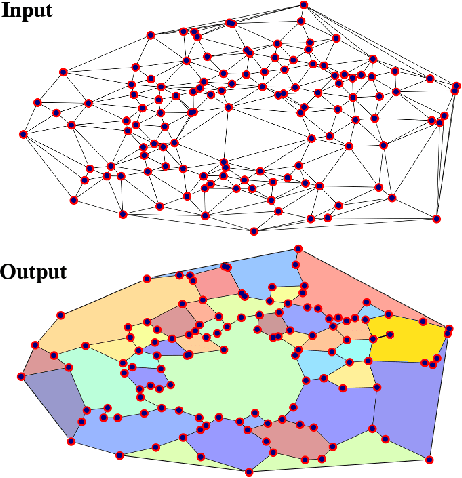

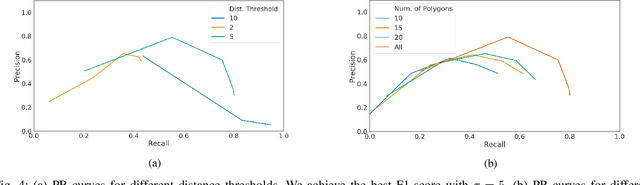

Place Recognition in Forests with Urquhart Tessellations

Sep 23, 2020

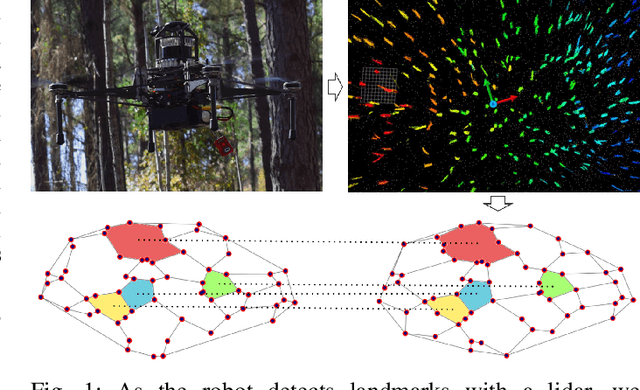

Abstract: In this letter, we present a novel descriptor based on polygons derived from Urquhart tessellations of the positions of trees in a forest, detected from lidar scans. We present a framework that leverages these polygons to generate a signature that is used to detect previously seen observations, even with partial overlap and different levels of noise, while also inferring landmark correspondences to compute an affine transformation between observations. We run loop-closure experiments in simulation and map-merging experiments on real-world data from different flights of an Unmanned Aerial Vehicle (UAV) in a pine tree forest, and show that our method outperforms state-of-the-art approaches in accuracy and robustness.
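As a rough illustration of the underlying geometry, an Urquhart graph can be obtained from a Delaunay triangulation by dropping the longest edge of every triangle. The sketch below assumes 2D tree positions and uses a brute-force empty-circumcircle test that is only practical for a handful of points. The function names are hypothetical, and it produces only the graph edges, not the polygon descriptor or signature described in the abstract.

```python
from itertools import combinations
from math import dist


def circumcircle(a, b, c):
    """Return ((ux, uy), r_squared) for the circle through a, b, c, or None if collinear."""
    ax, ay = a
    bx, by = b
    cx, cy = c
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        return None
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return (ux, uy), (ax - ux) ** 2 + (ay - uy) ** 2


def delaunay_triangles(points):
    """Brute-force Delaunay: keep triangles whose circumcircle contains no other point."""
    triangles = []
    for i, j, k in combinations(range(len(points)), 3):
        circle = circumcircle(points[i], points[j], points[k])
        if circle is None:
            continue
        (ux, uy), r2 = circle
        others = (p for n, p in enumerate(points) if n not in (i, j, k))
        if all((px - ux) ** 2 + (py - uy) ** 2 >= r2 - 1e-9 for px, py in others):
            triangles.append((i, j, k))
    return triangles


def urquhart_edges(points):
    """Delaunay edges minus the longest edge of each Delaunay triangle."""
    all_edges, longest_edges = set(), set()
    for tri in delaunay_triangles(points):
        tri_edges = list(combinations(tri, 2))  # index pairs, already ascending
        all_edges.update(tri_edges)
        longest_edges.add(max(tri_edges, key=lambda e: dist(points[e[0]], points[e[1]])))
    return all_edges - longest_edges


# Three trees forming a triangle, with a fourth tree inside it.
trees = [(0.0, 0.0), (6.0, 0.0), (3.0, 6.0), (3.0, 1.0)]
print(sorted(urquhart_edges(trees)))
```

For realistic point counts, a library triangulation would replace the brute-force step; the edge-removal rule stays the same.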

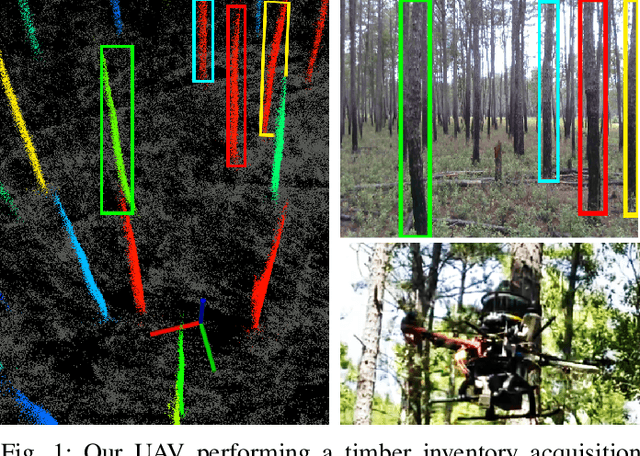

SLOAM: Semantic Lidar Odometry and Mapping for Forest Inventory

Dec 29, 2019

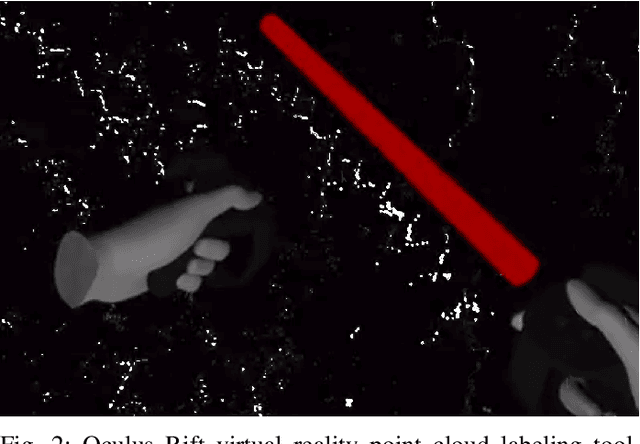

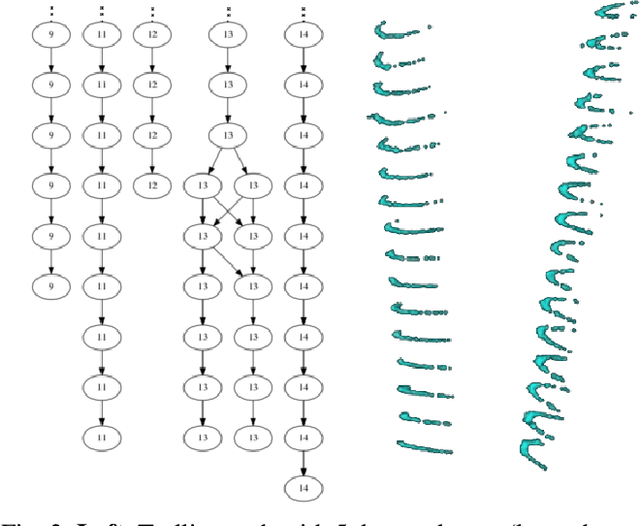

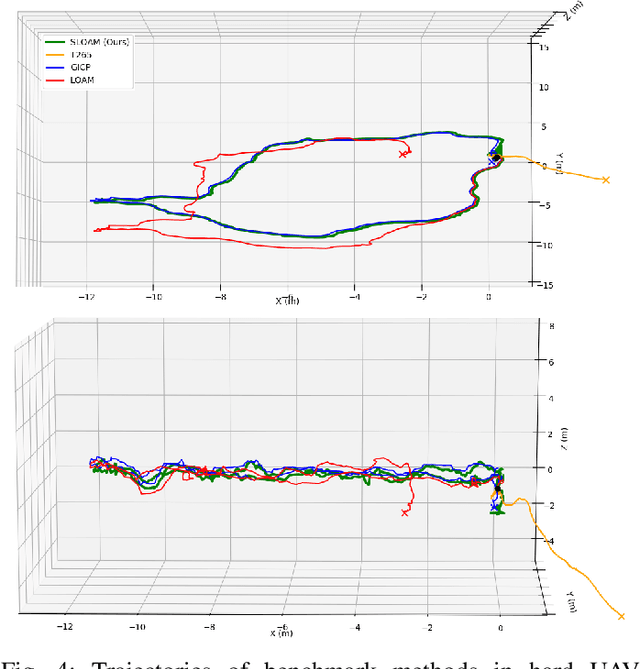

Abstract: This paper describes an end-to-end pipeline for tree diameter estimation based on semantic segmentation and lidar odometry and mapping. Accurate mapping of this type of environment is challenging since the ground and the trees are surrounded by leaves, thorns, and vines, and the sensor typically experiences extreme motion. We propose a semantic-feature-based pose optimization that simultaneously refines the tree models while estimating the robot pose. The pipeline utilizes a custom virtual reality tool for labeling 3D scans, which are used to train a semantic segmentation network. The masked point cloud is used to compute a trellis graph that identifies individual instances and extracts relevant features that are used by the SLAM module. We show that traditional lidar- and image-based methods fail in the forest environment on both Unmanned Aerial Vehicle (UAV) and hand-carry systems, while our method is more robust, scalable, and automatically generates tree diameter estimations.
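To make the diameter-estimation idea concrete, here is a minimal sketch that fits a circle to a horizontal slice of trunk points using an algebraic least-squares (Kåsa-style) fit. This is a simplified 2D stand-in chosen for illustration, not the tree model or optimization used in the paper; the function names and the sample data are hypothetical.

```python
from math import cos, pi, sin, sqrt


def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("points are degenerate (too few or collinear)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        x[r] = (M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))) / M[r][r]
    return x


def fit_trunk_diameter(points):
    """Fit x^2 + y^2 = a*x + b*y + c in least squares; return (center, diameter)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    pts = [(x - mx, y - my) for x, y in points]  # center the data for better conditioning
    sx = sum(x for x, _ in pts)
    sy = sum(y for _, y in pts)
    sxx = sum(x * x for x, _ in pts)
    syy = sum(y * y for _, y in pts)
    sxy = sum(x * y for x, y in pts)
    sz = sum(x * x + y * y for x, y in pts)
    sxz = sum(x * (x * x + y * y) for x, y in pts)
    syz = sum(y * (x * x + y * y) for x, y in pts)
    a, b, c = solve3([[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]], [sxz, syz, sz])
    cx, cy = a / 2.0, b / 2.0  # a = 2*cx, b = 2*cy, c = r^2 - cx^2 - cy^2
    radius = sqrt(c + cx * cx + cy * cy)
    return (cx + mx, cy + my), 2.0 * radius


# Synthetic half-visible trunk: center (2.0, -1.0) m, radius 0.15 m.
arc = [(2.0 + 0.15 * cos(k * pi / 9), -1.0 + 0.15 * sin(k * pi / 9)) for k in range(10)]
center, diameter = fit_trunk_diameter(arc)
print(center, diameter)
```

Only part of the trunk is visible from any one viewpoint, which is why the example fits an arc rather than a full circle.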

Large Scale Model Predictive Control with Neural Networks and Primal Active Sets

Oct 23, 2019

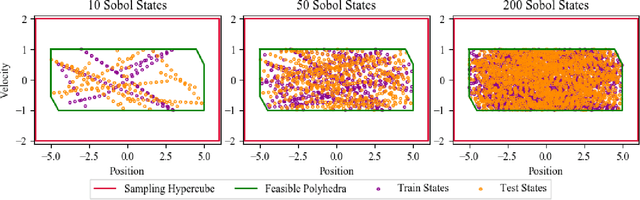

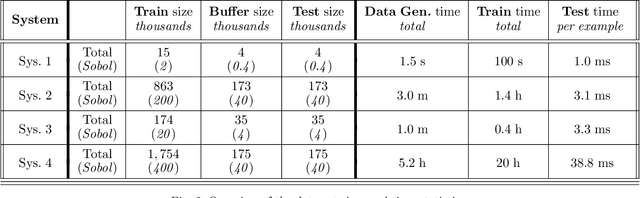

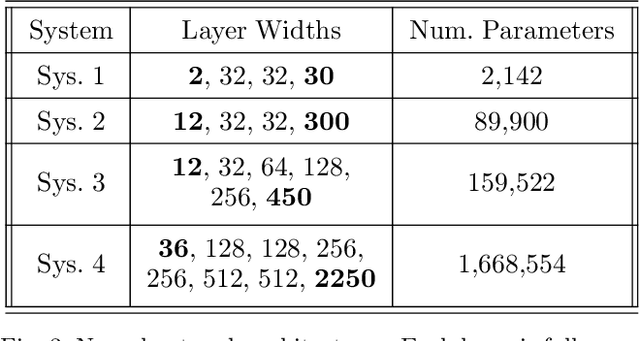

Abstract: This work presents an explicit-implicit procedure that combines an offline trained neural network with an online primal active set solver to compute a model predictive control (MPC) law with guarantees on recursive feasibility and asymptotic stability. The neural network improves the suboptimality of the controller performance and accelerates online inference speed for large systems, while the primal active set method provides corrective steps to ensure feasibility and stability. We highlight the connections between MPC and neural networks and introduce a primal-dual loss function to train a neural network to initialize the online controller. We then demonstrate online computation of the primal feasibility and suboptimality criteria to provide the desired guarantees. Next, we use the neural network and these criteria to accelerate an online primal active set method through warm starts and early termination. Finally, we present a data set generation algorithm that is critical for successfully applying our approach to high-dimensional systems. The primary motivation is developing an algorithm that scales to systems that are challenging for current approaches, involving state and input dimensions as well as planning horizons on the order of tens to hundreds.

Decentralization of Multiagent Policies by Learning What to Communicate

Mar 25, 2019

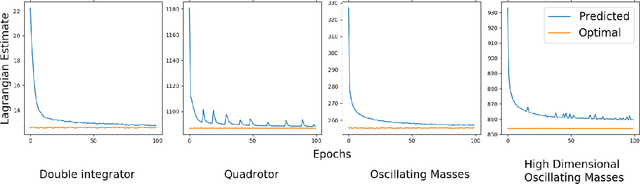

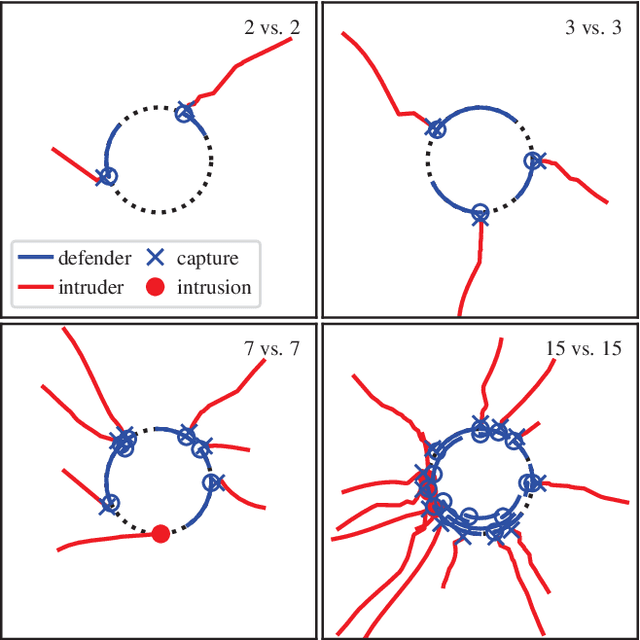

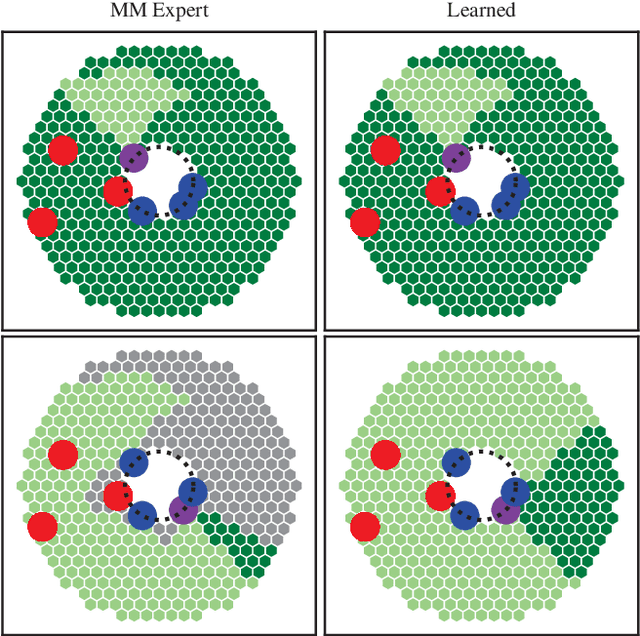

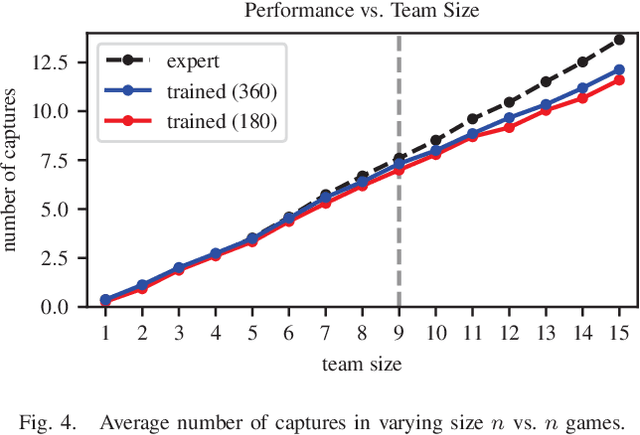

Abstract: Effective communication is required for teams of robots to solve sophisticated collaborative tasks. In practice it is typical for both the encoding and semantics of communication to be manually defined by an expert; this is true regardless of whether the behaviors themselves are bespoke, optimization based, or learned. We present an agent architecture and training methodology using neural networks to learn task-oriented communication semantics based on the example of a communication-unaware expert policy. A perimeter defense game illustrates the system's ability to handle dynamically changing numbers of agents and its graceful degradation in performance as communication constraints are tightened or the expert's observability assumptions are broken.

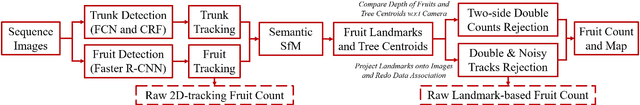

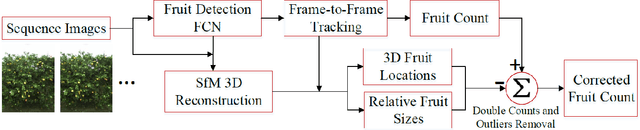

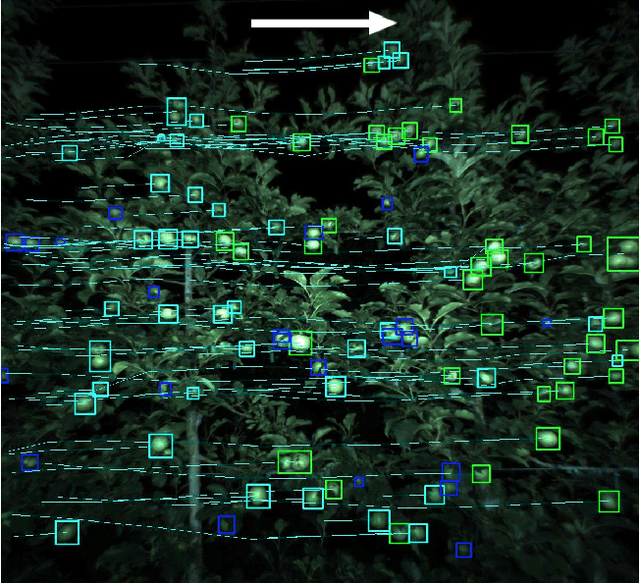

Monocular Camera Based Fruit Counting and Mapping with Semantic Data Association

Mar 18, 2019

Abstract: We present a cheap, lightweight, and fast fruit counting pipeline that uses a single monocular camera. On a mango dataset, our pipeline achieves counting performance comparable to a state-of-the-art fruit counting system that uses an expensive sensor suite including LiDAR and GPS/INS. Our monocular camera pipeline begins with a fruit detection component that uses a deep neural network. It then uses semantic structure from motion (SFM) to convert these detections into fruit counts by estimating landmark locations of the fruit in 3D and using these landmarks to identify double counting scenarios. There are many benefits to developing a low-cost and lightweight fruit counting system, including applicability to agriculture in developing countries, where monetary constraints or unstructured environments necessitate cheaper hardware solutions.
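One simple way to picture how 3D landmarks can expose double counting is to merge landmarks that lie closer together than a plausible fruit spacing. The sketch below is a greedy illustration under that assumption; the function name, the `merge_radius` parameter, and its default value are hypothetical and are not taken from the paper.

```python
from math import dist


def count_unique_fruit(landmarks, merge_radius=0.05):
    """Count 3D landmarks, treating any landmark within merge_radius of a kept one as a duplicate."""
    kept = []
    for p in landmarks:
        if all(dist(p, q) > merge_radius for q in kept):
            kept.append(p)
    return len(kept)


# Four estimated landmarks (meters); two pairs are near-duplicates of the same fruit.
estimates = [(0.00, 0.00, 1.00), (0.01, 0.00, 1.00), (0.50, 0.20, 1.10), (0.50, 0.21, 1.10)]
print(count_unique_fruit(estimates))
```

A greedy merge depends on input order and on the chosen radius, so it should be read as a conceptual aid rather than a counting method.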

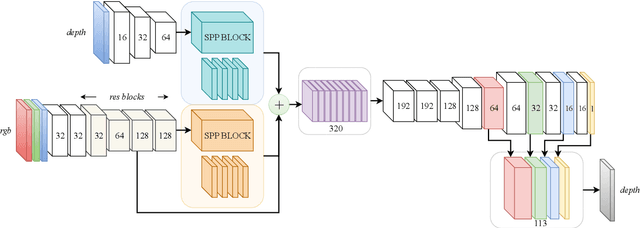

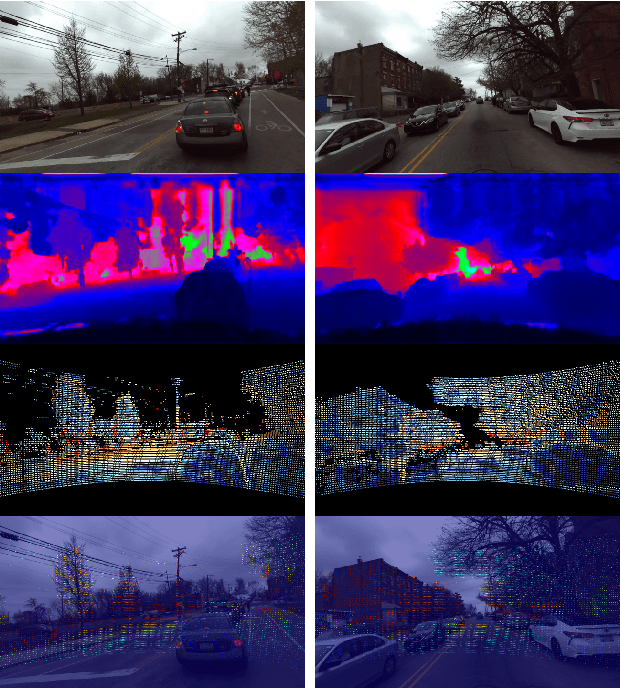

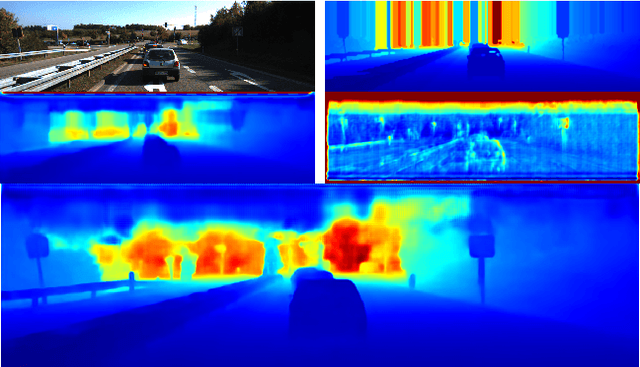

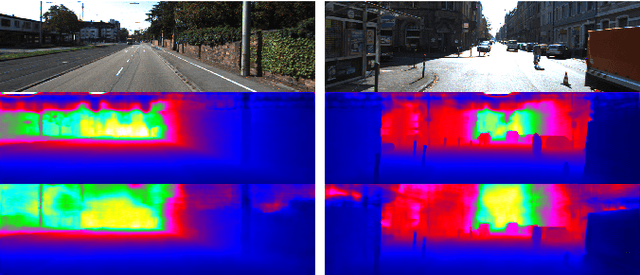

DFuseNet: Deep Fusion of RGB and Sparse Depth Information for Image Guided Dense Depth Completion

Feb 02, 2019

Abstract: In this paper, we propose a convolutional neural network that is designed to upsample a series of sparse range measurements based on the contextual cues gleaned from a high-resolution intensity image. Our approach draws inspiration from related work on super-resolution and in-painting. We propose a novel architecture that seeks to pull contextual cues separately from the intensity image and the depth features and then fuse them later in the network. We argue that this approach effectively exploits the relationship between the two modalities and produces accurate results while respecting salient image structures. We present experimental results to demonstrate that our approach is comparable with state-of-the-art methods and generalizes well across multiple datasets.

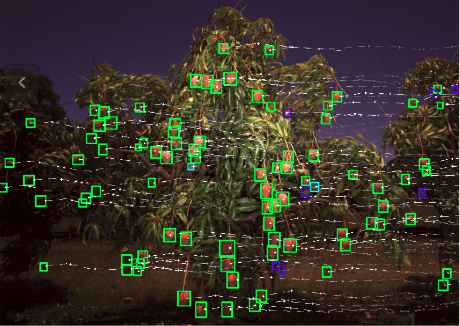

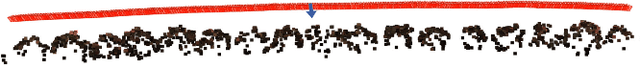

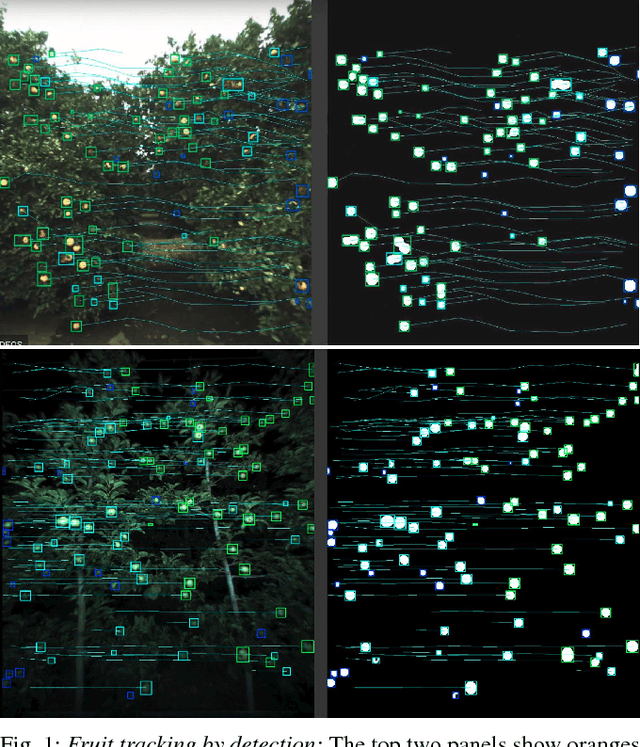

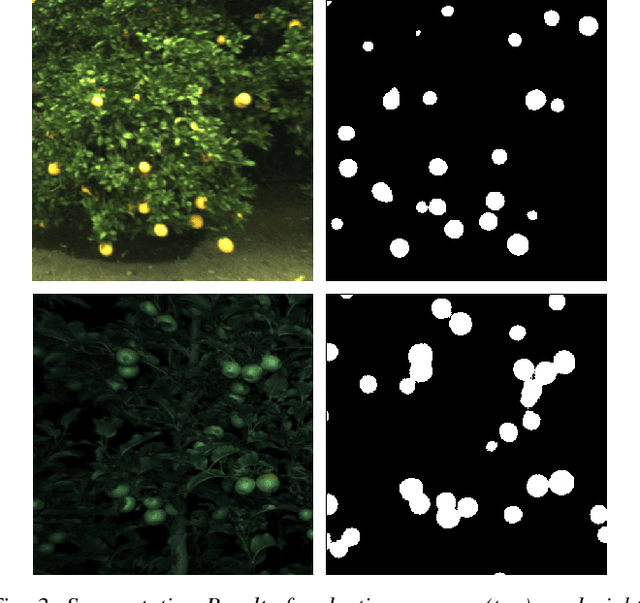

Robust Fruit Counting: Combining Deep Learning, Tracking, and Structure from Motion

Aug 02, 2018

Abstract: We present a novel fruit counting pipeline that combines deep segmentation, frame-to-frame tracking, and 3D localization to accurately count visible fruits across a sequence of images. Our pipeline works on image streams from a monocular camera, both in natural light and with controlled illumination at night. We first train a Fully Convolutional Network (FCN) and segment video frame images into fruit and non-fruit pixels. We then track fruits across frames using the Hungarian Algorithm, where the objective cost is determined from a Kalman Filter corrected Kanade-Lucas-Tomasi (KLT) Tracker. In order to correct the estimated count from the tracking process, we combine tracking results with a Structure from Motion (SfM) algorithm to calculate relative 3D locations and size estimates to reject outliers and double counted fruit tracks. We evaluate our algorithm by comparing with ground-truth human-annotated visual counts. Our results demonstrate that our pipeline is able to accurately and reliably count fruits across image sequences, and the correction step can significantly improve the counting accuracy and robustness. Although discussed in the context of fruit counting, our work can extend to detection, tracking, and counting of a variety of other stationary features of interest such as leaf-spots, wilt, and blossom.
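The frame-to-frame association step can be pictured as a minimum-cost one-to-one assignment between predicted track positions and new detections. The sketch below solves that assignment by brute force over permutations, which is a plain stand-in for the Hungarian Algorithm and only practical for a few tracks. The cost here is simple pixel distance, an assumption made for illustration; the paper derives its cost from a Kalman Filter corrected KLT tracker. The function name is hypothetical.

```python
from itertools import permutations
from math import dist


def match_tracks(predicted, detections):
    """Return (track_index, detection_index) pairs minimizing total distance.

    Assumes len(predicted) <= len(detections); unmatched detections are left out.
    """
    best_cost, best_perm = float("inf"), ()
    for perm in permutations(range(len(detections)), len(predicted)):
        cost = sum(dist(predicted[t], detections[d]) for t, d in enumerate(perm))
        if cost < best_cost:
            best_cost, best_perm = cost, perm
    return list(enumerate(best_perm))


# Two predicted fruit positions (pixels) and three detections in the next frame.
predicted = [(10.0, 10.0), (50.0, 12.0)]
detections = [(52.0, 13.0), (11.0, 9.0), (90.0, 90.0)]
print(match_tracks(predicted, detections))
```

The brute-force search finds the same optimum a Hungarian solver would on this cost matrix, but its runtime grows factorially, so a polynomial-time solver is the practical choice beyond toy sizes.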

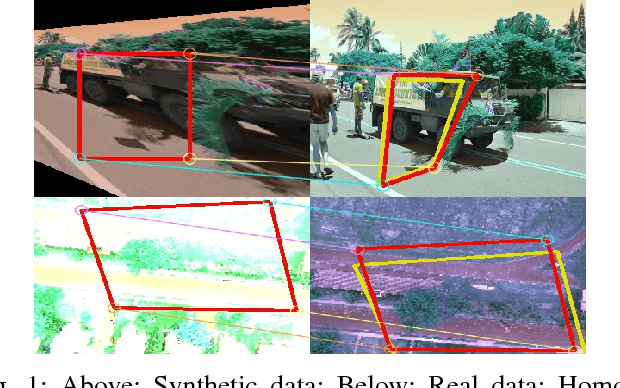

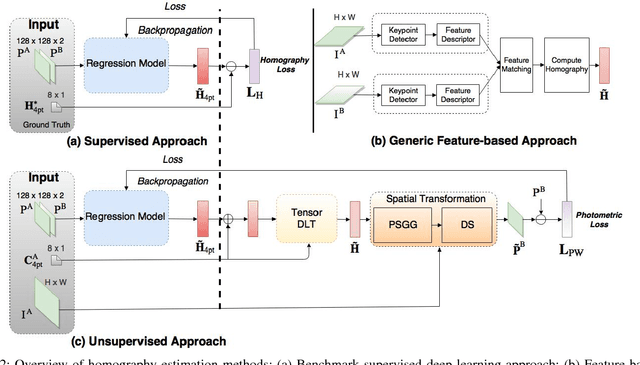

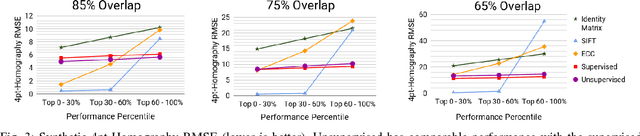

Unsupervised Deep Homography: A Fast and Robust Homography Estimation Model

Feb 21, 2018

Abstract: Homography estimation between multiple aerial images can provide relative pose estimation for collaborative autonomous exploration and monitoring. Use on a robotic system requires a fast and robust homography estimation algorithm. In this study, we propose an unsupervised learning algorithm that trains a Deep Convolutional Neural Network to estimate planar homographies. We compare the proposed algorithm to traditional feature-based and direct methods, as well as a corresponding supervised learning algorithm. Our empirical results demonstrate that, compared to traditional approaches, the unsupervised algorithm achieves faster inference speed while maintaining comparable or better accuracy and robustness to illumination variation. In addition, on both a synthetic dataset and a representative real-world aerial dataset, our unsupervised method has superior adaptability and performance compared to the supervised deep learning method.
