James Paulos

RotorPy: A Python-based Multirotor Simulator with Aerodynamics for Education and Research

Jun 07, 2023

Abstract:Simulators play a critical role in aerial robotics both in and out of the classroom. We present RotorPy, a simulation environment written entirely in Python intentionally designed to be a lightweight and accessible tool for robotics students and researchers alike to probe concepts in estimation, planning, and control for aerial robots. RotorPy simulates the 6-DoF dynamics of a multirotor robot including aerodynamic wrenches, obstacles, actuator dynamics and saturation, realistic sensors, and wind models. This work describes the modeling choices for RotorPy, benchmark testing against real data, and a case study using the simulator to design and evaluate a model-based wind estimator.

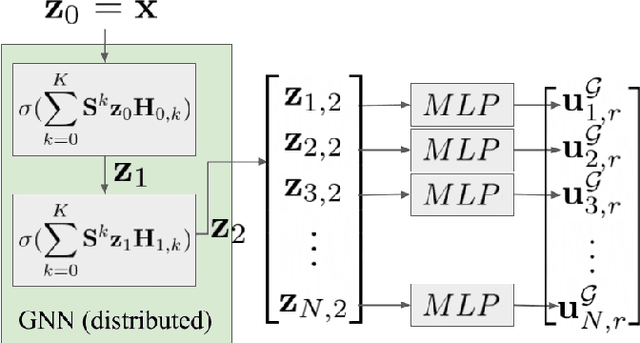

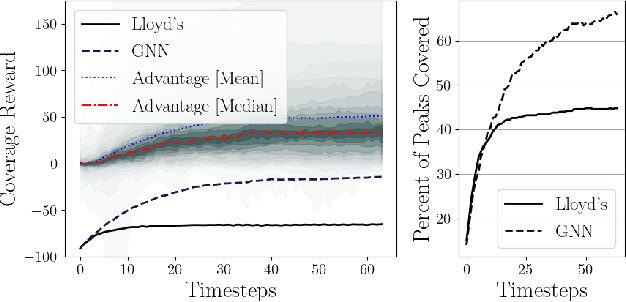

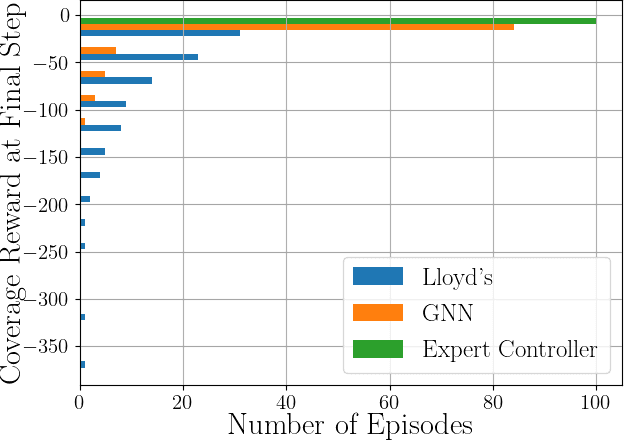

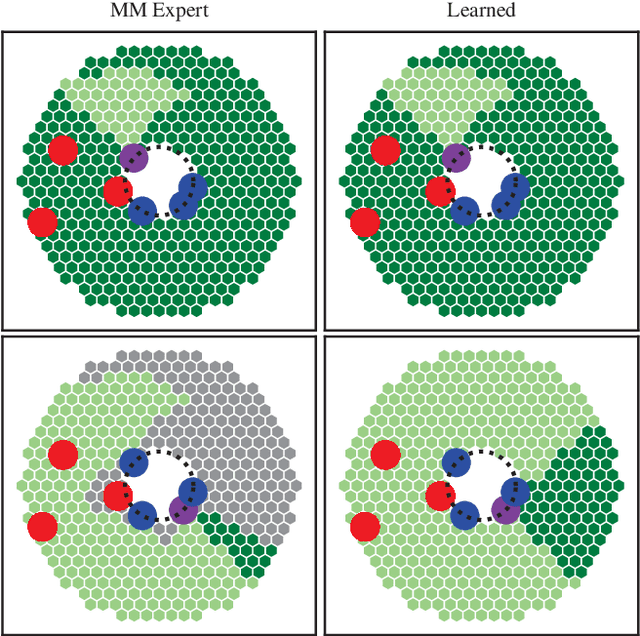

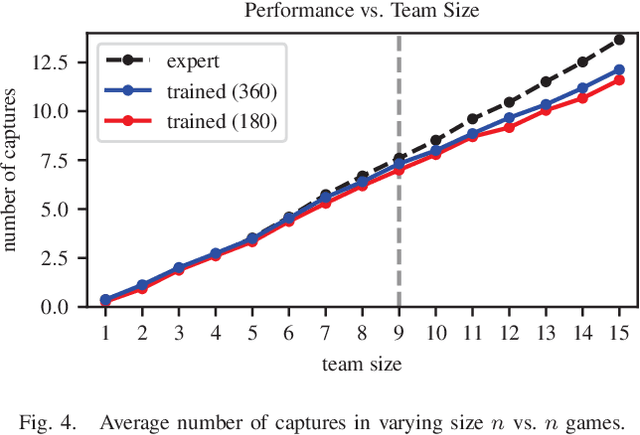

Coverage Control in Multi-Robot Systems via Graph Neural Networks

Sep 30, 2021

Abstract:This paper develops a decentralized approach to mobile sensor coverage by a multi-robot system. We consider a scenario where a team of robots with limited sensing range must position itself to effectively detect events of interest in a region characterized by areas of varying importance. Towards this end, we develop a decentralized control policy for the robots -- realized via a Graph Neural Network -- which uses inter-robot communication to leverage non-local information for control decisions. By explicitly sharing information between multi-hop neighbors, the decentralized controller achieves a higher quality of coverage when compared to classical approaches that do not communicate and leverage only local information available to each robot. Simulated experiments demonstrate the efficacy of multi-hop communication for multi-robot coverage and evaluate the scalability and transferability of the learning-based controllers.

Dispersion-Minimizing Motion Primitives for Search-Based Motion Planning

Mar 26, 2021

Abstract:Search-based planning with motion primitives is a powerful motion planning technique that can provide dynamic feasibility, optimality, and real-time computation times on size, weight, and power-constrained platforms in unstructured environments. However, optimal design of the motion planning graph, while crucial to the performance of the planner, has not been a main focus of prior work. This paper proposes to address this by introducing a method of choosing vertices and edges in a motion primitive graph that is grounded in sampling theory and leads to theoretical guarantees on planner completeness. By minimizing dispersion of the graph vertices in the metric space induced by trajectory cost, we optimally cover the space of feasible trajectories with our motion primitive graph. In comparison with baseline motion primitives defined by uniform input space sampling, our motion primitive graphs have lower dispersion, find a plan with fewer iterations of the graph search, and have only one parameter to tune.
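To make the notion of dispersion in the abstract above concrete, here is a minimal sketch. It assumes a Euclidean metric on the unit square as a stand-in for the trajectory-cost metric, approximates the space by a finite set of samples, and uses greedy farthest-point selection, which is a common heuristic and not necessarily the paper's method. The names `dispersion` and `greedy_min_dispersion` are hypothetical.

```python
import math
import random


def dispersion(points, space_samples, dist=math.dist):
    """Approximate dispersion: the largest distance from any sample of the
    space to its nearest point in `points`."""
    return max(min(dist(x, p) for p in points) for x in space_samples)


def greedy_min_dispersion(space_samples, k, dist=math.dist):
    """Pick k vertices by farthest-point selection (assumed heuristic)."""
    chosen = [space_samples[0]]
    # Distance from every sample to its nearest chosen vertex so far.
    nearest = [dist(x, chosen[0]) for x in space_samples]
    while len(chosen) < k:
        # The sample that is currently worst covered becomes the next vertex.
        idx = max(range(len(space_samples)), key=nearest.__getitem__)
        chosen.append(space_samples[idx])
        nearest = [min(d, dist(x, chosen[-1]))
                   for d, x in zip(nearest, space_samples)]
    return chosen


# Illustrative data: 500 random samples of the unit square (Euclidean stand-in).
rng = random.Random(0)
samples = [(rng.random(), rng.random()) for _ in range(500)]
vertices = greedy_min_dispersion(samples, 10)
print(dispersion(vertices, samples))
```

Swapping `dist` for a trajectory-cost function would bring the sketch closer to the setting the abstract describes, provided that cost behaves like a metric.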

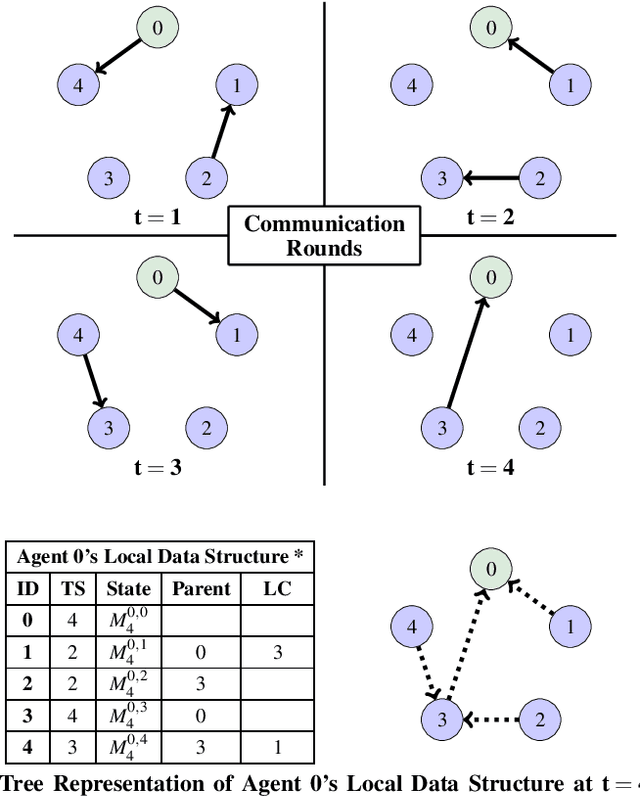

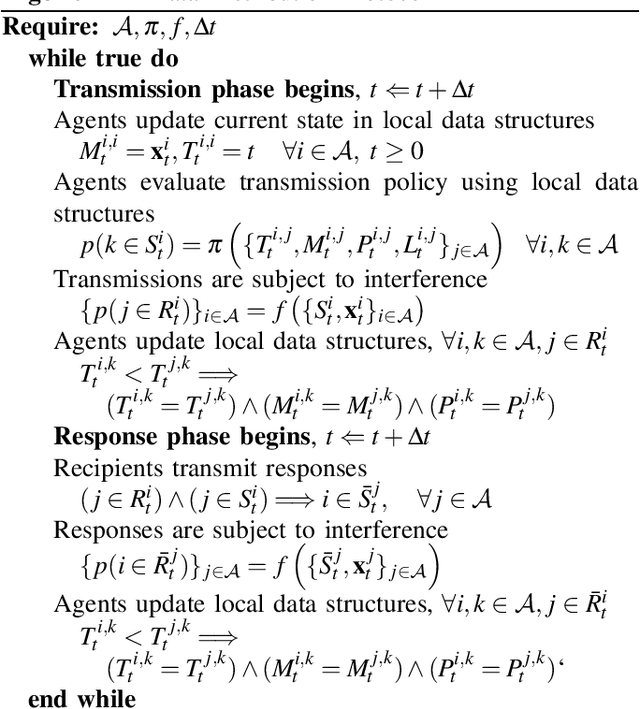

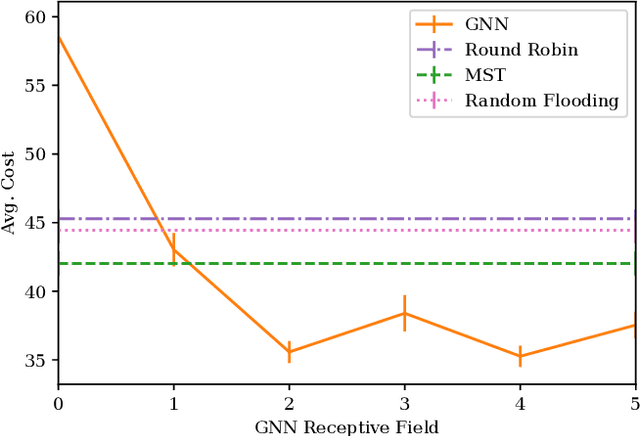

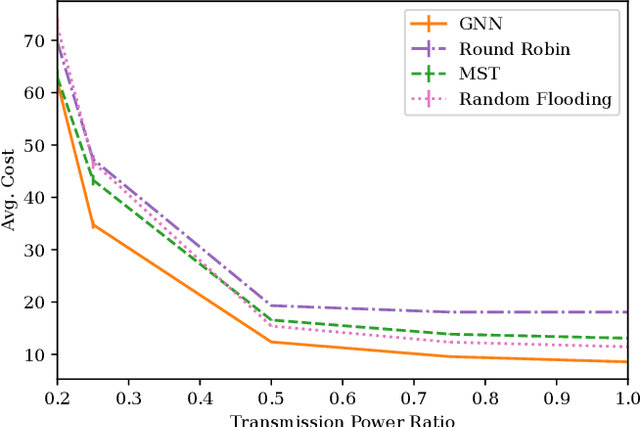

Learning Connectivity for Data Distribution in Robot Teams

Mar 08, 2021

Abstract:Many algorithms for control of multi-robot teams operate under the assumption that low-latency, global state information necessary to coordinate agent actions can readily be disseminated among the team. However, in harsh environments with no existing communication infrastructure, robots must form ad-hoc networks, forcing the team to operate in a distributed fashion. To overcome this challenge, we propose a task-agnostic, decentralized, low-latency method for data distribution in ad-hoc networks using Graph Neural Networks (GNN). Our approach enables multi-agent algorithms based on global state information to function by ensuring it is available at each robot. To do this, agents glean information about the topology of the network from packet transmissions and feed it to a GNN running locally which instructs the agent when and where to transmit the latest state information. We train the distributed GNN communication policies via reinforcement learning using the average Age of Information as the reward function and show that it improves training stability compared to task-specific reward functions. Our approach performs favorably compared to industry-standard methods for data distribution such as random flooding and round robin. We also show that the trained policies generalize to larger teams of both static and mobile agents.
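As a rough illustration of the reward signal named in the abstract above, the sketch below computes an average Age of Information for a small team. The data layout (a table per agent mapping each teammate to the timestamp of the freshest state held about it) and the choice to negate the average so that fresher information scores higher are assumptions, as are the function names.

```python
def average_age_of_information(now, last_update_times):
    """Mean age of the state information one agent holds about its teammates.

    last_update_times maps teammate id -> timestamp at which the freshest
    state held for that teammate was generated.
    """
    ages = [now - t for t in last_update_times.values()]
    return sum(ages) / len(ages)


def team_reward(now, tables):
    """Negative mean AoI over all agents (sign convention is an assumption)."""
    per_agent = [average_age_of_information(now, table)
                 for table in tables.values()]
    return -sum(per_agent) / len(per_agent)


# Hypothetical three-agent team observed at time 10.0.
tables = {
    "a": {"b": 9.0, "c": 4.0},
    "b": {"a": 8.0, "c": 6.0},
    "c": {"a": 2.0, "b": 7.0},
}
reward = team_reward(10.0, tables)
print(reward)  # -4.0
```

Because the quantity depends only on timestamps and not on what the team is trying to accomplish, it fits the task-agnostic framing in the abstract.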

Neurosymbolic Transformers for Multi-Agent Communication

Jan 05, 2021

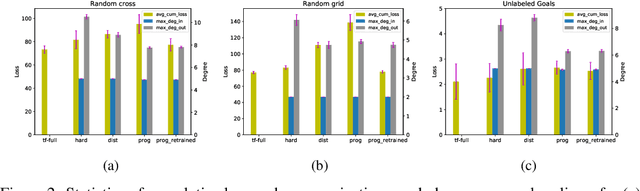

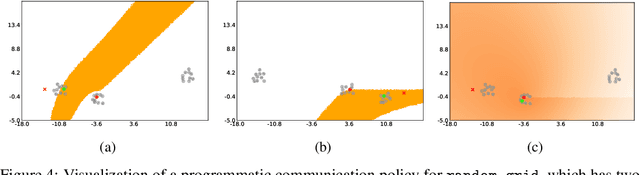

Abstract:We study the problem of inferring communication structures that can solve cooperative multi-agent planning problems while minimizing the amount of communication. We quantify the amount of communication as the maximum degree of the communication graph; this metric captures settings where agents have limited bandwidth. Minimizing communication is challenging due to the combinatorial nature of both the decision space and the objective; for instance, we cannot solve this problem by training neural networks using gradient descent. We propose a novel algorithm that synthesizes a control policy that combines a programmatic communication policy used to generate the communication graph with a transformer policy network used to choose actions. Our algorithm first trains the transformer policy, which implicitly generates a "soft" communication graph; then, it synthesizes a programmatic communication policy that "hardens" this graph, forming a neurosymbolic transformer. Our experiments demonstrate how our approach can synthesize policies that generate low-degree communication graphs while maintaining near-optimal performance.
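The idea of hardening a soft communication graph and measuring its maximum degree can be sketched as follows. This is only an illustration: a fixed top-k rule is swapped in for the programmatic communication policy that the paper synthesizes, degree is taken to mean the number of teammates an agent listens to, and the weights are made-up numbers.

```python
def harden_top_k(soft_weights, k):
    """Keep, for each agent, the k senders with the largest soft weight."""
    graph = {}
    for agent, weights in soft_weights.items():
        ranked = sorted(weights, key=weights.get, reverse=True)
        graph[agent] = set(ranked[:k])
    return graph


def max_degree(graph):
    """Largest number of incoming links over all agents."""
    return max(len(senders) for senders in graph.values())


# Hypothetical soft attention weights: soft[receiver][sender].
soft = {
    "a": {"b": 0.7, "c": 0.2, "d": 0.1},
    "b": {"a": 0.5, "c": 0.4, "d": 0.1},
    "c": {"a": 0.1, "b": 0.3, "d": 0.6},
    "d": {"a": 0.3, "b": 0.3, "c": 0.4},
}
hard = harden_top_k(soft, k=1)
print(max_degree(hard))  # 1
```

The discrete selection step is not differentiable, which is in line with the abstract's remark that the problem cannot be solved directly by gradient descent.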

Multi-Robot Coverage and Exploration using Spatial Graph Neural Networks

Nov 02, 2020

Abstract:The multi-robot coverage problem is an essential building block for systems that perform tasks like inspection or search and rescue. We discretize the coverage problem to induce a spatial graph of locations and represent robots as nodes in the graph. Then, we train a Graph Neural Network controller that leverages the spatial equivariance of the task to imitate an expert open-loop routing solution. This approach generalizes well to much larger maps and larger teams that are intractable for the expert. In particular, the model generalizes effectively to a simulation of ten quadrotors and dozens of buildings. We also demonstrate the GNN controller can surpass planning-based approaches in an exploration task.

Decentralization of Multiagent Policies by Learning What to Communicate

Mar 25, 2019

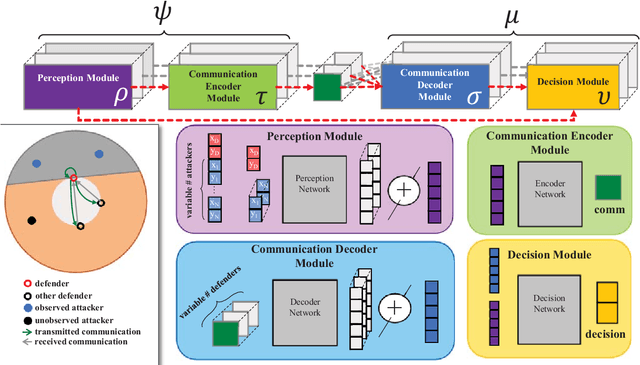

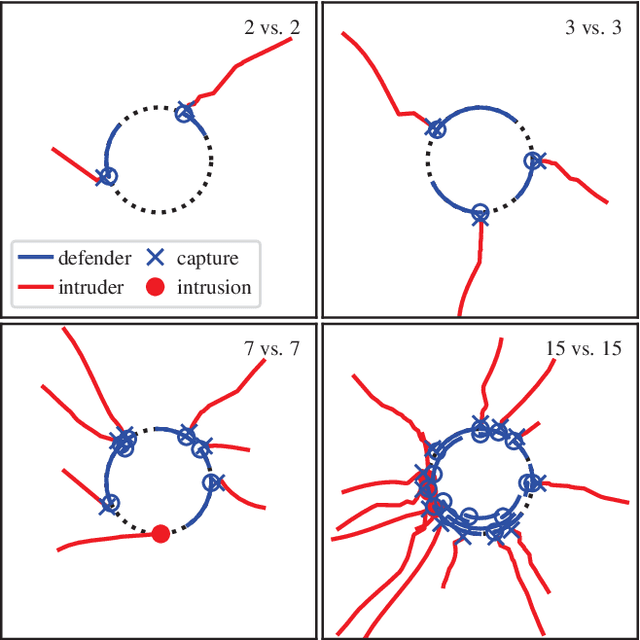

Abstract:Effective communication is required for teams of robots to solve sophisticated collaborative tasks. In practice it is typical for both the encoding and semantics of communication to be manually defined by an expert; this is true regardless of whether the behaviors themselves are bespoke, optimization based, or learned. We present an agent architecture and training methodology using neural networks to learn task-oriented communication semantics based on the example of a communication-unaware expert policy. A perimeter defense game illustrates the system's ability to handle dynamically changing numbers of agents and its graceful degradation in performance as communication constraints are tightened or the expert's observability assumptions are broken.

Learning Decentralized Controllers for Robot Swarms with Graph Neural Networks

Mar 25, 2019

Abstract:We consider the problem of finding distributed controllers for large networks of mobile robots with interacting dynamics and sparsely available communications. Our approach is to learn local controllers which require only local information and local communications at test time by imitating the policy of centralized controllers using global information at training time. By extending aggregation graph neural networks to time varying signals and time varying network support, we learn a single common local controller which exploits information from distant teammates using only local communication interchanges. We apply this approach to a decentralized linear quadratic regulator problem and observe how faster communication rates and smaller network degree increase the value of multi-hop information. Separate experiments learning a decentralized flocking controller demonstrate performance on communication graphs that change as the robots move.
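The way repeated local exchanges expose information from distant teammates can be illustrated with a simplified aggregation sequence x, Sx, S^2 x, and so on, where S is the adjacency matrix of the communication graph. The sketch assumes a static graph and a scalar signal per robot, whereas the abstract addresses time-varying signals and network support; `aggregate_multi_hop` is a hypothetical name.

```python
def aggregate_multi_hop(adjacency, signal, hops):
    """Return [x, Sx, S^2 x, ...] for adjacency matrix S and signal x."""
    n = len(signal)
    sequence = [list(signal)]
    for _ in range(hops):
        prev = sequence[-1]
        # One round of local exchange: each robot sums its neighbors' values.
        sequence.append([
            sum(adjacency[i][j] * prev[j] for j in range(n))
            for i in range(n)
        ])
    return sequence


# Three robots in a line: 0 - 1 - 2. Only robot 0 starts with information.
S = [
    [0, 1, 0],
    [1, 0, 1],
    [0, 1, 0],
]
seq = aggregate_multi_hop(S, [1, 0, 0], hops=2)
print(seq)  # [[1, 0, 0], [0, 1, 0], [1, 0, 1]]
```

After two rounds robot 2 holds a nonzero value that originated at robot 0, even though the two never communicate directly.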
