Laura Jarin-Lipschitz

Design and Evaluation of Motion Planners for Quadrotors

Sep 24, 2023

Abstract:The field of quadrotor motion planning has experienced significant advancements over the last decade. Most successful approaches rely on two stages: a front-end that determines the best path by incorporating geometric (and in some cases kinematic or input) constraints, that effectively specify the homotopy class of the trajectory; and a back-end that optimizes the path with a suitable objective function, constrained by the robot's dynamics as well as state/input constraints. However, there is no systematic approach or design guidelines to design both the front and the back ends for a wide range of environments, and no literature evaluates the performance of the trajectory planning algorithm with varying degrees of environment complexity. In this paper, we propose a modular approach to designing the software planning stack and offer a parameterized set of environments to systematically evaluate the performance of two-stage planners. Our parametrized environments enable us to access different front and back-end planners as a function of environmental clutter and complexity. We use simulation and experimental results to demonstrate the performance of selected planning algorithms across a range of environments. Finally, we open source the planning/evaluation stack and parameterized environments to facilitate more in-depth studies of quadrotor motion planning, available at https://github.com/KumarRobotics/kr_mp_design

Experiments in Adaptive Replanning for Fast Autonomous Flight in Forests

Mar 02, 2022

Abstract:Fast, autonomous flight in unstructured, cluttered environments such as forests is challenging because it requires the robot to compute new plans in realtime on a computationally-constrained platform. In this paper, we enable this capability with a search-based planning framework that adapts sampling density in realtime to find dynamically-feasible plans while remaining computationally tractable. A paramount challenge in search-based planning is that dense obstacles both necessitate large graphs (to guarantee completeness) and reduce the efficiency of graph search (as heuristics become less accurate). To address this, we develop a planning framework with two parts: one that maximizes planner completeness for a given graph size, and a second that dynamically maximizes graph size subject to computational constraints. This framework is enabled by motion planning graphs that are defined by a single parameter, dispersion, which quantifies the maximum trajectory cost to reach an arbitrary state from the graph. We show through real and simulated experiments how the dispersion can be adapted to different environments in realtime, allowing operation in environments with varying density. The simulated experiment demonstrates improved performance over a baseline search-based planning algorithm. We also demonstrate flight speeds of up to 2.5m/s in real-world cluttered pine forests.

Dispersion-Minimizing Motion Primitives for Search-Based Motion Planning

Mar 26, 2021

Abstract:Search-based planning with motion primitives is a powerful motion planning technique that can provide dynamic feasibility, optimality, and real-time computation times on size, weight, and power-constrained platforms in unstructured environments. However, optimal design of the motion planning graph, while crucial to the performance of the planner, has not been a main focus of prior work. This paper proposes to address this by introducing a method of choosing vertices and edges in a motion primitive graph that is grounded in sampling theory and leads to theoretical guarantees on planner completeness. By minimizing dispersion of the graph vertices in the metric space induced by trajectory cost, we optimally cover the space of feasible trajectories with our motion primitive graph. In comparison with baseline motion primitives defined by uniform input space sampling, our motion primitive graphs have lower dispersion, find a plan with fewer iterations of the graph search, and have only one parameter to tune.

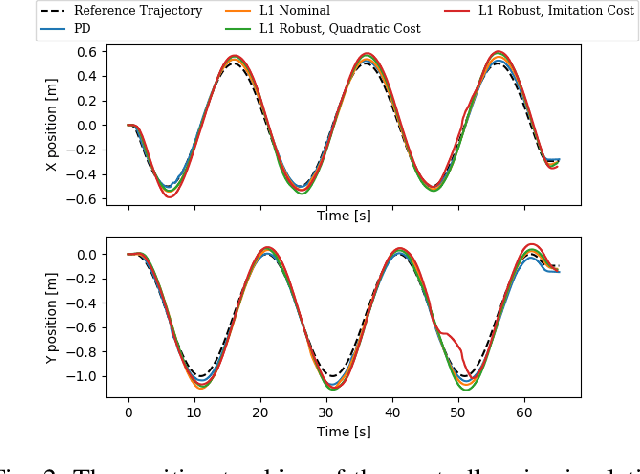

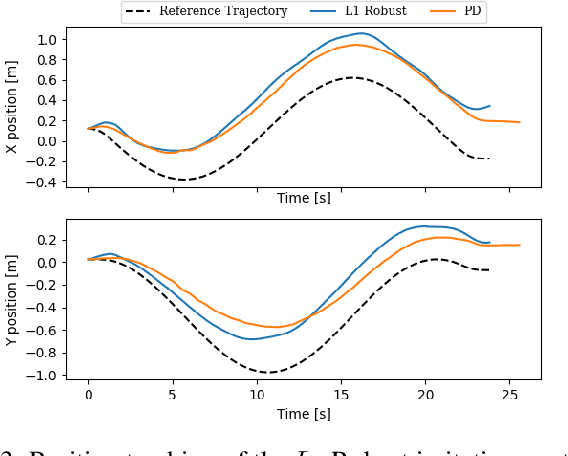

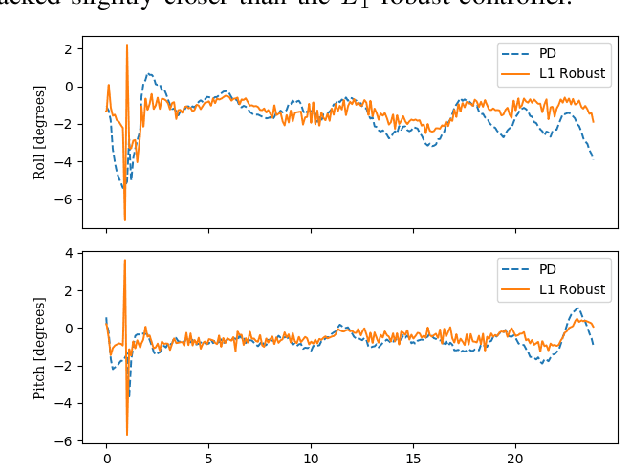

Evaluating Robust, Perception Based Control with Quadrotors

Jul 08, 2020

Abstract:Traditionally, controllers and state estimators in robotic systems are designed independently. Controllers are often designed assuming perfect state estimation. However, state estimation methods such as Visual Inertial Odometry (VIO) drift over time and can cause the system to misbehave. While state estimation error can be corrected with the aid of GPS or motion capture, these complementary sensors are not always available or reliable. Recent work has shown that this issue can be dealt with by synthesizing robust controllers using a data-driven characterization of the perception error, and can bound the system's response to state estimation error using a robustness constraint. We investigate the application of this robust perception-based approach to a quadrotor model using VIO for state estimation and demonstrate the benefits and drawbacks of using this technique in simulation and hardware. Additionally, to make tuning easier, we introduce a new cost function to use in the control synthesis which allows one to take an existing controller and "robustify" it. To the best of our knowledge, this is the first robust perception-based controller implemented in real hardware, as well as one utilizing a data-driven perception model. We believe this as an important step towards safe, robust robots that explicitly account for the inherent dependence between perception and control.

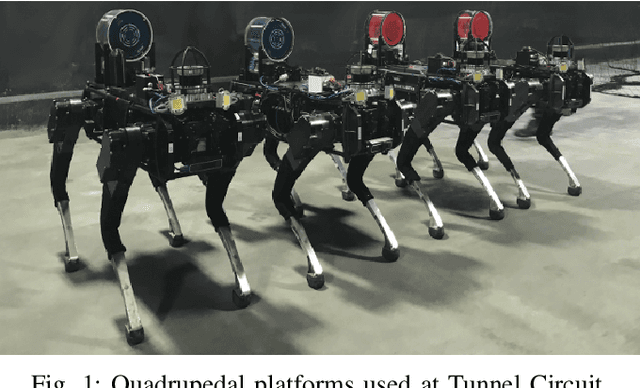

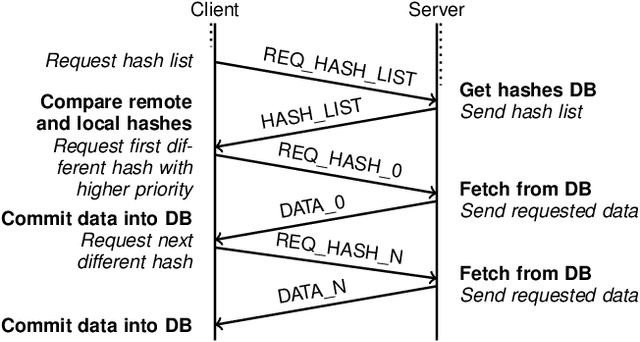

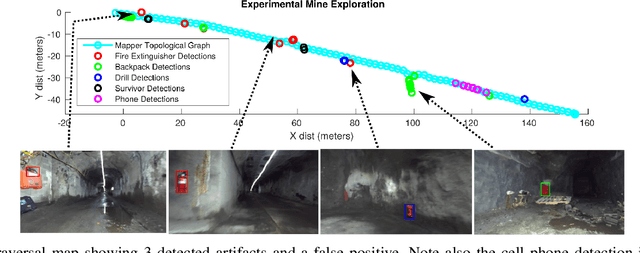

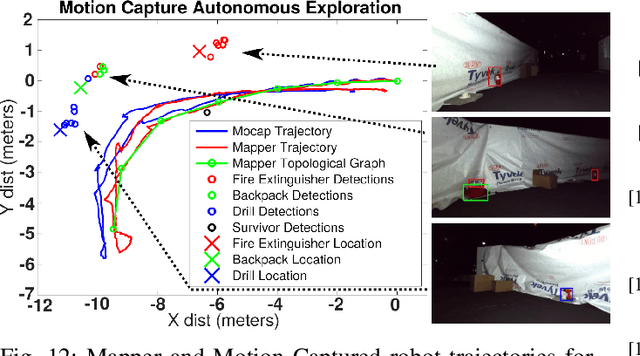

Mine Tunnel Exploration using Multiple Quadrupedal Robots

Sep 20, 2019

Abstract:Robotic exploration of underground environments is a particularly challenging problem due to communication, endurance, and traversability constraints which necessitate high degrees of autonomy and agility. These challenges are further enhanced by the need to minimize human intervention for practical applications. While legged robots have the ability to traverse extremely challenging terrain, they also engender further inherent challenges for planning, estimation, and control. In this work, we describe a fully autonomous system for multi-robot mine exploration and mapping using legged quadrupeds, as well as a distributed database mesh networking system for reporting data. In addition, we show results from the DARPA Subterranean Challenge (SubT) Tunnel Circuit demonstrating localization of artifacts after traversals of hundreds of meters. To our knowledge, these experiments represent the first fully autonomous exploration of an unknown GNSS-denied environment undertaken by legged robots.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge