Yuezhan Tao

Gaussian Splatting as a Unified Representation for Autonomy in Unstructured Environments

May 17, 2025

Abstract:In this work, we argue that Gaussian splatting is a suitable unified representation for autonomous robot navigation in large-scale unstructured outdoor environments. Such environments require representations that can capture complex structures while remaining computationally tractable for real-time navigation. We demonstrate that the dense geometric and photometric information provided by a Gaussian splatting representation is useful for navigation in unstructured environments. Additionally, semantic information can be embedded in the Gaussian map to enable large-scale task-driven navigation. From the lessons learned through our experiments, we highlight several challenges and opportunities arising from the use of such a representation for robot autonomy.

ATLAS Navigator: Active Task-driven LAnguage-embedded Gaussian Splatting

Feb 27, 2025

Abstract:We address the challenge of task-oriented navigation in unstructured and unknown environments, where robots must incrementally build and reason on rich, metric-semantic maps in real time. Since tasks may require clarification or re-specification, it is necessary for the information in the map to be rich enough to enable generalization across a wide range of tasks. To effectively execute tasks specified in natural language, we propose a hierarchical representation built on language-embedded Gaussian splatting that enables both sparse semantic planning that lends itself to online operation and dense geometric representation for collision-free navigation. We validate the effectiveness of our method through real-world robot experiments conducted in both cluttered indoor and kilometer-scale outdoor environments, with a competitive ratio of about 60% against privileged baselines. Experiment videos and more details can be found on our project page: https://atlasnav.github.io

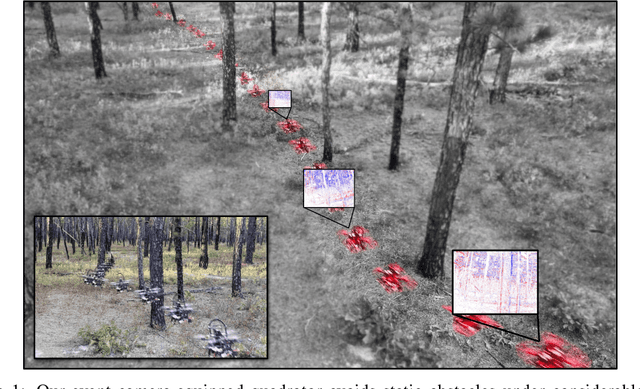

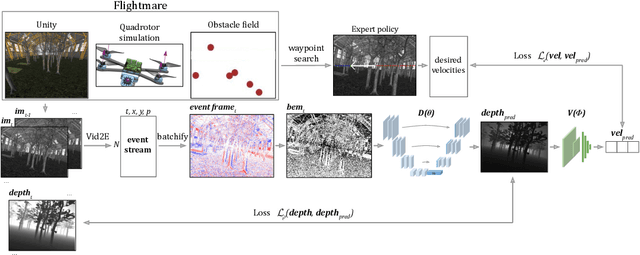

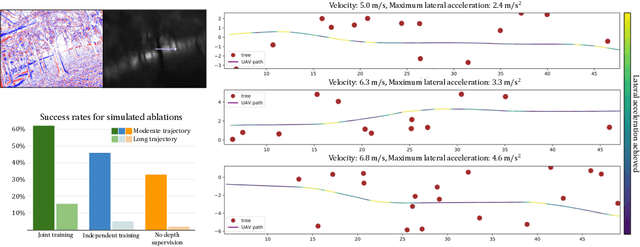

Monocular Event-Based Vision for Obstacle Avoidance with a Quadrotor

Nov 05, 2024

Abstract:We present the first static-obstacle avoidance method for quadrotors using just an onboard, monocular event camera. Quadrotors are capable of fast and agile flight in cluttered environments when piloted manually, but vision-based autonomous flight in unknown environments is difficult in part due to the sensor limitations of traditional onboard cameras. Event cameras, however, promise nearly zero motion blur and high dynamic range, but produce a very large volume of events under significant ego-motion and further lack a continuous-time sensor model in simulation, making direct sim-to-real transfer not possible. By leveraging depth prediction as a pretext task in our learning framework, we can pre-train a reactive obstacle avoidance events-to-control policy with approximated, simulated events and then fine-tune the perception component with limited events-and-depth real-world data to achieve obstacle avoidance in indoor and outdoor settings. We demonstrate this across two quadrotor-event camera platforms in multiple settings and find, contrary to traditional vision-based works, that low speeds (1m/s) make the task harder and more prone to collisions, while high speeds (5m/s) result in better event-based depth estimation and avoidance. We also find that success rates in outdoor scenes can be significantly higher than in certain indoor scenes.

* 18 pages with supplementary

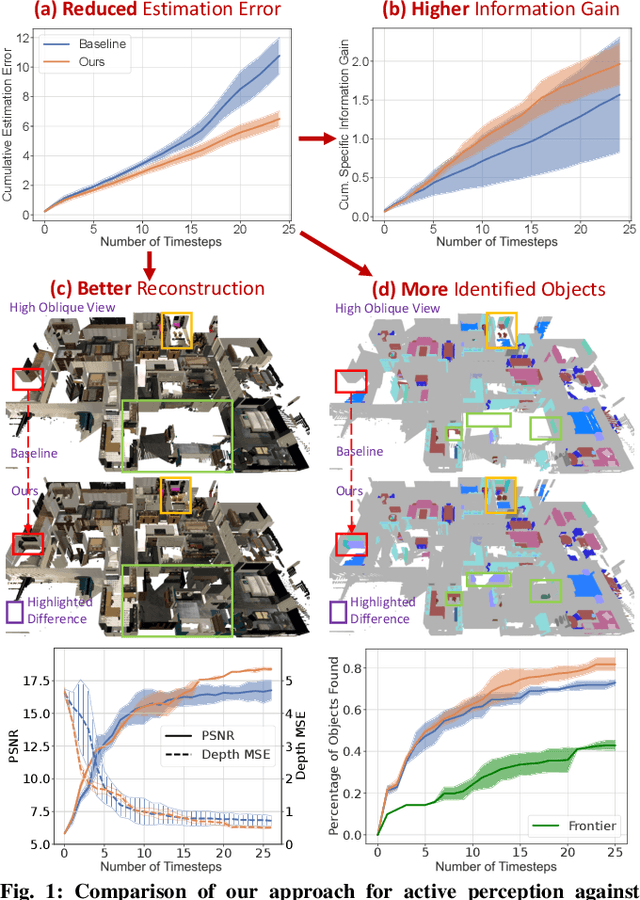

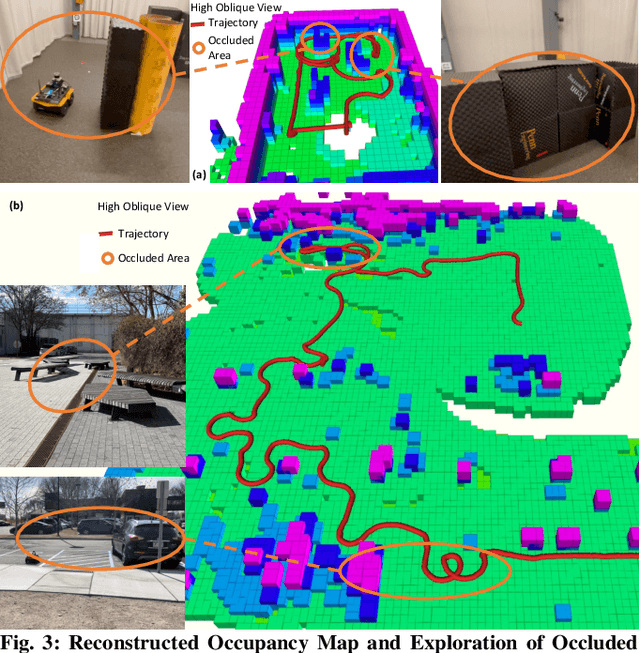

RT-GuIDE: Real-Time Gaussian splatting for Information-Driven Exploration

Sep 26, 2024

Abstract:We propose a framework for active mapping and exploration that leverages Gaussian splatting for constructing information-rich maps. Further, we develop a parallelized motion planning algorithm that can exploit the Gaussian map for real-time navigation. The Gaussian map constructed onboard the robot is optimized for both photometric and geometric quality while enabling real-time situational awareness for autonomy. We show through simulation experiments that our method is competitive with approaches that use alternate information gain metrics, while being orders of magnitude faster to compute. In real-world experiments, our algorithm achieves better map quality (10% higher Peak Signal-to-Noise Ratio (PSNR) and 30% higher geometric reconstruction accuracy) than Gaussian maps constructed by traditional exploration baselines. Experiment videos and more details can be found on our project page: https://tyuezhan.github.io/RT_GuIDE/
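For readers unfamiliar with the map-quality metric mentioned above, the following is a minimal sketch of the standard PSNR definition, 10 log10(MAX^2 / MSE). It is not the authors' evaluation code. The function name `psnr`, the flat-list image format, and the default `max_value` of 1.0 are assumptions made for illustration, and the abstract does not say whether the reported 10% gain is measured in dB or on a linear scale.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB between two equal-length pixel sequences.

    `reference` and `rendered` are flat sequences of pixel intensities
    (hypothetical format chosen for this sketch); `max_value` is the largest
    possible intensity, e.g. 1.0 for normalized images or 255 for 8-bit ones.
    """
    if len(reference) != len(rendered):
        raise ValueError("images must have the same number of pixels")
    if not reference:
        raise ValueError("images must not be empty")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ground_truth = [0.0, 0.0, 0.0, 0.0]
    render = [0.1, 0.1, 0.1, 0.1]
    print(f"PSNR: {psnr(ground_truth, render):.2f} dB")
```

Because PSNR is logarithmic, a fixed percentage change in dB corresponds to a different change in mean squared error depending on the baseline value.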

Safe Interval Motion Planning for Quadrotors in Dynamic Environments

Sep 16, 2024

Abstract:Trajectory generation in dynamic environments presents a significant challenge for quadrotors, particularly due to the non-convexity in the spatial-temporal domain. Many existing methods either assume simplified static environments or struggle to produce optimal solutions in real-time. In this work, we propose an efficient safe interval motion planning framework for navigation in dynamic environments. A safe interval refers to a time window during which a specific configuration is safe. Our approach addresses trajectory generation through a two-stage process: a front-end graph search step followed by a back-end gradient-based optimization. We ensure completeness and optimality by constructing a dynamic connected visibility graph and incorporating low-order dynamic bounds within safe intervals and temporal corridors. To avoid local minima, we propose a Uniform Temporal Visibility Deformation (UTVD) for the complete evaluation of spatial-temporal topological equivalence. We represent trajectories with B-Spline curves and apply gradient-based optimization to navigate around static and moving obstacles within spatial-temporal corridors. Through simulation and real-world experiments, we show that our method can achieve a success rate of over 95% in environments with different density levels, exceeding the performance of other approaches, demonstrating its potential for practical deployment in highly dynamic environments.
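The notion of a safe interval defined above can be illustrated with a short sketch. It assumes that, for one configuration, the time windows during which moving obstacles occupy it are already known, and returns the complementary safe windows over a planning horizon. The function name `safe_intervals` and its input format are hypothetical. The sketch covers only the interval bookkeeping, not the graph search, dynamic bounds, or optimization described in the abstract.

```python
def safe_intervals(collision_intervals, horizon):
    """Return the maximal time windows in [0, horizon] free of collisions.

    `collision_intervals` is a list of (start, end) times during which the
    configuration is occupied by some obstacle (assumed input for this sketch).
    Overlapping or unsorted collision intervals are handled.
    """

    def clip(t):
        # Keep times inside the planning horizon.
        return min(max(t, 0.0), horizon)

    safe = []
    cursor = 0.0  # earliest time not yet classified as safe or unsafe
    for start, end in sorted(collision_intervals):
        start, end = clip(start), clip(end)
        if start > cursor:
            # Gap between the previous collision and this one is safe.
            safe.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < horizon:
        safe.append((cursor, horizon))
    return safe


if __name__ == "__main__":
    occupied = [(2.0, 3.0), (2.5, 4.0), (7.0, 8.0)]
    print(safe_intervals(occupied, horizon=10.0))
```

Grouping time into a few safe windows per configuration, rather than discretizing time uniformly, is what keeps the search space of interval-based planners small.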

SlideSLAM: Sparse, Lightweight, Decentralized Metric-Semantic SLAM for Multi-Robot Navigation

Jun 25, 2024

Abstract:This paper develops a real-time decentralized metric-semantic Simultaneous Localization and Mapping (SLAM) approach that leverages a sparse and lightweight object-based representation to enable a heterogeneous robot team to autonomously explore 3D environments featuring indoor, urban, and forested areas without relying on GPS. We use a hierarchical metric-semantic representation of the environment, including high-level sparse semantic maps of object models and low-level voxel maps. We leverage the informativeness and viewpoint invariance of the high-level semantic map to obtain an effective semantics-driven place-recognition algorithm for inter-robot loop closure detection across aerial and ground robots with different sensing modalities. A communication module is designed to track each robot's observations and those of other robots within the communication range. Such observations are then used to construct a merged map. Our framework enables real-time decentralized operations onboard robots, allowing them to opportunistically leverage communication. We integrate and deploy our proposed framework on three types of aerial and ground robots. Extensive experimental results show an average localization error of 0.22 meters in position and -0.16 degrees in orientation, an object mapping F1 score of 0.92, and a communication packet size of merely 2-3 megabytes per kilometer trajectory with 1,000 landmarks. The project website can be found at https://xurobotics.github.io/slideslam/.

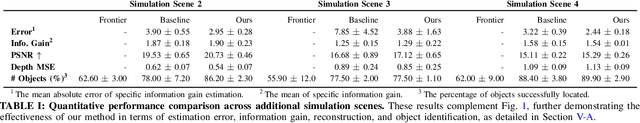

An Active Perception Game for Robust Autonomous Exploration

Mar 31, 2024

Abstract:We formulate active perception for an autonomous agent that explores an unknown environment as a two-player zero-sum game: the agent aims to maximize information gained from the environment while the environment aims to minimize the information gained by the agent. In each episode, the environment reveals a set of actions with their potentially erroneous information gain. In order to select the best action, the robot needs to recover the true information gain from the erroneous one. The robot does so by minimizing the discrepancy between its estimate of information gain and the true information gain it observes after taking the action. We propose an online convex optimization algorithm that achieves sub-linear expected regret $O(T^{3/4})$ for estimating the information gain. We also provide a bound on the regret of active perception performed by any (near-)optimal prediction and trajectory selection algorithms. We evaluate this approach using semantic neural radiance fields (NeRFs) in simulated realistic 3D environments to show that the robot can discover up to 12% more objects using the improved estimate of the information gain. On the M3ED dataset, the proposed algorithm reduced the error of information gain prediction in occupancy map by over 67%. In real-world experiments using occupancy maps on a Jackal ground robot, we show that this approach can calculate complicated trajectories that efficiently explore all occluded regions.

Trajectory Optimization with Global Yaw Parameterization for Field-of-View Constrained Autonomous Flight

Mar 25, 2024

Abstract:Trajectory generation for quadrotors with limited field-of-view sensors has numerous applications such as aerial exploration, coverage, inspection, videography, and target tracking. Most previous works simplify the task of optimizing yaw trajectories by either aligning the heading of the robot with its velocity, or potentially restricting the feasible space of candidate trajectories by using a limited yaw domain to circumvent angular singularities. In this paper, we propose a novel *global* yaw parameterization method for trajectory optimization that allows a 360-degree yaw variation as demanded by the underlying algorithm. This approach effectively bypasses inherent singularities by including supplementary quadratic constraints and transforming the final decision variables into the desired state representation. This method significantly reduces the needed control effort, and improves optimization feasibility. Furthermore, we apply the method to several examples of different applications that require jointly optimizing over both the yaw and position trajectories. Ultimately, we present a comprehensive numerical analysis and evaluation of our proposed method in both simulation and real-world experiments.
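The abstract does not spell out the parameterization itself. As an illustration only, the sketch below assumes one common singularity-free choice: yaw is represented by a 2D vector (c, s) subject to the quadratic constraint c^2 + s^2 = 1, and the angle is recovered with `atan2`. All function names are hypothetical, and this should not be read as the paper's formulation.

```python
import math


def yaw_to_unit(yaw):
    """Map a yaw angle (radians) to a point (c, s) on the unit circle."""
    return (math.cos(yaw), math.sin(yaw))


def unit_constraint_residual(c, s):
    """Quadratic equality constraint c^2 + s^2 - 1 = 0 (assumed form)."""
    return c * c + s * s - 1.0


def unit_to_yaw(c, s):
    """Recover the yaw angle in (-pi, pi] from the decision variables."""
    return math.atan2(s, c)


def chord_distance_sq(a, b):
    """Squared distance between two headings in (c, s) space."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


if __name__ == "__main__":
    # Two headings on either side of the +/-180 degree wrap-around.
    a = yaw_to_unit(math.radians(179.0))
    b = yaw_to_unit(math.radians(-179.0))
    naive_gap = abs(math.radians(179.0) - math.radians(-179.0))
    print(f"naive angle gap: {math.degrees(naive_gap):.1f} deg")
    print(f"squared chord distance: {chord_distance_sq(a, b):.5f}")
```

In the angle domain the two headings appear 358 degrees apart, while in (c, s) space they are close together. That is the kind of wrap-around discontinuity a global parameterization avoids.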

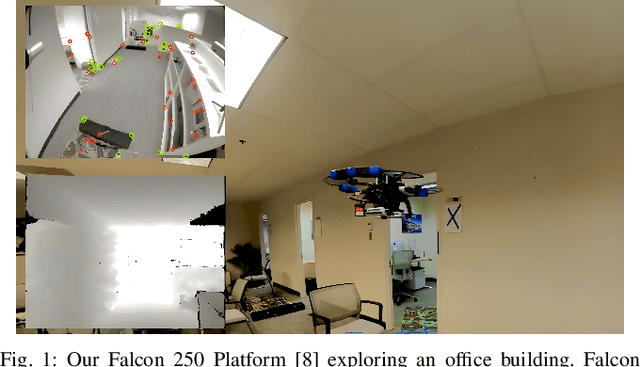

3D Active Metric-Semantic SLAM

Sep 13, 2023

Abstract:In this letter, we address the problem of exploration and metric-semantic mapping of multi-floor GPS-denied indoor environments using Size Weight and Power (SWaP) constrained aerial robots. Most previous work in exploration assumes that robot localization is solved. However, neglecting the state uncertainty of the agent can ultimately lead to cascading errors both in the resulting map and in the state of the agent itself. Furthermore, actions that reduce localization errors may be at direct odds with the exploration task. We propose a framework that balances the efficiency of exploration with actions that reduce the state uncertainty of the agent. In particular, our algorithmic approach for active metric-semantic SLAM is built upon sparse information abstracted from raw problem data, to make it suitable for SWaP-constrained robots. Furthermore, we integrate this framework within a fully autonomous aerial robotic system that achieves autonomous exploration in cluttered, 3D environments. From extensive real-world experiments, we showed that by including Semantic Loop Closure (SLC), we can reduce the robot pose estimation errors by over 90% in translation and approximately 75% in yaw, and the uncertainties in pose estimates and semantic maps by over 70% and 65%, respectively. Although discussed in the context of indoor multi-floor exploration, our system can be used for various other applications, such as infrastructure inspection and precision agriculture where reliable GPS data may not be available.

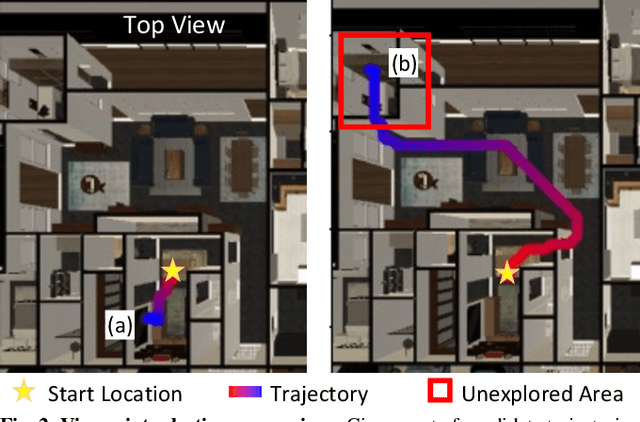

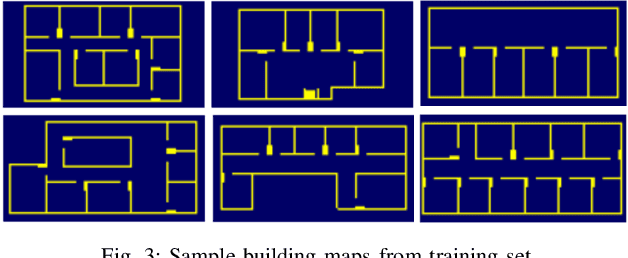

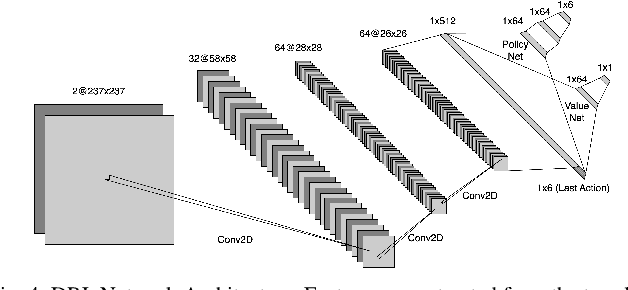

Learning to Explore Indoor Environments using Autonomous Micro Aerial Vehicles

Sep 13, 2023

Abstract:In this paper, we address the challenge of exploring unknown indoor environments using autonomous aerial robots with Size Weight and Power (SWaP) constraints. The SWaP constraints induce limits on mission time, requiring efficiency in exploration. We present a novel exploration framework that uses Deep Learning (DL) to predict the most likely indoor map given the previous observations, and Deep Reinforcement Learning (DRL) for exploration, designed to run on modern SWaP-constrained neural processors. The DL-based map predictor provides a prediction of the occupancy of the unseen environment while the DRL-based planner determines the best navigation goals that can be safely reached to provide the most information. The two modules are tightly coupled and run onboard, allowing the vehicle to safely map an unknown environment. Extensive experimental and simulation results show that our approach surpasses state-of-the-art methods by 50-60% in efficiency, which we measure by the fraction of the explored space as a function of the length of the trajectory traveled.
