Anish Bhattacharya

Monocular Event-Based Vision for Obstacle Avoidance with a Quadrotor

Nov 05, 2024

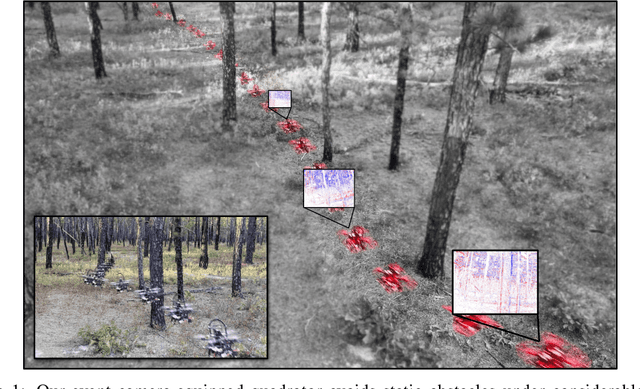

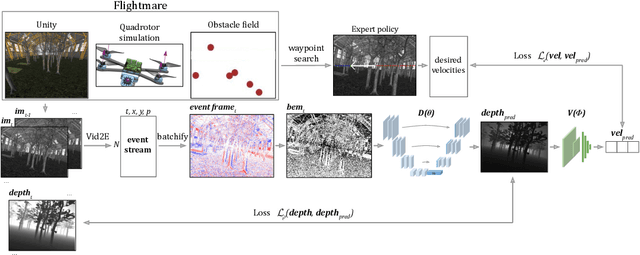

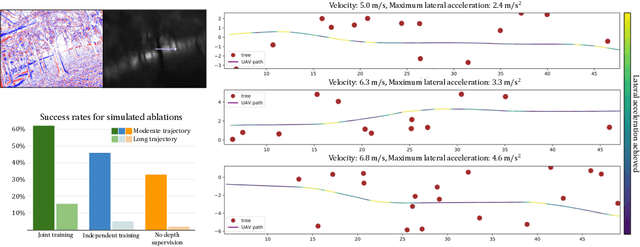

Abstract: We present the first static-obstacle avoidance method for quadrotors using just an onboard, monocular event camera. Quadrotors are capable of fast and agile flight in cluttered environments when piloted manually, but vision-based autonomous flight in unknown environments is difficult in part due to the sensor limitations of traditional onboard cameras. Event cameras promise nearly zero motion blur and high dynamic range, but they produce a very large volume of events under significant ego-motion and lack a continuous-time sensor model in simulation, which makes direct sim-to-real transfer impossible. By leveraging depth prediction as a pretext task in our learning framework, we can pre-train a reactive obstacle avoidance events-to-control policy with approximated, simulated events and then fine-tune the perception component with limited events-and-depth real-world data to achieve obstacle avoidance in indoor and outdoor settings. We demonstrate this across two quadrotor-event camera platforms in multiple settings and find, contrary to traditional vision-based works, that low speeds (1 m/s) make the task harder and more prone to collisions, while high speeds (5 m/s) result in better event-based depth estimation and avoidance. We also find that success rates in outdoor scenes can be significantly higher than in certain indoor scenes.

* 18 pages with supplementary

Vision Transformers for End-to-End Vision-Based Quadrotor Obstacle Avoidance

May 16, 2024

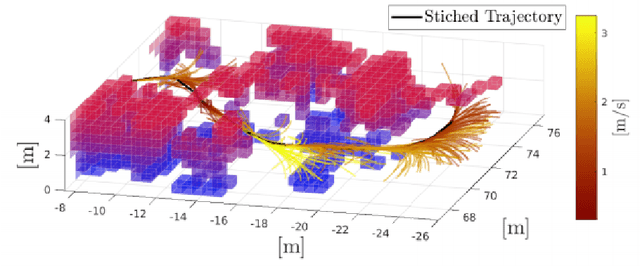

Abstract: We demonstrate the capabilities of an attention-based end-to-end approach for high-speed quadrotor obstacle avoidance in dense, cluttered environments, with comparison to various state-of-the-art architectures. Quadrotor unmanned aerial vehicles (UAVs) have tremendous maneuverability when flown fast; however, as flight speed increases, traditional vision-based navigation via independent mapping, planning, and control modules breaks down due to increased sensor noise, compounding errors, and increased processing latency. Thus, learning-based, end-to-end planning and control networks have been shown to be effective for online control of these fast robots through cluttered environments. We train and compare convolutional, U-Net, and recurrent architectures against vision transformer models for depth-based end-to-end control, in a photorealistic, high-physics-fidelity simulator as well as in hardware, and observe that the attention-based models are more effective as quadrotor speeds increase, while recurrent models with many layers provide smoother commands at lower speeds. To the best of our knowledge, this is the first work to utilize vision transformers for end-to-end vision-based quadrotor control.

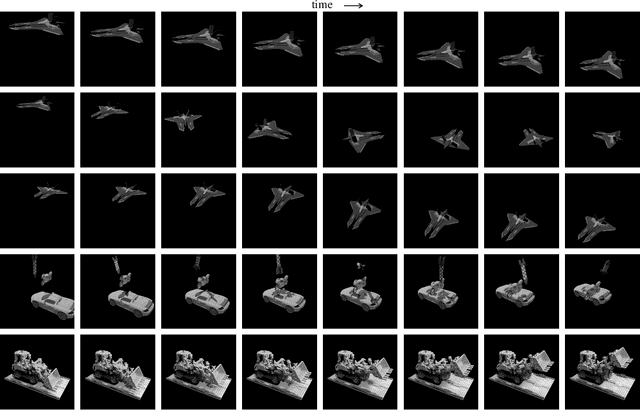

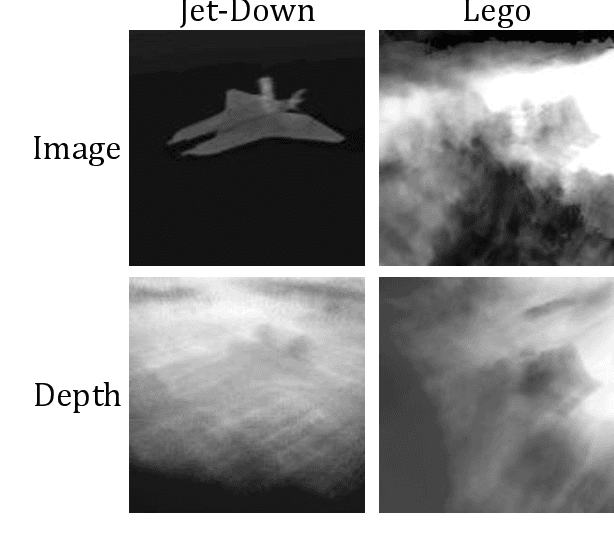

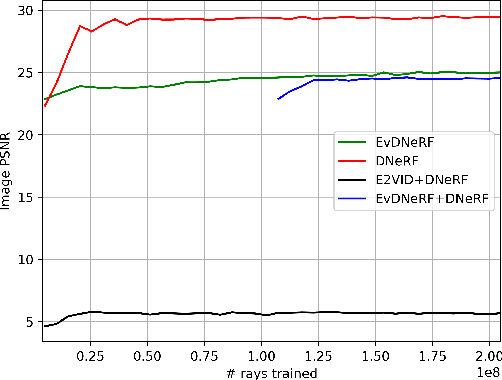

EvDNeRF: Reconstructing Event Data with Dynamic Neural Radiance Fields

Oct 03, 2023

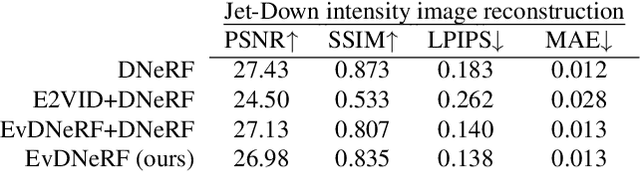

Abstract: We present EvDNeRF, a pipeline for generating event data and training an event-based dynamic NeRF, for the purpose of faithfully reconstructing eventstreams on scenes with rigid and non-rigid deformations that may be too fast to capture with a standard camera. Event cameras register asynchronous per-pixel brightness changes at MHz rates with high dynamic range, making them ideal for observing fast motion with almost no motion blur. Neural radiance fields (NeRFs) offer visual-quality, geometry-based learnable rendering, but prior work with events has only considered reconstruction of static scenes. Our EvDNeRF can predict eventstreams of dynamic scenes from a static or moving viewpoint between any desired timestamps, thereby allowing it to be used as an event-based simulator for a given scene. We show that by training on varied batch sizes of events, we can improve test-time predictions of events at fine time resolutions, outperforming baselines that pair standard dynamic NeRFs with event simulators. We release our simulated and real datasets, as well as code for both event-based data generation and the training of event-based dynamic NeRF models (https://github.com/anish-bhattacharya/EvDNeRF).
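The per-pixel brightness-change behavior described above is often approximated by thresholding the log-intensity difference between two frames. The sketch below illustrates that common approximation only; it is not the EvDNeRF pipeline, and the function name `events_between_frames`, the threshold of 0.2, and the toy frames are assumptions made for illustration. A real sensor emits timestamped events asynchronously and can fire several events per pixel between frames, which this sketch ignores.

```python
import math

def events_between_frames(prev, curr, threshold=0.2, eps=1e-3):
    """Return (x, y, polarity) for pixels whose log-brightness change
    between two frames meets the contrast threshold (hypothetical helper)."""
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            # eps avoids log(0) for fully dark pixels
            delta = math.log(c + eps) - math.log(p + eps)
            if abs(delta) >= threshold:
                events.append((x, y, 1 if delta > 0 else -1))
    return events

# Toy 2x2 frames with intensities in [0, 1]: one pixel brightens, one darkens.
prev = [[0.5, 0.5], [0.5, 0.5]]
curr = [[0.5, 0.9], [0.2, 0.5]]
events = events_between_frames(prev, curr)
print(events)
```

Pixels whose brightness does not change produce no events, which is why a static scene viewed from a static camera yields almost no data.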

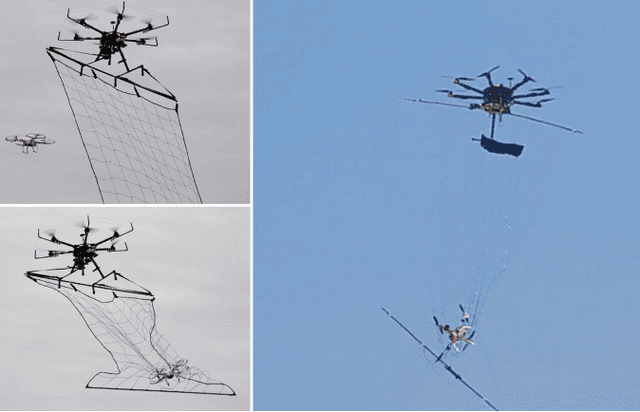

Toward Increased Airspace Safety: Quadrotor Guidance for Targeting Aerial Objects

Jul 04, 2021

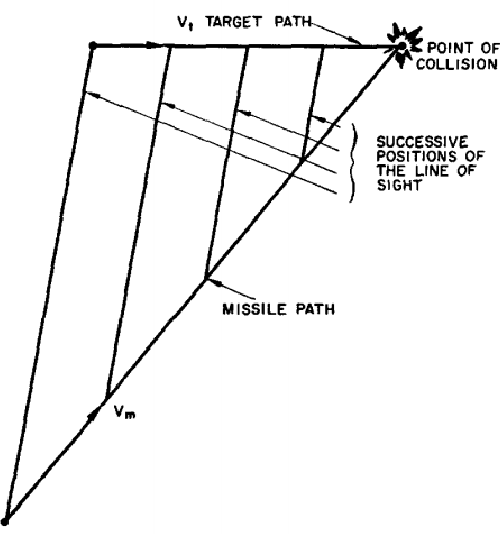

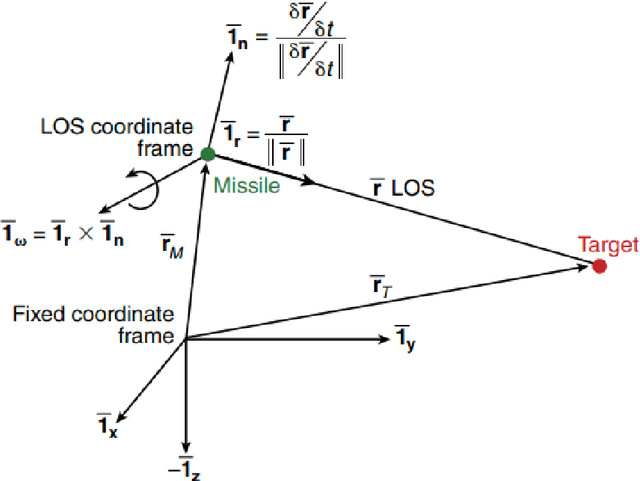

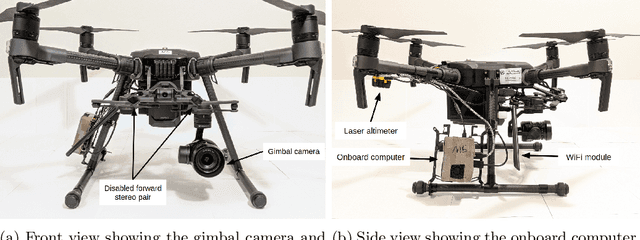

Abstract: As the market for commercially available unmanned aerial vehicles (UAVs) booms, there is an increasing number of small, teleoperated or autonomous aircraft found in protected or sensitive airspace. Existing solutions for removal of these aircraft are either military-grade and too disruptive for domestic use, or consist of cumbersomely teleoperated counter-UAV vehicles that have proven ineffective in high-profile domestic cases. In this work, we examine the use of a quadrotor for autonomously targeting semi-stationary and moving aerial objects with little or no prior knowledge of the target's flight characteristics. Guidance and control commands are generated with information just from an onboard monocular camera. We draw inspiration from literature in missile guidance, and demonstrate an optimal guidance method implemented on a quadrotor but not usable by missiles. Results are presented for first-pass hit success and pursuit duration with various methods. Finally, we cover the CMU Team Tartan entry in the MBZIRC 2020 Challenge 1 competition, demonstrating the effectiveness of simple line-of-sight guidance methods in a structured competition setting.
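As a rough illustration of what line-of-sight guidance from a monocular camera can look like, the sketch below back-projects a detected target pixel to a bearing ray with a pinhole camera model and commands a fixed-speed velocity along that ray. This is a generic pure-pursuit sketch, not the method evaluated in the work; the function name `los_velocity_command`, the intrinsics, and the speed are all assumed values, and the command is expressed in the camera frame (z forward).

```python
import math

def los_velocity_command(u, v, fx, fy, cx, cy, speed):
    """Velocity command along the line of sight to pixel (u, v),
    in the camera frame (hypothetical helper, pinhole model)."""
    # Back-project the pixel to a ray; depth is unknown, so only direction is used.
    ray = ((u - cx) / fx, (v - cy) / fy, 1.0)
    norm = math.sqrt(sum(c * c for c in ray))
    return tuple(speed * c / norm for c in ray)

# Target detected exactly at the principal point: fly straight ahead.
cmd = los_velocity_command(u=320, v=240, fx=400.0, fy=400.0,
                           cx=320.0, cy=240.0, speed=2.0)
print(cmd)
```

Because only the bearing is needed, no range estimate to the target is required, which is what makes this family of methods usable with a single camera.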

Carnegie Mellon Team Tartan: Mission-level Robustness with Rapidly Deployed Autonomous Aerial Vehicles in the MBZIRC 2020

Jul 03, 2021

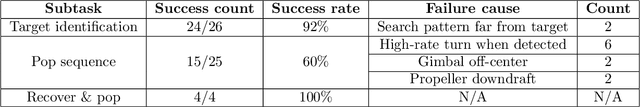

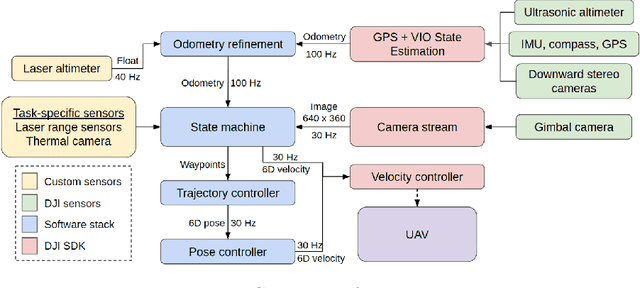

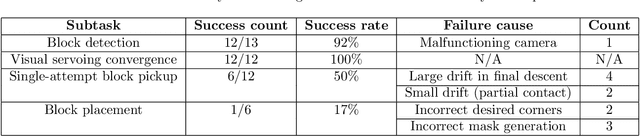

Abstract: For robotic systems to be used in high-risk, real-world situations, they have to be quickly deployable and robust to environmental changes, under-performing hardware, and mission subtask failures. Robots are often designed to consider a single sequence of mission events, with complex algorithms lowering individual subtask failure rates under some critical constraints. Our approach is to leverage common techniques in vision and control and encode robustness into mission structure through outcome monitoring and recovery strategies, aided by a system infrastructure that allows for quick mission deployments under tight time constraints and no central communication. We also detail lessons in rapid field robotics development and testing. Systems were developed and evaluated through real-robot experiments at an outdoor test site in Pittsburgh, Pennsylvania, USA, as well as in the 2020 Mohamed Bin Zayed International Robotics Challenge. All competition trials were completed in fully autonomous mode without RTK-GPS. Our system led to 4th place in Challenge 2 and 7th place in the Grand Challenge, and achievements like popping five balloons (Challenge 1), successfully picking and placing a block (Challenge 2), and dispensing the most water autonomously with a UAV of all teams onto an outdoor, real fire (Challenge 3).
