Siming He

Estimating the Diameter at Breast Height of Trees in a Forest With a Single 360 Camera

May 06, 2025

Abstract: Forest inventories rely on accurate measurements of the diameter at breast height (DBH) for ecological monitoring, resource management, and carbon accounting. While LiDAR-based techniques can achieve centimeter-level precision, they are cost-prohibitive and operationally complex. We present a low-cost alternative that needs only a consumer-grade 360 video camera. Our semi-automated pipeline comprises (i) dense point cloud reconstruction using the Structure from Motion (SfM) photogrammetry software Agisoft Metashape, (ii) semantic trunk segmentation by projecting Grounded Segment Anything (SAM) masks onto the 3D cloud, and (iii) a robust RANSAC-based technique to estimate cross-section shape and DBH. We introduce an interactive visualization tool for inspecting segmented trees and their estimated DBH. On 61 acquisitions of 43 trees under a variety of conditions, our method attains median absolute relative errors of 5-9% with respect to "ground-truth" manual measurements. This is only 2-4% higher than LiDAR-based estimates, while employing a single 360 camera that costs orders of magnitude less, requires minimal setup, and is widely available.
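As a rough illustration of step (iii), the sketch below fits a circle to a 2D slice of trunk points with RANSAC and reports its diameter. It is not the authors' implementation: the circular cross-section model, the function names, and the `tol` and `n_iters` values are all assumptions made for this example, and the abstract does not say which cross-section shape the pipeline actually fits.

```python
import numpy as np

def fit_circle_3pts(p):
    """Circle through three 2D points; returns (cx, cy, r) or None if collinear."""
    (x1, y1), (x2, y2), (x3, y3) = p
    # Equal distance from the centre to each point gives a 2x2 linear system.
    a = np.array([[x2 - x1, y2 - y1], [x3 - x1, y3 - y1]], dtype=float)
    b = 0.5 * np.array([x2**2 - x1**2 + y2**2 - y1**2,
                        x3**2 - x1**2 + y3**2 - y1**2])
    if abs(np.linalg.det(a)) < 1e-12:
        return None
    cx, cy = np.linalg.solve(a, b)
    return cx, cy, np.hypot(x1 - cx, y1 - cy)

def ransac_circle(points, n_iters=500, tol=0.01, seed=0):
    """Return the circle (cx, cy, r) with the most inliers, and that inlier count.

    points: (N, 2) array of trunk points projected onto a horizontal plane,
            in metres (hypothetical input format).
    tol:    maximum radial distance (metres) for a point to count as an inlier.
    """
    rng = np.random.default_rng(seed)
    best, best_count = None, -1
    for _ in range(n_iters):
        sample = points[rng.choice(len(points), size=3, replace=False)]
        model = fit_circle_3pts(sample)
        if model is None:
            continue
        cx, cy, r = model
        resid = np.abs(np.hypot(points[:, 0] - cx, points[:, 1] - cy) - r)
        count = int((resid < tol).sum())
        if count > best_count:
            best, best_count = model, count
    return best, best_count
```

Under these assumptions the DBH estimate would be `2 * r` for the returned circle. A practical pipeline would likely refine the winning model with a least-squares fit over its inliers.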

From NeRFs to Gaussian Splats, and Back

May 15, 2024

Abstract: For robotics applications where there is a limited number of (typically ego-centric) views, parametric representations such as neural radiance fields (NeRFs) generalize better than non-parametric ones such as Gaussian splatting (GS) to views that are very different from those in the training data; GS, however, can render much faster than NeRFs. We develop a procedure to convert back and forth between the two. Our approach achieves the best of both NeRFs (superior PSNR, SSIM, and LPIPS on dissimilar views, and a compact representation) and GS (real-time rendering and the ability to easily modify the representation); the computational cost of these conversions is minor compared to training the two from scratch.
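For readers unfamiliar with the image-quality metrics named above, PSNR is the simplest to state: it is ten times the base-10 logarithm of the squared peak value divided by the mean squared error. The helper below is a generic sketch with a hypothetical name, assuming images stored as arrays with values in `[0, max_value]`; it is not taken from the paper's code.

```python
import numpy as np

def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of the same shape."""
    ref = np.asarray(reference, dtype=np.float64)
    out = np.asarray(rendered, dtype=np.float64)
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)
```

Higher PSNR means the rendered view is closer to the reference; SSIM and LPIPS capture structural and perceptual similarity and need dedicated implementations.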

An Active Perception Game for Robust Autonomous Exploration

Mar 31, 2024

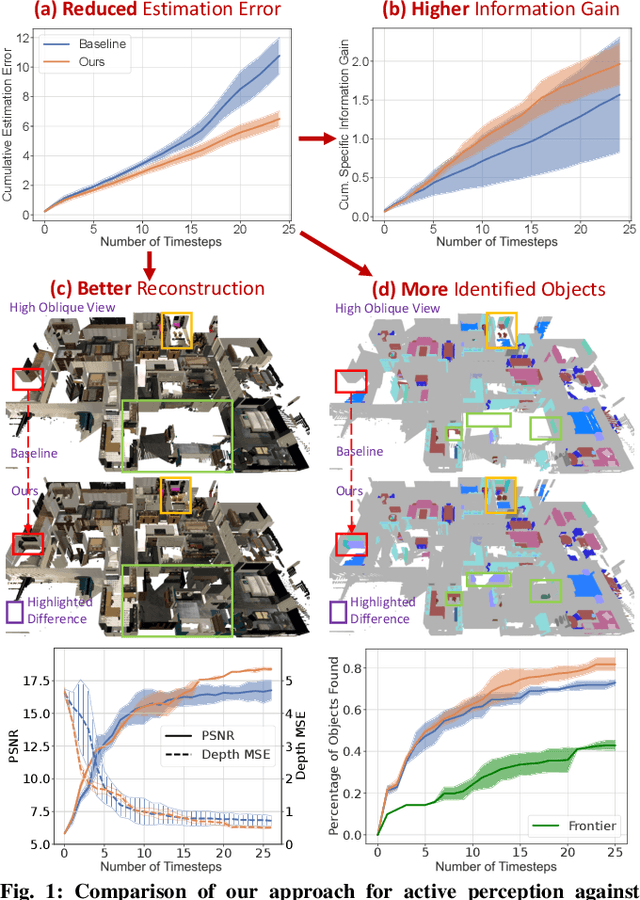

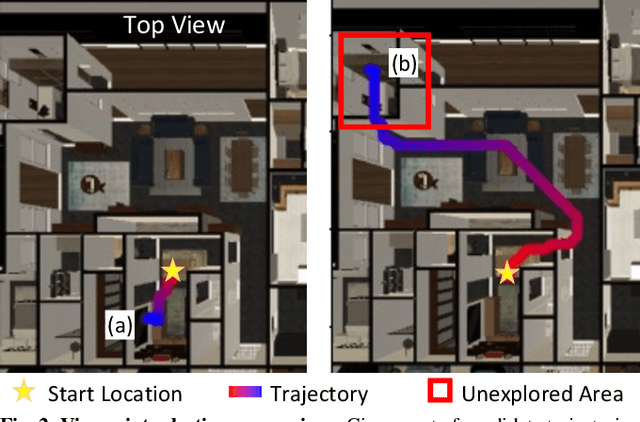

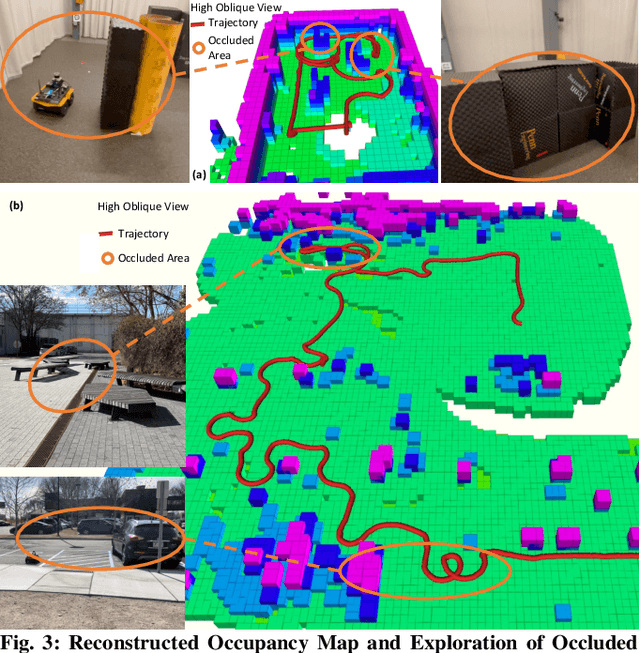

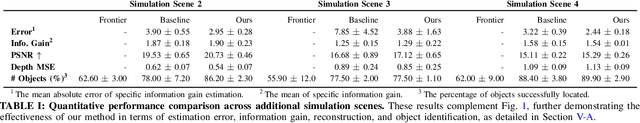

Abstract: We formulate active perception for an autonomous agent that explores an unknown environment as a two-player zero-sum game: the agent aims to maximize information gained from the environment while the environment aims to minimize the information gained by the agent. In each episode, the environment reveals a set of actions with their potentially erroneous information gain. To select the best action, the robot needs to recover the true information gain from the erroneous one. The robot does so by minimizing the discrepancy between its estimate of information gain and the true information gain it observes after taking the action. We propose an online convex optimization algorithm that achieves sub-linear expected regret $O(T^{3/4})$ for estimating the information gain. We also provide a bound on the regret of active perception performed by any (near-)optimal prediction and trajectory selection algorithms. We evaluate this approach using semantic neural radiance fields (NeRFs) in simulated realistic 3D environments to show that the robot can discover up to 12% more objects using the improved estimate of the information gain. On the M3ED dataset, the proposed algorithm reduced the error of information gain prediction in occupancy maps by over 67%. In real-world experiments using occupancy maps on a Jackal ground robot, we show that this approach can calculate complicated trajectories that efficiently explore all occluded regions.
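The idea of correcting an erroneous information-gain estimate online can be illustrated with textbook projected online gradient descent on a squared loss. This is a generic sketch, not the algorithm proposed in the paper, and it does not carry the $O(T^{3/4})$ guarantee stated above. The linear correction model, the feature layout, and the names `radius` and `lr0` are assumptions for this example.

```python
import numpy as np

def online_gradient_descent(features, observed_gain, radius=10.0, lr0=0.5):
    """Learn weights w so that w @ x tracks the information gain seen after acting.

    features:      (T, d) array; row t describes the action chosen in round t,
                   e.g. [reported_gain, 1.0] (hypothetical layout).
    observed_gain: (T,) array of gains observed after taking each action.
    Returns the final weights and the per-round losses.
    """
    w = np.zeros(features.shape[1])
    losses = []
    for t, (x, y) in enumerate(zip(features, observed_gain), start=1):
        pred = w @ x                         # estimate before acting
        losses.append(0.5 * (pred - y) ** 2)
        grad = (pred - y) * x                # gradient of the squared loss
        w = w - (lr0 / np.sqrt(t)) * grad    # decaying step size
        norm = np.linalg.norm(w)
        if norm > radius:                    # project back onto an L2 ball
            w *= radius / norm
    return w, np.array(losses)
```

Each round incurs its loss before the update, which is the setting regret bounds are stated for: the learner is compared against the best fixed weights in hindsight.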

Active Perception using Neural Radiance Fields

Oct 15, 2023

Abstract: We study active perception from first principles to argue that an autonomous agent performing active perception should maximize the mutual information that past observations possess about future ones. Doing so requires (a) a representation of the scene that summarizes past observations and the ability to update this representation to incorporate new observations (state estimation and mapping), (b) the ability to synthesize new observations of the scene (a generative model), and (c) the ability to select control trajectories that maximize predictive information (planning). This motivates a neural radiance field (NeRF)-like representation which captures photometric, geometric and semantic properties of the scene grounded. This representation is well-suited to synthesizing new observations from different viewpoints. A sampling-based planner can therefore be used to calculate the predictive information from synthetic observations along dynamically feasible trajectories. We use active perception for exploring cluttered indoor environments and employ a notion of semantic uncertainty to check for the successful completion of an exploration task. We demonstrate these ideas via simulation in realistic 3D indoor environments.
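Requirement (c) can be sketched as scoring each sampled trajectory by how uncertain the model is about what it would see along it. The example below uses the summed Shannon entropy of predicted per-pixel class probabilities as a simple stand-in for predictive information; this proxy, the input format, and the function names are assumptions for illustration and are not the estimator used in the paper.

```python
import numpy as np

def entropy(probs, eps=1e-12):
    """Shannon entropy (nats) along the last axis of a probability array."""
    p = np.clip(probs, eps, 1.0)
    return -np.sum(p * np.log(p), axis=-1)

def select_trajectory(candidates):
    """Pick the candidate whose synthesized observations are most uncertain.

    candidates: list of (n_pixels, n_classes) arrays, one per sampled
                trajectory, holding class probabilities predicted for the
                views synthesized along it (hypothetical format).
    Returns the index of the best candidate and all scores.
    """
    scores = [float(entropy(c).sum()) for c in candidates]
    return int(np.argmax(scores)), scores
```

Views the model already predicts confidently score near zero, so the planner is steered toward regions it has not yet observed well.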

Are we really making much progress? Revisiting, benchmarking, and refining heterogeneous graph neural networks

Dec 30, 2021

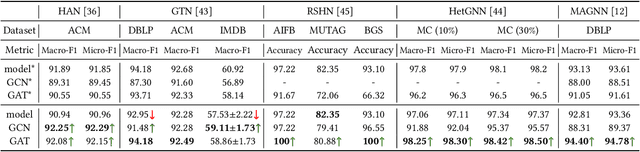

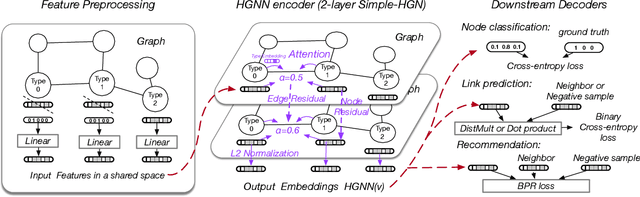

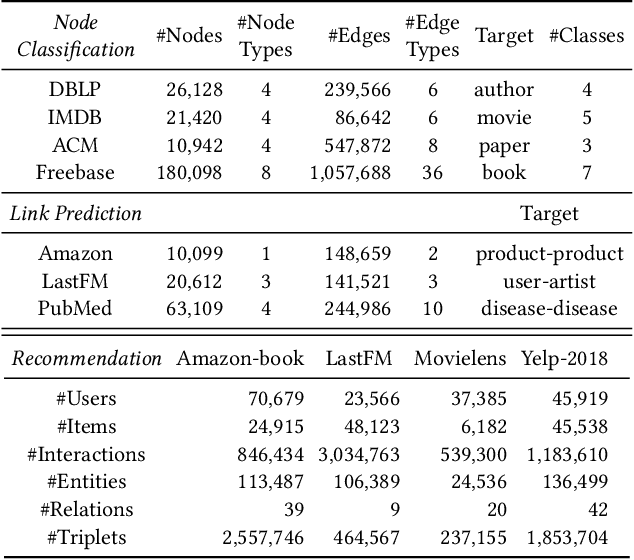

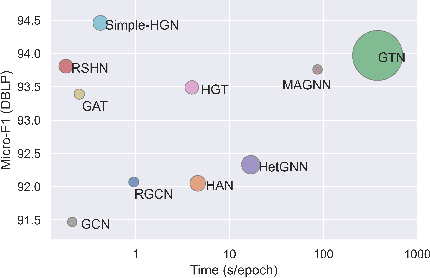

Abstract: Heterogeneous graph neural networks (HGNNs) have been blossoming in recent years, but the unique data processing and evaluation setups used by each work obstruct a full understanding of their advancements. In this work, we present a systematic reproduction of 12 recent HGNNs using their official code, datasets, settings, and hyperparameters, revealing surprising findings about the progress of HGNNs. We find that simple homogeneous GNNs, e.g., GCN and GAT, are largely underestimated due to improper settings. GAT with proper inputs can generally match or outperform all existing HGNNs across various scenarios. To facilitate robust and reproducible HGNN research, we construct the Heterogeneous Graph Benchmark (HGB), consisting of 11 diverse datasets with three tasks. HGB standardizes the process of heterogeneous graph data splits, feature processing, and performance evaluation. Finally, we introduce a simple but very strong baseline, Simple-HGN, which significantly outperforms all previous models on HGB, to accelerate the advancement of HGNNs in the future.
