Uri Heinemann

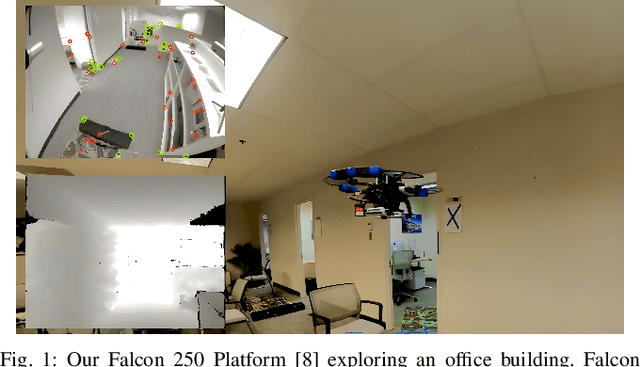

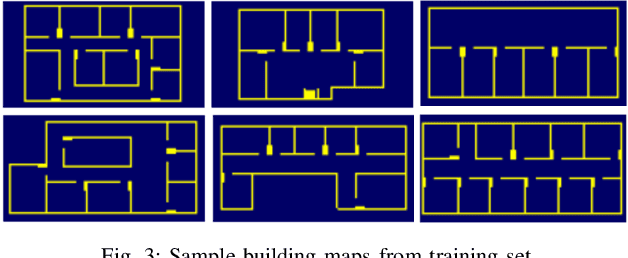

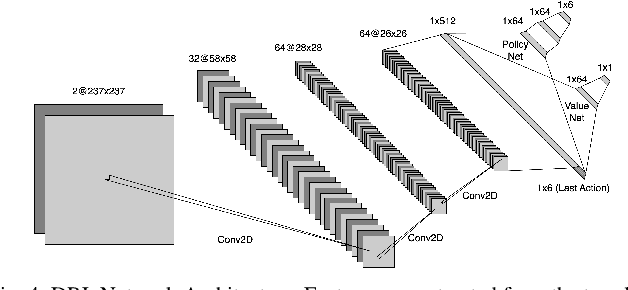

Learning to Explore Indoor Environments using Autonomous Micro Aerial Vehicles

Sep 13, 2023

Abstract: In this paper, we address the challenge of exploring unknown indoor environments using autonomous aerial robots with Size, Weight, and Power (SWaP) constraints. The SWaP constraints limit mission time, which demands efficient exploration. We present a novel exploration framework that uses Deep Learning (DL) to predict the most likely indoor map given the previous observations, and Deep Reinforcement Learning (DRL) for exploration, designed to run on modern SWaP-constrained neural processors. The DL-based map predictor provides a prediction of the occupancy of the unseen environment, while the DRL-based planner determines the best navigation goals that can be safely reached to provide the most information. The two modules are tightly coupled and run onboard, allowing the vehicle to safely map an unknown environment. Extensive experimental and simulation results show that our approach surpasses state-of-the-art methods by 50-60% in efficiency, which we measure by the fraction of the explored space as a function of the length of the trajectory traveled.

What Cannot be Learned with Bethe Approximations

Feb 14, 2012

Abstract: We address the problem of learning the parameters in graphical models when inference is intractable. A common strategy in this case is to replace the partition function with its Bethe approximation. We show that there exists a regime of empirical marginals where such Bethe learning will fail. By failure we mean that the empirical marginals cannot be recovered from the approximated maximum likelihood parameters (i.e., moment matching is not achieved). We provide several conditions on empirical marginals that yield outer and inner bounds on the set of Bethe learnable marginals. An interesting implication of our results is that there exists a large class of marginals that cannot be obtained as stable fixed points of belief propagation. Taken together, our results provide a novel approach to analyzing learning with Bethe approximations and highlight when it can be expected to work or fail.
