Guilherme V. Nardari

Large-scale Autonomous Flight with Real-time Semantic SLAM under Dense Forest Canopy

Sep 19, 2021

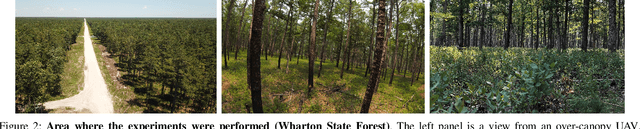

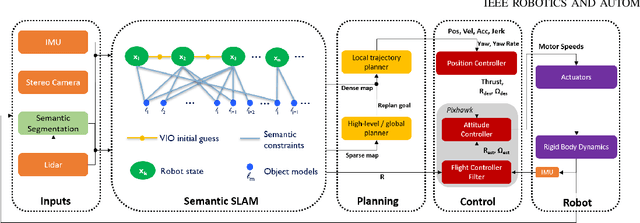

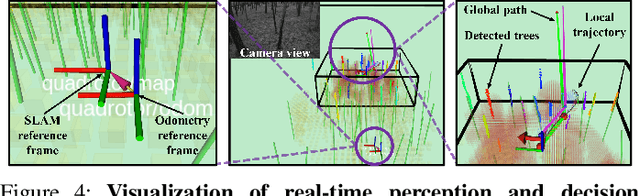

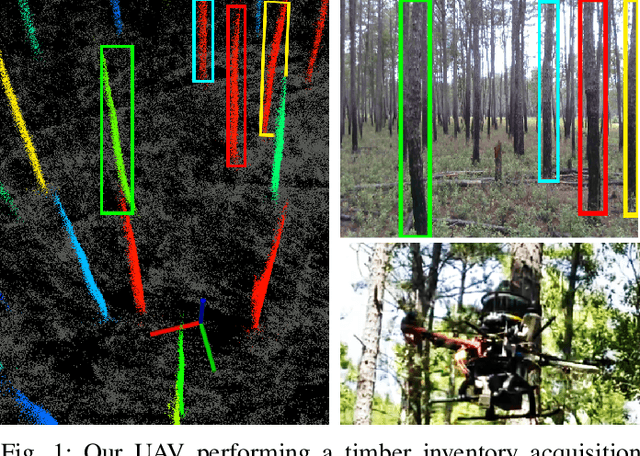

Abstract:In this letter, we propose an integrated autonomous flight and semantic SLAM system that can perform long-range missions and real-time semantic mapping in highly cluttered, unstructured, and GPS-denied under-canopy environments. First, tree trunks and ground planes are detected from LIDAR scans. We use a neural network and an instance extraction algorithm to enable semantic segmentation in real time onboard the UAV. Second, detected tree trunk instances are modeled as cylinders and associated across the whole LIDAR sequence. This semantic data association constraints both robot poses as well as trunk landmark models. The output of semantic SLAM is used in state estimation, planning, and control algorithms in real time. The global planner relies on a sparse map to plan the shortest path to the global goal, and the local trajectory planner uses a small but finely discretized robot-centric map to plan a dynamically feasible and collision-free trajectory to the local goal. Both the global path and local trajectory lead to drift-corrected goals, thus helping the UAV execute its mission accurately and safely.

Place Recognition in Forests with Urquhart Tessellations

Sep 23, 2020

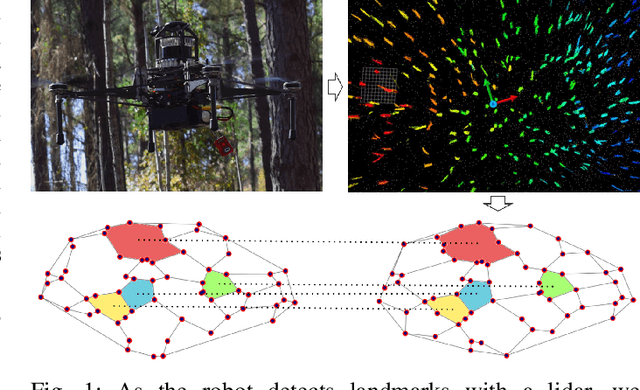

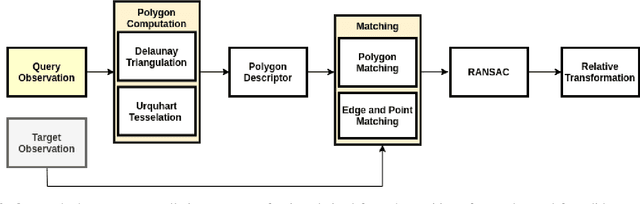

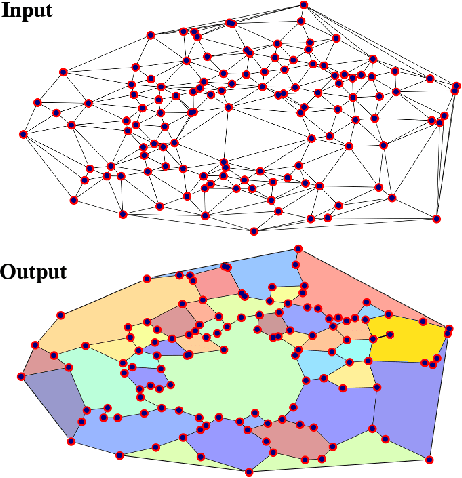

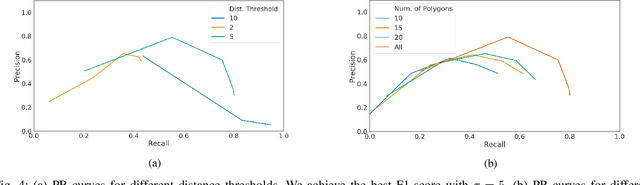

Abstract:In this letter we present a novel descriptor based on polygons derived from Urquhart tessellations on the position of trees in a forest detected from lidar scans. We present a framework that leverages these polygons to generate a signature that is used detect previously seen observations even with partial overlap and different levels of noise while also inferring landmark correspondences to compute an affine transformation between observations. We run loop-closure experiments in simulation and real-world data map-merging from different flights of an Unmanned Aerial Vehicle (UAV) in a pine tree forest and show that our method outperforms state-of-the-art approaches in accuracy and robustness.

SLOAM: Semantic Lidar Odometry and Mapping for Forest Inventory

Dec 29, 2019

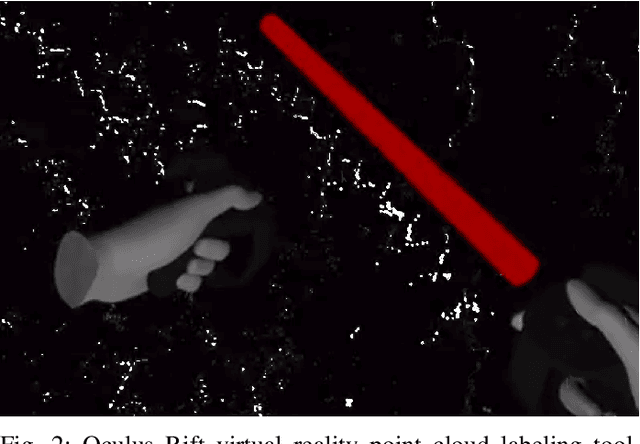

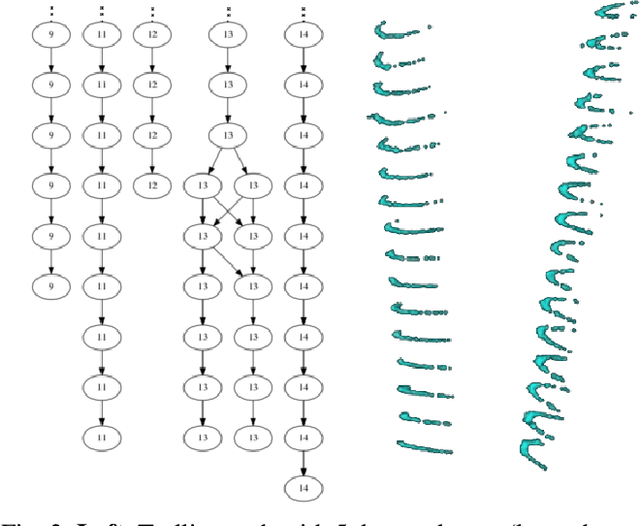

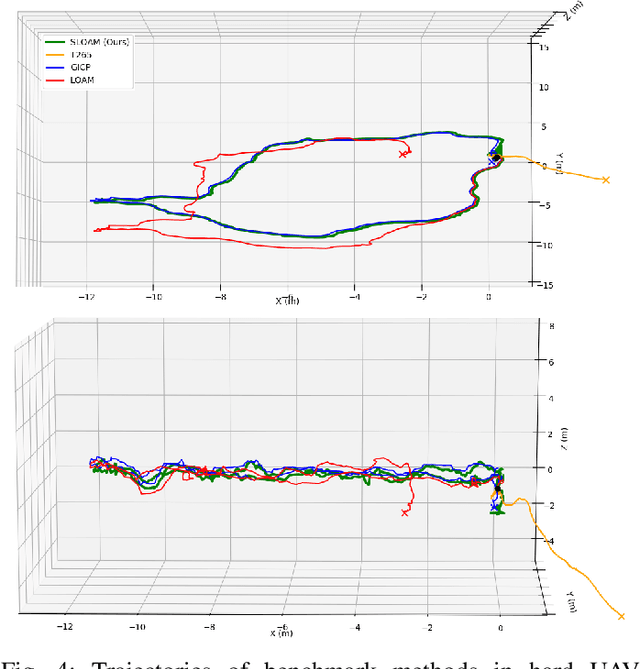

Abstract:This paper describes an end-to-end pipeline for tree diameter estimation based on semantic segmentation and lidar odometry and mapping. Accurate mapping of this type of environment is challenging since the ground and the trees are surrounded by leaves, thorns and vines, and the sensor typically experiences extreme motion. We propose a semantic feature based pose optimization that simultaneously refines the tree models while estimating the robot pose. The pipeline utilizes a custom virtual reality tool for labeling 3D scans that is used to train a semantic segmentation network. The masked point cloud is used to compute a trellis graph that identifies individual instances and extracts relevant features that are used by the SLAM module. We show that traditional lidar and image based methods fail in the forest environment on both Unmanned Aerial Vehicle (UAV) and hand-carry systems, while our method is more robust, scalable, and automatically generates tree diameter estimations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge