Thomas Donnelly

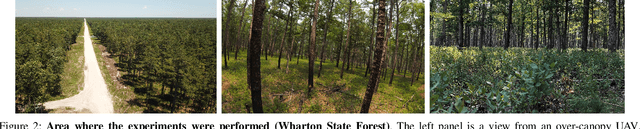

Large-scale Autonomous Flight with Real-time Semantic SLAM under Dense Forest Canopy

Sep 19, 2021

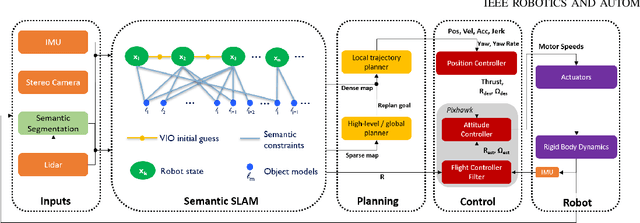

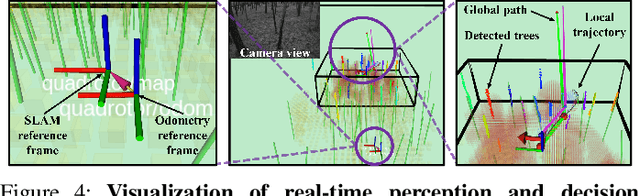

Abstract: In this letter, we propose an integrated autonomous flight and semantic SLAM system that can perform long-range missions and real-time semantic mapping in highly cluttered, unstructured, and GPS-denied under-canopy environments. First, tree trunks and ground planes are detected from LIDAR scans. We use a neural network and an instance extraction algorithm to enable semantic segmentation in real time onboard the UAV. Second, detected tree trunk instances are modeled as cylinders and associated across the whole LIDAR sequence. This semantic data association constrains both the robot poses and the trunk landmark models. The output of semantic SLAM is used in state estimation, planning, and control algorithms in real time. The global planner relies on a sparse map to plan the shortest path to the global goal, and the local trajectory planner uses a small but finely discretized robot-centric map to plan a dynamically feasible and collision-free trajectory to the local goal. Both the global path and local trajectory lead to drift-corrected goals, thus helping the UAV execute its mission accurately and safely.
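
To make the global planning step concrete, the following is a minimal sketch of a shortest-path search over a coarse occupancy grid. The abstract does not say which search algorithm or map representation the authors use, so everything here is an assumption made for illustration: Dijkstra's algorithm stands in for the planner, the map is a small 2D grid with 4-connected unit-cost moves, and the name `plan_global_path` is hypothetical.

```python
import heapq


def plan_global_path(grid, start, goal):
    """Shortest path on a coarse 4-connected grid using Dijkstra's algorithm.

    Illustrative assumption, not the paper's planner.
    grid[r][c] == 1 marks an occupied cell (for example, a tree trunk);
    start and goal are (row, col) tuples. Returns a list of cells from
    start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    parent = {}
    queue = [(0, start)]

    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale queue entry, a shorter route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0:
                nd = d + 1  # unit cost per move between free cells
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (nd, (nr, nc)))

    if goal not in dist:
        return None

    # Walk the parent links back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1]


# Example: a wall of occupied cells forces a detour around the right side.
sparse_map = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
path = plan_global_path(sparse_map, (0, 0), (2, 0))
```

In a system like the one described, the goal cell passed to such a search would be re-expressed in the drift-corrected frame each time the SLAM estimate is updated, and a separate local planner would turn the next segment of the path into a dynamically feasible trajectory.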
