Fernando Cladera Ojeda

Towards Understanding Underwater Weather Events in Rivers Using Autonomous Surface Vehicles

Dec 21, 2023

Abstract:Climate change has increased the frequency and severity of extreme weather events such as hurricanes and winter storms. The complex interplay of floods with tides, runoff, and sediment creates additional hazards -- including erosion and the undermining of urban infrastructure -- consequently impacting the health of our rivers and ecosystems. Observations of these underwater phenomena are rare, because satellites and sensors mounted on aerial vehicles cannot penetrate the murky waters. Autonomous Surface Vehicles (ASVs) provide a means to track and map these complex and dynamic underwater phenomena. This work highlights preliminary results of high-resolution data gathering with ASVs equipped with a suite of sensors capable of measuring physical and chemical parameters of the river. Measurements were acquired along the lower Schuylkill River in the Philadelphia area under high-tide and low-tide conditions. The data will be leveraged to improve our understanding of changes in bathymetry due to floods; the dynamics of mixing and stagnation zones and their impact on water quality; and the dynamics of suspension and resuspension of fine sediment. The data will also provide insight into the development of adaptive sampling strategies for ASVs that can maximize the information gain for future field experiments.

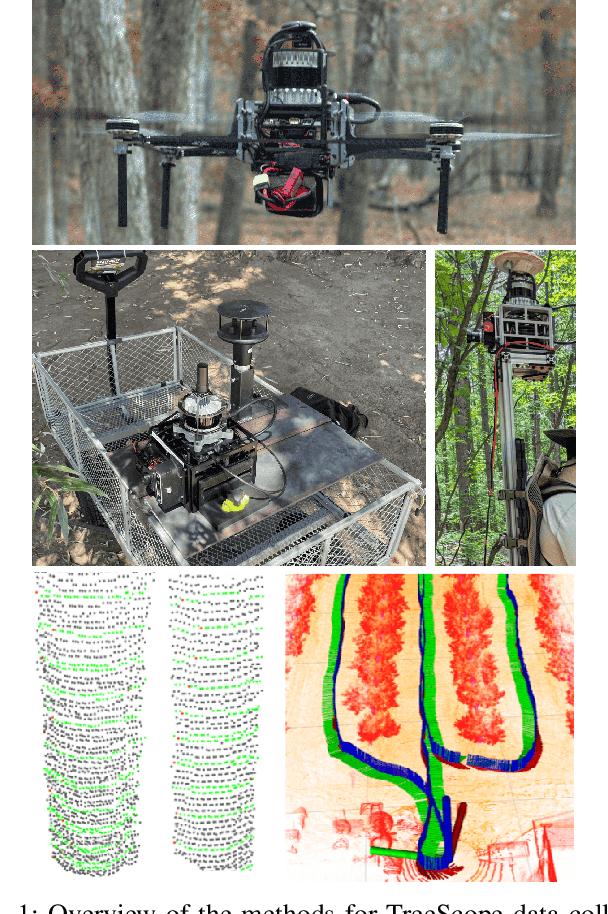

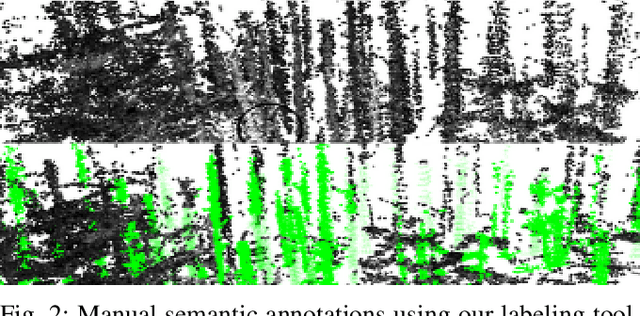

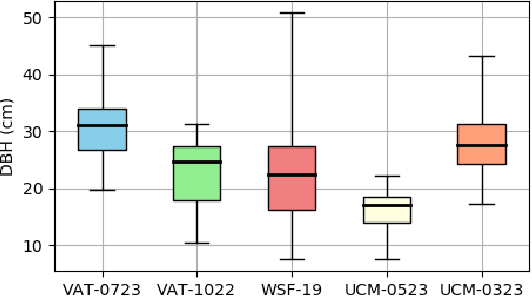

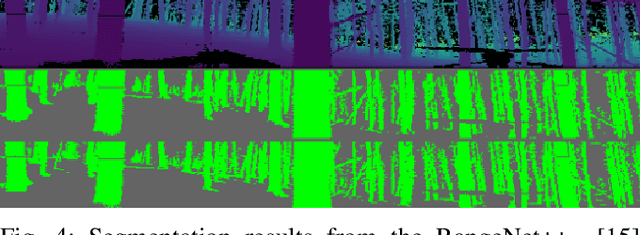

TreeScope: An Agricultural Robotics Dataset for LiDAR-Based Mapping of Trees in Forests and Orchards

Oct 03, 2023

Abstract:Data collection for forestry, timber, and agriculture currently relies on manual techniques that are labor-intensive and time-consuming. We seek to demonstrate that robotics offers improvements over these techniques and can accelerate agricultural research, beginning with semantic segmentation and diameter estimation of trees in forests and orchards. We present TreeScope v1.0, the first robotics dataset for precision agriculture and forestry addressing the counting and mapping of trees in forests and orchards. TreeScope provides LiDAR data from agricultural environments collected with robotics platforms, such as UAV and mobile robot platforms carried by vehicles and human operators. In the first release of this dataset, we provide ground-truth data with over 1,800 manually annotated semantic labels for tree stems and field-measured tree diameters. We share benchmark scripts for these tasks that researchers may use to evaluate the accuracy of their algorithms. Finally, we run our open-source diameter estimation and off-the-shelf semantic segmentation algorithms and share our baseline results.

EV-Catcher: High-Speed Object Catching Using Low-latency Event-based Neural Networks

Apr 14, 2023

Abstract:Event-based sensors have recently drawn increasing interest in robotic perception due to their lower latency, higher dynamic range, and lower bandwidth requirements compared to standard CMOS-based imagers. These properties make them ideal tools for real-time perception tasks in highly dynamic environments. In this work, we demonstrate an application where event cameras excel: accurately estimating the impact location of fast-moving objects. We introduce a lightweight event representation called Binary Event History Image (BEHI) to encode event data at low latency, as well as a learning-based approach that allows real-time inference of a confidence-enabled control signal to the robot. To validate our approach, we present an experimental catching system in which we catch fast-flying ping-pong balls. We show that the system is capable of achieving a success rate of 81% in catching balls targeted at different locations, with a velocity of up to 13 m/s even on compute-constrained embedded platforms such as the Nvidia Jetson NX.
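To make the idea of a binary event history concrete, here is a minimal sketch in Python. The abstract does not define BEHI, so this assumes the reading suggested by its name: a pixel is set to 1 if at least one event fired there within the history window, regardless of polarity. The function name, the event tuple layout, and the toy values are hypothetical and are not taken from the paper.

```python
from typing import Iterable, List, Tuple

# Assumed event layout: (timestamp in seconds, x, y). Polarity is ignored here.
Event = Tuple[float, int, int]


def binary_event_history_image(
    events: Iterable[Event],
    width: int,
    height: int,
    t_start: float,
    t_end: float,
) -> List[List[int]]:
    """Return a height x width image with 1 where any event fell in [t_start, t_end]."""
    image = [[0] * width for _ in range(height)]
    for t, x, y in events:
        inside_window = t_start <= t <= t_end
        inside_frame = 0 <= x < width and 0 <= y < height
        if inside_window and inside_frame:
            image[y][x] = 1  # repeated events at the same pixel do not accumulate
    return image


# Toy stream: the last event falls outside the 10 ms window and is dropped.
events = [(0.001, 0, 0), (0.002, 1, 0), (0.003, 1, 0), (0.020, 2, 1)]
behi = binary_event_history_image(events, width=3, height=2, t_start=0.0, t_end=0.010)
```

Because each pixel stores a single bit and is written at most once per event, an encoding of this kind is cheap to build, which is consistent with the low-latency goal stated in the abstract.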

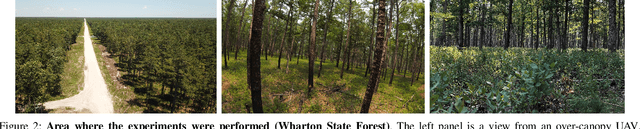

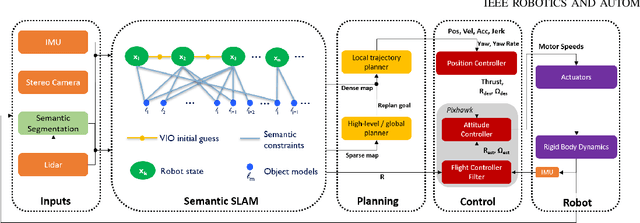

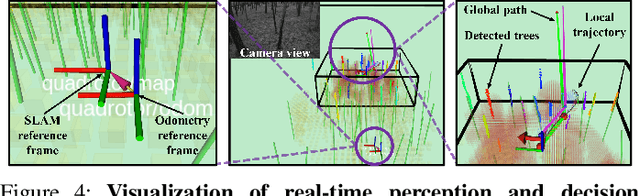

Large-scale Autonomous Flight with Real-time Semantic SLAM under Dense Forest Canopy

Sep 19, 2021

Abstract:In this letter, we propose an integrated autonomous flight and semantic SLAM system that can perform long-range missions and real-time semantic mapping in highly cluttered, unstructured, and GPS-denied under-canopy environments. First, tree trunks and ground planes are detected from LIDAR scans. We use a neural network and an instance extraction algorithm to enable semantic segmentation in real time onboard the UAV. Second, detected tree trunk instances are modeled as cylinders and associated across the whole LIDAR sequence. This semantic data association constrains both robot poses and trunk landmark models. The output of semantic SLAM is used in state estimation, planning, and control algorithms in real time. The global planner relies on a sparse map to plan the shortest path to the global goal, and the local trajectory planner uses a small but finely discretized robot-centric map to plan a dynamically feasible and collision-free trajectory to the local goal. Both the global path and local trajectory lead to drift-corrected goals, thus helping the UAV execute its mission accurately and safely.

Fast Motion Understanding with Spatiotemporal Neural Networks and Dynamic Vision Sensors

Nov 18, 2020

Abstract:This paper presents a Dynamic Vision Sensor (DVS) based system for reasoning about high-speed motion. As a representative scenario, we consider the case of a robot at rest reacting to a small, fast-approaching object at speeds higher than 15 m/s. Since conventional image sensors at typical frame rates observe such an object for only a few frames, estimating the underlying motion presents a considerable challenge for standard computer vision systems and algorithms. In this paper we present a method motivated by how animals such as insects solve this problem with their relatively simple vision systems. Our solution takes the event stream from a DVS and first encodes the temporal events with a set of causal exponential filters across multiple time scales. We couple these filters with a Convolutional Neural Network (CNN) to efficiently extract relevant spatiotemporal features. The combined network learns to output both the expected time to collision of the object and the predicted collision point on a discretized polar grid. These critical estimates are computed with minimal delay by the network in order to react appropriately to the incoming object. We highlight the results of our system on a toy dart moving at 23.4 m/s, with a 24.73° error in $\theta$, an 18.4 mm average discretized radius prediction error, and a 25.03% median time to collision prediction error.
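The encoding step described above (causal exponential filters at several time scales) can be sketched as follows. This is an illustrative sketch under stated assumptions, not the paper's implementation: each pixel keeps one decaying trace per time constant, every trace decays by `exp(-dt / tau)` between events, and an event adds 1 to the traces at its pixel. The class name, the time constants, and the unit increment are all hypothetical.

```python
import math
from typing import List, Sequence


class ExponentialFilterBank:
    """Per-pixel exponentially decaying event traces, one channel per time constant."""

    def __init__(self, width: int, height: int, time_constants: Sequence[float]):
        self.time_constants = list(time_constants)  # seconds, assumed values
        self.state: List[List[List[float]]] = [
            [[0.0] * width for _ in range(height)] for _ in self.time_constants
        ]
        self.last_time = 0.0

    def _decay_to(self, t: float) -> None:
        # Causal update: the state at time t depends only on events at or before t.
        dt = t - self.last_time
        if dt < 0:
            raise ValueError("events must arrive in non-decreasing time order")
        for channel, tau in zip(self.state, self.time_constants):
            factor = math.exp(-dt / tau)
            for row in channel:
                for i in range(len(row)):
                    row[i] *= factor
        self.last_time = t

    def add_event(self, t: float, x: int, y: int) -> None:
        self._decay_to(t)
        for channel in self.state:
            channel[y][x] += 1.0


# Two time scales (1 ms and 10 ms) on a 2 x 1 sensor, with two events 1 ms apart.
bank = ExponentialFilterBank(width=2, height=1, time_constants=[0.001, 0.01])
bank.add_event(0.0, x=0, y=0)
bank.add_event(0.001, x=1, y=0)
```

After the second event, the fast channel has already decayed the first event to `exp(-1)` of its value while the slow channel retains most of it, so the stack of channels carries information about how recently each pixel fired. A stack like this is the kind of multi-channel input a CNN could consume.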

Near-chip Dynamic Vision Filtering for Low-Bandwidth Pedestrian Detection

Apr 03, 2020

Abstract:This paper presents a novel end-to-end system for pedestrian detection using Dynamic Vision Sensors (DVSs). We target applications where multiple sensors transmit data to a local processing unit, which executes a detection algorithm. Our system is composed of (i) a near-chip event filter that compresses and denoises the event stream from the DVS, and (ii) a Binary Neural Network (BNN) detection module that runs on a low-computation edge computing device (in our case an STM32F4 microcontroller). We present the system architecture and provide an end-to-end implementation for pedestrian detection in an office environment. Our implementation reduces transmission size by up to 99.6% compared to transmitting the raw event stream. The average packet size in our system is only 1397 bits, while 307.2 kb are required to send an uncompressed DVS time window. Our detector is able to perform a detection every 450 ms, with an overall testing F1 score of 83%. The low bandwidth and energy properties of our system make it ideal for IoT applications.
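The bandwidth figures quoted above can be checked with a short calculation. This sketch assumes that "kb" means 1000 bits; the variable names are illustrative only.

```python
# Figures from the abstract.
avg_packet_bits = 1397
raw_window_kilobits = 307.2

# Assumption: 1 kb = 1000 bits.
raw_window_bits = raw_window_kilobits * 1000

# Fraction of the raw window size saved by an average-sized packet.
avg_reduction = 1 - avg_packet_bits / raw_window_bits
print(f"average reduction: {avg_reduction:.2%}")
```

Under that assumption the average packet corresponds to a reduction of roughly 99.5%, which is consistent with the stated best case of "up to 99.6%".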
