Shreyas Aditya

Quantitative Depth Quality Assessment of RGBD Cameras At Close Range Using 3D Printed Fixtures

Mar 21, 2019

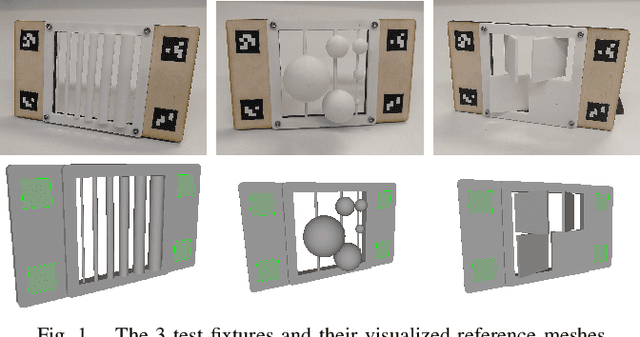

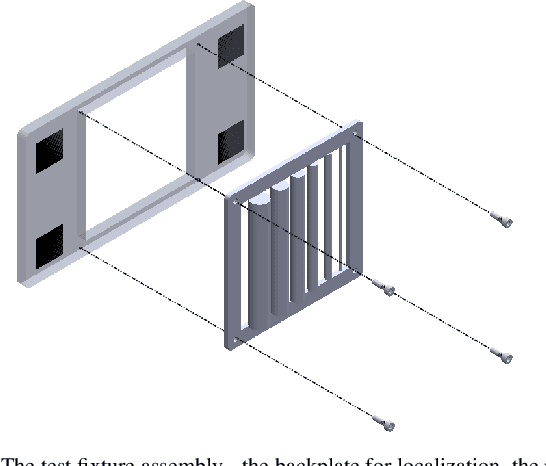

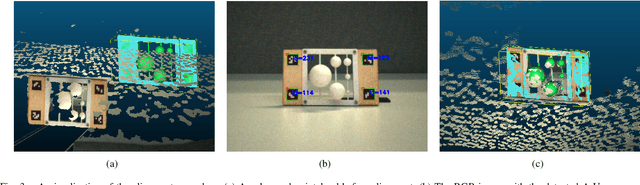

Abstract: Mobile robots that manipulate their environments require high-accuracy scene understanding at close range. Typically this understanding is achieved with RGBD cameras, but the evaluation process for selecting an appropriate RGBD camera for the application is minimally quantitative. Limited manufacturer-published metrics do not translate to observed quality in real-world cluttered environments, since quality is application-specific. To bridge the gap, we present a method for quantitatively measuring depth quality using a set of extendable 3D printed fixtures that approximate real-world conditions. By framing depth quality as point cloud density and root mean square error (RMSE) from a known geometry, we present a method that is extendable by other system integrators for custom environments. We show a comparison of three cameras and present a case study for camera selection, provide reference meshes and analysis code, and discuss further extensions.
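The two quality measures named in the abstract, point cloud density and RMSE from a known geometry, can be illustrated with a minimal sketch. This is not the authors' analysis code: it assumes the known geometry is a single flat plane (the paper uses reference meshes of printed fixtures), and it assumes density means points per unit of fixture surface area. The function names `plane_rmse` and `point_density` and the sample values are hypothetical.

```python
import math

def plane_rmse(points, normal, offset):
    """RMSE of point-to-plane distances for the plane normal . x = offset.

    Assumption: the reference geometry is a plane; a mesh-based reference
    would need point-to-mesh distances instead.
    """
    norm = math.sqrt(sum(c * c for c in normal))
    squared = []
    for p in points:
        # Signed distance from the point to the plane, in the points' units.
        d = (sum(pi * ni for pi, ni in zip(p, normal)) - offset) / norm
        squared.append(d * d)
    return math.sqrt(sum(squared) / len(squared))

def point_density(points, area):
    """Number of measured points per unit area of the reference surface."""
    return len(points) / area

# Hypothetical measurements (meters) of a 0.1 m x 0.1 m flat fixture at z = 0.
points = [
    (0.0, 0.0, 0.001),
    (0.1, 0.0, -0.001),
    (0.0, 0.1, 0.001),
    (0.1, 0.1, -0.001),
]
rmse = plane_rmse(points, normal=(0.0, 0.0, 1.0), offset=0.0)
density = point_density(points, area=0.1 * 0.1)
print(f"RMSE: {rmse:.4f} m, density: {density:.0f} points/m^2")
```

Under these assumptions, a lower RMSE indicates depth values closer to the fixture surface, and a higher density indicates fewer dropped depth pixels on that surface.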

Robust Fruit Counting: Combining Deep Learning, Tracking, and Structure from Motion

Aug 02, 2018

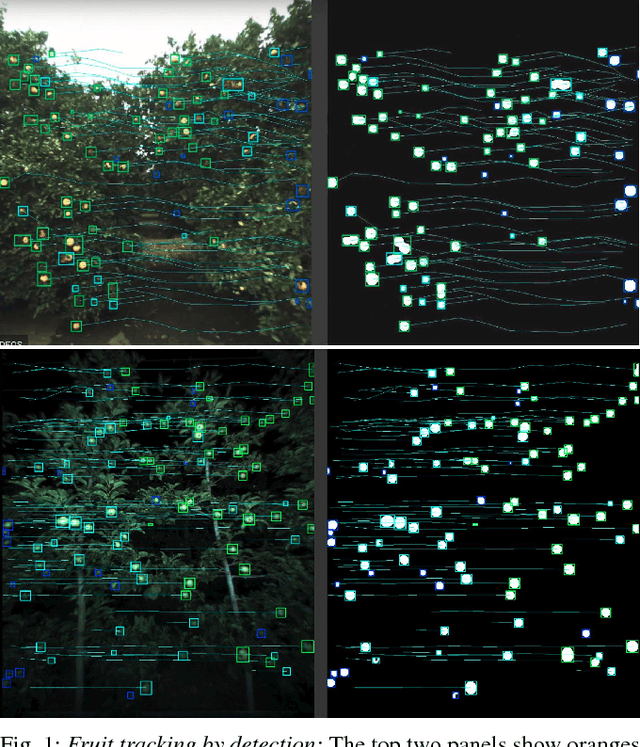

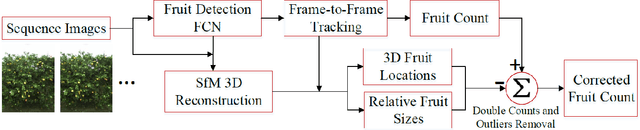

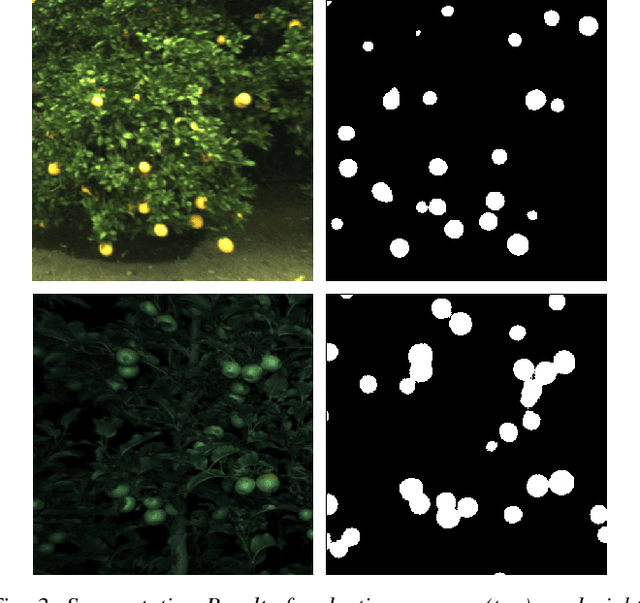

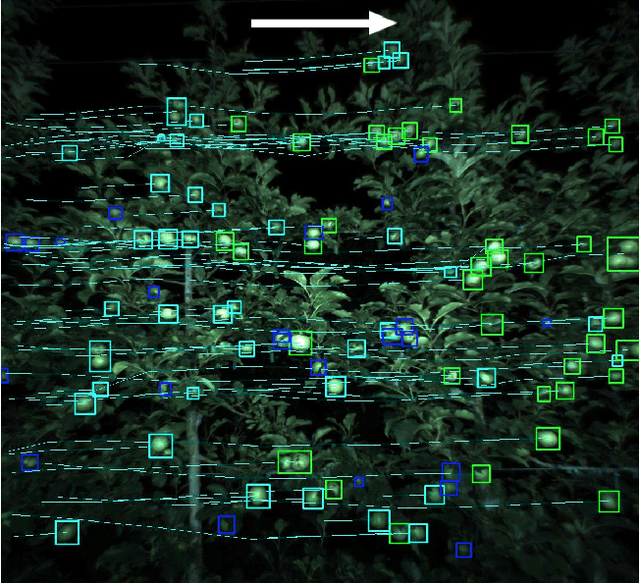

Abstract: We present a novel fruit counting pipeline that combines deep segmentation, frame-to-frame tracking, and 3D localization to accurately count visible fruits across a sequence of images. Our pipeline works on image streams from a monocular camera, both in natural light and with controlled illumination at night. We first train a Fully Convolutional Network (FCN) and segment video frame images into fruit and non-fruit pixels. We then track fruits across frames using the Hungarian Algorithm, where the objective cost is determined from a Kalman Filter-corrected Kanade-Lucas-Tomasi (KLT) Tracker. In order to correct the estimated count from the tracking process, we combine tracking results with a Structure from Motion (SfM) algorithm to calculate relative 3D locations and size estimates to reject outliers and double-counted fruit tracks. We evaluate our algorithm by comparing with ground-truth human-annotated visual counts. Our results demonstrate that our pipeline is able to accurately and reliably count fruits across image sequences, and the correction step can significantly improve the counting accuracy and robustness. Although discussed in the context of fruit counting, our work can extend to detection, tracking, and counting of a variety of other stationary features of interest such as leaf-spots, wilt, and blossom.
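The frame-to-frame association step can be sketched with SciPy's `linear_sum_assignment`, which solves the same assignment problem as the Hungarian Algorithm. This is a simplified illustration, not the paper's pipeline: the cost here is plain Euclidean distance between predicted track centers and detected fruit centers, whereas the abstract describes a cost derived from a Kalman Filter-corrected KLT tracker. The function name `associate`, the gating threshold `max_dist`, and the sample coordinates are assumptions.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def associate(predicted, detected, max_dist=20.0):
    """Match predicted track centers to detections with minimum total cost.

    Assumption: cost is Euclidean pixel distance; pairs farther apart than
    max_dist (a hypothetical gate) are rejected as non-matches.
    """
    predicted = np.asarray(predicted, dtype=float)
    detected = np.asarray(detected, dtype=float)
    # cost[i, j] = distance between track i and detection j.
    cost = np.linalg.norm(predicted[:, None, :] - detected[None, :, :], axis=2)
    rows, cols = linear_sum_assignment(cost)
    matches = [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] <= max_dist]
    matched_dets = {c for _, c in matches}
    unmatched = [j for j in range(len(detected)) if j not in matched_dets]
    return matches, unmatched

# Hypothetical pixel coordinates: two existing tracks, three new detections.
tracks = [[10.0, 10.0], [50.0, 50.0]]
detections = [[52.0, 49.0], [11.0, 10.0], [200.0, 200.0]]
matches, unmatched = associate(tracks, detections)
print("matches (track, detection):", matches)
print("unmatched detections:", unmatched)
```

In a counting setting, unmatched detections would typically start new tracks, so a gate like `max_dist` keeps a distant fruit from being forced onto an unrelated track.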
