Jnaneshwar Das

Towards Model Predictive Control for Acrobatic Quadrotor Flights

Jan 30, 2024

Abstract:This study explores modeling and control for quadrotor acrobatics, focusing on executing flip maneuvers. Flips are an elegant way to deliver sensor probes into no-fly or hazardous zones, like volcanic vents. Successful flips require feasible trajectories and precise control, influenced by rotor dynamics, thrust allocation, and control methodologies. The research introduces a novel approach using Model Predictive Control (MPC) for real-time trajectory planning. The MPC considers dynamic constraints and environmental variables, ensuring system stability during maneuvers. The proposed methodology's effectiveness is examined through simulation studies in ROS and Gazebo, providing insights into quadrotor behavior, response time, and trajectory accuracy. Real-time flight experiments on a custom agile quadrotor using PixHawk 4 and Hardkernel Odroid validate MPC-designed controllers. Experiments confirm successful execution and adaptability to real-world scenarios. Outcomes contribute to autonomous aerial robotics, especially aerial acrobatics, enhancing mission capabilities. MPC controllers find applications in probe throws and optimal image capture views through efficient flight paths, e.g., full roll maneuvers. This research paves the way for quadrotors in demanding scenarios, showcasing groundbreaking applications. Video Link: https://www.youtube.com/watch?v=UzR0PWjy9W4
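The receding-horizon idea behind MPC can be illustrated with a toy, single-axis example. This is a minimal sketch under stated assumptions and not the controller from the paper: the double-integrator roll model, the three candidate inputs, the cost weights, and the names `step` and `mpc_action` are all hypothetical. It searches every input sequence over a short horizon and applies only the first input of the cheapest one.

```python
import math
from itertools import product


def step(state, accel, dt=0.1):
    """Toy double-integrator model: state is (roll angle, roll rate)."""
    angle, rate = state
    return (angle + rate * dt, rate + accel * dt)


def mpc_action(state, target, horizon=4, inputs=(-20.0, 0.0, 20.0)):
    """Return the first input of the lowest-cost input sequence over the horizon."""
    best_cost, best_first = float("inf"), 0.0
    for sequence in product(inputs, repeat=horizon):
        s, cost = state, 0.0
        for u in sequence:
            s = step(s, u)
            # Penalize angle error, roll rate, and control effort (assumed weights).
            cost += (s[0] - target) ** 2 + 0.1 * s[1] ** 2 + 1e-4 * u ** 2
        if cost < best_cost:
            best_cost, best_first = cost, sequence[0]
    return best_first


# Starting level and at rest, a full roll (2*pi) calls for positive acceleration.
first_input = mpc_action((0.0, 0.0), 2 * math.pi)
```

Exhaustive search is only practical for a handful of inputs and a short horizon; a real-time controller would rely on a numerical optimizer and a full quadrotor model instead.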

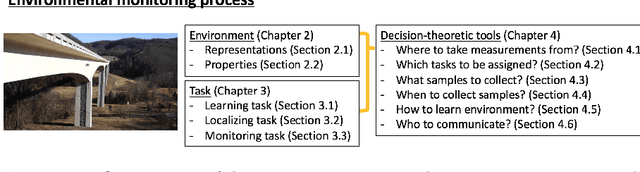

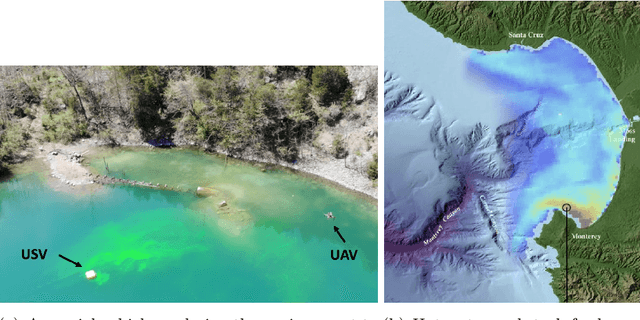

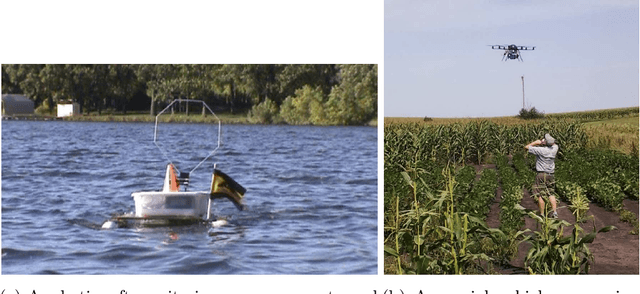

Decision-Theoretic Approaches for Robotic Environmental Monitoring -- A Survey

Aug 04, 2023

Abstract:Robotics has dramatically increased our ability to gather data about our environments. This is an opportune time for the robotics and algorithms community to come together to contribute novel solutions to pressing environmental monitoring problems. In order to do so, it is useful to consider a taxonomy of problems and methods in this realm. We present the first comprehensive summary of decision theoretic approaches that are enabling efficient sampling of various kinds of environmental processes. Representations for different kinds of environments are explored, followed by a discussion of tasks of interest such as learning, localization, or monitoring. Finally, various algorithms to carry out these tasks are presented, along with a few illustrative prior results from the community.

Shakebot: A Low-cost, Open-source Shake Table for Ground Motion Seismic Studies

Dec 21, 2022

Abstract:Our earlier research built a virtual shake robot in simulation to study the dynamics of precariously balanced rocks (PBR), which are negative indicators of earthquakes in nature. The simulation studies need validation through physical experiments. For this purpose, we developed Shakebot, a low-cost (under $2,000), open-source shake table to validate simulations of PBR dynamics and facilitate other ground motion experiments. The Shakebot is a custom one-dimensional prismatic robotic system with perception and motion software developed using the Robot Operating System (ROS). We adapted affordable and high-accuracy components from 3D printers, particularly a closed-loop stepper motor for actuation and a toothed belt for transmission. The stepper motor enables the bed to reach a maximum horizontal acceleration of 11.8 m/s^2 (1.2 g), and velocity of 0.5 m/s, when loaded with a 2 kg scale-model PBR. The perception system of the Shakebot consists of an accelerometer and a high frame-rate camera. By fusing camera-based displacements with acceleration measurements, the Shakebot is able to carry out accurate bed velocity estimation. The ROS-based perception and motion software simplifies the transition of code from our previous virtual shake robot to the physical Shakebot. The reuse of the control programs ensures that the implemented ground motions are consistent for both the simulation and physical experiments, which is critical to validate our simulation experiments.
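One simple way to picture the fusion of camera-based displacements with accelerometer readings is a complementary filter. The sketch below is an assumption for illustration, not Shakebot's actual estimator: the function name `fuse_velocity`, the blend factor `alpha`, and the sample data are hypothetical.

```python
def fuse_velocity(displacements, accelerations, dt, alpha=0.98):
    """Estimate bed velocity with a complementary filter (illustrative only).

    displacements: camera-based bed positions in metres, one per time step
    accelerations: accelerometer readings in m/s^2, one per time step
    dt: sample period in seconds
    alpha: assumed blend factor; values near 1 trust the accelerometer more
    """
    velocity = 0.0
    estimates = [velocity]
    for k in range(1, len(displacements)):
        # Short-term prediction: integrate the measured acceleration.
        predicted = velocity + accelerations[k] * dt
        # Long-term correction: finite-difference the camera displacement.
        camera_velocity = (displacements[k] - displacements[k - 1]) / dt
        velocity = alpha * predicted + (1.0 - alpha) * camera_velocity
        estimates.append(velocity)
    return estimates


# Bed moving at a constant 0.5 m/s with zero acceleration, sampled at 100 Hz.
positions = [0.5 * 0.01 * k for k in range(60)]
velocities = fuse_velocity(positions, [0.0] * 60, dt=0.01, alpha=0.5)
```

The accelerometer term follows fast changes, while the camera term removes the drift that pure integration would accumulate.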

Autonomous Robotic Mapping of Fragile Geologic Features

May 04, 2021

Abstract:Robotic mapping is useful in scientific applications that involve surveying unstructured environments. This paper presents a target-oriented mapping system for sparsely distributed geologic surface features, such as precariously balanced rocks (PBRs), whose geometric fragility parameters can provide valuable information on earthquake shaking history and landscape development for a region. With this geomorphology problem as the test domain, we demonstrate a pipeline for detecting, localizing, and precisely mapping fragile geologic features distributed on a landscape. To do so, we first carry out a lawn-mower search pattern in the survey region from a high elevation using an Unpiloted Aerial Vehicle (UAV). Once a potential PBR target is detected by a deep neural network, we track the bounding box in the image frames using a real-time tracking algorithm. The location and occupancy of the target in world coordinates are estimated using a sampling-based filtering algorithm, where a set of 3D points is re-sampled after weighting by the tracked bounding boxes from different camera perspectives. The converged 3D points provide a prior on the 3D bounding shape of a target, which is used for UAV path planning to closely and completely map the target with Simultaneous Localization and Mapping (SLAM). After target mapping, the UAV resumes the lawn-mower search pattern to find the next target. We introduce techniques to make the target mapping robust to false positives and missed detections from the neural network. Our target-oriented mapping system has the advantages of reducing map storage and emphasizing complete visible surface features on specified targets.
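The weighting and re-sampling step can be sketched for a single camera view as follows. This is a simplified illustration under assumptions, not the paper's implementation: the pinhole intrinsics, the hit and miss weights, and the names `project`, `inside`, and `resample` are hypothetical, and points are taken to be already expressed in the camera frame with positive depth.

```python
import random


def project(point, focal=500.0, cx=320.0, cy=240.0):
    """Pinhole projection of a camera-frame 3D point (x, y, z) to pixel coordinates."""
    x, y, z = point
    return (focal * x / z + cx, focal * y / z + cy)


def inside(pixel, box):
    """True if pixel (u, v) lies within box (u_min, v_min, u_max, v_max)."""
    u, v = pixel
    return box[0] <= u <= box[2] and box[1] <= v <= box[3]


def resample(points, box, rng, hit=1.0, miss=0.01):
    """One filter update: weight points by the tracked box, then resample."""
    weights = [hit if inside(project(p), box) else miss for p in points]
    return rng.choices(points, weights=weights, k=len(points))


# Candidate points spread along x at 10 m depth; the box covers the image centre.
candidates = [(0.5 * i, 0.0, 10.0) for i in range(-10, 11)]
survivors = resample(candidates, (300, 220, 340, 260), random.Random(0))
```

Repeating the update with boxes from several camera poses concentrates the point set on the volume that projects into every tracked box.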

Robotics During a Pandemic: The 2020 NSF CPS Virtual Challenge -- SoilScope, Mars Edition

Mar 15, 2021

Abstract:Remote sample recovery is a rapidly evolving application of Small Unmanned Aircraft Systems (sUAS) for planetary sciences and space exploration. Development of cyber-physical systems (CPS) for autonomous deployment and recovery of sensor probes for sample caching is already in progress with NASA's Mars 2020 mission. To challenge student teams to develop autonomy for sample recovery settings, the 2020 NSF CPS Challenge was positioned around the launch of the Mars 2020 rover and sUAS duo. This paper discusses perception and trajectory planning for sample recovery by sUAS in a simulation environment. Five teams participated, and the results of the top two teams are discussed. The OpenUAV cloud simulation framework deployed on the Cyber-Physical Systems Virtual Organization (CPS-VO) allowed the teams to work remotely over a month during the COVID-19 pandemic to develop and simulate autonomous exploration algorithms. Remote simulation enabled teams across the globe to collaborate in experiments. The two teams approached the task of probe search, probe recovery, and landing on a moving target differently. This paper is a summary of the teams' insights and lessons learned, as they chose from a wide range of perception sensors and algorithms.

Localization and Mapping of Sparse Geologic Features with Unpiloted Aircraft Systems

Jul 02, 2020

Abstract:Robotic mapping is attractive in many science applications that involve environmental surveys. This paper presents a system for localization and mapping of sparsely distributed surface features such as precariously balanced rocks (PBRs), whose geometric fragility (stability) parameters provide valuable information on earthquake processes. With geomorphology as the test domain, we carry out a lawnmower search pattern using an Unpiloted Aerial Vehicle (UAV) equipped with a GPS module, stereo camera, and onboard computers. Once a target is detected by a deep neural network, we track its bounding box in the image coordinates by applying a Kalman filter that fuses the deep learning detection with KLT tracking. The target is localized in world coordinates using depth filtering where a set of 3D points are filtered by object bounding boxes from different camera perspectives. The 3D points also provide a strong prior on target shape, which is used for UAV path planning to accurately map the target using RGBD SLAM. After target mapping, the UAS resumes the lawnmower search pattern to locate the next target. Our end goal is a real-time mapping methodology for sparsely distributed surface features on earth or on extraterrestrial surfaces.

The OpenUAV Swarm Simulation Testbed: a Collaborative Design Studio for Field Robotics

Oct 02, 2019

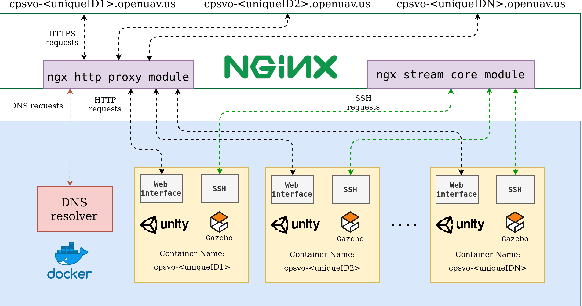

Abstract:In this paper, we showcase a multi-robot design studio where simulation containers are browser-accessible Lubuntu desktops. Our simulation testbed, based on ROS, Gazebo, and the PX4 flight stack, has been developed to tackle challenging higher-level tasks such as mission planning, vision-based problems, collision avoidance, and multi-robot coordination for Unpiloted Aircraft Systems (UAS). The novel architecture is built around TurboVNC and noVNC WebSockets technology to provide real-time web performance for 3D rendering in a collaborative design tool. We have built upon our previous work that leveraged concurrent multi-UAS simulations, and extended it to be useful for underwater, airship, and ground vehicles. This opens up the possibility of both rigorous Monte Carlo-style software testing of heterogeneous swarm simulations and sampling-based optimization of mission parameters. The new OpenUAV architecture has native support for ROS, PX4, and QGroundControl. Two case studies in the paper illustrate the development of UAS missions in the latest OpenUAV setup. The first highlights the development of a visual-servoing technique for UAS descent on a target. The second, referred to as terrain relative navigation (TRN), involves creating a reactive planner for UAS navigation that keeps a constant distance from the terrain.
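A reactive planner that holds a constant distance from the terrain can be pictured as a clamped proportional controller on the measured clearance. The sketch below is a hypothetical illustration rather than the OpenUAV case-study code: the gain, the rate limit, and the names `climb_rate_command` and `simulate` are assumptions.

```python
def climb_rate_command(measured_height, target_height, gain=0.8, max_rate=2.0):
    """Proportional vertical-velocity command (m/s) to hold height above terrain."""
    error = target_height - measured_height
    # Clamp the command to an assumed vehicle climb and descent limit.
    return max(-max_rate, min(max_rate, gain * error))


def simulate(terrain, target_height, start_altitude, dt=0.1):
    """Fly over a terrain profile and record the clearance at each step."""
    altitude = start_altitude
    clearances = []
    for ground in terrain:
        clearance = altitude - ground  # stand-in for a rangefinder reading
        clearances.append(clearance)
        altitude += climb_rate_command(clearance, target_height) * dt
    return clearances


# Over flat ground, the vehicle descends from 10 m and settles at 5 m clearance.
history = simulate([0.0] * 300, target_height=5.0, start_altitude=10.0)
```

Because the command depends only on the current clearance, the vehicle reacts to terrain changes without needing a map.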

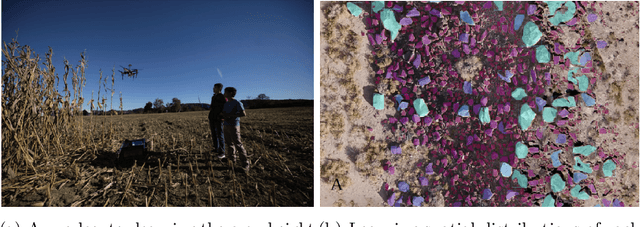

Geomorphological Analysis Using Unpiloted Aircraft Systems, Structure from Motion, and Deep Learning

Sep 27, 2019

Abstract:We present a pipeline for geomorphological analysis that uses structure from motion (SfM) and deep learning on close-range aerial imagery to estimate spatial distributions of rock traits (diameter and orientation), along a tectonic fault scarp. Unpiloted aircraft systems (UAS) have enabled acquisition of high-resolution imagery at close range, revolutionizing domains such as infrastructure inspection, precision agriculture, and disaster response. Our pipeline leverages UAS-based imagery to help scientists gain a better understanding of surface processes. Our pipeline uses SfM on aerial imagery to produce a georeferenced orthomosaic with 2 cm/pixel resolution. A human expert annotates rocks on a set of image tiles sampled from the orthomosaic, and these annotations are used to train a deep neural network to detect and segment individual rocks in the whole site. Our pipeline, in effect, automatically extracts semantic information (rock boundaries) on large volumes of unlabeled high-resolution aerial imagery, and subsequent structural analysis and shape descriptors result in estimates of rock diameter and orientation. We present results of our analysis on imagery collected along a fault scarp in the Volcanic Tablelands in eastern California. Although presented in the context of geology, our pipeline can be extended to a variety of morphological analysis tasks in other domains.

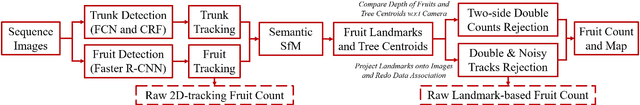

Monocular Camera Based Fruit Counting and Mapping with Semantic Data Association

Mar 18, 2019

Abstract:We present a cheap, lightweight, and fast fruit counting pipeline that uses a single monocular camera. On a mango dataset, our pipeline achieves counting performance comparable to a state-of-the-art fruit counting system that uses an expensive sensor suite including LiDAR and GPS/INS. Our monocular camera pipeline begins with a fruit detection component that uses a deep neural network. It then uses semantic structure from motion (SfM) to convert these detections into fruit counts by estimating landmark locations of the fruit in 3D, and using these landmarks to identify double counting scenarios. There are many benefits to developing a low-cost and lightweight fruit counting system, including applicability to agriculture in developing countries, where monetary constraints or unstructured environments necessitate cheaper hardware solutions.

Robust Fruit Counting: Combining Deep Learning, Tracking, and Structure from Motion

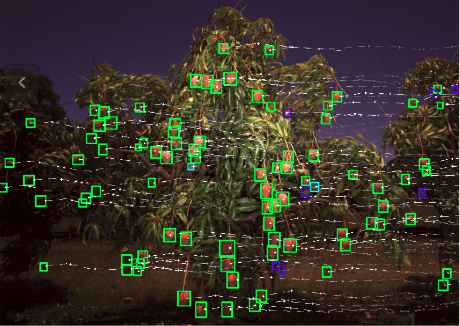

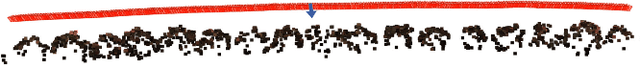

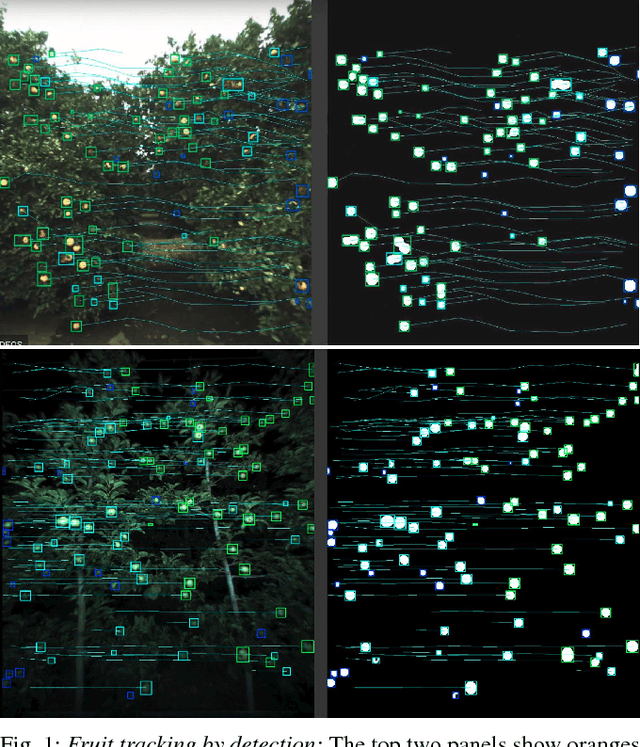

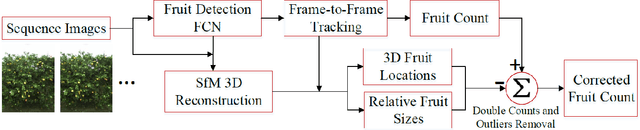

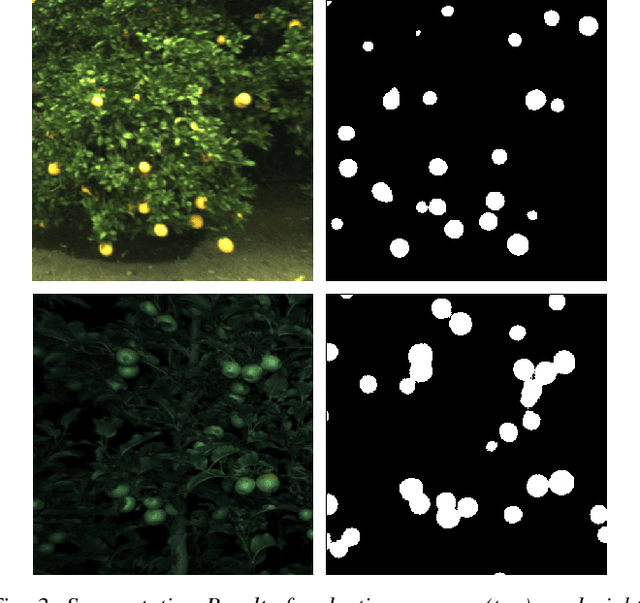

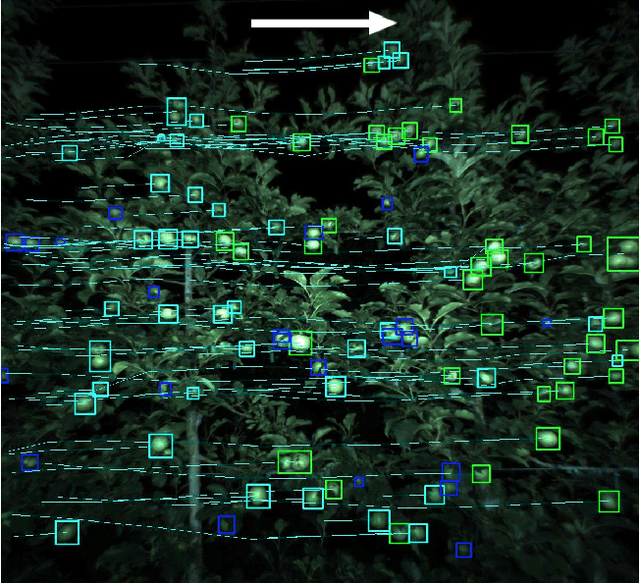

Aug 02, 2018

Abstract:We present a novel fruit counting pipeline that combines deep segmentation, frame to frame tracking, and 3D localization to accurately count visible fruits across a sequence of images. Our pipeline works on image streams from a monocular camera, both in natural light, as well as with controlled illumination at night. We first train a Fully Convolutional Network (FCN) and segment video frame images into fruit and non-fruit pixels. We then track fruits across frames using the Hungarian Algorithm where the objective cost is determined from a Kalman Filter corrected Kanade-Lucas-Tomasi (KLT) Tracker. In order to correct the estimated count from tracking process, we combine tracking results with a Structure from Motion (SfM) algorithm to calculate relative 3D locations and size estimates to reject outliers and double counted fruit tracks. We evaluate our algorithm by comparing with ground-truth human-annotated visual counts. Our results demonstrate that our pipeline is able to accurately and reliably count fruits across image sequences, and the correction step can significantly improve the counting accuracy and robustness. Although discussed in the context of fruit counting, our work can extend to detection, tracking, and counting of a variety of other stationary features of interest such as leaf-spots, wilt, and blossom.
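Frame-to-frame tracking of this kind reduces to an assignment problem: pair each predicted track position with one detection so that the total cost is minimal. The sketch below is illustrative and makes several assumptions: it uses brute-force search over permutations instead of the Hungarian algorithm (both return an optimal pairing, but brute force only suits small inputs), Euclidean pixel distance stands in for the paper's tracker-derived cost, and `match_tracks` is a hypothetical name.

```python
from itertools import permutations


def match_tracks(predicted, detected):
    """Return the minimum-cost pairing of predicted positions to detections.

    predicted, detected: lists of (u, v) pixel positions.
    Assumes len(predicted) <= len(detected). Brute force, for small inputs only.
    """
    def cost(p, d):
        return ((p[0] - d[0]) ** 2 + (p[1] - d[1]) ** 2) ** 0.5

    best_cost, best_pairs = float("inf"), []
    for perm in permutations(range(len(detected)), len(predicted)):
        total = sum(cost(predicted[i], detected[j]) for i, j in enumerate(perm))
        if total < best_cost:
            best_cost, best_pairs = total, list(enumerate(perm))
    return best_pairs


# Two tracks and three detections; the far-away detection stays unmatched.
pairs = match_tracks([(10, 10), (50, 50)], [(52, 49), (11, 9), (200, 200)])
```

Each pair is `(track index, detection index)`; detections left unmatched would typically start new tracks. For realistic numbers of fruit, a polynomial-time solver such as SciPy's `linear_sum_assignment` would replace the permutation search.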
