Jason Chrisos

Quantitative Depth Quality Assessment of RGBD Cameras At Close Range Using 3D Printed Fixtures

Mar 21, 2019

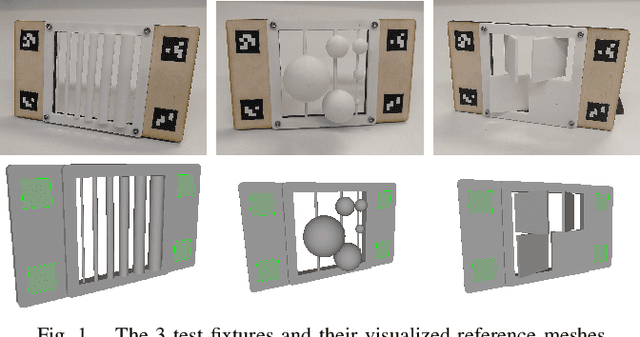

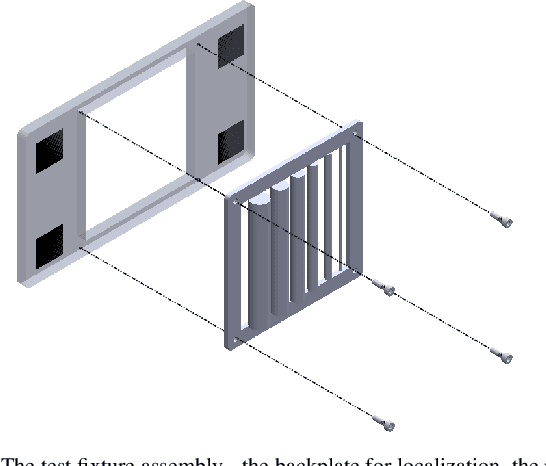

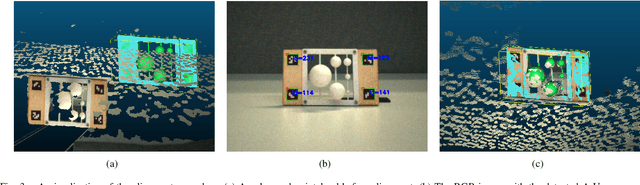

Abstract: Mobile robots that manipulate their environments require high-accuracy scene understanding at close range. This understanding is typically achieved with RGBD cameras, but the process for selecting an appropriate RGBD camera for a given application is minimally quantitative. The limited metrics published by manufacturers do not translate to observed quality in real-world cluttered environments, since quality is application-specific. To bridge the gap, we present a method for quantitatively measuring depth quality using a set of extendable 3D printed fixtures that approximate real-world conditions. By framing depth quality as point cloud density and root mean square error (RMSE) from a known geometry, we make the method extendable by other system integrators for custom environments. We show a comparison of three cameras, present a case study for camera selection, provide reference meshes and analysis code, and discuss further extensions.
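The two quality measures named in the abstract, point cloud density and RMSE from a known geometry, can be illustrated with a minimal sketch. This is not the authors' analysis code: it assumes the reference geometry is a single plane rather than the paper's reference meshes, and the function names `plane_rmse` and `point_density`, the units (metres), and the sample points are all hypothetical.

```python
import math


def plane_rmse(points, normal, offset):
    """RMSE of point-to-plane distances for the plane normal . x = offset.

    Assumption: the known geometry is a plane; a real fixture would
    need point-to-mesh distances instead.
    """
    norm = math.sqrt(sum(c * c for c in normal))
    if norm == 0.0:
        raise ValueError("plane normal must be non-zero")
    if not points:
        raise ValueError("point cloud is empty")
    squared_sum = 0.0
    for p in points:
        # Signed distance from the point to the plane.
        dist = (sum(n * c for n, c in zip(normal, p)) - offset) / norm
        squared_sum += dist * dist
    return math.sqrt(squared_sum / len(points))


def point_density(points, area):
    """Number of returned depth points per unit area of the target surface."""
    if area <= 0.0:
        raise ValueError("area must be positive")
    return len(points) / area


# Hypothetical measurements of a flat target 0.5 m from the camera,
# covering a 1 cm x 1 cm patch, each point off by 2 mm in depth.
cloud = [
    (0.00, 0.00, 0.502),
    (0.01, 0.00, 0.498),
    (0.00, 0.01, 0.502),
    (0.01, 0.01, 0.498),
]
rmse = plane_rmse(cloud, normal=(0.0, 0.0, 1.0), offset=0.5)
density = point_density(cloud, area=0.01 * 0.01)
print(f"RMSE: {rmse:.4f} m, density: {density:.0f} points/m^2")
```

Under these assumptions, a lower RMSE indicates depth values closer to the known surface, and a higher density indicates fewer missing depth returns over the same target area.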
