Song Zhang

TS-SatMVSNet: Slope Aware Height Estimation for Large-Scale Earth Terrain Multi-view Stereo

Jan 02, 2025

Abstract:3D terrain reconstruction from remote sensing imagery enables cost-effective, large-scale earth observation and is crucial for safeguarding against natural disasters, monitoring ecological changes, and preserving the environment. Recently, learning-based multi-view stereo (MVS) methods have shown promise in this task. However, these methods simply adapt the general learning-based MVS framework to height estimation, which overlooks terrain characteristics and results in insufficient accuracy. Considering that the Earth's surface generally undulates without drastic changes and that this undulation can be measured by slope, integrating slope into MVS frameworks could improve the accuracy of terrain reconstruction. To this end, we propose an end-to-end slope-aware height estimation network named TS-SatMVSNet for large-scale remote sensing terrain reconstruction. To obtain the slope representation, drawing on the mathematical concept of the gradient, we propose a height-based slope calculation strategy that first computes a slope map from a height map to measure terrain undulation. To fully integrate slope information into the MVS pipeline, we design two slope-guided modules that enhance reconstruction at the micro and macro levels. Specifically, at the micro level, we design a slope-guided interval partition module for refined height estimation using slope values. At the macro level, we propose a height correction module that uses a learnable Gaussian smoothing operator to amend inaccurate height values. Additionally, to improve height estimation, we propose a slope direction loss that implicitly optimizes the height estimation results. Extensive experiments on the WHU-TLC dataset and MVS3D dataset show that our proposed method achieves state-of-the-art performance and demonstrates competitive generalization ability.
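The height-based slope calculation is described only at a high level in the abstract. As a minimal sketch of the general idea, assuming a regular grid and finite-difference gradients (the function name `slope_map` and the `cell_size` parameter are illustrative, not taken from TS-SatMVSNet), a slope map can be derived from a height map like this:

```python
import numpy as np

def slope_map(height, cell_size=1.0):
    """Return slope magnitude (degrees) and slope direction (radians).

    Assumption: `height` is a 2D array sampled on a regular grid whose
    cells are `cell_size` units apart. This is a generic finite-difference
    sketch, not the paper's exact formulation.
    """
    # np.gradient returns the derivative along axis 0 (rows, y) first,
    # then along axis 1 (columns, x).
    dz_dy, dz_dx = np.gradient(height.astype(np.float64), cell_size)
    # Slope magnitude is the angle whose tangent is the gradient norm.
    magnitude = np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
    # Slope direction is the orientation of the gradient vector.
    direction = np.arctan2(dz_dy, dz_dx)
    return magnitude, direction

# A plane that rises one height unit per cell along x.
height = np.tile(np.arange(5, dtype=np.float64), (4, 1))
magnitude, direction = slope_map(height)
```

For this tilted plane the slope magnitude is 45 degrees everywhere and the direction points along the positive x axis. A slope direction loss could then compare `direction` computed from predicted and ground-truth heights, although the abstract does not specify how the paper defines it.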

EGSRAL: An Enhanced 3D Gaussian Splatting based Renderer with Automated Labeling for Large-Scale Driving Scene

Dec 20, 2024

Abstract:3D Gaussian Splatting (3D GS) has gained popularity due to its faster rendering speed and high-quality novel view synthesis. Some researchers have explored using 3D GS for reconstructing driving scenes. However, these methods often rely on various data types, such as depth maps, 3D boxes, and trajectories of moving objects. Additionally, the lack of annotations for synthesized images limits their direct application in downstream tasks. To address these issues, we propose EGSRAL, a 3D GS-based method that relies solely on training images without extra annotations. EGSRAL enhances 3D GS's capability to model both dynamic objects and static backgrounds and introduces a novel adaptor for auto labeling, generating corresponding annotations based on existing annotations. We also propose a grouping strategy for vanilla 3D GS to address perspective issues in rendering large-scale, complex scenes. Our method achieves state-of-the-art performance on multiple datasets without any extra annotation. For example, the PSNR metric reaches 29.04 on the nuScenes dataset. Moreover, our automated labeling can significantly improve the performance of 2D/3D detection tasks. Code is available at https://github.com/jiangxb98/EGSRAL.

PDCFNet: Enhancing Underwater Images through Pixel Difference Convolution

Sep 28, 2024

Abstract:The majority of deep learning methods use vanilla convolution for enhancing underwater images. While vanilla convolution excels at capturing local features and learning the spatial hierarchical structure of images, it tends to smooth input images, which can limit feature expression and modeling. A prominent characteristic of degraded underwater images is blur, and the goal of enhancement is to make the textures and details (high-frequency features) in the images more visible. Therefore, we believe that leveraging high-frequency features can improve enhancement performance. To this end, we introduce Pixel Difference Convolution (PDC), which focuses on gradient information where the image changes significantly, thereby improving the modeling of enhanced images. We propose an underwater image enhancement network, PDCFNet, based on PDC and cross-level feature fusion. Specifically, we design a detail enhancement module based on PDC that employs parallel PDCs to capture high-frequency features, leading to better detail and texture enhancement. The designed cross-level feature fusion module performs operations such as concatenation and multiplication on features from different levels, ensuring sufficient interaction and enhancement between diverse features. Our proposed PDCFNet achieves a PSNR of 27.37 and an SSIM of 92.02 on the UIEB dataset, attaining the best performance to date. Our code is available at https://github.com/zhangsong1213/PDCFNet.
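The abstract does not say which PDC variants PDCFNet runs in parallel. The sketch below assumes the central form, in which each neighbor is replaced by its difference from the patch's center pixel before weighting. The name `central_pdc` and the all-ones kernel are illustrative choices, not taken from the PDCFNet code.

```python
import numpy as np

def central_pdc(image, kernel):
    """Central pixel difference convolution on a single-channel image.

    Assumption: 'valid' padding and an odd-sized kernel. Each output is
    sum(kernel * (patch - center)), so flat regions produce zero and
    only intensity changes (high-frequency content) survive.
    """
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = image[i:i + kh, j:j + kw]
            center = patch[kh // 2, kw // 2]
            out[i, j] = np.sum(kernel * (patch - center))
    return out

# A vertical step edge: columns 0-2 are dark, columns 3-4 are bright.
image = np.zeros((5, 5))
image[:, 3:] = 1.0
kernel = np.ones((3, 3))
response = central_pdc(image, kernel)
flat_response = central_pdc(np.ones((5, 5)), kernel)
```

Here `flat_response` is all zeros, while `response` is non-zero only for the windows that straddle the edge. A vanilla convolution with the same kernel would instead average the neighborhood, which illustrates the smoothing behavior that the abstract contrasts PDC with.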

Respiratory Subtraction for Pulmonary Microwave Ablation Evaluation

Aug 08, 2024

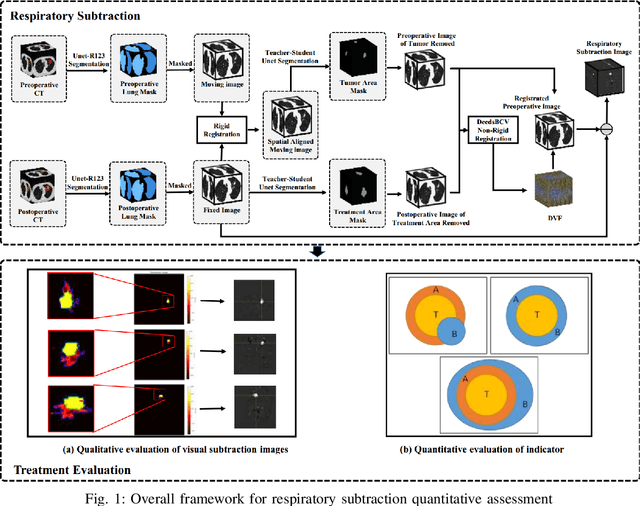

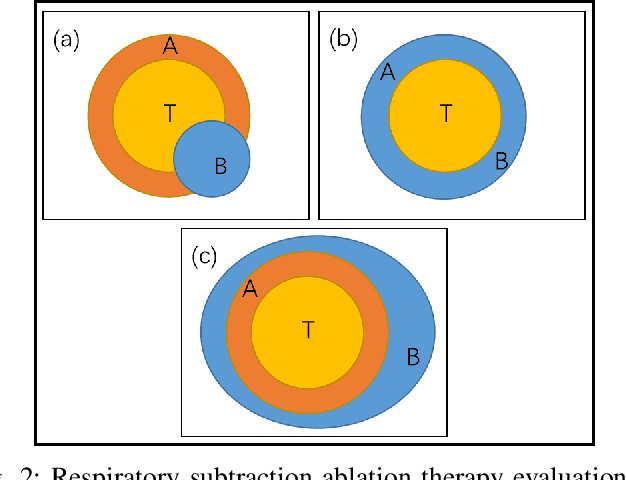

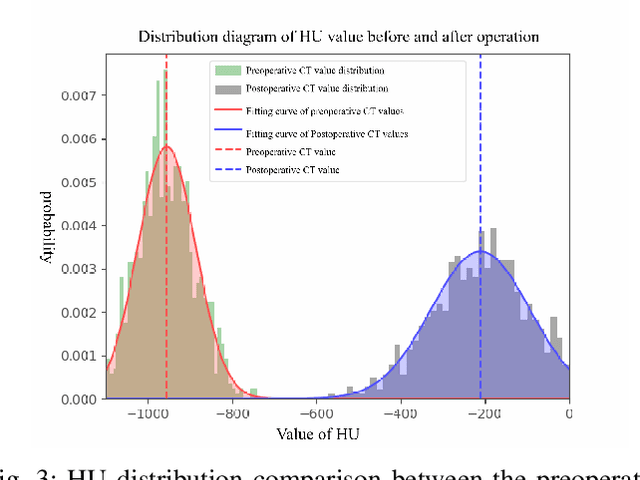

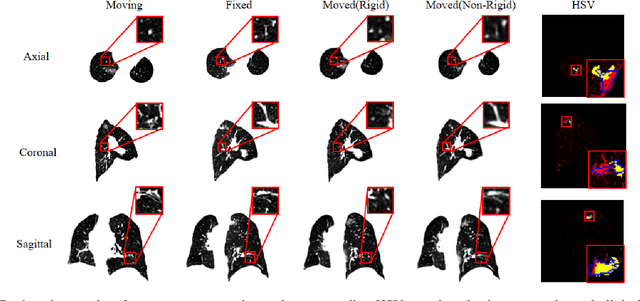

Abstract:Currently, lung cancer is a leading cause of global cancer mortality, often necessitating minimally invasive interventions. Microwave ablation (MWA) is extensively utilized for both primary and secondary lung tumors. Although numerous clinical guidelines and standards for MWA have been established, the clinical evaluation of ablation surgery remains challenging and requires long-term patient follow-up for confirmation. In this paper, we propose a method termed respiratory subtraction to evaluate lung tumor ablation therapy performance based on pre- and post-operative image guidance. Initially, preoperative images undergo coarse rigid registration to their corresponding postoperative positions, followed by further non-rigid registration. Subsequently, subtraction images are generated by subtracting the registered preoperative images from the postoperative ones. Furthermore, to enhance the clinical assessment of MWA treatment performance, we devise a quantitative analysis metric to evaluate ablation efficacy by comparing differences between tumor areas and treatment areas. To the best of our knowledge, this is the pioneering work in the field to facilitate the assessment of MWA surgery performance on pulmonary tumors. Extensive experiments involving 35 clinical cases further validate the efficacy of the respiratory subtraction method. The experimental results confirm the effectiveness of the respiratory subtraction method and the proposed quantitative evaluation metric in assessing lung tumor treatment.

Mamba-UIE: Enhancing Underwater Images with Physical Model Constraint

Jul 27, 2024

Abstract:In underwater image enhancement (UIE), convolutional neural networks (CNN) have inherent limitations in modeling long-range dependencies and are less effective in recovering global features. While Transformers excel at modeling long-range dependencies, their quadratic computational complexity with increasing image resolution presents significant efficiency challenges. Additionally, most supervised learning methods lack effective physical model constraint, which can lead to insufficient realism and overfitting in generated images. To address these issues, we propose a physical model constraint-based underwater image enhancement framework, Mamba-UIE. Specifically, we decompose the input image into four components: underwater scene radiance, direct transmission map, backscatter transmission map, and global background light. These components are reassembled according to the revised underwater image formation model, and the reconstruction consistency constraint is applied between the reconstructed image and the original image, thereby achieving effective physical constraint on the underwater image enhancement process. To tackle the quadratic computational complexity of Transformers when handling long sequences, we introduce the Mamba-UIE network based on linear complexity state space models (SSM). By incorporating the Mamba in Convolution block, long-range dependencies are modeled at both the channel and spatial levels, while the CNN backbone is retained to recover local features and details. Extensive experiments on three public datasets demonstrate that our proposed Mamba-UIE outperforms existing state-of-the-art methods, achieving a PSNR of 27.13 and an SSIM of 0.93 on the UIEB dataset. Our method is available at https://github.com/zhangsong1213/Mamba-UIE.
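The reconstruction consistency constraint can be illustrated with a short sketch. It assumes the commonly cited form of the revised underwater image formation model, I = J · T_D + B · (1 − T_B), and an L1 penalty between the reassembled and original images. Both the exact equation and the loss type are assumptions here, since the abstract gives neither, and the function names are hypothetical.

```python
import numpy as np

def reassemble(radiance, t_direct, t_backscatter, background_light):
    """Recombine the four predicted components into an underwater image.

    Assumed model: I = J * T_D + B * (1 - T_B), applied per pixel and
    per color channel.
    """
    return radiance * t_direct + background_light * (1.0 - t_backscatter)

def reconstruction_loss(original, reconstructed):
    """Mean absolute error between the input and its reconstruction."""
    return float(np.mean(np.abs(original - reconstructed)))

rng = np.random.default_rng(0)
radiance = rng.uniform(0.0, 1.0, size=(4, 4, 3))       # scene radiance J
t_direct = rng.uniform(0.2, 1.0, size=(4, 4, 3))       # direct transmission T_D
t_backscatter = rng.uniform(0.2, 1.0, size=(4, 4, 3))  # backscatter transmission T_B
background_light = np.array([0.1, 0.5, 0.6])           # global background light B

observed = reassemble(radiance, t_direct, t_backscatter, background_light)
loss_consistent = reconstruction_loss(observed, observed)
loss_perturbed = reconstruction_loss(
    observed, reassemble(radiance, t_direct * 0.5, t_backscatter, background_light)
)
```

When the predicted components are consistent with the observed image the loss is zero, and it grows as any component drifts away from the assumed formation model. That is how such a constraint ties the network's outputs to the physics.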

ALPS: An Auto-Labeling and Pre-training Scheme for Remote Sensing Segmentation With Segment Anything Model

Jun 16, 2024

Abstract:In the fast-growing field of Remote Sensing (RS) image analysis, the gap between massive unlabeled datasets and the ability to fully utilize these datasets for advanced RS analytics presents a significant challenge. To fill the gap, our work introduces an innovative auto-labeling framework named ALPS (Automatic Labeling for Pre-training in Segmentation), leveraging the Segment Anything Model (SAM) to predict precise pseudo-labels for RS images without requiring prior annotations or additional prompts. The proposed pipeline significantly reduces the labor and resource demands traditionally associated with annotating RS datasets. By constructing two comprehensive pseudo-labeled RS datasets via ALPS for pre-training purposes, our approach enhances the performance of downstream tasks across various benchmarks, including iSAID and ISPRS Potsdam. Experiments demonstrate the effectiveness of our framework, showcasing its ability to generalize well across multiple tasks even when extensively annotated datasets are scarce, offering a scalable solution to automatic segmentation and annotation challenges in the field. In addition, the proposed pipeline is flexible and can be applied to medical image segmentation, remarkably boosting the performance. Note that ALPS utilizes pre-trained SAM to semi-automatically annotate RS images without additional manual annotations. Though every component in the pipeline has been well explored, integrating clustering algorithms with SAM and novel pseudo-label alignment significantly enhances RS segmentation, as an off-the-shelf tool for pre-training data preparation. Our source code is available at: https://github.com/StriveZs/ALPS.

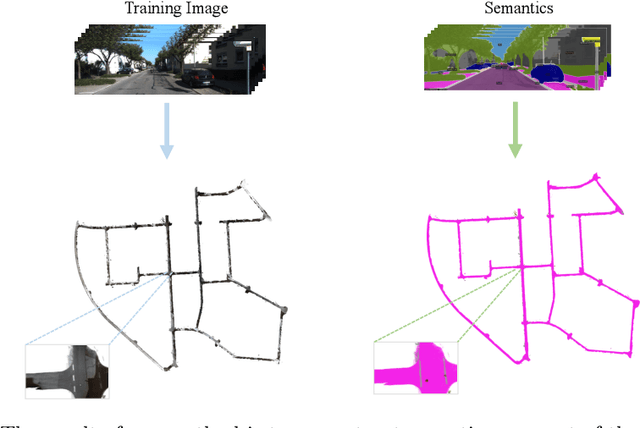

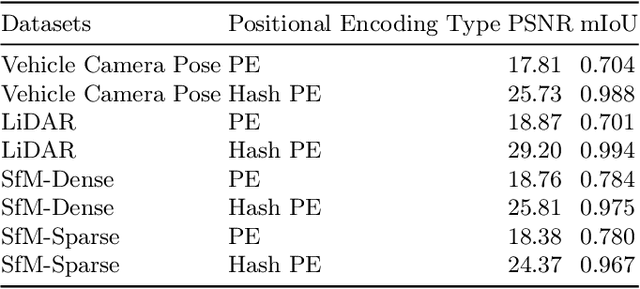

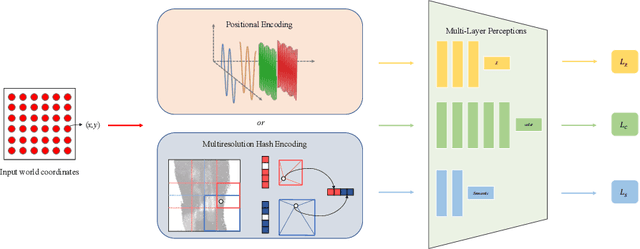

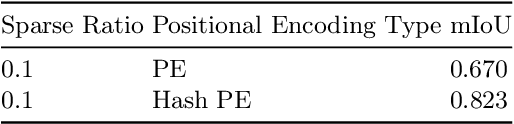

NeRO: Neural Road Surface Reconstruction

May 17, 2024

Abstract:In computer vision and graphics, the accurate reconstruction of road surfaces is pivotal for various applications, especially in autonomous driving. This paper introduces a novel method that uses a Multi-Layer Perceptron (MLP) framework to reconstruct the height, color, and semantic information of road surfaces from input world coordinates x and y. Our approach, NeRO, uses encoding techniques based on MLPs, significantly improving the reconstruction of complex details, speeding up training, and reducing neural network size. The effectiveness of this method is demonstrated through its superior performance, which indicates a promising direction for rendering road surfaces with semantics, particularly in applications demanding visualization of road conditions, 4D labeling, and semantic groupings.

Semantic Is Enough: Only Semantic Information For NeRF Reconstruction

Mar 24, 2024

Abstract:Recent research that combines implicit 3D representation with semantic information, like Semantic-NeRF, has shown that the NeRF model can perform excellently in rendering 3D structures with semantic labels. This research aims to extend the Semantic Neural Radiance Fields (Semantic-NeRF) model by focusing solely on semantic output and removing the RGB output component. We reformulate the model and its training procedure to leverage only the cross-entropy loss between the model's semantic output and the ground truth semantic images, removing the colour data used in the original Semantic-NeRF approach. We then conduct a series of identical experiments using the original and the modified Semantic-NeRF model. Our primary objective is to observe the impact of this modification on the performance of Semantic-NeRF, focusing on tasks such as scene understanding, object detection, and segmentation. The results offer valuable insights into the new way of rendering the scenes and provide an avenue for further research and development in semantic-focused 3D scene understanding.
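Training on semantic output alone means the objective reduces to a cross-entropy term over rendered class logits, with no photometric (RGB) term. A minimal sketch of that loss follows, assuming per-ray logits of shape `(num_rays, num_classes)` and integer ground-truth labels. The function name and shapes are illustrative, not from the paper's code.

```python
import numpy as np

def semantic_cross_entropy(logits, labels):
    """Mean cross-entropy between per-ray class logits and integer labels.

    Assumption: logits has shape (num_rays, num_classes) and labels has
    shape (num_rays,). No RGB loss is added, mirroring the
    semantic-only training objective.
    """
    # Subtract the row-wise maximum for a numerically stable log-softmax.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    picked = log_probs[np.arange(len(labels)), labels]
    return float(-picked.mean())

# Two rays, four classes, uniform predictions: the loss equals ln(4).
uniform_loss = semantic_cross_entropy(np.zeros((2, 4)), np.array([0, 3]))

# Confident, correct predictions drive the loss toward zero.
confident_logits = np.array([[10.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 10.0]])
confident_loss = semantic_cross_entropy(confident_logits, np.array([0, 3]))
```

In the original Semantic-NeRF setup this term would be combined with a colour reconstruction loss. The modification described above keeps only the semantic term.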

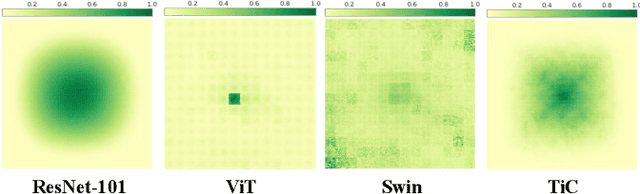

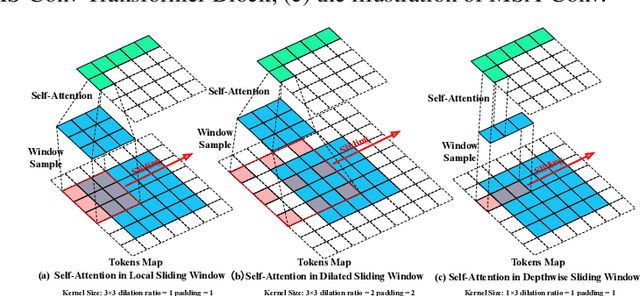

TiC: Exploring Vision Transformer in Convolution

Oct 06, 2023

Abstract:While models derived from Vision Transformers (ViTs) have been surging phenomenally, pre-trained models cannot seamlessly adapt to images of arbitrary resolution without altering the architecture and configuration, such as resampling the positional encoding, which limits their flexibility for various vision tasks. For instance, the Segment Anything Model (SAM) based on ViT-Huge requires all input images to be resized to 1024$\times$1024. To overcome this limitation, we propose the Multi-Head Self-Attention Convolution (MSA-Conv), which incorporates Self-Attention within generalized convolutions, including standard, dilated, and depthwise ones. Besides enabling transformers to handle images of varying sizes without retraining or rescaling, MSA-Conv reduces computational costs compared to the global attention in ViT, which grows costly as image size increases. We then present the Vision Transformer in Convolution (TiC) as a proof of concept for image classification with MSA-Conv, in which two capacity-enhancing strategies, namely the Multi-Directional Cyclic Shifted Mechanism and the Inter-Pooling Mechanism, establish long-distance connections between tokens and enlarge the effective receptive field. Extensive experiments have been carried out to validate the overall effectiveness of TiC. Additionally, ablation studies confirm the performance improvement made by MSA-Conv and the two capacity-enhancing strategies separately. Note that our proposal aims at studying an alternative to the global attention used in ViT, and MSA-Conv meets this goal by making TiC comparable to the state of the art on ImageNet-1K. Code will be released at https://github.com/zs670980918/MSA-Conv.

ARAI-MVSNet: A multi-view stereo depth estimation network with adaptive depth range and depth interval

Aug 17, 2023

Abstract:Multi-View Stereo (MVS) is a fundamental problem in geometric computer vision that aims to reconstruct a scene from multi-view images with known camera parameters. However, mainstream approaches represent the scene with a fixed all-pixel depth range and an equal depth interval partition, which results in inadequate utilization of depth planes and imprecise depth estimation. In this paper, we present a novel multi-stage coarse-to-fine framework to achieve an adaptive all-pixel depth range and depth interval. We predict a coarse depth map in the first stage. In the second stage, an Adaptive Depth Range Prediction module zooms in on the scene by leveraging the reference image and the depth map obtained in the first stage, and predicts a more accurate all-pixel depth range for the following stages. In the third and fourth stages, we propose an Adaptive Depth Interval Adjustment module to achieve an adaptive variable interval partition of the pixel-wise depth range. The depth interval distribution in this module is normalized by Z-score, which allocates dense depth hypothesis planes around the potential ground truth depth value and sparse planes elsewhere to achieve more accurate depth estimation. Extensive experiments on four widely used benchmark datasets (DTU, TnT, BlendedMVS, ETH 3D) demonstrate that our model achieves state-of-the-art performance and yields competitive generalization ability. In particular, our method achieves the highest Acc and Overall on the DTU dataset, while attaining the highest Recall and $F_{1}$-score on the Tanks and Temples intermediate and advanced datasets. Moreover, our method also achieves the lowest $e_{1}$ and $e_{3}$ on the BlendedMVS dataset and the highest Acc and $F_{1}$-score on the ETH 3D dataset, surpassing all listed methods. Project website: https://github.com/zs670980918/ARAI-MVSNet
