Ran Zhao

PDCFNet: Enhancing Underwater Images through Pixel Difference Convolution

Sep 28, 2024

Abstract:The majority of deep learning methods utilize vanilla convolution for enhancing underwater images. While vanilla convolution excels in capturing local features and learning the spatial hierarchical structure of images, it tends to smooth input images, which can limit feature expression and modeling. A prominent characteristic of underwater degraded images is blur, and the goal of enhancement is to make the textures and details (high-frequency features) in the images more visible. Therefore, we believe that leveraging high-frequency features can improve enhancement performance. To address this, we introduce Pixel Difference Convolution (PDC), which focuses on gradient information with significant changes in the image, thereby improving the modeling of enhanced images. We propose an underwater image enhancement network, PDCFNet, based on PDC and cross-level feature fusion. Specifically, we design a detail enhancement module based on PDC that employs parallel PDCs to capture high-frequency features, leading to better detail and texture enhancement. The designed cross-level feature fusion module performs operations such as concatenation and multiplication on features from different levels, ensuring sufficient interaction and enhancement between diverse features. Our proposed PDCFNet achieves a PSNR of 27.37 and an SSIM of 92.02 on the UIEB dataset, attaining the best performance to date. Our code is available at https://github.com/zhangsong1213/PDCFNet.
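
The contrast between vanilla convolution and pixel difference convolution can be illustrated with a minimal sketch. The abstract does not specify which PDC variants the network uses, so the code below assumes the common central form, in which each neighbor is replaced by its difference from the center pixel before weighting. The function names and the 3x3 kernel size are illustrative choices, not taken from the paper's code.

```python
def vanilla_conv3x3(image, kernel):
    """Valid 3x3 cross-correlation: sum of w_i * x_i over each window."""
    h, w = len(image), len(image[0])
    out = []
    for r in range(1, h - 1):
        row = []
        for c in range(1, w - 1):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    acc += kernel[dr + 1][dc + 1] * image[r + dr][c + dc]
            row.append(acc)
        out.append(row)
    return out


def central_pdc3x3(image, kernel):
    """Central pixel difference convolution (assumed variant):
    sum of w_i * (x_i - x_center) over each window."""
    h, w = len(image), len(image[0])
    out = []
    for r in range(1, h - 1):
        row = []
        for c in range(1, w - 1):
            center = image[r][c]
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    acc += kernel[dr + 1][dc + 1] * (image[r + dr][c + dc] - center)
            row.append(acc)
        out.append(row)
    return out


ones = [[1.0] * 3 for _ in range(3)]
flat = [[5.0] * 4 for _ in range(4)]           # constant region, no texture
edge = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]  # vertical step edge

flat_vanilla = vanilla_conv3x3(flat, ones)
flat_pdc = central_pdc3x3(flat, ones)
edge_pdc = central_pdc3x3(edge, ones)
```

On the constant region the difference form returns zero everywhere, while the vanilla form returns the scaled intensity; on the step edge the difference form responds only to the intensity change. This is the sense in which a difference-based operator emphasizes gradient (high-frequency) information.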

Mamba-UIE: Enhancing Underwater Images with Physical Model Constraint

Jul 27, 2024

Abstract:In underwater image enhancement (UIE), convolutional neural networks (CNN) have inherent limitations in modeling long-range dependencies and are less effective in recovering global features. While Transformers excel at modeling long-range dependencies, their quadratic computational complexity with increasing image resolution presents significant efficiency challenges. Additionally, most supervised learning methods lack effective physical model constraint, which can lead to insufficient realism and overfitting in generated images. To address these issues, we propose a physical model constraint-based underwater image enhancement framework, Mamba-UIE. Specifically, we decompose the input image into four components: underwater scene radiance, direct transmission map, backscatter transmission map, and global background light. These components are reassembled according to the revised underwater image formation model, and the reconstruction consistency constraint is applied between the reconstructed image and the original image, thereby achieving effective physical constraint on the underwater image enhancement process. To tackle the quadratic computational complexity of Transformers when handling long sequences, we introduce the Mamba-UIE network based on linear complexity state space models (SSM). By incorporating the Mamba in Convolution block, long-range dependencies are modeled at both the channel and spatial levels, while the CNN backbone is retained to recover local features and details. Extensive experiments on three public datasets demonstrate that our proposed Mamba-UIE outperforms existing state-of-the-art methods, achieving a PSNR of 27.13 and an SSIM of 0.93 on the UIEB dataset. Our method is available at https://github.com/zhangsong1213/Mamba-UIE.
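
The reconstruction consistency constraint described above can be sketched as follows. The abstract does not give the formula, so this sketch assumes the commonly cited revised formation model, I = J * T_D + B * (1 - T_B), where J is the scene radiance, T_D the direct transmission map, T_B the backscatter transmission map, and B the global background light. The L1 loss and all function names are assumptions for illustration; the paper may use a different distance.

```python
def reconstruct(radiance, direct_t, backscatter_t, background_light):
    """Reassemble a degraded image, pixel by pixel, under the assumed
    revised formation model: I = J * T_D + B * (1 - T_B)."""
    return [
        j * td + background_light * (1.0 - tb)
        for j, td, tb in zip(radiance, direct_t, backscatter_t)
    ]


def reconstruction_loss(reconstructed, original):
    """Mean absolute error between reconstruction and input (assumed L1 form)."""
    return sum(abs(r - o) for r, o in zip(reconstructed, original)) / len(original)


# Two pixels of a single channel, values in [0, 1] (toy numbers).
J = [0.8, 0.4]
T_D = [0.5, 0.25]
T_B = [0.5, 0.5]
B = 0.2

recon = reconstruct(J, T_D, T_B, B)
loss_same = reconstruction_loss(recon, recon)
loss_shifted = reconstruction_loss(recon, [v + 0.1 for v in recon])
```

In a training setup the four components would be network outputs, and this loss would penalize decompositions that cannot reproduce the original input.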

UR4NNV: Neural Network Verification, Under-approximation Reachability Works!

Jan 23, 2024

Abstract:Recently, formal verification of deep neural networks (DNNs) has garnered considerable attention, and over-approximation based methods have become popular due to their effectiveness and efficiency. However, these strategies face challenges in addressing the "unknown dilemma" concerning whether the exact output region or the introduced approximation error violates the property in question. To address this, this paper introduces the UR4NNV verification framework, which utilizes under-approximation reachability analysis for DNN verification for the first time. UR4NNV focuses on DNNs with Rectified Linear Unit (ReLU) activations and employs a binary tree branch-based under-approximation algorithm. In each epoch, UR4NNV under-approximates a sub-polytope of the reachable set and verifies this polytope against the given property. Through a trial-and-error approach, UR4NNV effectively falsifies DNN properties while providing confidence levels when it reaches the verification epoch bound without falsifying the property. Experimental comparisons with existing verification methods demonstrate the effectiveness and efficiency of UR4NNV, significantly reducing the impact of the "unknown dilemma".

Real-time automatic polyp detection in colonoscopy using feature enhancement module and spatiotemporal similarity correlation unit

Jan 25, 2022

Abstract:Automatic detection of polyps is challenging because different polyps vary greatly, while the differences between polyps and their analogues are small. The state-of-the-art methods are based on convolutional neural networks (CNNs). However, they may fail due to lack of training data, resulting in high rates of missed detection and false positives (FPs). To solve these problems, our method combines a two-dimensional (2-D) CNN-based real-time object detector network with spatiotemporal information. First, we use a 2-D detector network to detect static images and frames. Based on the detector network, we propose two feature enhancement modules: the FP Relearning Module (FPRM), which makes the detector network learn more about the features of FPs for higher precision, and the Image Style Transfer Module (ISTM), which enhances the features of polyps to improve sensitivity. In video detection, we integrate spatiotemporal information, using Structural Similarity (SSIM) to measure the similarity between video frames. Finally, we propose the Inter-frame Similarity Correlation Unit (ISCU) to combine the results obtained by the detector network and frame similarity to make the final decision. We verify our method on both private databases and publicly available databases. Experimental results show that these modules and units provide a performance improvement compared with the baseline method. Comparison with the state-of-the-art methods shows that the proposed method outperforms the existing ones that can meet real-time constraints. Our method improves sensitivity, precision and specificity, and has great potential to be applied in clinical colonoscopy.
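
As a rough illustration of the frame-similarity measurement mentioned above, the sketch below computes SSIM once over a whole frame given as a flat list of grayscale values. Standard SSIM implementations average the index over local windows; the single-window form, the 8-bit data range, and the function name are simplifying assumptions. How the ISCU combines this similarity with detector outputs is not described in the abstract and is not modeled here.

```python
def global_ssim(frame_a, frame_b, data_range=255.0):
    """Single-window SSIM between two equally sized grayscale frames
    (flat lists of pixel values). Uses the usual constants
    C1 = (0.01 * L)^2 and C2 = (0.03 * L)^2."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    n = len(frame_a)
    mu_a = sum(frame_a) / n
    mu_b = sum(frame_b) / n
    var_a = sum((a - mu_a) ** 2 for a in frame_a) / n
    var_b = sum((b - mu_b) ** 2 for b in frame_b) / n
    cov = sum((a - mu_a) * (b - mu_b) for a, b in zip(frame_a, frame_b)) / n
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return numerator / denominator


previous_frame = [10.0, 20.0, 30.0, 40.0]
same_frame = [10.0, 20.0, 30.0, 40.0]
changed_frame = [40.0, 30.0, 20.0, 10.0]

ssim_same = global_ssim(previous_frame, same_frame)
ssim_changed = global_ssim(previous_frame, changed_frame)
```

Identical frames score 1, and the score drops as structure diverges, which is what makes the index usable as a cue for whether consecutive frames show the same scene.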

* This paper has been accepted by Biomedical Signal Processing and Control. Please cite the paper as Xu, J., Zhao, R., Yu, Y., Zhang, Q., Bian, X., Wang, J., Ge, Z., Qian, D., 2021. Real-time automatic polyp detection in colonoscopy using feature enhancement module and spatiotemporal similarity correlation unit. Biomedical Signal Processing and Control 66, 102503

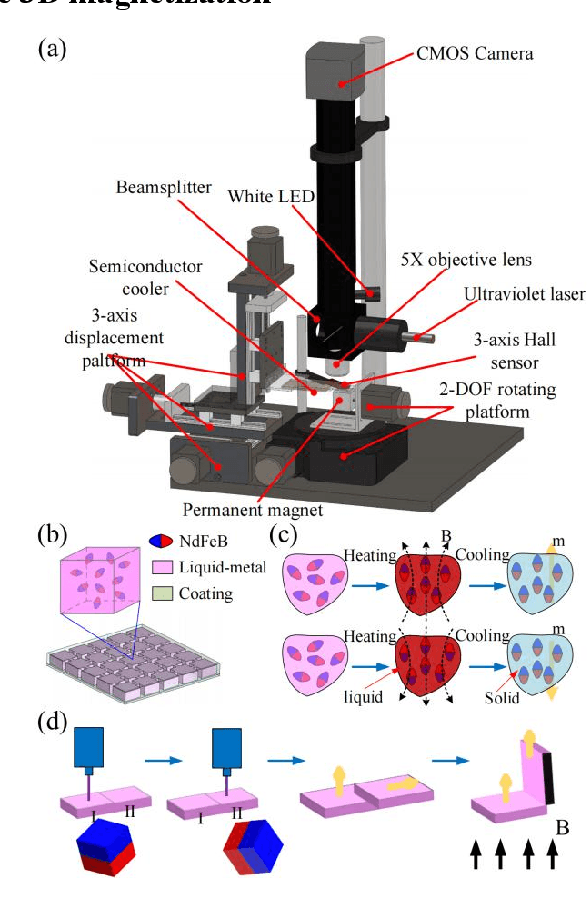

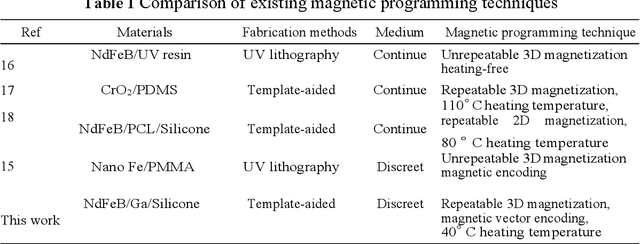

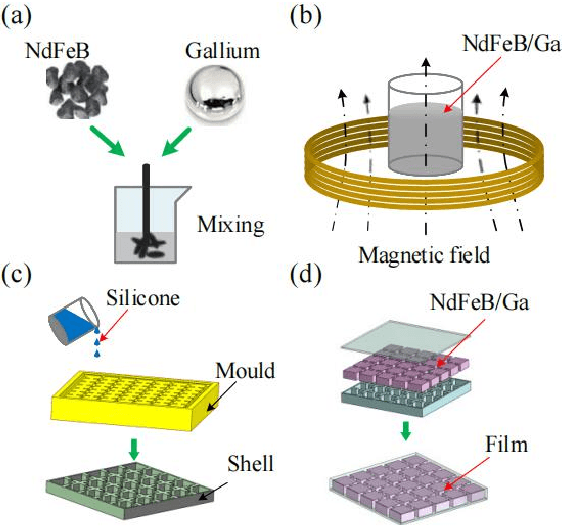

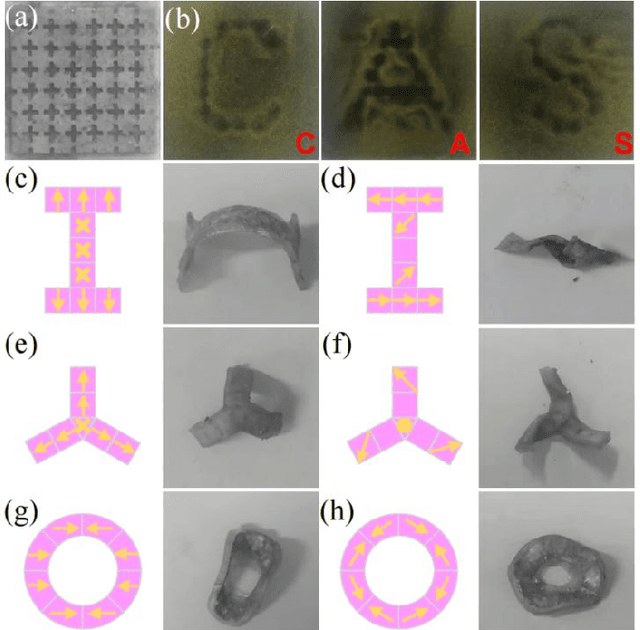

Shape Programmable Magnetic Pixel Soft Robot

Nov 05, 2021

Abstract:Magnetic-response soft robots achieve programmable shape regulation with the help of a magnetic field and produce various actions. The shape control of a magnetic soft robot is based on the magnetic anisotropy caused by the orderly distribution of magnetic particles in the elastic matrix. In previous technologies, magnetic programming is coupled with the manufacturing process, and the orientation of magnetic particles cannot be modified, which restricts the design and use of magnetic soft robots. This paper presents a magnetic pixel robot with a shape-programmable function. By encapsulating NdFeB/gallium composites in a silicone shell, a thermo-magnetic response functional film with a lattice structure is fabricated. Based on a thermal-assisted magnetization technique, we realize a discrete distribution of magnetization regions on the film. We then propose a magnetic coding technique to realize the mathematical response action design of the soft robot. Using these methods, we prepared several magnetic soft robots based on origami structures. The experiments show that the behavior mode of the robot can be flexibly and repeatedly regulated by the magnetic encoding technique. This work provides a basis for the programmed shape regulation and motion design of soft robots.

Some Developments in Clustering Analysis on Stochastic Processes

Aug 05, 2019

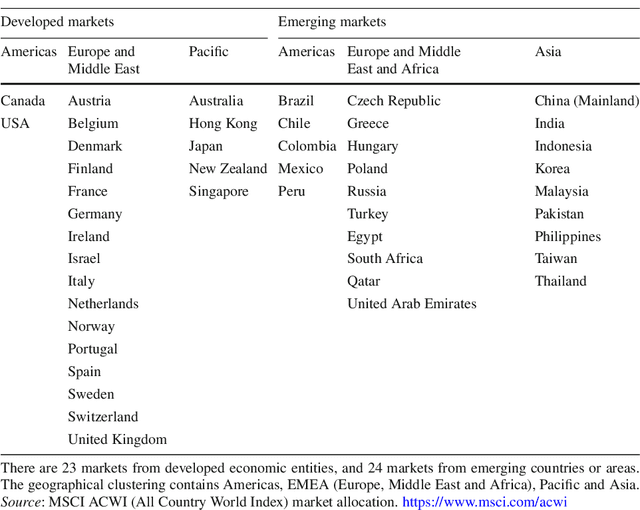

Abstract:We review some developments on clustering stochastic processes and come to the conclusion that asymptotically consistent clustering algorithms can be obtained when the processes are ergodic and the dissimilarity measure satisfies the triangle inequality. Examples are provided when the processes are distribution ergodic, covariance ergodic and locally asymptotically self-similar, respectively.

Clustering Analysis on Locally Asymptotically Self-similar Processes with Known Number of Clusters

Nov 04, 2018

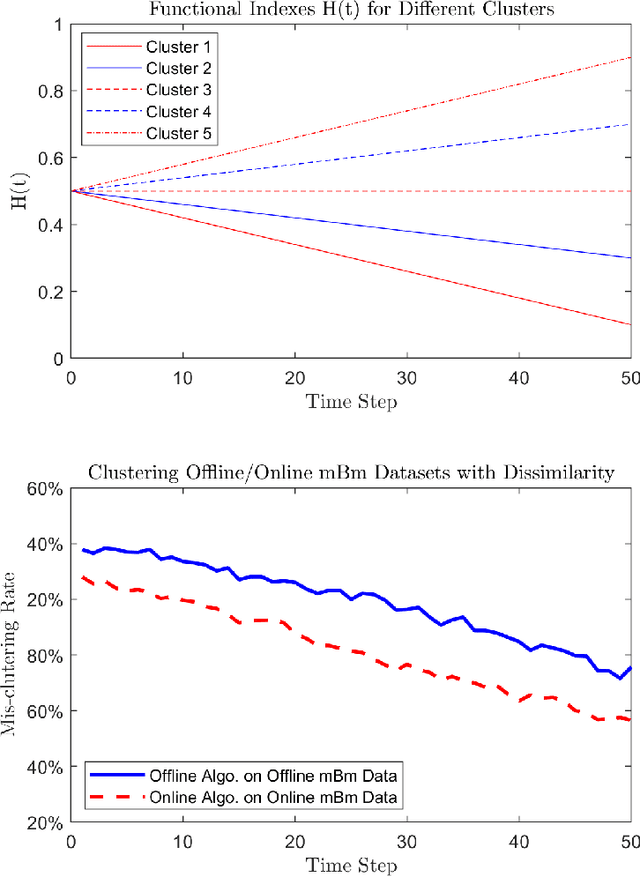

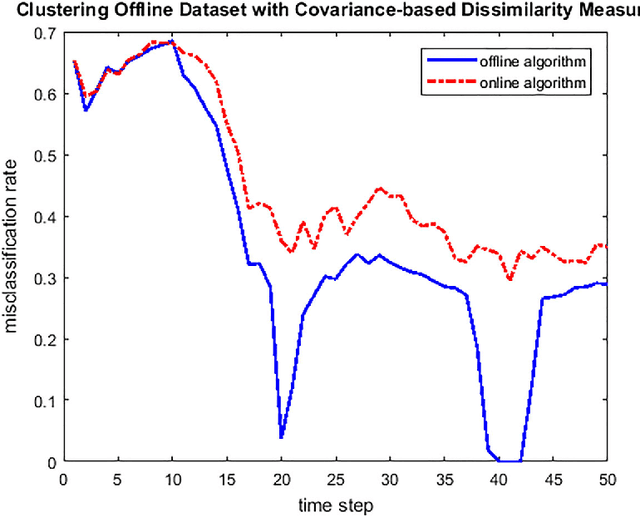

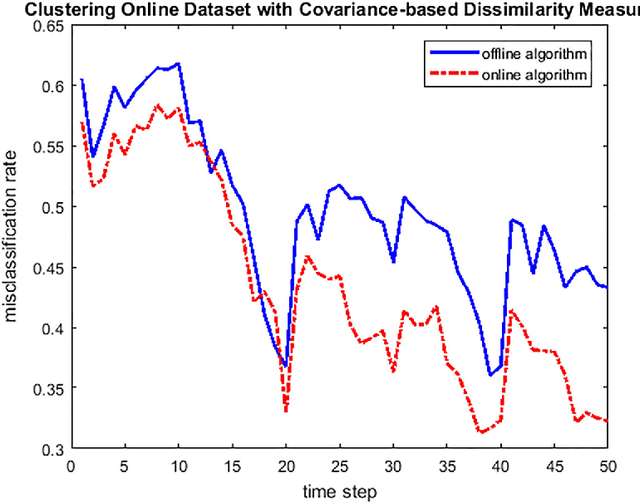

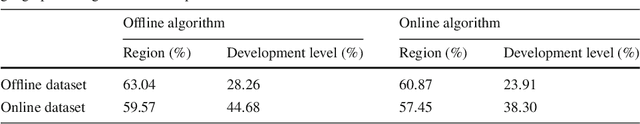

Abstract:We study the problem of clustering locally asymptotically self-similar stochastic processes when the true number of clusters is known a priori. A new covariance-based dissimilarity measure is introduced, from which the so-called approximately asymptotically consistent clustering algorithms are obtained. In a simulation study, data sampled from multifractional Brownian motions are clustered to illustrate the approximate asymptotic consistency of the proposed algorithms.

Covariance-based Dissimilarity Measures Applied to Clustering Wide-sense Stationary Ergodic Processes

Jan 27, 2018

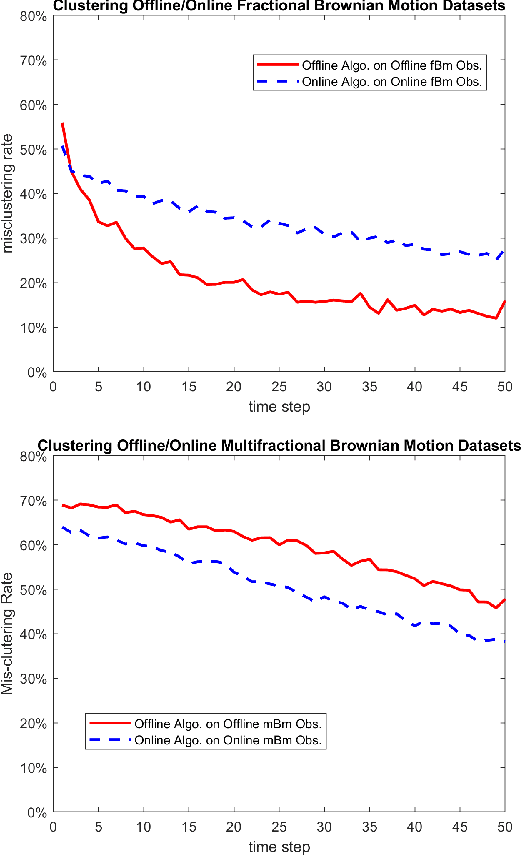

Abstract:We introduce a new unsupervised learning problem: clustering wide-sense stationary ergodic stochastic processes. A covariance-based dissimilarity measure and consistent algorithms are designed for clustering offline and online data settings, respectively. We also suggest a formal criterion on the efficiency of dissimilarity measures, and discuss some approaches to improve the efficiency of clustering algorithms when they are applied to cluster particular types of processes, such as self-similar processes with wide-sense stationary ergodic increments. Clustering synthetic data sampled from fractional Brownian motions is provided as an example of application.

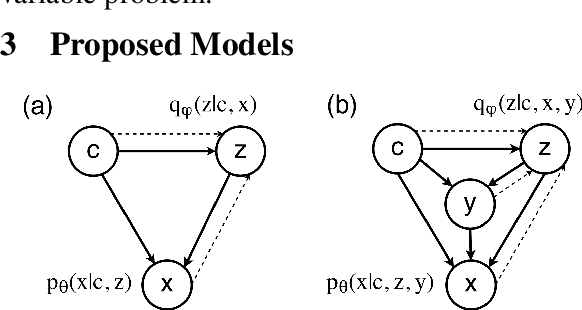

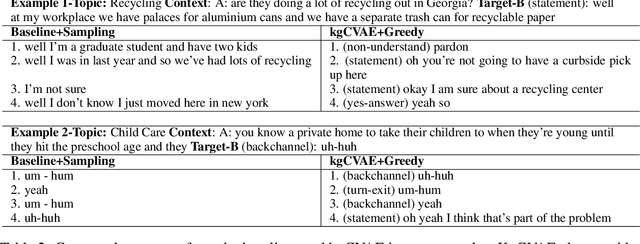

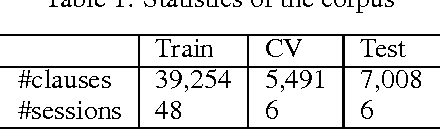

Learning Discourse-level Diversity for Neural Dialog Models using Conditional Variational Autoencoders

Oct 21, 2017

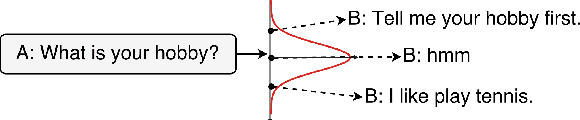

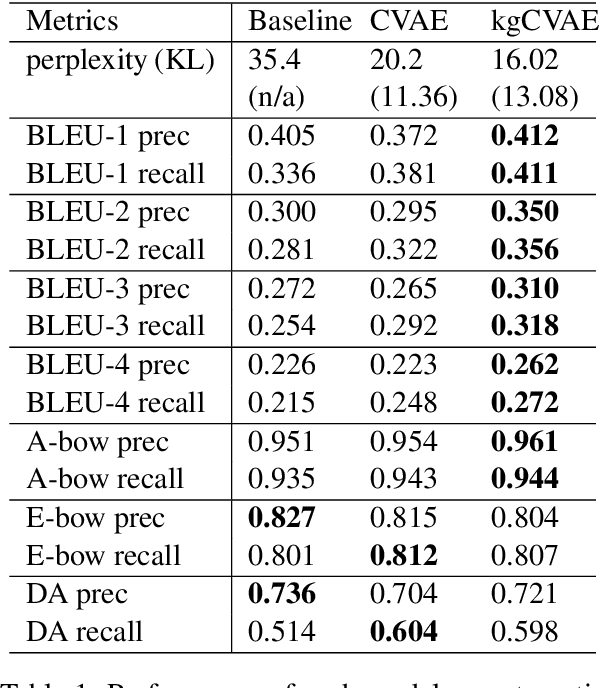

Abstract:While recent neural encoder-decoder models have shown great promise in modeling open-domain conversations, they often generate dull and generic responses. Unlike past work that has focused on diversifying the output of the decoder at word-level to alleviate this problem, we present a novel framework based on conditional variational autoencoders that captures the discourse-level diversity in the encoder. Our model uses latent variables to learn a distribution over potential conversational intents and generates diverse responses using only greedy decoders. We have further developed a novel variant that is integrated with linguistic prior knowledge for better performance. Finally, the training procedure is improved by introducing a bag-of-word loss. Our proposed models have been validated to generate significantly more diverse responses than baseline approaches and exhibit competence in discourse-level decision-making.
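
The bag-of-word loss named above can be sketched in a few lines. The abstract does not define it, so this sketch assumes the usual formulation: a single vocabulary-sized logit vector (hypothetically predicted from the latent variable and the dialog context) scores every word of the response independently of its position, and the loss is the summed negative log-probability of those words. The function names and the toy vocabulary size are illustrative.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def bag_of_words_loss(vocab_logits, response_token_ids):
    """Negative log-likelihood of the response words under one shared
    distribution over the vocabulary; word order is ignored (assumed form)."""
    log_probs = log_softmax(vocab_logits)
    return -sum(log_probs[t] for t in response_token_ids)


# Toy vocabulary of 4 words; uniform logits give probability 1/4 per word.
uniform_logits = [0.0, 0.0, 0.0, 0.0]
loss_uniform = bag_of_words_loss(uniform_logits, [0, 2])

# Raising the logits of the words that appear in the response lowers the loss.
peaked_logits = [2.0, 0.0, 2.0, 0.0]
loss_peaked = bag_of_words_loss(peaked_logits, [0, 2])
loss_reordered = bag_of_words_loss(peaked_logits, [2, 0])
```

Because the prediction cannot rely on the decoder's previous words, a term of this kind pushes information about the response into the latent variable, which is the motivation usually given for adding it to the variational objective.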

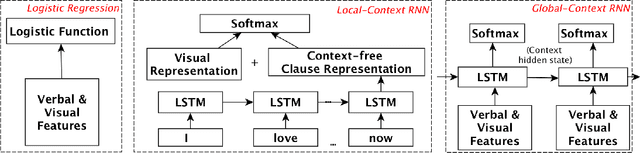

Leveraging Recurrent Neural Networks for Multimodal Recognition of Social Norm Violation in Dialog

Oct 10, 2016

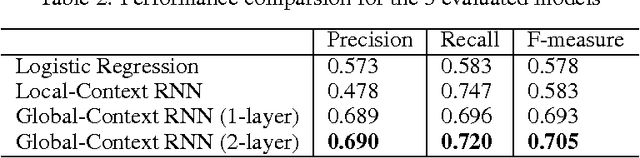

Abstract:Social norms are shared rules that govern and facilitate social interaction. Violating such social norms via teasing and insults may serve to upend power imbalances or, on the contrary, reinforce solidarity and rapport in conversation, rapport which is highly situated and context-dependent. In this work, we investigate the task of automatically identifying the phenomenon of social norm violation in discourse. Towards this goal, we leverage the power of recurrent neural networks and multimodal information present in the interaction, and propose a predictive model to recognize social norm violation. Using long-term temporal and contextual information, our model achieves an F1 score of 0.705. Implications of our work for developing a socially aware agent are discussed.
