Shrikanth Narayanan

Signal Analysis and Interpretation Lab, University of Southern California; Information Sciences Institute, University of Southern California

End-to-End Joint ASR and Speaker Role Diarization with Child-Adult Interactions

Jan 25, 2026

Abstract: Accurate transcription and speaker diarization of child-adult spoken interactions are crucial for developmental and clinical research. However, manual annotation is time-consuming and challenging to scale. Existing automated systems typically rely on cascaded speaker diarization and speech recognition pipelines, which can lead to error propagation. This paper presents a unified end-to-end framework that extends the Whisper encoder-decoder architecture to jointly model ASR and child-adult speaker role diarization. The proposed approach integrates: (i) a serialized output training scheme that emits speaker tags and start/end timestamps, (ii) a lightweight frame-level diarization head that enhances speaker-discriminative encoder representations, (iii) diarization-guided silence suppression for improved temporal precision, and (iv) a state-machine-based forced decoding procedure that guarantees structurally valid outputs. Comprehensive evaluations on two datasets demonstrate consistent and substantial improvements over two cascaded baselines, achieving lower multi-talker word error rates and demonstrating competitive diarization accuracy across both Whisper-small and Whisper-large models. These findings highlight the effectiveness and practical utility of the proposed joint modeling framework for generating reliable, speaker-attributed transcripts of child-adult interactions at scale. The code and model weights are publicly available.
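As a rough illustration of item (iv), a state-machine constraint on decoding might look like the sketch below. The token kinds (`SPEAKER`, `START_TS`, `WORD`, `END_TS`, `EOS`) and the segment layout are assumptions made for this example; the abstract does not specify the actual serialized format or how the paper implements the constraint.

```python
# Hypothetical grammar (not from the paper): each segment is
# SPEAKER START_TS WORD+ END_TS, segments repeat, and EOS ends the output.
ALLOWED = {
    "BEGIN":    {"SPEAKER", "EOS"},
    "SPEAKER":  {"START_TS"},
    "START_TS": {"WORD"},
    "WORD":     {"WORD", "END_TS"},
    "END_TS":   {"SPEAKER", "EOS"},
}

def constrained_pick(scores, prev_kind):
    """Return the best-scoring token kind that the state machine allows next.

    scores: dict mapping token kind -> model score (higher is better).
    prev_kind: kind of the previously emitted token, or "BEGIN".
    """
    candidates = {k: s for k, s in scores.items() if k in ALLOWED[prev_kind]}
    return max(candidates, key=candidates.get)

def is_valid(sequence):
    """Check that a full sequence of token kinds follows the assumed grammar."""
    state = "BEGIN"
    for kind in sequence:
        # Nothing may follow EOS, and every step must be an allowed transition.
        if state == "EOS" or kind not in ALLOWED[state]:
            return False
        state = kind
    return state == "EOS"
```

Under this assumed grammar, a decoder that prefers `WORD` right after a speaker tag is forced to emit a start timestamp first, so every emitted segment carries a speaker tag and both timestamps.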

Intelligence Requires Grounding But Not Embodiment

Jan 24, 2026

Abstract: Recent advances in LLMs have reignited scientific debate over whether embodiment is necessary for intelligence. We present the argument that intelligence requires grounding, a phenomenon entailed by embodiment, but not embodiment itself. We define intelligence as the possession of four properties -- motivation, predictive ability, understanding of causality, and learning from experience -- and argue that each can be achieved by a non-embodied, grounded agent. We use this to conclude that grounding, not embodiment, is necessary for intelligence. We then present a thought experiment of an intelligent LLM agent in a digital environment and address potential counterarguments.

Encoding Emotion Through Self-Supervised Eye Movement Reconstruction

Jan 21, 2026

Abstract: The relationship between emotional expression and eye movement is well-documented, with literature establishing that gaze patterns are reliable indicators of emotion. However, most studies utilize specialized, high-resolution eye-tracking equipment, limiting the potential reach of findings. We investigate how eye movement can be used to predict multimodal markers of emotional expression from naturalistic, low-resolution videos. We utilize a collection of video interviews from the USC Shoah Foundation's Visual History Archive with Holocaust survivors as they recount their experiences in the Auschwitz concentration camp. Inspired by pretraining methods on language models, we develop a novel gaze detection model that uses self-supervised eye movement reconstruction to effectively leverage unlabeled video. We use this model's encoder embeddings to fine-tune models on two downstream tasks related to emotional expression. The first is aligning eye movement with directional emotion estimates from speech. The second is using eye gaze as a predictor of three momentary manifestations of emotional behavior: laughing, crying/sobbing, and sighing. We find our new model is predictive of emotion outcomes and observe a positive correlation between pretraining performance and emotion processing performance for both experiments. We conclude that self-supervised eye movement reconstruction is an effective method for encoding the affective signal that eye movements carry.

Quantifying Speaker Embedding Phonological Rule Interactions in Accented Speech Synthesis

Jan 20, 2026

Abstract: Many spoken languages, including English, exhibit wide variation in dialects and accents, making accent control an important capability for flexible text-to-speech (TTS) models. Current TTS systems typically generate accented speech by conditioning on speaker embeddings associated with specific accents. While effective, this approach offers limited interpretability and controllability, as embeddings also encode traits such as timbre and emotion. In this study, we analyze the interaction between speaker embeddings and linguistically motivated phonological rules in accented speech synthesis. Using American and British English as a case study, we implement rules for flapping, rhoticity, and vowel correspondences. We propose the phoneme shift rate (PSR), a novel metric quantifying how strongly embeddings preserve or override rule-based transformations. Experiments show that combining rules with embeddings yields more authentic accents, while embeddings can attenuate or overwrite rules, revealing entanglement between accent and speaker identity. Our findings highlight rules as a lever for accent control and a framework for evaluating disentanglement in speech generation.

VoxCog: Towards End-to-End Multilingual Cognitive Impairment Classification through Dialectal Knowledge

Jan 12, 2026

Abstract: In this work, we present a novel perspective on cognitive impairment classification from speech by integrating speech foundation models that explicitly recognize speech dialects. Our motivation is based on the observation that individuals with Alzheimer's Disease (AD) or mild cognitive impairment (MCI) often produce measurable speech characteristics, such as slower articulation rate and lengthened sounds, in a manner similar to dialectal phonetic variations seen in speech. Building on this idea, we introduce VoxCog, an end-to-end framework that uses pre-trained dialect models to detect AD or MCI without relying on additional modalities such as text or images. Through experiments on multiple multilingual datasets for AD and MCI detection, we demonstrate that initializing the model with a dialect classifier built on top of speech foundation models consistently improves predictive performance for AD and MCI detection. Our trained models yield similar or often better performance compared to previous approaches that ensembled several computational methods using different signal modalities. In particular, our end-to-end speech-based model achieves 87.5% and 85.9% accuracy on the ADReSS 2020 challenge and ADReSSo 2021 challenge test sets, respectively, outperforming existing solutions that use multimodal ensemble-based computation or LLMs.

Authors Should Label Their Own Documents

Dec 27, 2025

Abstract: Third-party annotation is the status quo for labeling text, but egocentric information such as sentiment and belief can at best only be approximated by a third-person proxy. We introduce author labeling, an annotation technique where the writer of the document itself annotates the data at the moment of creation. We collaborate with a commercial chatbot with over 20,000 users to deploy an author labeling annotation system. This system identifies task-relevant queries, generates on-the-fly labeling questions, and records authors' answers in real time. We train and deploy an online-learning model architecture for product recommendation with author-labeled data to improve performance. We train our model to minimize the prediction error on questions generated for a set of predetermined subjective beliefs using author-labeled responses. Our model achieves a 537% improvement in click-through rate compared to an industry advertising baseline running concurrently. We then compare the quality and practicality of author labeling to three traditional annotation approaches for sentiment analysis and find author labeling to be higher quality, faster to acquire, and cheaper. These findings reinforce existing literature that annotations, especially for egocentric and subjective beliefs, are significantly higher quality when labeled by the author rather than a third party. To facilitate broader scientific adoption, we release an author labeling service for the research community at https://academic.echollm.io.

Authors Should Annotate

Dec 15, 2025

Abstract: The status quo for labeling text is third-party annotation, but there are many cases where information directly from the document's source would be preferable over a third-person proxy, especially for egocentric features like sentiment and belief. We introduce author labeling, an annotation technique where the writer of the document itself annotates the data at the moment of creation. We collaborate with a commercial chatbot with over 10,000 users to deploy an author labeling annotation system for subjective features related to product recommendation. This system identifies task-relevant queries, generates on-the-fly labeling questions, and records authors' answers in real time. We train and deploy an online-learning model architecture for product recommendation that continuously improves from author labeling and find it achieved a 534% increase in click-through rate compared to an industry advertising baseline running concurrently. We then compare the quality and practicality of author labeling to three traditional annotation approaches for sentiment analysis and find author labeling to be higher quality, faster to acquire, and cheaper. These findings reinforce existing literature that annotations, especially for egocentric and subjective beliefs, are significantly higher quality when labeled by the author rather than a third party. To facilitate broader scientific adoption, we release an author labeling service for the research community at academic.echollm.io.

Informed Bootstrap Augmentation Improves EEG Decoding

Nov 15, 2025

Abstract: Electroencephalography (EEG) offers detailed access to neural dynamics but remains constrained by noise and trial-by-trial variability, limiting decoding performance in data-restricted or complex paradigms. Data augmentation is often employed to enhance feature representations, yet conventional uniform averaging overlooks differences in trial informativeness and can degrade representational quality. We introduce a weighted bootstrapping approach that prioritizes more reliable trials to generate higher-quality augmented samples. In a Sentence Evaluation paradigm, weights were computed from relative ERP differences and applied during probabilistic sampling and averaging. Across conditions, weighted bootstrapping improved decoding accuracy relative to unweighted bootstrapping (from 68.35% to 71.25% at best), demonstrating that emphasizing reliable trials strengthens representational quality. The results demonstrate that reliability-based augmentation yields more robust and discriminative EEG representations. The code is publicly available at https://github.com/lyricists/NeuroBootstrap.
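The idea of applying weights during both sampling and averaging can be sketched as follows. This is a minimal illustration, not the released NeuroBootstrap code: the function name, its parameters, and the assumption that reliability weights are already available as one non-negative number per trial are all hypothetical, and the ERP-based weight computation described in the abstract is not reproduced here.

```python
import random

def weighted_bootstrap(trials, weights, n_samples, draws_per_sample, seed=0):
    """Build augmented trials as weighted averages of resampled trials.

    trials: list of equal-length lists of floats (one list per trial).
    weights: one non-negative reliability score per trial (assumed given).
    """
    rng = random.Random(seed)
    indices = range(len(trials))
    n_points = len(trials[0])
    augmented = []
    for _ in range(n_samples):
        # Probabilistic sampling: higher-weight trials are drawn more often.
        picked = rng.choices(indices, weights=weights, k=draws_per_sample)
        total = sum(weights[i] for i in picked)
        # Weighted averaging of the drawn trials, point by point.
        augmented.append([
            sum(weights[i] * trials[i][t] for i in picked) / total
            for t in range(n_points)
        ])
    return augmented
```

Setting all weights equal reduces this to ordinary bootstrap averaging, which gives a natural unweighted baseline for comparison.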

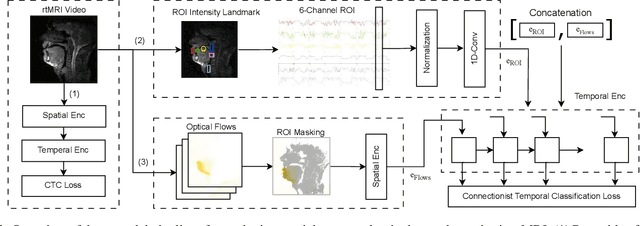

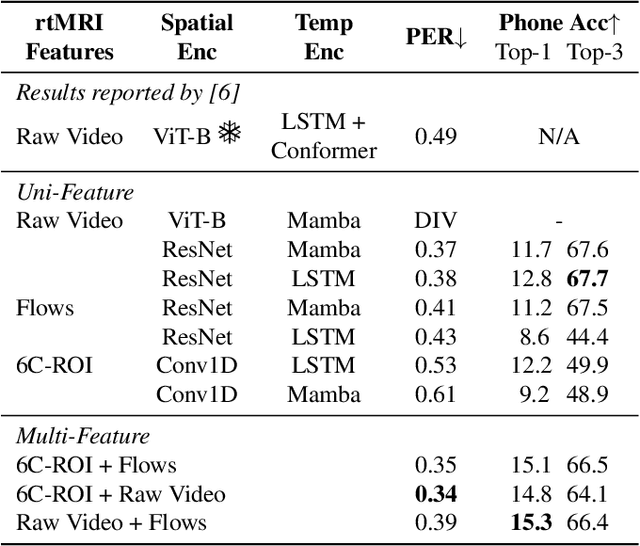

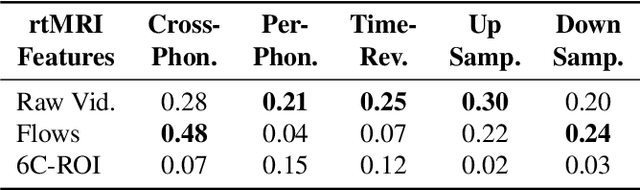

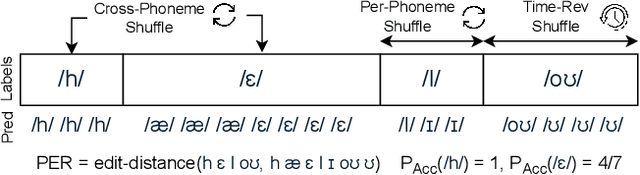

Interpretable Modeling of Articulatory Temporal Dynamics from real-time MRI for Phoneme Recognition

Sep 19, 2025

Abstract: Real-time Magnetic Resonance Imaging (rtMRI) visualizes vocal tract action, offering a comprehensive window into speech articulation. However, its signals are high dimensional and noisy, hindering interpretation. We investigate compact representations of spatiotemporal articulatory dynamics for phoneme recognition from midsagittal vocal tract rtMRI videos. We compare three feature types: (1) raw video, (2) optical flow, and (3) six linguistically-relevant regions of interest (ROIs) for articulator movements. We evaluate models trained independently on each representation, as well as multi-feature combinations. Results show that multi-feature models consistently outperform single-feature baselines, with the lowest phoneme error rate (PER) of 0.34 obtained by combining ROI and raw video. Temporal fidelity experiments demonstrate a reliance on fine-grained articulatory dynamics, while ROI ablation studies reveal strong contributions from tongue and lips. Our findings highlight how rtMRI-derived features provide accuracy and interpretability, and establish strategies for leveraging articulatory data in speech processing.
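For readers unfamiliar with the metric, phoneme error rate is conventionally the edit distance between the predicted and reference phoneme sequences divided by the reference length. The sketch below follows that standard definition; the abstract does not state which scoring tool was used, and the function names are chosen here for illustration.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion of a reference token
                curr[j - 1] + 1,         # insertion of a hypothesis token
                prev[j - 1] + (r != h),  # substitution, or free match
            ))
        prev = curr
    return prev[-1]

def phoneme_error_rate(ref, hyp):
    """Edit distance normalized by the number of reference phonemes."""
    return edit_distance(ref, hyp) / len(ref)
```

On this scale, a PER of 0.34 corresponds to roughly one edit for every three reference phonemes.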

Can Layer-wise SSL Features Improve Zero-Shot ASR Performance for Children's Speech?

Aug 28, 2025

Abstract: Automatic Speech Recognition (ASR) systems often struggle to accurately process children's speech due to its distinct and highly variable acoustic and linguistic characteristics. While recent advancements in self-supervised learning (SSL) models have greatly enhanced the transcription of adult speech, accurately transcribing children's speech remains a significant challenge. This study investigates the effectiveness of layer-wise features extracted from state-of-the-art SSL pre-trained models (specifically Wav2Vec2, HuBERT, Data2Vec, and WavLM) in improving ASR performance for children's speech in zero-shot scenarios. A detailed analysis of features extracted from these models was conducted, integrating them into a simplified DNN-based ASR system using the Kaldi toolkit. The analysis identified the most effective layers for enhancing ASR performance on children's speech in a zero-shot scenario, where WSJCAM0 adult speech was used for training and PFSTAR children's speech for testing. Experimental results indicated that Layer 22 of the Wav2Vec2 model achieved the lowest Word Error Rate (WER) of 5.15%, representing a 51.64% relative improvement over direct zero-shot decoding using Wav2Vec2 (WER of 10.65%). Additionally, age group-wise analysis demonstrated consistent performance improvements with increasing age, along with significant gains observed even in younger age groups using the SSL features. Further experiments on the CMU Kids dataset confirmed similar trends, highlighting the generalizability of the proposed approach.
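The relative improvement quoted above follows from the two WER figures in the abstract. A small check, with a helper name chosen here for illustration:

```python
def relative_improvement(baseline_wer, new_wer):
    """Relative WER reduction, in percent of the baseline WER."""
    return 100.0 * (baseline_wer - new_wer) / baseline_wer

# (10.65 - 5.15) / 10.65 = 5.50 / 10.65
print(round(relative_improvement(10.65, 5.15), 2))  # 51.64
```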

* Accepted
