Laureano Moro-Velazquez

Spoken DialogSum: An Emotion-Rich Conversational Dataset for Spoken Dialogue Summarization

Dec 17, 2025

Abstract: Recent audio language models can follow long conversations. However, research on emotion-aware or spoken dialogue summarization is constrained by the lack of data that links speech, summaries, and paralinguistic cues. We introduce Spoken DialogSum, the first corpus aligning raw conversational audio with factual summaries, emotion-rich summaries, and utterance-level labels for speaker age, gender, and emotion. The dataset is built in two stages: first, an LLM rewrites DialogSum scripts with Switchboard-style fillers and back-channels, then tags each utterance with emotion, pitch, and speaking rate. Second, an expressive TTS engine synthesizes speech from the tagged scripts, aligned with paralinguistic labels. Spoken DialogSum comprises 13,460 emotion-diverse dialogues, each paired with both a factual and an emotion-focused summary. We release an online demo at https://fatfat-emosum.github.io/EmoDialog-Sum-Audio-Samples/, with plans to release the full dataset in the near future. Baselines show that an Audio-LLM raises emotional-summary ROUGE-L by 28% relative to a cascaded ASR-LLM system, confirming the value of end-to-end speech modeling.

Vox-Profile: A Speech Foundation Model Benchmark for Characterizing Diverse Speaker and Speech Traits

May 20, 2025

Abstract: We introduce Vox-Profile, a comprehensive benchmark to characterize rich speaker and speech traits using speech foundation models. Unlike existing works that focus on a single dimension of speaker traits, Vox-Profile provides holistic and multi-dimensional profiles that reflect both static speaker traits (e.g., age, sex, accent) and dynamic speech properties (e.g., emotion, speech flow). This benchmark is grounded in speech science and linguistics, developed with domain experts to accurately index speaker and speech characteristics. We report benchmark experiments using over 15 publicly available speech datasets and several widely used speech foundation models that target various static and dynamic speaker and speech properties. In addition to benchmark experiments, we showcase several downstream applications supported by Vox-Profile. First, we show that Vox-Profile can augment existing speech recognition datasets to analyze ASR performance variability. Vox-Profile is also used as a tool to evaluate the performance of speech generation systems. Finally, we assess the quality of our automated profiles through comparison with human evaluation and show convergent validity. Vox-Profile is publicly available at: https://github.com/tiantiaf0627/vox-profile-release.

Automatic Proficiency Assessment in L2 English Learners

May 05, 2025

Abstract: Second language (L2) proficiency in English is usually evaluated perceptually by English teachers or expert evaluators, with inherent intra- and inter-rater variability. This paper explores deep learning techniques for comprehensive L2 proficiency assessment, addressing both the speech signal and its corresponding transcription. We analyze spoken proficiency classification using diverse architectures, including 2D CNN, frequency-based CNN, ResNet, and a pretrained wav2vec 2.0 model. Additionally, we examine text-based proficiency assessment by fine-tuning a BERT language model within resource constraints. Finally, we tackle the complex task of spontaneous dialogue assessment, managing long-form audio and speaker interactions through separate applications of wav2vec 2.0 and BERT models. Results from experiments on the EFCamDat and ANGLISH datasets and a private dataset highlight the potential of deep learning, especially the pretrained wav2vec 2.0 model, for robust automated L2 proficiency evaluation.

Detecting Neurodegenerative Diseases using Frame-Level Handwriting Embeddings

Feb 10, 2025

Abstract: In this study, we explored the use of spectrograms to represent handwriting signals for assessing neurodegenerative diseases in a cohort of 42 healthy controls (CTL), 35 subjects with Parkinson's Disease (PD), 21 with Alzheimer's Disease (AD), and 15 with Parkinson's Disease Mimics (PDM). We applied CNN and CNN-BLSTM models for binary classification using both multi-channel fixed-size and frame-based spectrograms. Our results showed that handwriting tasks and spectrogram channel combinations significantly impacted classification performance. The highest F1-score (89.8%) was achieved for AD vs. CTL, while PD vs. CTL reached 74.5%, and PD vs. PDM scored 77.97%. CNN consistently outperformed CNN-BLSTM. Different sliding window lengths were tested for constructing frame-based spectrograms. A 1-second window worked best for AD, longer windows improved PD classification, and window length had little effect on PD vs. PDM.
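The frame-based spectrograms described in this abstract depend on cutting each handwriting recording into sliding windows. A minimal sketch of that framing step follows, in plain Python. The function name `sliding_windows`, the hop size, and the sample rate are illustrative assumptions; the abstract reports only that different window lengths (such as 1 second) were tested, and this is not the authors' implementation.

```python
from typing import List, Sequence


def sliding_windows(
    signal: Sequence[float],
    sample_rate_hz: float,
    window_s: float,
    hop_s: float,
) -> List[List[float]]:
    """Split a 1-D signal into fixed-length, possibly overlapping frames.

    Hypothetical helper: window and hop are given in seconds and converted
    to sample counts. Trailing samples that do not fill a whole window are
    dropped.
    """
    win = int(round(window_s * sample_rate_hz))
    hop = int(round(hop_s * sample_rate_hz))
    if win <= 0 or hop <= 0:
        raise ValueError("window and hop must each cover at least one sample")
    # Start indices advance by `hop` and stop once a full window no longer fits.
    return [
        list(signal[start:start + win])
        for start in range(0, len(signal) - win + 1, hop)
    ]


# Toy example: 10 samples at 2 Hz, 2-second windows, 1-second hop.
frames = sliding_windows(list(range(10)), sample_rate_hz=2, window_s=2.0, hop_s=1.0)
```

Each frame would then be converted to a spectrogram before classification. A longer `window_s` gives fewer frames with more context per frame, which is the trade-off the window-length comparison in the abstract examines.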

CA-SSLR: Condition-Aware Self-Supervised Learning Representation for Generalized Speech Processing

Dec 05, 2024

Abstract: We introduce Condition-Aware Self-Supervised Learning Representation (CA-SSLR), a generalist conditioning model broadly applicable to various speech-processing tasks. Compared to standard fine-tuning methods that optimize for downstream models, CA-SSLR integrates language and speaker embeddings from earlier layers, making the SSL model aware of the current language and speaker context. This approach reduces the reliance on input audio features while preserving the integrity of the base SSLR. CA-SSLR improves the model's capabilities and demonstrates its generality on unseen tasks with minimal task-specific tuning. Our method employs linear modulation to dynamically adjust internal representations, enabling fine-grained adaptability without significantly altering the original model behavior. Experiments show that CA-SSLR reduces the number of trainable parameters, mitigates overfitting, and excels in under-resourced and unseen tasks. Specifically, CA-SSLR achieves a 10% relative reduction in LID errors, a 37% improvement in ASR CER on the ML-SUPERB benchmark, and a 27% decrease in SV EER on VoxCeleb-1, demonstrating its effectiveness.
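The abstract mentions "linear modulation" of internal representations without giving its exact form. One common reading is a feature-wise affine transform, h' = scale * h + shift, applied to every frame. The sketch below illustrates that reading only. The function name `linear_modulation` is hypothetical, and passing `scale` and `shift` in directly is a simplifying assumption: in a conditioned model they would be predicted from the language and speaker embeddings.

```python
from typing import List


def linear_modulation(
    hidden: List[List[float]],
    scale: List[float],
    shift: List[float],
) -> List[List[float]]:
    """Apply h' = scale * h + shift to each frame, feature by feature.

    hidden: frames x features. scale and shift: one value per feature
    (assumed here to be supplied by some condition-dependent predictor).
    """
    if len(scale) != len(shift):
        raise ValueError("scale and shift must have the same length")
    out = []
    for frame in hidden:
        if len(frame) != len(scale):
            raise ValueError("frame width must match scale/shift length")
        out.append([g * h + b for h, g, b in zip(frame, scale, shift)])
    return out


# Toy example: two frames with two features each.
hidden = [[1.0, 2.0], [3.0, 4.0]]
modulated = linear_modulation(hidden, scale=[2.0, 0.5], shift=[0.0, 1.0])

# With scale = 1 and shift = 0 the transform is the identity, so the base
# representation passes through unchanged.
unchanged = linear_modulation(hidden, scale=[1.0, 1.0], shift=[0.0, 0.0])
```

The identity case shows why this kind of modulation can adapt a model without significantly altering its original behavior: small deviations of `scale` from 1 and `shift` from 0 produce small changes in the representation.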

Noise-robust Speech Separation with Fast Generative Correction

Jun 11, 2024

Abstract: Speech separation, the task of isolating multiple speech sources from a mixed audio signal, remains challenging in noisy environments. In this paper, we propose a generative correction method to enhance the output of a discriminative separator. By leveraging a generative corrector based on a diffusion model, we refine the separation process for single-channel mixture speech by removing noise and perceptually unnatural distortions. Furthermore, we optimize the generative model using a predictive loss to streamline the diffusion model's reverse process into a single step and rectify any errors introduced by the reverse process. Our method achieves state-of-the-art performance on the in-domain Libri2Mix noisy dataset and on out-of-domain WSJ with a variety of noises, improving SI-SNR by 22-35% relative to SepFormer, demonstrating robustness and strong generalization capabilities.

Improving fairness for spoken language understanding in atypical speech with Text-to-Speech

Nov 16, 2023

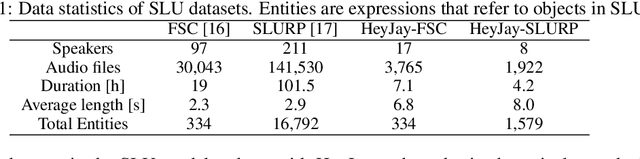

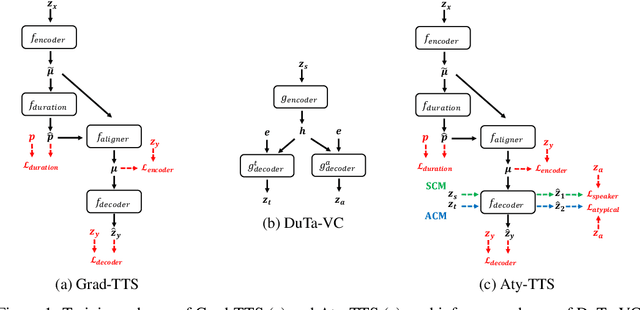

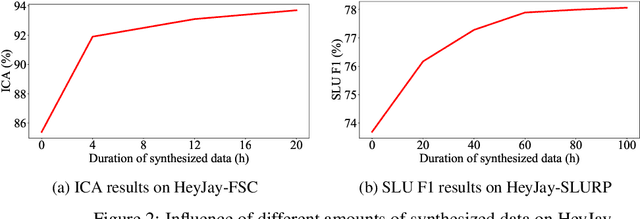

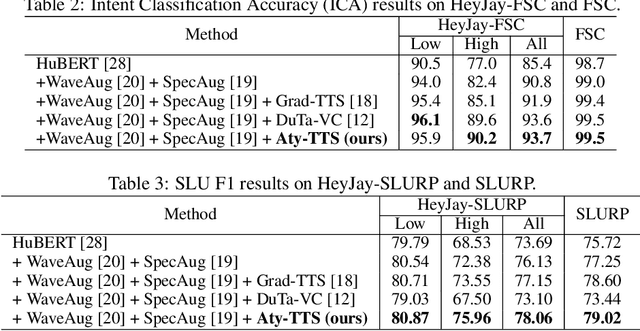

Abstract: Spoken language understanding (SLU) systems often exhibit suboptimal performance in processing atypical speech, typically caused by neurological conditions and motor impairments. Recent advancements in Text-to-Speech (TTS) synthesis-based augmentation for fairer SLU have struggled to accurately capture the unique vocal characteristics of atypical speakers, largely due to insufficient data. To address this issue, we present a novel data augmentation method for atypical speakers by finetuning a TTS model, called Aty-TTS. Aty-TTS models speaker and atypical characteristics via knowledge transfer from a voice conversion model. Then, we use the augmented data to train SLU models adapted to atypical speech. To train these data augmentation models and evaluate the resulting SLU systems, we have collected a new atypical speech dataset containing intent annotation. Both objective and subjective assessments validate that Aty-TTS is capable of generating high-quality atypical speech. Furthermore, it serves as an effective data augmentation strategy, contributing to fairer SLU systems that can better accommodate individuals with atypical speech patterns.

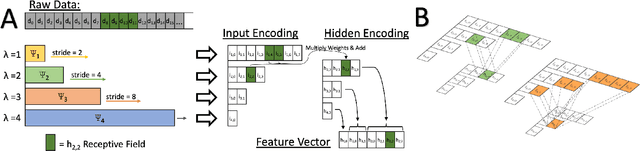

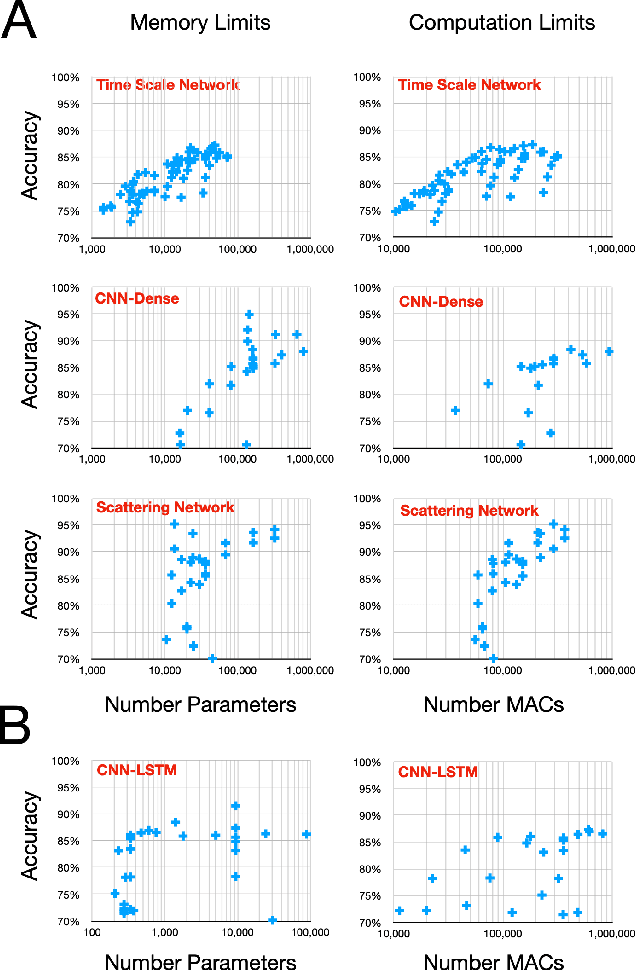

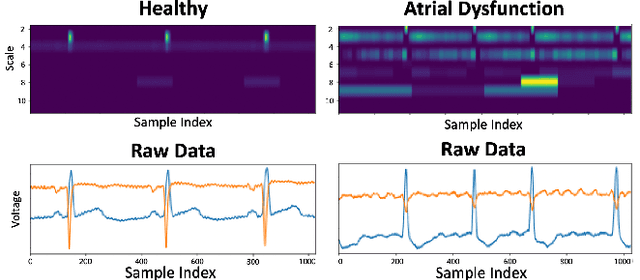

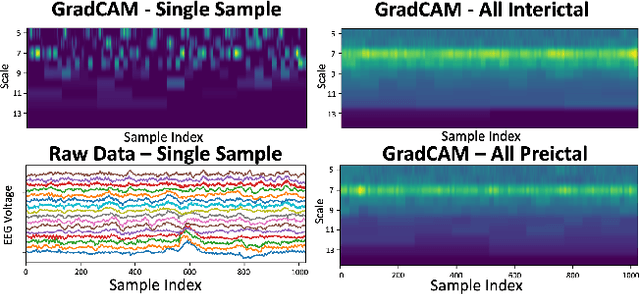

Time Scale Network: A Shallow Neural Network For Time Series Data

Nov 10, 2023

Abstract: Time series data is often composed of information at multiple time scales, particularly in biomedical data. While numerous deep learning strategies exist to capture this information, many make networks larger, require more data, are more demanding to compute, and are difficult to interpret. This limits their usefulness in real-world applications facing even modest computational or data constraints and can further complicate their translation into practice. We present a minimal, computationally efficient Time Scale Network combining the translation and dilation sequence used in discrete wavelet transforms with traditional convolutional neural networks and back-propagation. The network simultaneously learns features at many time scales for sequence classification with significantly reduced parameters and operations. We demonstrate advantages in atrial dysfunction detection on ECG signals, including superior accuracy-per-parameter and accuracy-per-operation, fast training and inference speeds, and visualization and interpretation of learned patterns. We also demonstrate impressive performance in seizure prediction using EEG signals. Our network isolated a few time scales that could be strategically selected to achieve 90.9% accuracy using only 1,133 active parameters and consistently converged on pulsatile waveform shapes. This method does not rest on any constraints or assumptions regarding signal content and could be leveraged in any area of time series analysis dealing with signals containing features at many time scales.

Leveraging Pretrained Image-text Models for Improving Audio-Visual Learning

Sep 08, 2023

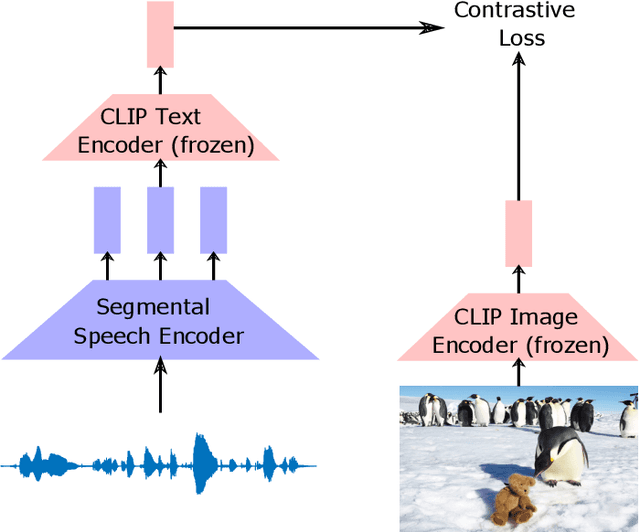

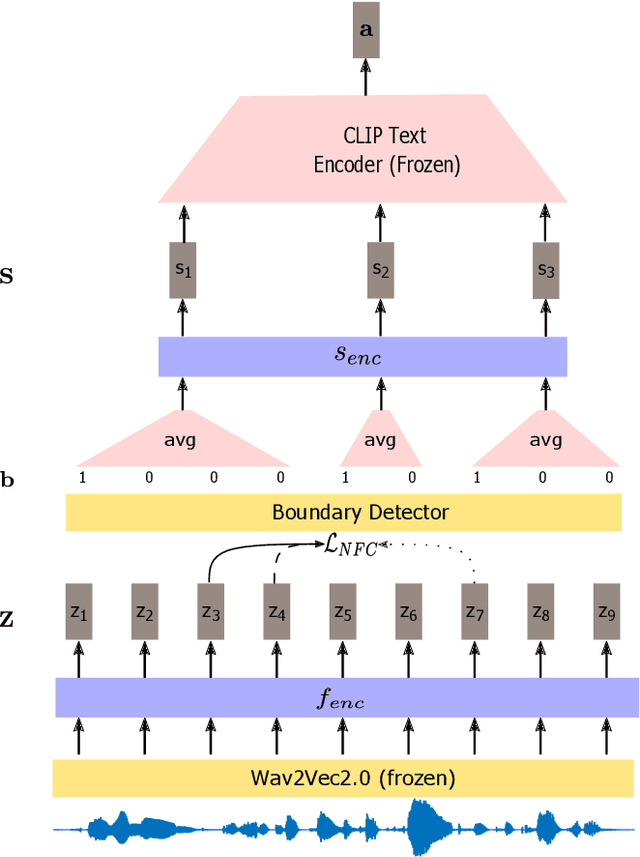

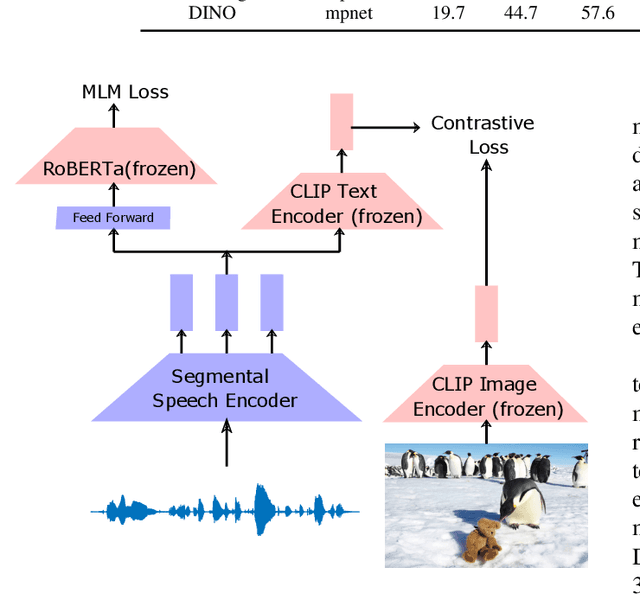

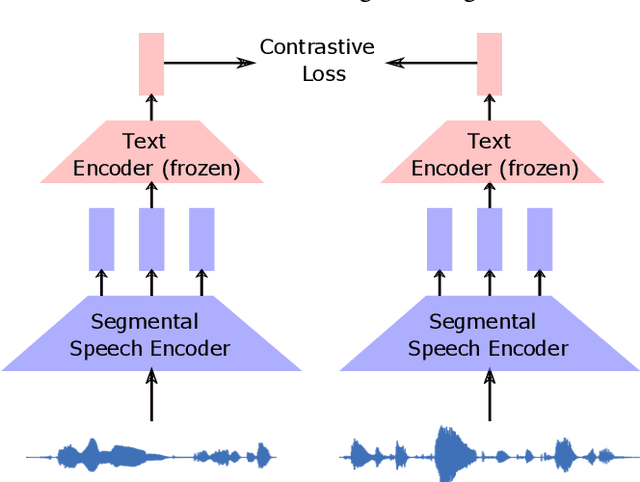

Abstract: Visually grounded speech systems learn from paired images and their spoken captions. Recently, there have been attempts to utilize visually grounded models trained from images and their corresponding text captions, such as CLIP, to improve the performance of speech-based visually grounded models. However, the majority of these models only utilize the pretrained image encoder. Cascaded SpeechCLIP attempted to generate localized word-level information and utilize both the pretrained image and text encoders. Despite using both, its authors noticed a substantial drop in retrieval performance. We proposed Segmental SpeechCLIP, which used a hierarchical segmental speech encoder to generate sequences of word-like units. We used the pretrained CLIP text encoder on top of these word-like unit representations and showed significant improvements over the cascaded variant of SpeechCLIP. Segmental SpeechCLIP directly learns the word embeddings as input to the CLIP text encoder, bypassing the vocabulary embeddings. Here, we explore mapping audio to CLIP vocabulary embeddings via regularization and quantization. As our objective is to distill semantic information into the speech encoders, we explore the usage of large unimodal pretrained language models as the text encoders. Our method enables us to bridge image and text encoders trained with unimodal data (e.g., DINO and RoBERTa). Finally, we extend our framework to audio-only settings where only pairs of semantically related audio are available. Experiments show that audio-only systems perform close to the audio-visual system.

DuTa-VC: A Duration-aware Typical-to-atypical Voice Conversion Approach with Diffusion Probabilistic Model

Jun 18, 2023

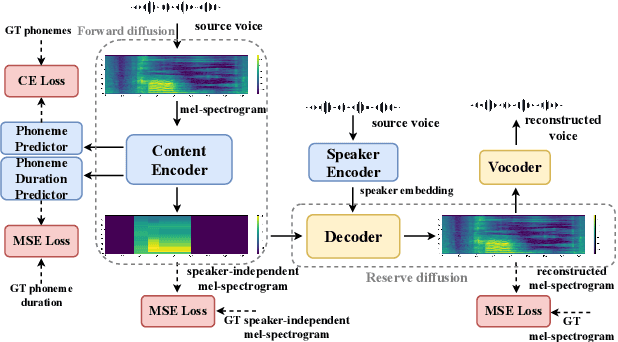

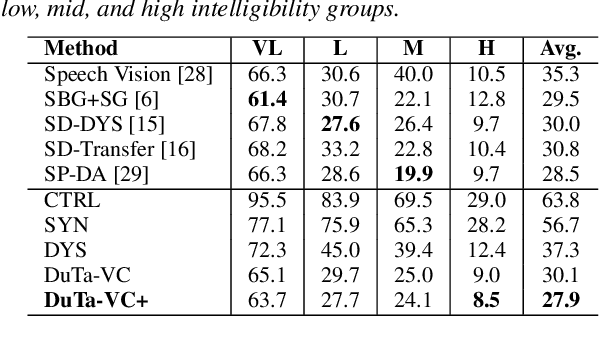

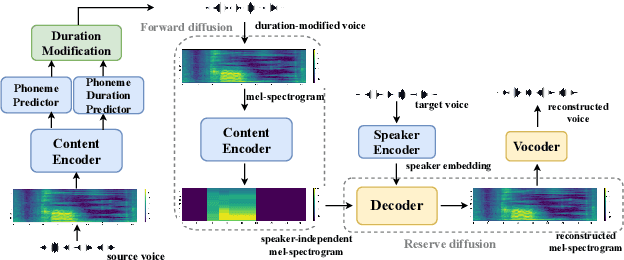

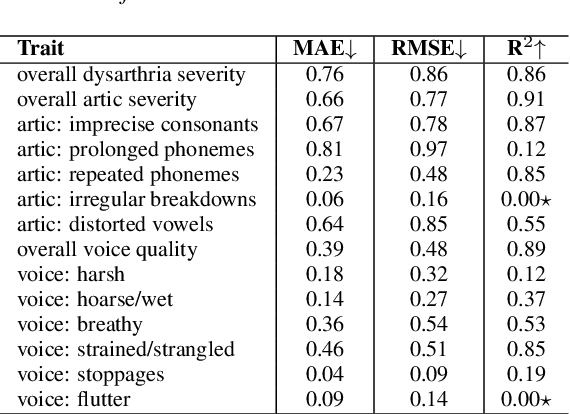

Abstract: We present a novel typical-to-atypical voice conversion approach (DuTa-VC), which (i) can be trained with nonparallel data, (ii) is the first to introduce a diffusion probabilistic model for this task, (iii) preserves the target speaker identity, and (iv) is aware of the phoneme duration of the target speaker. DuTa-VC consists of three parts: an encoder transforms the source mel-spectrogram into a duration-modified speaker-independent mel-spectrogram, a decoder performs the reverse diffusion to generate the target mel-spectrogram, and a vocoder is applied to reconstruct the waveform. Objective evaluations conducted on the UASpeech dataset show that DuTa-VC is able to capture severity characteristics of dysarthric speech, preserves speaker identity, and significantly improves dysarthric speech recognition when used for data augmentation. Subjective evaluations by two expert speech pathologists validate that DuTa-VC can preserve the severity and type of dysarthria of the target speakers in the synthesized speech.
