Saurabhchand Bhati

USAD: Universal Speech and Audio Representation via Distillation

Jun 23, 2025

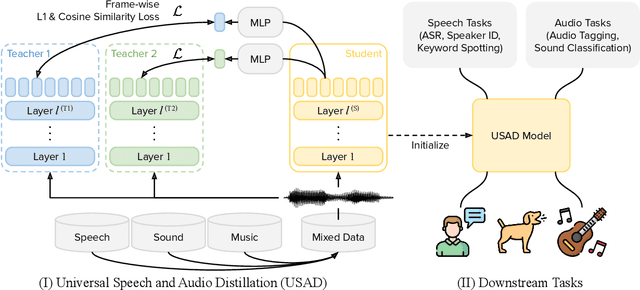

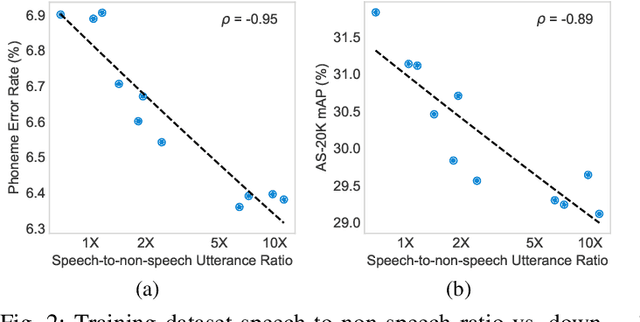

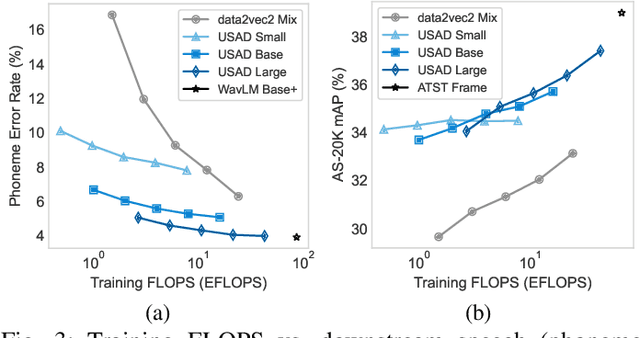

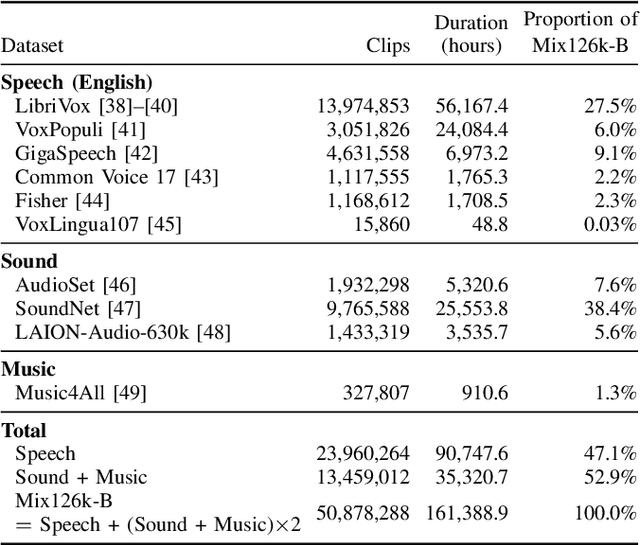

Abstract:Self-supervised learning (SSL) has revolutionized audio representations, yet models often remain domain-specific, focusing on either speech or non-speech tasks. In this work, we present Universal Speech and Audio Distillation (USAD), a unified approach to audio representation learning that integrates diverse audio types - speech, sound, and music - into a single model. USAD employs efficient layer-to-layer distillation from domain-specific SSL models to train a student on a comprehensive audio dataset. USAD offers competitive performance across various benchmarks and datasets, including frame and instance-level speech processing tasks, audio tagging, and sound classification, achieving near state-of-the-art results with a single encoder on SUPERB and HEAR benchmarks.
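The layer-to-layer distillation mentioned in the abstract can be illustrated with a minimal sketch. This is not USAD's implementation: the function names, the mean-squared-error distance, and the student-to-teacher layer map are assumptions chosen for illustration, and a real system would compare sequences of frame-level features rather than single vectors.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same dimension")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def layer_to_layer_loss(
    student_layers: List[Vector],
    teacher_layers: List[Vector],
    layer_map: Dict[int, int],
) -> float:
    """Average the per-layer distance over (student index -> teacher index) pairs.

    layer_map is a hypothetical pairing; which layers are matched is a design choice.
    """
    losses = [mse(student_layers[s], teacher_layers[t]) for s, t in layer_map.items()]
    return sum(losses) / len(losses)


def multi_teacher_loss(
    student_layers: List[Vector],
    teachers: Dict[str, List[Vector]],
    layer_map: Dict[int, int],
) -> float:
    """Average the layer-to-layer loss over several domain-specific teachers."""
    per_teacher = [
        layer_to_layer_loss(student_layers, layers, layer_map)
        for layers in teachers.values()
    ]
    return sum(per_teacher) / len(per_teacher)
```

In this sketch, a two-layer student matched against layers 0 and 2 of each teacher would use `layer_map = {0: 0, 1: 2}`; the same student features are compared against every teacher and the losses are averaged.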

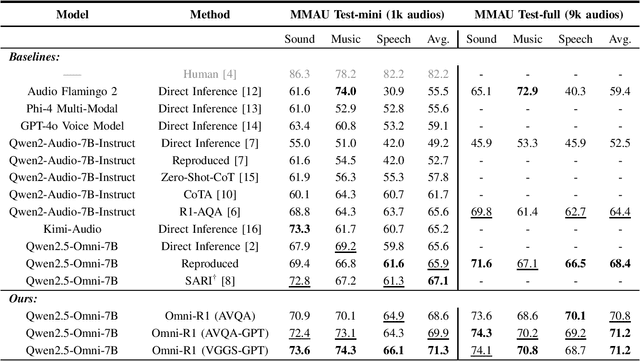

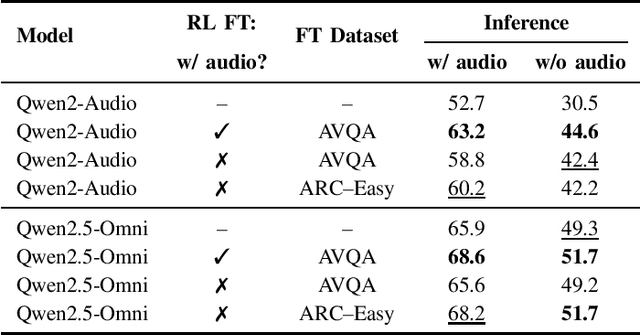

Omni-R1: Do You Really Need Audio to Fine-Tune Your Audio LLM?

May 14, 2025

Abstract:We propose Omni-R1 which fine-tunes a recent multi-modal LLM, Qwen2.5-Omni, on an audio question answering dataset with the reinforcement learning method GRPO. This leads to new State-of-the-Art performance on the recent MMAU benchmark. Omni-R1 achieves the highest accuracies on the sounds, music, speech, and overall average categories, both on the Test-mini and Test-full splits. To understand the performance improvement, we tested models both with and without audio and found that much of the performance improvement from GRPO could be attributed to better text-based reasoning. We also made a surprising discovery that fine-tuning without audio on a text-only dataset was effective at improving the audio-based performance.
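The core idea of GRPO, scoring each sampled answer relative to the other answers drawn for the same question, can be sketched as follows. This shows only the group-relative advantage step; the policy update, clipping, and any KL penalty are omitted, and the function name and the 0/1 correctness reward are assumptions for illustration rather than details taken from Omni-R1.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize rewards within one group of answers sampled for the same question.

    Answers that beat the group average get a positive advantage, the rest a
    negative one. eps avoids division by zero when all rewards are equal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical group: four sampled answers to one multiple-choice question,
# rewarded 1.0 when the chosen option is correct and 0.0 otherwise.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

When every answer in a group receives the same reward, all advantages are zero, so that question contributes no learning signal in this formulation.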

CAV-MAE Sync: Improving Contrastive Audio-Visual Mask Autoencoders via Fine-Grained Alignment

May 02, 2025

Abstract:Recent advances in audio-visual learning have shown promising results in learning representations across modalities. However, most approaches rely on global audio representations that fail to capture fine-grained temporal correspondences with visual frames. Additionally, existing methods often struggle with conflicting optimization objectives when trying to jointly learn reconstruction and cross-modal alignment. In this work, we propose CAV-MAE Sync as a simple yet effective extension of the original CAV-MAE framework for self-supervised audio-visual learning. We address three key challenges: First, we tackle the granularity mismatch between modalities by treating audio as a temporal sequence aligned with video frames, rather than using global representations. Second, we resolve conflicting optimization goals by separating contrastive and reconstruction objectives through dedicated global tokens. Third, we improve spatial localization by introducing learnable register tokens that reduce semantic load on patch tokens. We evaluate the proposed approach on AudioSet, VGG Sound, and the ADE20K Sound dataset on zero-shot retrieval, classification, and localization tasks, demonstrating state-of-the-art performance and outperforming more complex architectures.
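Treating audio as a temporal sequence aligned with video frames can be pictured as cutting the audio time axis into one contiguous segment per frame. The sketch below is only an illustration of that idea: the function name and the equal-length split are assumptions, not the segmentation used by CAV-MAE Sync.

```python
from typing import List, Tuple


def align_audio_to_frames(num_audio_steps: int, num_video_frames: int) -> List[Tuple[int, int]]:
    """Split audio time steps into one contiguous [start, end) range per video frame."""
    if num_video_frames <= 0:
        raise ValueError("need at least one video frame")
    if num_audio_steps < num_video_frames:
        raise ValueError("need at least one audio step per video frame")
    # Integer arithmetic keeps the boundaries deterministic and covers every step once.
    bounds = [i * num_audio_steps // num_video_frames for i in range(num_video_frames + 1)]
    return [(bounds[i], bounds[i + 1]) for i in range(num_video_frames)]


# Hypothetical clip: 1000 spectrogram time steps paired with 10 sampled video frames.
segments = align_audio_to_frames(1000, 10)
```

Each video frame can then be contrasted with the audio features from its own segment instead of a single clip-level audio embedding.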

mWhisper-Flamingo for Multilingual Audio-Visual Noise-Robust Speech Recognition

Feb 03, 2025

Abstract:Audio-Visual Speech Recognition (AVSR) combines lip-based video with audio and can improve performance in noise, but most methods are trained only on English data. One limitation is the lack of large-scale multilingual video data, which makes it hard to train models from scratch. In this work, we propose mWhisper-Flamingo for multilingual AVSR which combines the strengths of a pre-trained audio model (Whisper) and video model (AV-HuBERT). To enable better multi-modal integration and improve the noisy multilingual performance, we introduce decoder modality dropout where the model is trained both on paired audio-visual inputs and separate audio/visual inputs. mWhisper-Flamingo achieves state-of-the-art WER on MuAViC, an AVSR dataset of 9 languages. Audio-visual mWhisper-Flamingo consistently outperforms audio-only Whisper on all languages in noisy conditions.
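Modality dropout of the kind described here amounts to randomly choosing, per training example, whether the decoder sees both modalities or only one. The following is a minimal sketch under stated assumptions: the function names and the sampling probabilities are hypothetical and are not taken from mWhisper-Flamingo.

```python
import random
from typing import Optional, Sequence, Tuple

Features = Sequence[float]


def sample_modalities(rng: random.Random, p_av: float = 0.5, p_audio: float = 0.25) -> Tuple[bool, bool]:
    """Return (use_audio, use_video); at least one modality is always kept."""
    u = rng.random()
    if u < p_av:
        return True, True      # paired audio-visual input
    if u < p_av + p_audio:
        return True, False     # audio only
    return False, True         # video only


def apply_modality_dropout(
    audio: Features, video: Features, rng: random.Random
) -> Tuple[Optional[Features], Optional[Features]]:
    """Replace the dropped modality with None for this training example."""
    use_audio, use_video = sample_modalities(rng)
    return (audio if use_audio else None, video if use_video else None)


rng = random.Random(0)
audio_in, video_in = apply_modality_dropout([0.1, 0.2], [0.3, 0.4], rng)
```

Training on all three cases is what lets a single model fall back on either stream when the other is missing or noisy.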

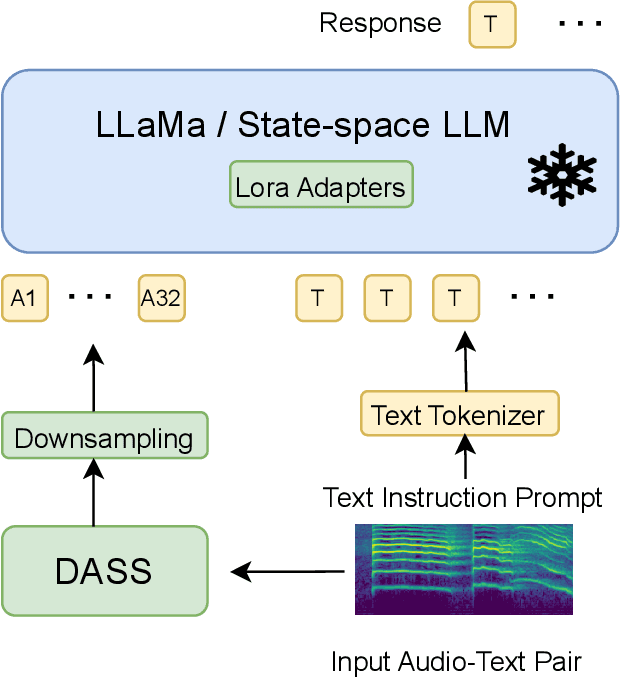

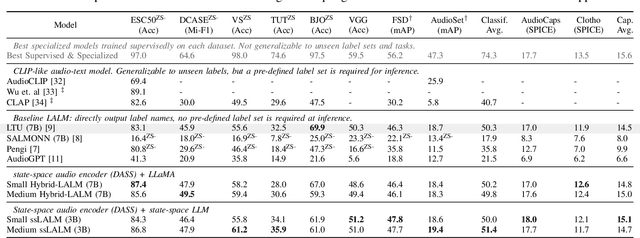

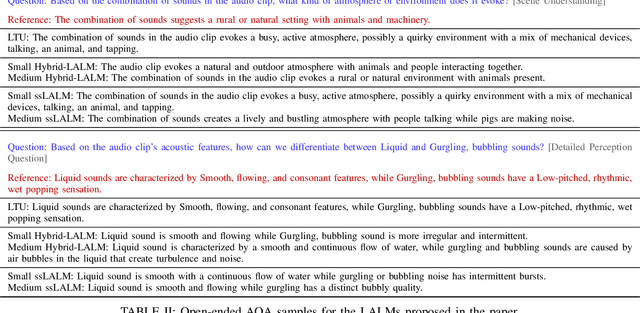

State-Space Large Audio Language Models

Nov 24, 2024

Abstract:Large Audio Language Models (LALMs) combine audio perception models with Large Language Models (LLMs) and show a remarkable ability to reason about the input audio, infer the meaning, and understand the intent. However, these systems rely on Transformers, which scale quadratically with input sequence length, posing computational challenges when deploying them in memory- and time-constrained scenarios. Recently, state-space models (SSMs) have emerged as an alternative to transformer networks. While there have been successful attempts to replace transformer-based audio perception models with state-space ones, state-space-based LALMs remain unexplored. We first replace the transformer-based audio perception module, then replace the transformer-based LLM, and propose the first state-space-based LALM. Experimental results demonstrate that the state-space-based LALM, despite having significantly fewer parameters, performs competitively with transformer-based LALMs on close-ended tasks on a variety of datasets.
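The scaling contrast between the two model families can be made concrete with a toy example. The sketch below is a scalar linear state-space recurrence, not the architecture used in the paper; the parameter values and function names are assumptions for illustration.

```python
from typing import List


def ssm_scan(xs: List[float], a: float = 0.9, b: float = 1.0, c: float = 1.0) -> List[float]:
    """Run h_t = a * h_{t-1} + b * x_t and y_t = c * h_t over the sequence.

    One constant-cost update per step, so time grows linearly with len(xs)
    and only the current state h has to be kept in memory.
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys


def attention_pair_count(n: int) -> int:
    """Number of query-key scores full self-attention computes for n tokens."""
    return n * n


outputs = ssm_scan([1.0, 0.0, 0.0], a=0.5)
pairs_short = attention_pair_count(1_000)
pairs_long = attention_pair_count(10_000)
```

Making the input ten times longer costs the recurrence ten times more steps, while the number of attention scores grows a hundredfold.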

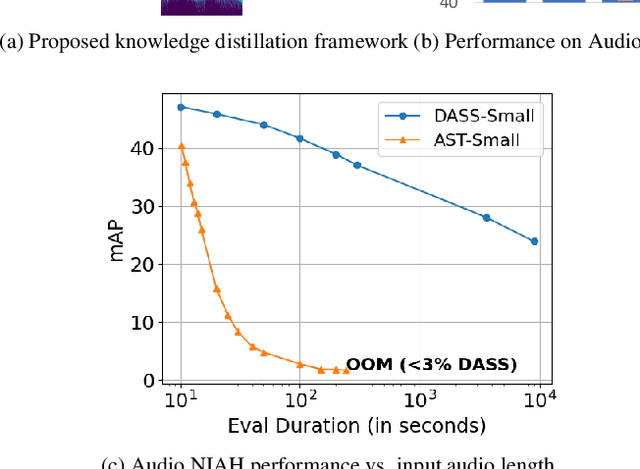

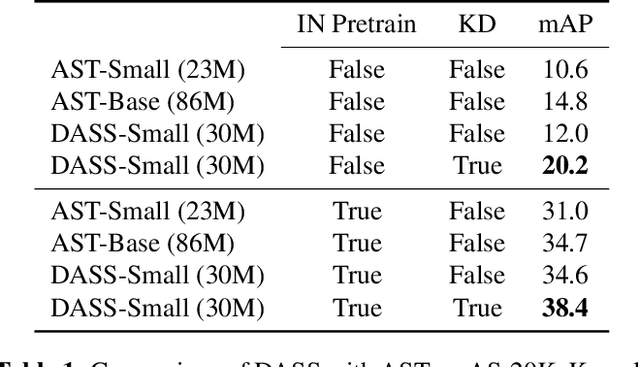

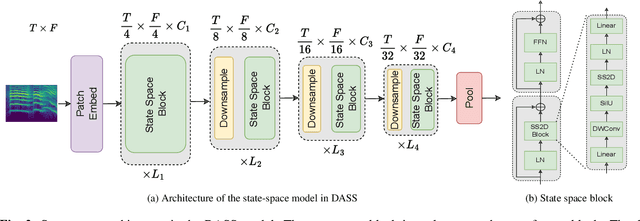

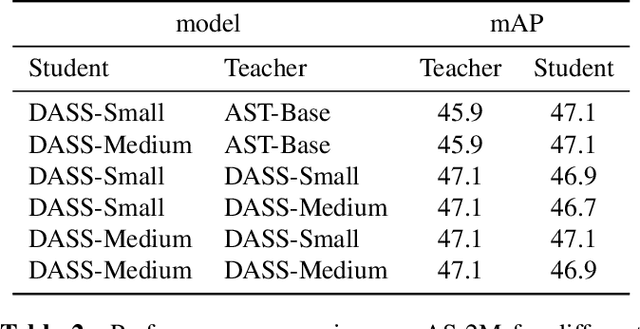

DASS: Distilled Audio State Space Models Are Stronger and More Duration-Scalable Learners

Jul 04, 2024

Abstract:State-space models (SSMs) have emerged as an alternative to Transformers for audio modeling due to their high computational efficiency with long inputs. While recent efforts on Audio SSMs have reported encouraging results, two main limitations remain: First, in 10-second short audio tagging tasks, Audio SSMs still underperform compared to Transformer-based models such as Audio Spectrogram Transformer (AST). Second, although Audio SSMs theoretically support long audio inputs, their actual performance with long audio has not been thoroughly evaluated. To address these limitations, in this paper, 1) we applied knowledge distillation in Audio SSM training, resulting in a model called Knowledge Distilled Audio SSM (DASS). To the best of our knowledge, it is the first SSM that outperforms Transformers on AudioSet, achieving an mAP of 47.6; and 2) we designed a new test called Audio Needle In A Haystack (Audio NIAH). We find that DASS, trained with only 10-second audio clips, can retrieve sound events in audio recordings up to 2.5 hours long, while the AST model fails when the input is just 50 seconds, demonstrating that SSMs are indeed more duration scalable.
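Knowledge distillation for a multi-label tagging task is often written as a weighted sum of a ground-truth loss and a teacher-matching loss. The sketch below shows that generic form; the binary cross-entropy terms, the weight `lam`, and the function names are assumptions for illustration and may differ from the loss actually used to train DASS.

```python
import math
from typing import Sequence


def bce(probs: Sequence[float], targets: Sequence[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy; targets may be hard labels or soft teacher outputs."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(probs)


def distillation_loss(
    student_probs: Sequence[float],
    labels: Sequence[float],
    teacher_probs: Sequence[float],
    lam: float = 0.5,
) -> float:
    """Blend the label loss with a loss that pulls the student toward the teacher."""
    return lam * bce(student_probs, labels) + (1.0 - lam) * bce(student_probs, teacher_probs)


# Hypothetical two-class example: the teacher's soft scores act as extra targets.
loss = distillation_loss([0.5, 0.5], [1.0, 0.0], [0.5, 0.5])
```

Setting `lam = 1.0` recovers ordinary supervised training, and `lam = 0.0` trains on the teacher's outputs alone.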

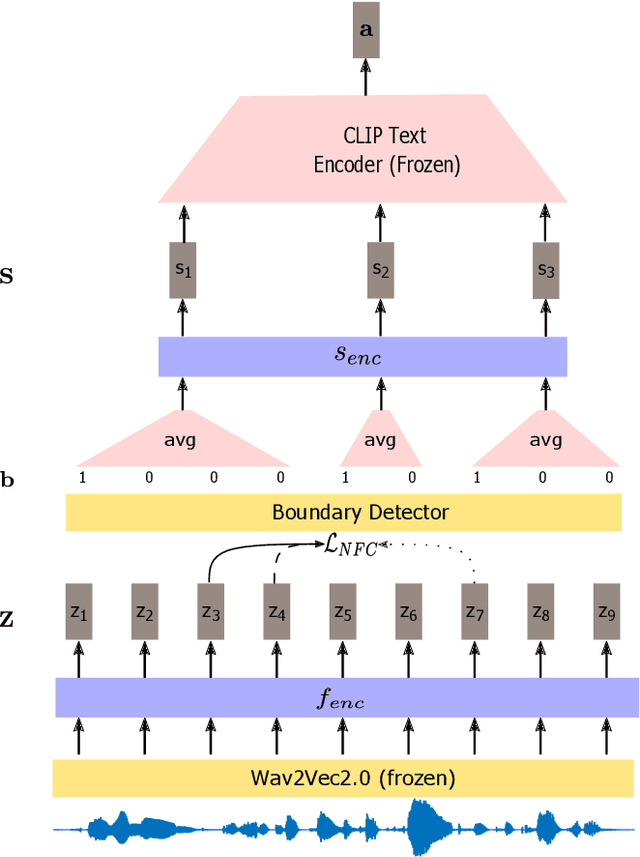

Audio-Visual Neural Syntax Acquisition

Oct 11, 2023

Abstract:We study phrase structure induction from visually-grounded speech. The core idea is to first segment the speech waveform into sequences of word segments, and subsequently induce phrase structure using the inferred segment-level continuous representations. We present the Audio-Visual Neural Syntax Learner (AV-NSL) that learns phrase structure by listening to audio and looking at images, without ever being exposed to text. By training on paired images and spoken captions, AV-NSL exhibits the capability to infer meaningful phrase structures that are comparable to those derived by naturally-supervised text parsers, for both English and German. Our findings extend prior work in unsupervised language acquisition from speech and grounded grammar induction, and present one approach to bridge the gap between the two topics.

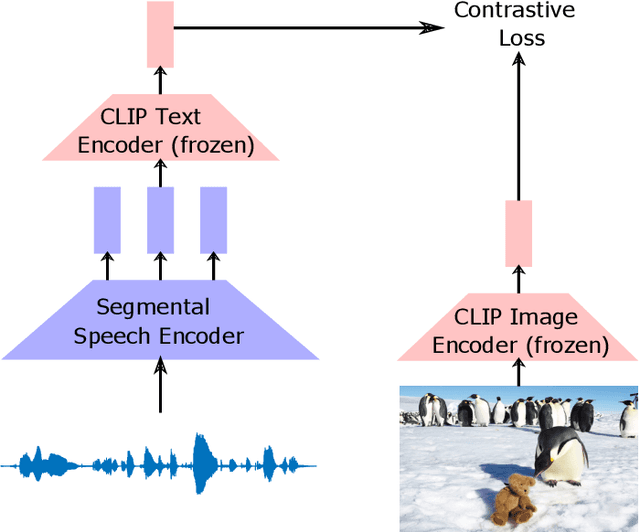

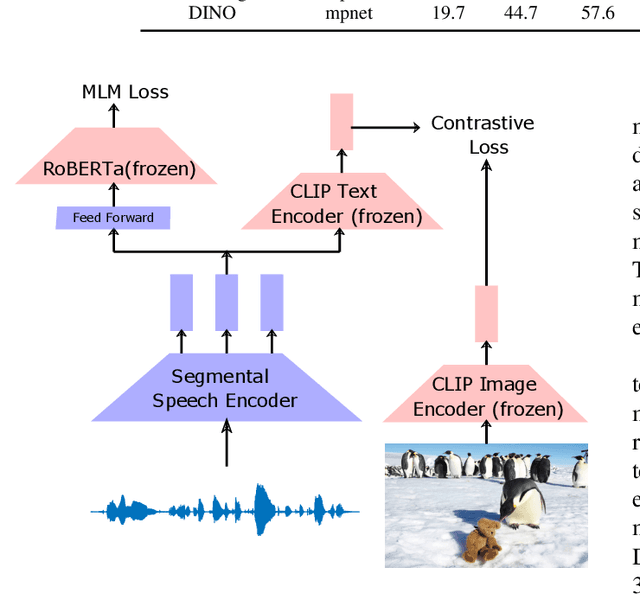

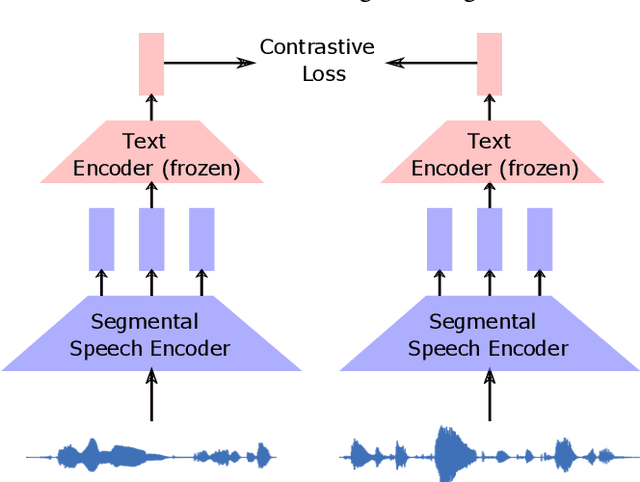

Leveraging Pretrained Image-text Models for Improving Audio-Visual Learning

Sep 08, 2023

Abstract:Visually grounded speech systems learn from paired images and their spoken captions. Recently, there have been attempts to utilize the visually grounded models trained from images and their corresponding text captions, such as CLIP, to improve speech-based visually grounded models' performance. However, the majority of these models only utilize the pretrained image encoder. Cascaded SpeechCLIP attempted to generate localized word-level information and utilize both the pretrained image and text encoders. Despite using both, they noticed a substantial drop in retrieval performance. We proposed Segmental SpeechCLIP which used a hierarchical segmental speech encoder to generate sequences of word-like units. We used the pretrained CLIP text encoder on top of these word-like unit representations and showed significant improvements over the cascaded variant of SpeechCLIP. Segmental SpeechCLIP directly learns the word embeddings as input to the CLIP text encoder bypassing the vocabulary embeddings. Here, we explore mapping audio to CLIP vocabulary embeddings via regularization and quantization. As our objective is to distill semantic information into the speech encoders, we explore the usage of large unimodal pretrained language models as the text encoders. Our method enables us to bridge image and text encoders e.g. DINO and RoBERTa trained with uni-modal data. Finally, we extend our framework in audio-only settings where only pairs of semantically related audio are available. Experiments show that audio-only systems perform close to the audio-visual system.
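Mapping a continuous audio-derived vector onto a fixed vocabulary through quantization can be sketched as a nearest-neighbor lookup. This is an illustration only: the squared Euclidean distance, the toy vocabulary, and the function name are assumptions, not the procedure used in the paper.

```python
from typing import List, Sequence


def nearest_vocab_index(vec: Sequence[float], vocab: List[Sequence[float]]) -> int:
    """Return the index of the vocabulary embedding closest to vec."""
    if not vocab:
        raise ValueError("vocabulary must not be empty")
    best_index = 0
    best_dist = float("inf")
    for i, emb in enumerate(vocab):
        dist = sum((x - y) ** 2 for x, y in zip(vec, emb))
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index


# Hypothetical 2-D vocabulary embeddings and one word-like unit representation.
toy_vocab = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
token_id = nearest_vocab_index([0.9, 1.2], toy_vocab)
```

The selected index can then stand in for a text token, so a pretrained text encoder receives embeddings from its own vocabulary rather than free-form vectors.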

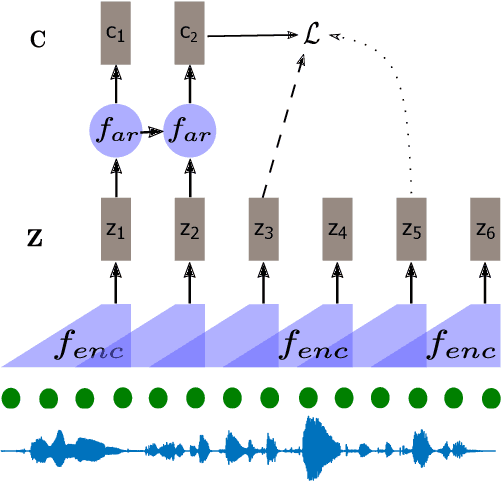

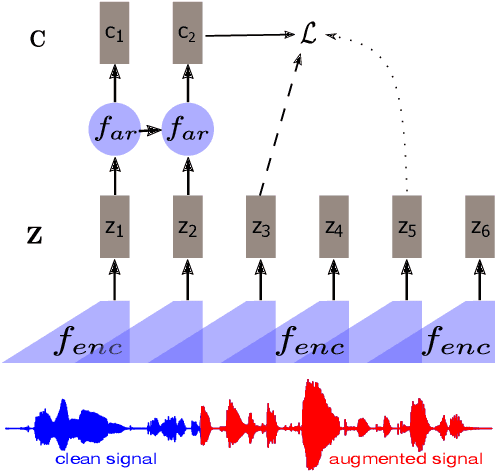

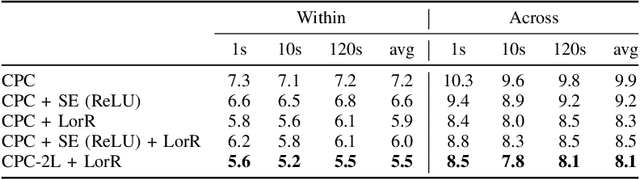

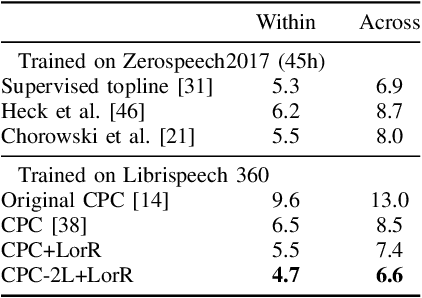

Regularizing Contrastive Predictive Coding for Speech Applications

Apr 26, 2023

Abstract:Self-supervised methods such as Contrastive Predictive Coding (CPC) have greatly improved the quality of the unsupervised representations. These representations significantly reduce the amount of labeled data needed for downstream task performance, such as automatic speech recognition. CPC learns representations by learning to predict future frames given current frames. Based on the observation that the acoustic information, e.g., phones, changes slower than the feature extraction rate in CPC, we propose regularization techniques that impose slowness constraints on the features. Here we propose two regularization techniques: Self-expressing constraint and Left-or-Right regularization. We evaluate the proposed model on ABX and linear phone classification tasks, acoustic unit discovery, and automatic speech recognition. The regularized CPC trained on 100 hours of unlabeled data matches the performance of the baseline CPC trained on 360 hours of unlabeled data. We also show that our regularization techniques are complementary to data augmentation and can further boost the system's performance. In monolingual, cross-lingual, or multilingual settings, with/without data augmentation, regardless of the amount of data used for training, our regularized models outperformed the baseline CPC models on the ABX task.
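The contrastive prediction objective behind CPC, and the general notion of a slowness constraint, can be sketched in a few lines. The InfoNCE form below is the standard contrastive loss; the `slowness_penalty` function is a generic stand-in (mean squared change between consecutive frames) and is not the paper's Self-expressing or Left-or-Right regularizer. All function names are assumptions for illustration.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def info_nce(prediction: Vector, positive: Vector, negatives: List[Vector]) -> float:
    """Negative log-probability of picking the true future frame among distractors."""
    scores = [dot(prediction, positive)] + [dot(prediction, n) for n in negatives]
    m = max(scores)  # subtract the max for a numerically stable log-sum-exp
    log_denominator = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_denominator - scores[0]


def slowness_penalty(frames: List[Vector]) -> float:
    """Mean squared change between consecutive frame features (generic stand-in)."""
    diffs = [
        sum((x - y) ** 2 for x, y in zip(cur, nxt))
        for cur, nxt in zip(frames, frames[1:])
    ]
    return sum(diffs) / len(diffs)


contrastive = info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
smoothness = slowness_penalty([[0.0, 0.0], [1.0, 0.0], [1.0, 0.0]])
```

A regularized objective in this spirit would add a weighted slowness term to the contrastive loss, discouraging features from changing faster than the underlying phones do.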

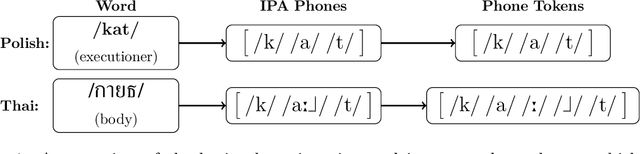

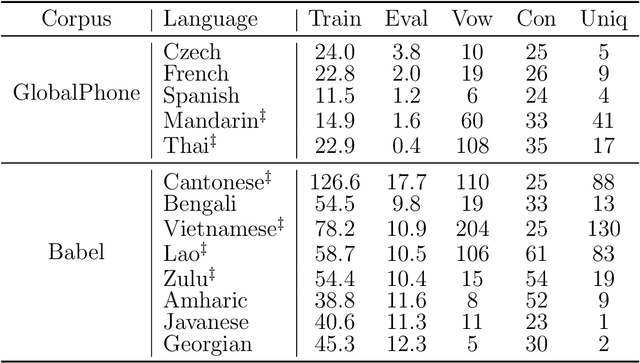

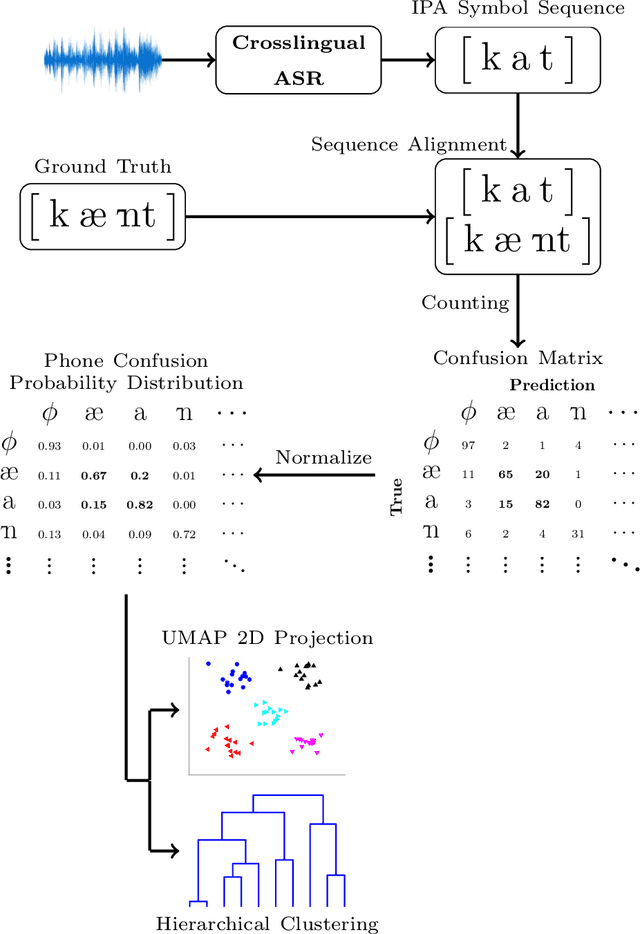

Discovering Phonetic Inventories with Crosslingual Automatic Speech Recognition

Jan 28, 2022

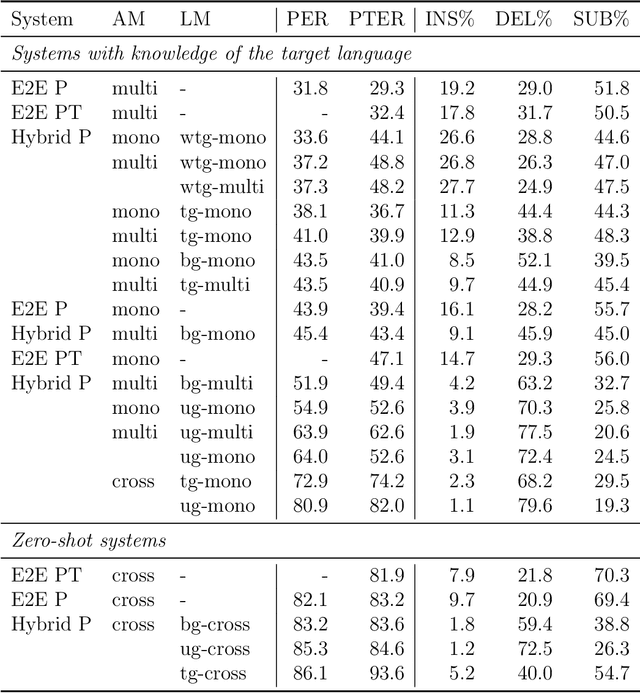

Abstract:The high cost of data acquisition makes Automatic Speech Recognition (ASR) model training problematic for most existing languages, including languages that do not even have a written script, or for which the phone inventories remain unknown. Past works explored multilingual training, transfer learning, as well as zero-shot learning in order to build ASR systems for these low-resource languages. While it has been shown that the pooling of resources from multiple languages is helpful, we have not yet seen a successful application of an ASR model to a language unseen during training. A crucial step in the adaptation of ASR from seen to unseen languages is the creation of the phone inventory of the unseen language. The ultimate goal of our work is to build the phone inventory of a language unseen during training in an unsupervised way without any knowledge about the language. In this paper, we 1) investigate the influence of different factors (i.e., model architecture, phonotactic model, type of speech representation) on phone recognition in an unknown language; 2) provide an analysis of which phones transfer well across languages and which do not in order to understand the limitations of and areas for further improvement for automatic phone inventory creation; and 3) present different methods to build a phone inventory of an unseen language in an unsupervised way. To that end, we conducted mono-, multi-, and crosslingual experiments on a set of 13 phonetically diverse languages and several in-depth analyses. We found a number of universal phone tokens (IPA symbols) that are well-recognized cross-linguistically. Through a detailed analysis of results, we conclude that unique sounds, similar sounds, and tone languages remain a major challenge for phonetic inventory discovery.
