Sadiq Hussain

Explainable Artificial Intelligence for Drug Discovery and Development -- A Comprehensive Survey

Sep 21, 2023

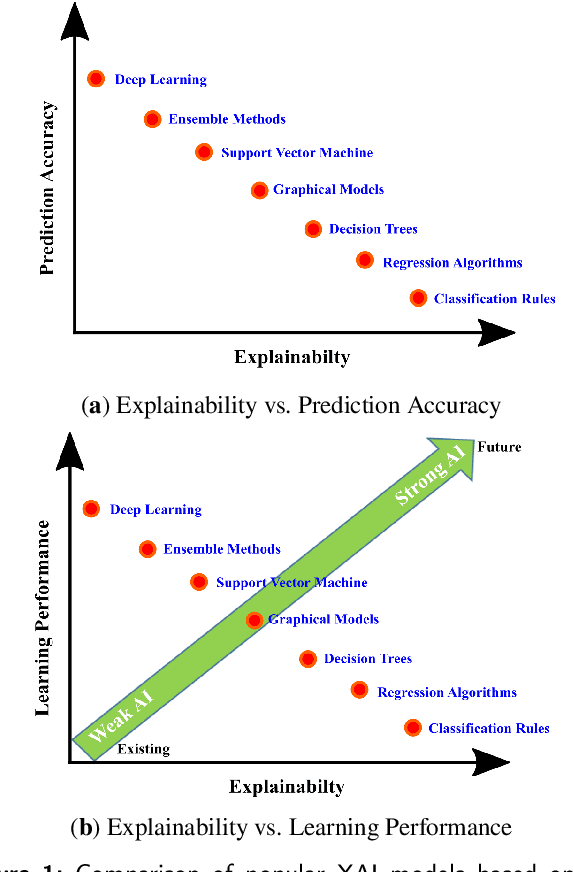

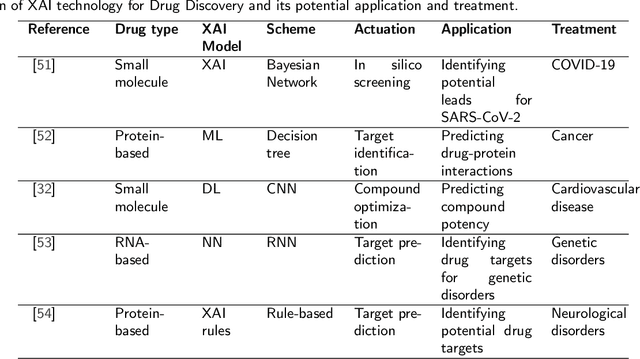

Abstract: The field of drug discovery has experienced a remarkable transformation with the advent of artificial intelligence (AI) and machine learning (ML) technologies. However, as these AI and ML models become more complex, there is a growing need for transparency and interpretability. Explainable Artificial Intelligence (XAI) is a novel approach that addresses this issue and provides a more interpretable understanding of the predictions made by machine learning models. In recent years, there has been increasing interest in applying XAI techniques to drug discovery. This review article provides a comprehensive overview of the current state of the art in XAI for drug discovery, including various XAI methods, their applications in drug discovery, and the challenges and limitations of XAI techniques in this setting. The applications covered include target identification, compound design, and toxicity prediction. Furthermore, the article suggests potential future research directions for the application of XAI in drug discovery. The aim of this review article is to provide a comprehensive understanding of the current state of XAI in drug discovery and its potential to transform the field.

A Hybrid Deep Spatio-Temporal Attention-Based Model for Parkinson's Disease Diagnosis Using Resting State EEG Signals

Aug 14, 2023

Abstract: Parkinson's disease (PD), a severe and progressive neurological illness, affects millions of individuals worldwide. For effective treatment and management of PD, an accurate and early diagnosis is crucial. This study presents a deep learning-based model for the diagnosis of PD using resting state electroencephalogram (EEG) signals. The objective of the study is to develop an automated model that can extract complex hidden nonlinear features from EEG and demonstrate its generalizability on unseen data. The model is a hybrid consisting of a convolutional neural network (CNN), a bidirectional gated recurrent unit (Bi-GRU), and an attention mechanism. The proposed method is evaluated on three public datasets (the UC San Diego dataset, PRED-CT, and the University of Iowa (UI) dataset), with one dataset used for training and the other two for evaluation. The results show that the proposed model can accurately diagnose PD with high performance on both the training and hold-out datasets. The model also performs well even when some part of the input information is missing. The results of this work have significant implications for patient treatment and for ongoing investigations into the early detection of Parkinson's disease. The suggested model holds promise as a non-invasive and reliable technique for early detection of PD using resting state EEG.

A Brief Review of Explainable Artificial Intelligence in Healthcare

Apr 04, 2023

Abstract: XAI refers to the techniques and methods for building AI applications that help end users interpret the outputs and predictions of AI models. The use of black-box AI applications in high-stakes decision-making situations, such as the medical domain, has increased the demand for transparency and explainability, since wrong predictions may have severe consequences. Model explainability and interpretability are vital for the successful deployment of AI models in healthcare practice. The underlying reasoning of AI applications needs to be transparent to clinicians in order to gain their trust. This paper presents a systematic review of XAI aspects and challenges in the healthcare domain. The primary goals of this study are to review various XAI methods, their challenges, and related machine learning models in healthcare. The methods are discussed under six categories: feature-oriented methods, global methods, concept models, surrogate models, local pixel-based methods, and human-centric methods. Most importantly, the paper explores the role of XAI in healthcare problems to clarify its necessity in safety-critical applications. The paper intends to establish a comprehensive understanding of XAI-related applications in the healthcare field by reviewing the related experimental results. To facilitate future research that fills existing gaps, the importance of XAI models from different viewpoints and their limitations are investigated.

BERT-Deep CNN: State-of-the-Art for Sentiment Analysis of COVID-19 Tweets

Nov 04, 2022

Abstract: The free flow of information has been accelerated by the rapid development of social media technology. There has been a significant social and psychological impact on the population due to the outbreak of Coronavirus disease (COVID-19). The COVID-19 pandemic is one of the current events being discussed on social media platforms. In order to safeguard societies from this pandemic, studying people's emotions on social media is crucial. Because of their particular characteristics, sentiment analysis of texts like tweets remains challenging. Sentiment analysis is a powerful text analysis tool. It automatically detects and analyzes opinions and emotions from unstructured data. A sentiment analysis tool examines texts from a wide range of sources, including emails, surveys, reviews, social media posts, and web articles, and extracts meaning from them. To evaluate sentiments, natural language processing (NLP) and machine learning techniques are used, which assign weights to entities, topics, themes, and categories in sentences or phrases. Machine learning tools learn how to detect sentiment without human intervention by examining examples of emotions in text. In a pandemic situation, analyzing social media texts to uncover sentiment trends can be very helpful in gaining a better understanding of society's needs and predicting future trends. We intend to study society's perception of the COVID-19 pandemic through social media using state-of-the-art BERT and Deep CNN models. The superiority of BERT models over other deep models in sentiment analysis is evident and can be concluded from the comparison of the various research studies mentioned in this article.

UncertaintyFuseNet: Robust Uncertainty-aware Hierarchical Feature Fusion with Ensemble Monte Carlo Dropout for COVID-19 Detection

May 22, 2021

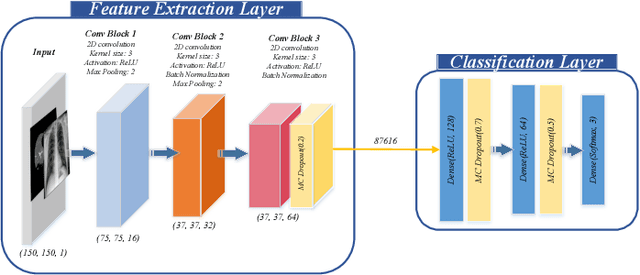

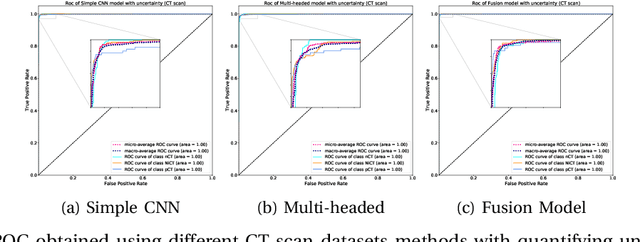

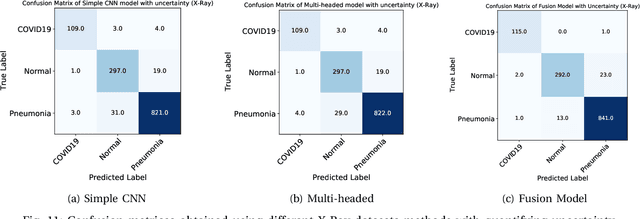

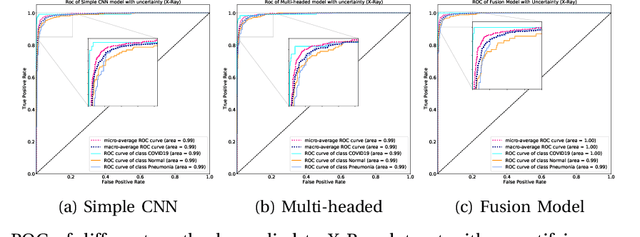

Abstract: COVID-19 (coronavirus disease 2019) has infected more than 151 million people and caused approximately 3.17 million deaths around the world up to the present. The rapid spread of COVID-19 continues to threaten human life and health. Therefore, the development of computer-aided detection (CAD) systems based on machine and deep learning methods that can accurately differentiate COVID-19 from other diseases using chest computed tomography (CT) and X-ray datasets is essential and of immediate priority. Unlike most previous studies, which used either CT or X-ray images, we employed both data types with sufficient samples in our implementation. Moreover, due to the extreme sensitivity of this pervasive virus, model uncertainty should be considered, yet most previous studies have overlooked it. Therefore, we propose a novel, powerful fusion model named UncertaintyFuseNet that includes an uncertainty module: Ensemble Monte Carlo (EMC) dropout. The obtained results demonstrate the effectiveness of our proposed fusion for COVID-19 detection using CT scan and X-ray datasets. Also, our proposed UncertaintyFuseNet model is significantly robust to noise and performs well on previously unseen data. The source code and models of this study are available at: https://github.com/moloud1987/UncertaintyFuseNet-for-COVID-19-Classification.
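As a rough illustration of the Monte Carlo dropout idea mentioned in the abstract above, the sketch below aggregates class probabilities from several stochastic forward passes (dropout left active at inference) into a mean prediction and a predictive entropy. It is not taken from the paper or its repository: the function name `mc_dropout_summary`, the use of predictive entropy as the uncertainty score, and the hand-written probability lists standing in for model outputs are all assumptions made for illustration.

```python
import math

def mc_dropout_summary(passes):
    """Summarize T stochastic forward passes for one input.

    `passes` is a list of T probability vectors, each a list of K class
    probabilities (hypothetical model outputs with dropout kept active).
    Returns (mean_probabilities, predictive_entropy_in_nats).
    """
    n_passes = len(passes)
    n_classes = len(passes[0])
    # Average the class probabilities over all stochastic passes.
    mean = [sum(p[c] for p in passes) / n_passes for c in range(n_classes)]
    # Entropy of the averaged distribution: higher means less certain.
    entropy = -sum(m * math.log(m) for m in mean if m > 0)
    return mean, entropy

# Passes that agree give zero entropy; passes that disagree give high entropy.
confident = mc_dropout_summary([[1.0, 0.0], [1.0, 0.0]])
uncertain = mc_dropout_summary([[0.9, 0.1], [0.1, 0.9]])
```

In practice the probability vectors would come from repeated forward passes of a trained network, and a threshold on the entropy could be used to flag cases for human review.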

CNN AE: Convolution Neural Network combined with Autoencoder approach to detect survival chance of COVID 19 patients

Apr 18, 2021

Abstract: In this paper, we propose a novel method named CNN-AE to predict the survival chance of COVID-19 patients using a CNN trained on clinical information. To further increase the prediction accuracy, we use the CNN in combination with an autoencoder. Our method is one of the first that aims to predict the survival chance of already infected patients. We rely on clinical data to carry out the prediction. The motivation is that the resources required to prepare CT images are expensive and limited compared to the resources required to collect clinical data such as blood pressure, liver disease, etc. We evaluate our method on a publicly available clinical dataset of deceased and recovered patients that we have collected. A careful analysis of the dataset properties is also presented, consisting of important feature extraction and correlation computation between features. Since most COVID-19 patients recover, the number of deceased samples in our dataset is low, leading to data imbalance. To remedy this issue, a data augmentation procedure based on autoencoders is proposed. To demonstrate the generality of our augmentation method, we train random forest and Naïve Bayes classifiers on our dataset with and without augmentation and compare their performance. We also evaluate our method on another dataset for further generality verification. Experimental results reveal the superiority of the CNN-AE method compared to the standard CNN as well as other methods such as random forest and Naïve Bayes. The average COVID-19 detection accuracy of CNN-AE is 96.05%, which is higher than the CNN average accuracy of 92.49%. To show that clinical data can be used as a reliable dataset for COVID-19 survival chance prediction, CNN-AE is compared with a standard CNN trained on CT images.

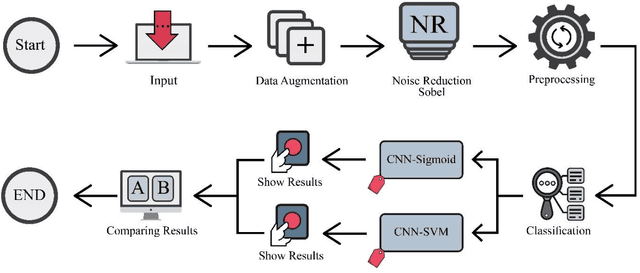

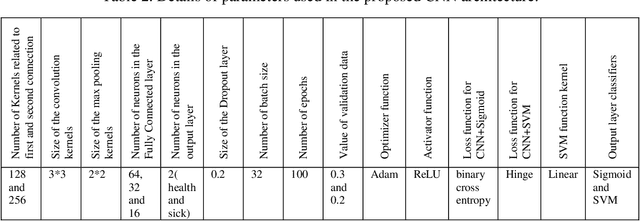

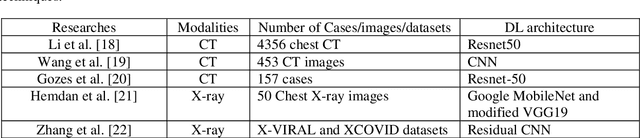

Fusion of convolution neural network, support vector machine and Sobel filter for accurate detection of COVID-19 patients using X-ray images

Feb 13, 2021

Abstract: The coronavirus (COVID-19) is currently the most common contagious disease and is prevalent all over the world. The main challenge of this disease is primary diagnosis, which is needed to prevent secondary infections and spread from one person to another. Therefore, it is essential to use an automatic diagnosis system along with clinical procedures for the rapid diagnosis of COVID-19 to prevent its spread. Artificial intelligence techniques using computed tomography (CT) images of the lungs and chest radiography have the potential to achieve high diagnostic performance for COVID-19. In this study, a fusion of a convolutional neural network (CNN), a support vector machine (SVM), and a Sobel filter is proposed to detect COVID-19 using X-ray images. A new X-ray image dataset was collected and subjected to high-pass filtering using a Sobel filter to obtain the edges of the images. These images are then fed to a CNN deep learning model followed by an SVM classifier with a ten-fold cross-validation strategy. This method is designed so that it can learn from limited data. Our results show that the proposed CNN-SVM with Sobel filtering (CNN-SVM+Sobel) achieved the highest classification accuracy of 99.02% in the detection of COVID-19. This shows that using a Sobel filter can improve the performance of the CNN. Unlike most other studies, this method does not use a pre-trained network. We have also validated our developed model using six public databases and obtained the highest performance. Hence, our developed model is ready for clinical application.
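The Sobel preprocessing step described in the abstract above can be sketched as follows. This is a minimal, dependency-free illustration of the standard 3x3 Sobel operator, not the authors' implementation: the function name `sobel_edges`, the list-of-rows image representation, and the choice to leave border pixels at zero are assumptions. The CNN and SVM stages are not shown.

```python
import math

def sobel_edges(image):
    """Return the Sobel gradient magnitude of a 2-D grayscale image.

    `image` is a list of equal-length rows of numbers. Border pixels are
    left at 0.0 because the 3x3 kernels do not fit there.
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient kernel
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += kx[dy][dx] * pixel
                    gy += ky[dy][dx] * pixel
            out[y][x] = math.hypot(gx, gy)   # edge strength at (y, x)
    return out

# A 5x5 image with a vertical step edge between columns 1 and 2.
step = [[0, 0, 1, 1, 1] for _ in range(5)]
edges = sobel_edges(step)
```

The response is large next to the intensity step and zero in flat regions, which is the edge map that would then be passed on to a classifier.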

Uncertainty-Aware Semi-supervised Method using Large Unlabelled and Limited Labeled COVID-19 Data

Feb 12, 2021

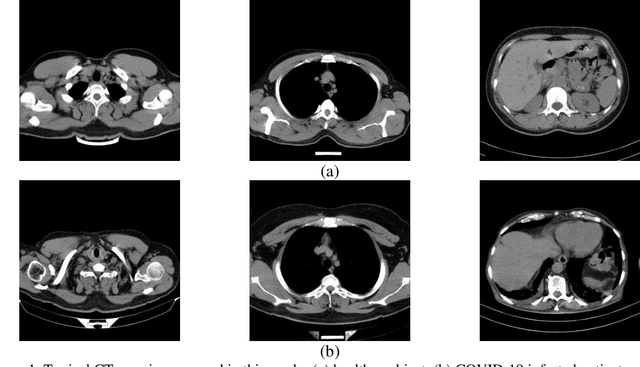

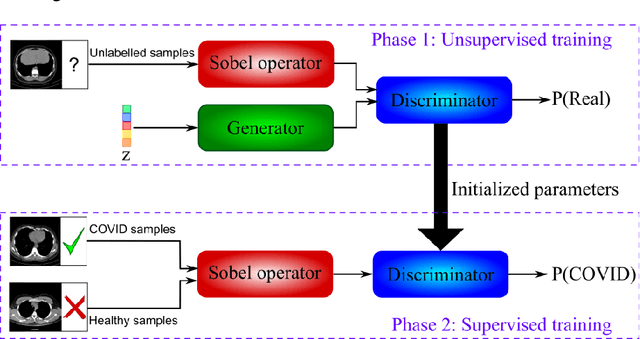

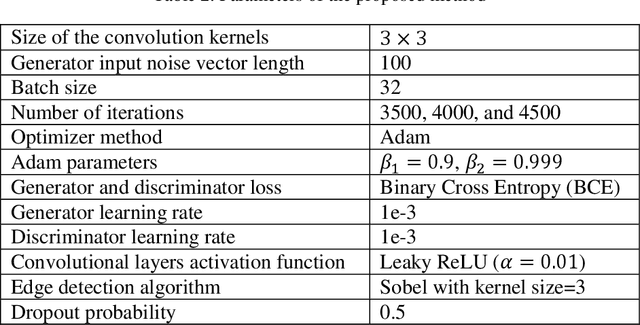

Abstract: The new coronavirus has caused more than 1 million deaths and continues to spread rapidly. This virus targets the lungs, causing respiratory distress that can be mild or severe. X-ray or computed tomography (CT) images of the lungs can reveal whether or not the patient is infected with COVID-19. Many researchers are trying to improve COVID-19 detection using artificial intelligence. In this paper, relying on Generative Adversarial Networks (GAN), we propose Semi-supervised Classification using Limited Labelled Data (SCLLD) for automated COVID-19 detection. Our motivation is to develop a learning method that can cope with scenarios in which preparing labelled data is time-consuming or expensive. We further improved the detection accuracy of the proposed method by applying Sobel edge detection. The GAN discriminator output is a probability value, which is used for classification in this work. The proposed system is trained using 10,000 CT scans collected from Omid hospital. We also validate our system using a public dataset. The proposed method is compared with other state-of-the-art supervised methods such as Gaussian processes. To the best of our knowledge, this is the first time a semi-supervised COVID-19 detection method has been presented. Our method is capable of learning from a mixture of limited labelled and unlabelled data, where supervised learners fail due to the lack of a sufficient amount of labelled data. Our semi-supervised training method significantly outperforms the supervised training of a Convolutional Neural Network (CNN) when labelled training data is scarce. Our method achieved an accuracy of 99.60%, sensitivity of 99.39%, and specificity of 99.80%, whereas the CNN (trained in a supervised manner) achieved an accuracy of 69.87%, sensitivity of 94%, and specificity of 46.40%.

A Review of Uncertainty Quantification in Deep Learning: Techniques, Applications and Challenges

Nov 17, 2020

Abstract: Uncertainty quantification (UQ) plays a pivotal role in the reduction of uncertainties during both optimization and decision-making processes. It can be applied to solve a variety of real-world problems in science and engineering. Bayesian approximation and ensemble learning techniques are the two most widely used UQ methods in the literature. In this regard, researchers have proposed different UQ methods and examined their performance in a variety of applications such as computer vision (e.g., self-driving cars and object detection), image processing (e.g., image restoration), medical image analysis (e.g., medical image classification and segmentation), natural language processing (e.g., text classification, social media texts and recidivism risk-scoring), bioinformatics, etc. This study reviews recent advances in UQ methods used in deep learning. Moreover, we also investigate the application of these methods in reinforcement learning (RL). Then, we outline a few important applications of UQ methods. Finally, we briefly highlight the fundamental research challenges faced by UQ methods and discuss future research directions in this field.

Handling of uncertainty in medical data using machine learning and probability theory techniques: A review of 30 years

Aug 23, 2020

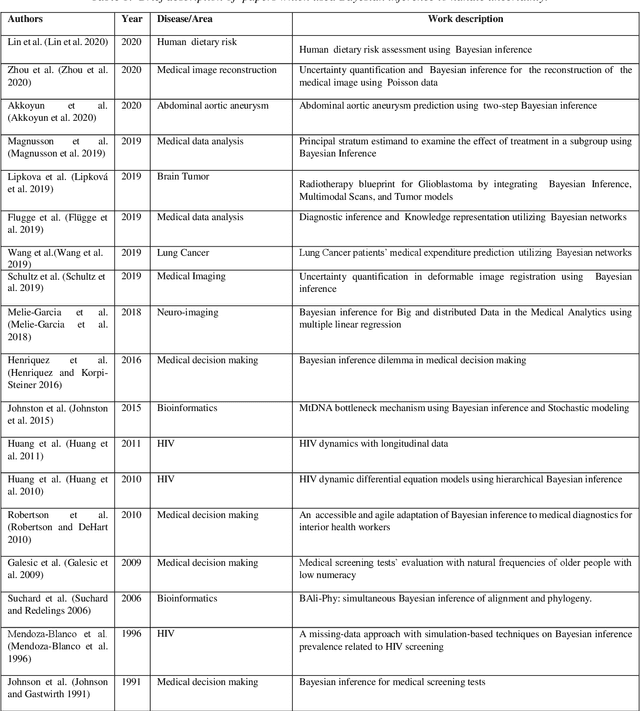

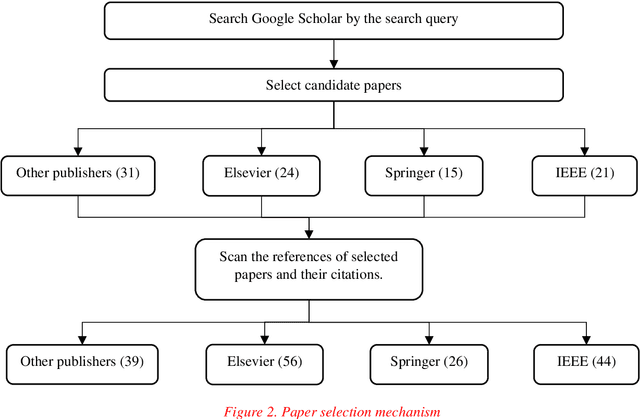

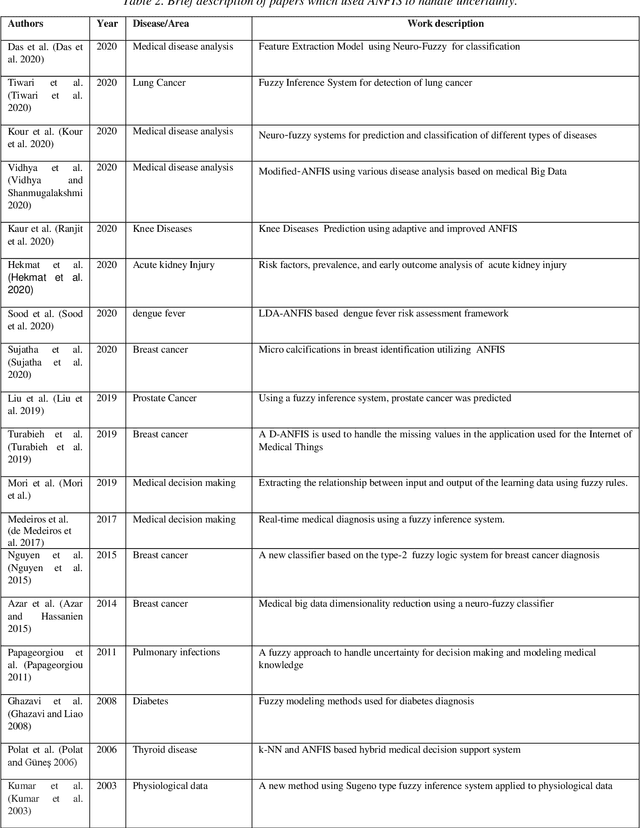

Abstract: Understanding data and reaching valid conclusions are of paramount importance in the present era of big data. Machine learning and probability theory methods have widespread application for this purpose in different fields. One critically important yet less explored aspect is how data and model uncertainties are captured and analyzed. Proper quantification of uncertainty provides valuable information for optimal decision making. This paper reviews related studies conducted in the last 30 years (from 1991 to 2020) on handling uncertainties in medical data using probability theory and machine learning techniques. Medical data is more prone to uncertainty due to the presence of noise in the data. So, it is very important to have clean medical data without any noise to obtain an accurate diagnosis. The sources of noise in medical data need to be known to address this issue. The diagnosis of disease and the treatment plan are prescribed based on the medical data obtained by the physician. Hence, uncertainty is growing in healthcare, and there is limited knowledge to address these problems. We have little knowledge about the optimal treatment methods, as there are many sources of uncertainty in medical science. Our findings indicate that there are few challenges to be addressed in handling the uncertainty in raw medical data and new models. In this work, we have summarized various methods employed to overcome this problem. Nowadays, the application of novel deep learning techniques to deal with such uncertainties has significantly increased.
