Soorena Salari

DINOMotion: advanced robust tissue motion tracking with DINOv2 in 2D-Cine MRI-guided radiotherapy

Aug 14, 2025

Abstract: Accurate tissue motion tracking is critical to ensure treatment outcome and safety in 2D-Cine MRI-guided radiotherapy. This is typically achieved by registration of sequential images, but existing methods often face challenges with large misalignments and lack of interpretability. In this paper, we introduce DINOMotion, a novel deep learning framework based on DINOv2 with Low-Rank Adaptation (LoRA) layers for robust, efficient, and interpretable motion tracking. DINOMotion automatically detects corresponding landmarks to derive optimal image registration, enhancing interpretability by providing explicit visual correspondences between sequential images. The integration of LoRA layers reduces trainable parameters, improving training efficiency, while DINOv2's powerful feature representations offer robustness against large misalignments. Unlike iterative optimization-based methods, DINOMotion directly computes image registration at test time. Our experiments on volunteer and patient datasets demonstrate its effectiveness in estimating both linear and nonlinear transformations, achieving Dice scores of 92.07% for the kidney, 90.90% for the liver, and 95.23% for the lung, with corresponding Hausdorff distances of 5.47 mm, 8.31 mm, and 6.72 mm, respectively. DINOMotion processes each scan in approximately 30 ms and consistently outperforms state-of-the-art methods, particularly in handling large misalignments. These results highlight its potential as a robust and interpretable solution for real-time motion tracking in 2D-Cine MRI-guided radiotherapy.
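
The Dice score and Hausdorff distance quoted above are standard overlap and boundary metrics. The following is a minimal sketch of how they are commonly computed, not the authors' evaluation code. It assumes masks are given as sets of `(row, col)` pixel coordinates and that an isotropic pixel spacing converts pixels to millimetres; the function names, the toy masks, and the 1.5 mm spacing are all hypothetical. It also uses every mask pixel rather than extracted contours, which is a simplification.

```python
import math


def dice_score(mask_a, mask_b):
    """Dice overlap between two binary masks given as sets of (row, col) pixels."""
    if not mask_a and not mask_b:
        return 1.0  # convention chosen here: two empty masks overlap perfectly
    return 2.0 * len(mask_a & mask_b) / (len(mask_a) + len(mask_b))


def hausdorff_distance(points_a, points_b, spacing=1.0):
    """Symmetric Hausdorff distance between two non-empty point sets.

    `spacing` is an assumed isotropic pixel size used to scale the result.
    """
    def directed(src, dst):
        # Largest distance from any point in src to its nearest point in dst.
        return max(min(math.dist(p, q) for q in dst) for p in src)

    return spacing * max(directed(points_a, points_b), directed(points_b, points_a))


# Toy example: two 4x4 squares, the second shifted by one column.
reference = {(r, c) for r in range(4) for c in range(0, 4)}
predicted = {(r, c) for r in range(4) for c in range(1, 5)}

dice = dice_score(reference, predicted)
hd = hausdorff_distance(reference, predicted, spacing=1.5)
print(f"Dice = {dice:.2f}, Hausdorff = {hd:.2f} mm")
```

The brute-force nearest-point search is quadratic in the number of pixels, which is fine for a toy mask but would normally be replaced by a distance transform on real images.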

Reliability of deep learning models for anatomical landmark detection: The role of inter-rater variability

Nov 26, 2024

Abstract: Automated detection of anatomical landmarks plays a crucial role in many diagnostic and surgical applications. Advances in deep learning (DL) methods have resulted in significant performance enhancement in tasks related to anatomical landmark detection. While current research focuses on accurately localizing these landmarks in medical scans, the importance of inter-rater annotation variability in building DL models is often overlooked. Understanding how inter-rater variability impacts the performance and reliability of the resulting DL algorithms, which are crucial for clinical deployment, can inform the improvement of training data construction and boost DL models' outcomes. In this paper, we conducted a thorough study of different annotation-fusion strategies to preserve inter-rater variability in DL models for anatomical landmark detection, aiming to boost the performance and reliability of the resulting algorithms. Additionally, we explored the characteristics and reliability of four metrics, including a novel Weighted Coordinate Variance metric, to quantify landmark detection uncertainty/inter-rater variability. Our research highlights the crucial connection between inter-rater variability, DL model performance, and uncertainty, revealing how different approaches for multi-rater landmark annotation fusion can influence these factors.

CAMLD: Contrast-Agnostic Medical Landmark Detection with Consistency-Based Regularization

Nov 26, 2024

Abstract: Anatomical landmark detection in medical images is essential for various clinical and research applications, including disease diagnosis and surgical planning. However, manual landmark annotation is time-consuming and requires significant expertise. Existing deep learning (DL) methods often require large amounts of well-annotated data, which are costly to acquire. In this paper, we introduce CAMLD, a novel self-supervised DL framework for anatomical landmark detection in unlabeled scans with varying contrasts by using only a single reference example. To achieve this, we employ an inter-subject landmark consistency loss with an image registration loss while introducing a 3D convolution-based contrast augmentation strategy to promote model generalization to new contrasts. Additionally, we utilize an adaptive mixed loss function to schedule the contributions of different sub-tasks for optimal outcomes. We demonstrate the proposed method with the intricate task of MRI-based 3D brain landmark detection. With comprehensive experiments on four diverse clinical and public datasets, including both T1w and T2w MRI scans at different MRI field strengths, we demonstrate that CAMLD outperforms the state-of-the-art methods in terms of mean radial errors (MREs) and success detection rates (SDRs). Our framework provides a robust and accurate solution for anatomical landmark detection, reducing the need for extensively annotated datasets and generalizing well across different imaging contrasts. Our code will be publicly available at: https://github.com/HealthX-Lab/CAMLD.
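
For readers unfamiliar with the two metrics named above, here is a minimal sketch of how mean radial error (MRE) and success detection rate (SDR) are usually defined. It is not taken from the CAMLD code base; the function names, the toy coordinates, and the 4 mm threshold are hypothetical, and landmark coordinates are assumed to already be in millimetres.

```python
import math


def mean_radial_error(predicted, reference):
    """Average Euclidean distance between paired predicted and reference landmarks."""
    errors = [math.dist(p, r) for p, r in zip(predicted, reference)]
    return sum(errors) / len(errors)


def success_detection_rate(predicted, reference, threshold_mm):
    """Percentage of landmarks whose radial error is within `threshold_mm`."""
    hits = sum(
        1 for p, r in zip(predicted, reference) if math.dist(p, r) <= threshold_mm
    )
    return 100.0 * hits / len(reference)


# Toy 3D landmarks in millimetres (illustrative values only).
reference = [(10.0, 20.0, 30.0), (40.0, 50.0, 60.0), (5.0, 5.0, 5.0)]
predicted = [(10.0, 20.0, 33.0), (40.0, 54.0, 63.0), (5.0, 5.0, 5.0)]

mre = mean_radial_error(predicted, reference)
sdr = success_detection_rate(predicted, reference, threshold_mm=4.0)
print(f"MRE = {mre:.2f} mm, SDR@4mm = {sdr:.1f}%")
```

The per-landmark errors in this toy case are 3 mm, 5 mm, and 0 mm, so two of the three landmarks fall within the 4 mm threshold.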

Dense Error Map Estimation for MRI-Ultrasound Registration in Brain Tumor Surgery Using Swin UNETR

Aug 21, 2023

Abstract: Early surgical treatment of brain tumors is crucial in reducing patient mortality rates. However, brain tissue deformation (called brain shift) occurs during the surgery, rendering pre-operative images invalid. As a cost-effective and portable tool, intra-operative ultrasound (iUS) can track brain shift, and accurate MRI-iUS registration techniques can update pre-surgical plans and facilitate the interpretation of iUS. This can boost surgical safety and outcomes by maximizing tumor removal while avoiding eloquent regions. However, manual assessment of MRI-iUS registration results in real time is difficult and prone to errors due to the 3D nature of the data. Automatic algorithms that can quantify the quality of inter-modal medical image registration outcomes can be highly beneficial. Therefore, we propose a novel deep-learning (DL) based framework with the Swin UNETR to automatically assess 3D-patch-wise dense error maps for MRI-iUS registration in iUS-guided brain tumor resection and show its performance with real clinical data for the first time.

Weakly supervised segmentation of intracranial aneurysms using a 3D focal modulation UNet

Aug 06, 2023

Abstract: Accurate identification and quantification of unruptured intracranial aneurysms (UIAs) are essential for the risk assessment and treatment decisions of this cerebrovascular disorder. Current assessment based on 2D manual measures of aneurysms on 3D magnetic resonance angiography (MRA) is sub-optimal and time-consuming. Automatic 3D measures can significantly benefit the clinical workflow and treatment outcomes. However, one major issue in medical image segmentation is the need for large well-annotated data, which can be expensive to obtain. Techniques that mitigate the requirement, such as weakly supervised learning with coarse labels, are highly desirable. In this paper, we leverage coarse labels of UIAs from time-of-flight MRAs to obtain refined UIA segmentation using a novel 3D focal modulation UNet, called FocalSegNet, and conditional random field (CRF) postprocessing, with a Dice score of 0.68 and 95% Hausdorff distance of 0.95 mm. We evaluated the performance of the proposed algorithms against the state-of-the-art 3D UNet and Swin-UNETR, and demonstrated the superiority of the proposed FocalSegNet and the benefit of focal modulation for the task.

FocalErrorNet: Uncertainty-aware focal modulation network for inter-modal registration error estimation in ultrasound-guided neurosurgery

Jul 26, 2023

Abstract: In brain tumor resection, accurate removal of cancerous tissues while preserving eloquent regions is crucial to the safety and outcomes of the treatment. However, intra-operative tissue deformation (called brain shift) can move the surgical target and render the pre-surgical plan invalid. Intra-operative ultrasound (iUS) has been adopted to provide real-time images to track brain shift, and inter-modal (i.e., MRI-iUS) registration is often required to update the pre-surgical plan. Quality control for the registration results during surgery is important to avoid adverse outcomes, but manual verification faces great challenges due to difficult 3D visualization and the low contrast of iUS. Automatic algorithms are urgently needed to address this issue, but the problem has rarely been attempted. Therefore, we propose a novel deep learning technique based on 3D focal modulation in conjunction with uncertainty estimation to accurately assess MRI-iUS registration errors for brain tumor surgery. Developed and validated with the public RESECT clinical database, the resulting algorithm can achieve an estimation error of 0.59 ± 0.57 mm.

Towards multi-modal anatomical landmark detection for ultrasound-guided brain tumor resection with contrastive learning

Jul 26, 2023

Abstract: Homologous anatomical landmarks between medical scans are instrumental in quantitative assessment of image registration quality in various clinical applications, such as MRI-ultrasound registration for tissue shift correction in ultrasound-guided brain tumor resection. While manually identified landmark pairs between MRI and ultrasound (US) have greatly facilitated the validation of different registration algorithms for the task, the procedure requires significant expertise, labor, and time, and can be prone to inter- and intra-rater inconsistency. So far, many traditional and machine learning approaches have been presented for anatomical landmark detection, but they primarily focus on mono-modal applications. Unfortunately, despite the clinical needs, inter-modal/contrast landmark detection has very rarely been attempted. Therefore, we propose a novel contrastive learning framework to detect corresponding landmarks between MRI and intra-operative US scans in neurosurgery. Specifically, two convolutional neural networks were trained jointly to encode image features in MRI and US scans to help match the US image patch that contains the corresponding landmarks in the MRI. We developed and validated the technique using the public RESECT database. With a mean landmark detection accuracy of 5.88 ± 4.79 mm against 18.78 ± 4.77 mm with SIFT features, the proposed method offers promising results for MRI-US landmark detection in neurosurgical applications for the first time.

Weakly Supervised Intracranial Hemorrhage Segmentation using Head-Wise Gradient-Infused Self-Attention Maps from a Swin Transformer in Categorical Learning

Apr 11, 2023

Abstract: Intracranial hemorrhage (ICH) is a life-threatening medical emergency caused by various factors. Timely and precise diagnosis of ICH is crucial for administering effective treatment and improving patient survival rates. While deep learning techniques have emerged as the leading approach for medical image analysis and processing, the most commonly employed supervised learning often requires large, high-quality annotated datasets that can be costly to obtain, particularly for pixel/voxel-wise image segmentation. To address this challenge and facilitate ICH treatment decisions, we propose a novel weakly supervised ICH segmentation method that leverages a hierarchical combination of head-wise gradient-infused self-attention maps obtained from a Swin transformer. The transformer is trained using an ICH classification task with categorical labels. To build and validate the proposed technique, we used two publicly available clinical CT datasets, namely RSNA 2019 Brain CT hemorrhage and PhysioNet. Additionally, we conducted an exploratory study comparing two learning strategies, binary classification and full ICH subtyping, to assess their impact on self-attention and our weakly supervised ICH segmentation framework. The proposed algorithm was compared against the popular U-Net with full supervision, as well as a similar weakly supervised approach using Grad-CAM for ICH segmentation. With a mean Dice score of 0.47, our technique achieved ICH segmentation performance similar to that of the U-Net and outperformed the Grad-CAM based approach, demonstrating the excellent potential of the proposed framework in challenging medical image segmentation tasks.

UncertaintyFuseNet: Robust Uncertainty-aware Hierarchical Feature Fusion with Ensemble Monte Carlo Dropout for COVID-19 Detection

May 22, 2021

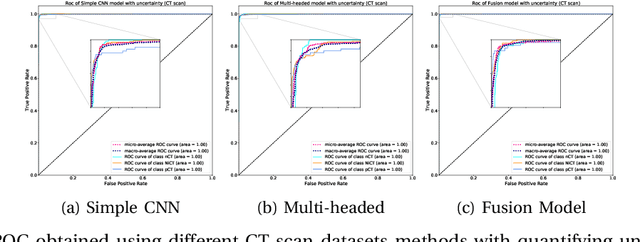

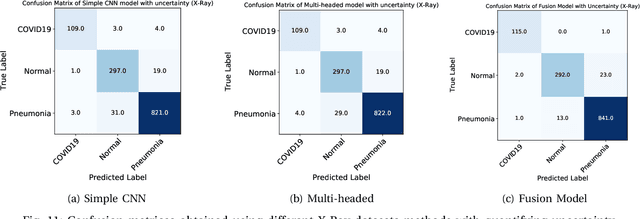

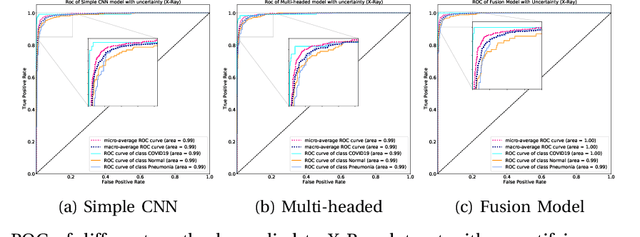

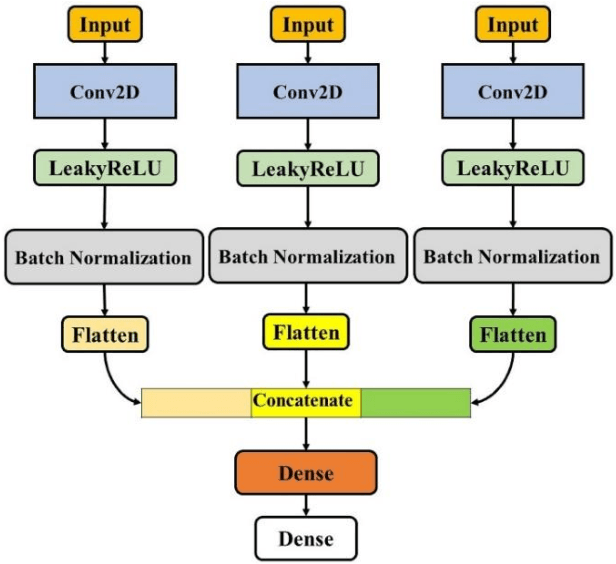

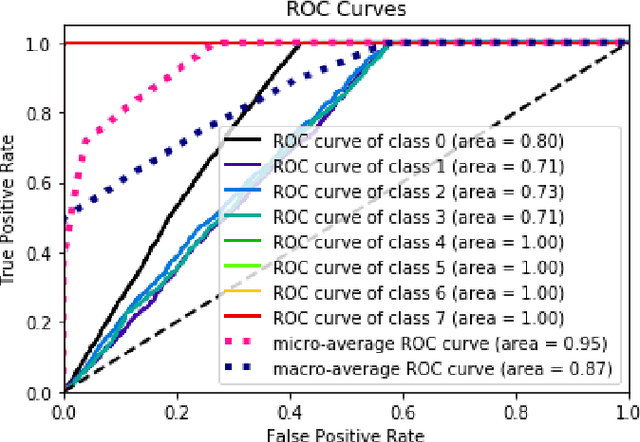

Abstract: COVID-19 (coronavirus disease 2019) has infected more than 151 million people and caused approximately 3.17 million deaths around the world up to the present. The rapid spread of COVID-19 continues to threaten human life and health. Therefore, the development of computer-aided detection (CAD) systems based on machine and deep learning methods that can accurately differentiate COVID-19 from other diseases using chest computed tomography (CT) and X-ray datasets is essential and of immediate priority. Unlike most previous studies, which used either CT or X-ray images, we employed both data types with sufficient samples in implementation. In addition, due to the extreme sensitivity of this pervasive virus, model uncertainty should be considered, yet most previous studies have overlooked it. Therefore, we propose a novel, powerful fusion model named UncertaintyFuseNet that includes an uncertainty module: Ensemble Monte Carlo (EMC) dropout. The obtained results demonstrate the effectiveness of our proposed fusion for COVID-19 detection using CT scan and X-ray datasets. Also, our proposed UncertaintyFuseNet model is significantly robust to noise and performs well with previously unseen data. The source codes and models of this study are available at: https://github.com/moloud1987/UncertaintyFuseNet-for-COVID-19-Classification.
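
The abstract does not describe how the EMC dropout module turns repeated predictions into an uncertainty estimate. As a rough illustration of the general Monte Carlo dropout idea only, and not of the UncertaintyFuseNet implementation, the sketch below averages class probabilities from several stochastic forward passes and reports the entropy of the averaged distribution. The function name and the hard-coded probabilities are hypothetical stand-ins for real model outputs.

```python
import math


def mc_dropout_summary(pass_probabilities):
    """Summarise class probabilities from several stochastic forward passes.

    Returns the mean probability per class, the entropy of that mean
    distribution (in nats), and the index of the most probable class.
    """
    n_passes = len(pass_probabilities)
    n_classes = len(pass_probabilities[0])
    mean_probs = [
        sum(p[k] for p in pass_probabilities) / n_passes for k in range(n_classes)
    ]
    entropy = -sum(p * math.log(p) for p in mean_probs if p > 0.0)
    predicted_class = max(range(n_classes), key=lambda k: mean_probs[k])
    return mean_probs, entropy, predicted_class


# Hypothetical softmax outputs from three passes with dropout left active.
passes = [
    [0.7, 0.2, 0.1],
    [0.6, 0.3, 0.1],
    [0.8, 0.1, 0.1],
]

mean_probs, entropy, predicted_class = mc_dropout_summary(passes)
print(mean_probs, round(entropy, 3), predicted_class)
```

Higher entropy indicates that the averaged prediction is spread across classes, which is one common way to flag inputs for manual review.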

A Generalizable Model for Fault Detection in Offshore Wind Turbines Based on Deep Learning

Nov 25, 2020

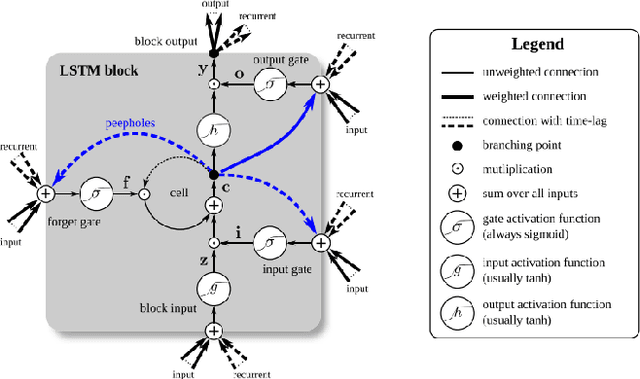

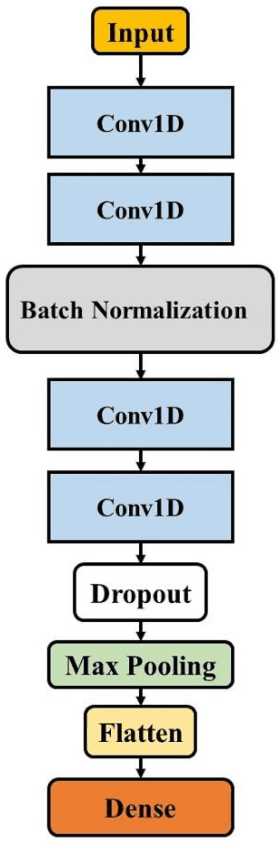

Abstract: This paper presents a new deep learning-based model for fault detection in offshore wind turbines. To design a generalizable model for fault detection, we use 5 sensors and a sliding window to exploit the inherent temporal information contained in the raw time-series data obtained from sensors. The proposed model uses the nonlinear relationships among multiple sensor variables and the temporal dependency of each sensor on the others, which considerably increases fault detection performance. A 10-fold cross-validation is used to verify the generalization of the model and evaluate the classification metrics. To evaluate the performance of the model, simulated data from a benchmark floating offshore wind turbine (FOWT) with supervisory control and data acquisition (SCADA) are used. The results show that the proposed model accurately detects and classifies more than 99% of the faults. Moreover, it is generalizable and can be used to detect faults for different types of systems.
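
The sliding-window step mentioned above can be sketched as follows. This is a generic illustration under stated assumptions, not the paper's preprocessing code: the window length, stride, function name, and synthetic readings are hypothetical, and only the count of 5 sensors comes from the abstract.

```python
def sliding_windows(series, window, stride=1):
    """Split a multivariate time series into overlapping fixed-length windows.

    `series` is a list of time steps, each a list of per-sensor readings.
    Trailing steps that do not fill a complete window are dropped.
    """
    return [
        series[start:start + window]
        for start in range(0, len(series) - window + 1, stride)
    ]


# Synthetic data: 6 time steps from 5 sensors (values are illustrative only).
signal = [[t + 0.1 * s for s in range(5)] for t in range(6)]

windows = sliding_windows(signal, window=4, stride=1)
print(len(windows), len(windows[0]), len(windows[0][0]))
```

Each window is a small time-by-sensor array, so a classifier fed with these windows sees both the cross-sensor relationships and the short-term temporal context at once.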
