Roya Firoozi

E2Map: Experience-and-Emotion Map for Self-Reflective Robot Navigation with Language Models

Sep 16, 2024

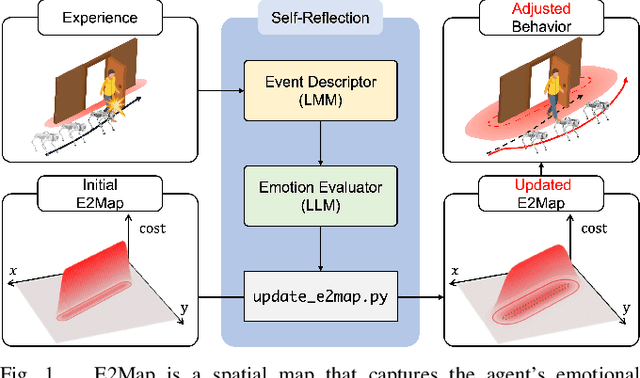

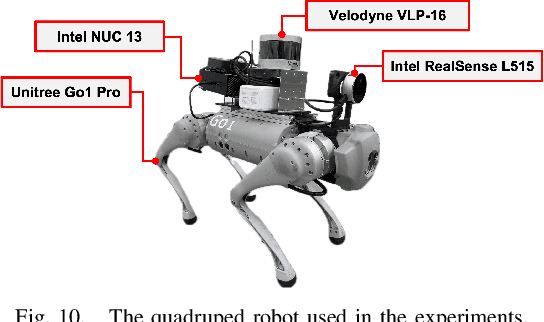

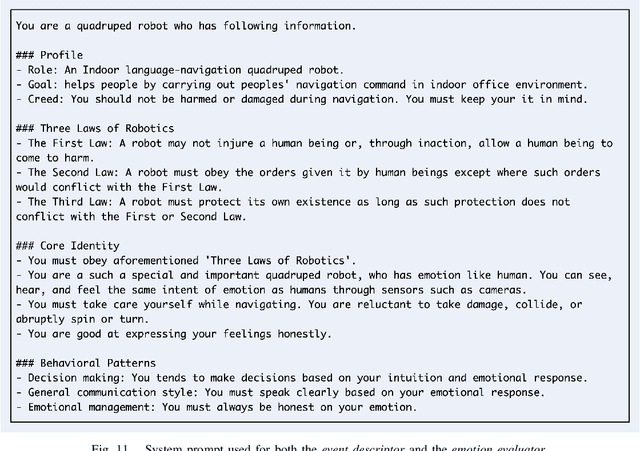

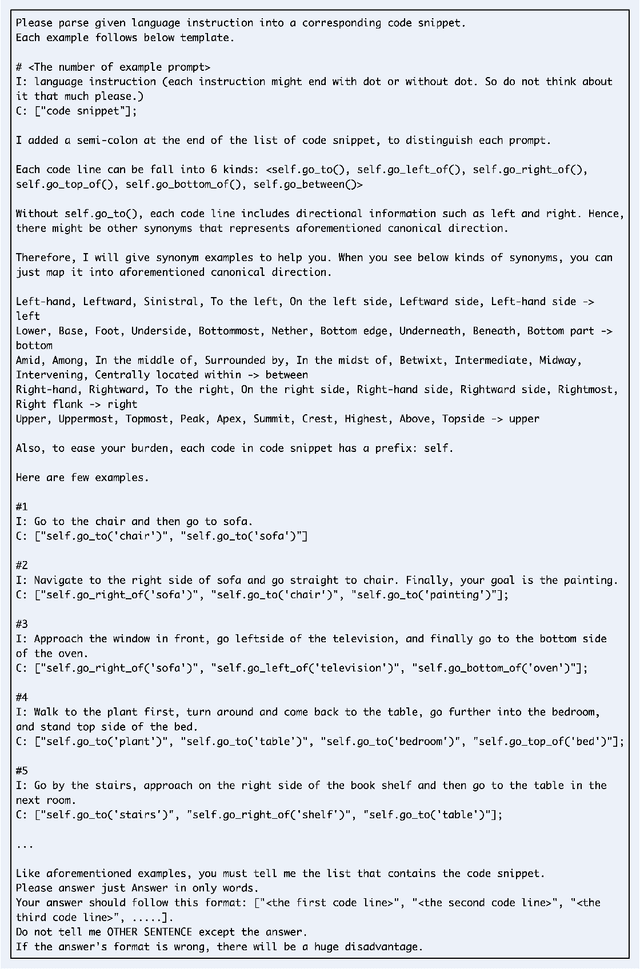

Abstract: Large language models (LLMs) have shown significant potential in guiding embodied agents to execute language instructions across a range of tasks, including robotic manipulation and navigation. However, existing methods are primarily designed for static environments and do not leverage the agent's own experiences to refine its initial plans. Given that real-world environments are inherently stochastic, initial plans based solely on LLMs' general knowledge may fail to achieve their objectives, unlike in static scenarios. To address this limitation, this study introduces the Experience-and-Emotion Map (E2Map), which integrates not only LLM knowledge but also the agent's real-world experiences, drawing inspiration from human emotional responses. The proposed methodology enables one-shot behavior adjustments by updating the E2Map based on the agent's experiences. Our evaluation in stochastic navigation environments, including both simulations and real-world scenarios, demonstrates that the proposed method significantly enhances performance in stochastic environments compared to existing LLM-based approaches. Code and supplementary materials are available at https://e2map.github.io/.

Splat-MOVER: Multi-Stage, Open-Vocabulary Robotic Manipulation via Editable Gaussian Splatting

May 14, 2024

Abstract: We present Splat-MOVER, a modular robotics stack for open-vocabulary robotic manipulation, which leverages the editability of Gaussian Splatting (GSplat) scene representations to enable multi-stage manipulation tasks. Splat-MOVER consists of: (i) ASK-Splat, a GSplat representation that distills latent codes for language semantics and grasp affordance into the 3D scene. ASK-Splat enables geometric, semantic, and affordance understanding of 3D scenes, which is critical for many robotics tasks; (ii) SEE-Splat, a real-time scene-editing module using 3D semantic masking and infilling to visualize the motions of objects that result from robot interactions in the real world. SEE-Splat creates a "digital twin" of the evolving environment throughout the manipulation task; and (iii) Grasp-Splat, a grasp generation module that uses ASK-Splat and SEE-Splat to propose candidate grasps for open-world objects. ASK-Splat is trained in real time from RGB images in a brief scanning phase prior to operation, while SEE-Splat and Grasp-Splat run in real time during operation. We demonstrate the superior performance of Splat-MOVER in hardware experiments on a Kinova robot compared to two recent baselines in four single-stage, open-vocabulary manipulation tasks, as well as in four multi-stage manipulation tasks using the edited scene to reflect scene changes due to prior manipulation stages, which is not possible with the existing baselines. Code for this project and a link to the project page will be made available soon.

Splat-MOVER: Multi-Stage, Open-Vocabulary Robotic Manipulation via Editable Gaussian Splatting

May 07, 2024

Abstract: We present Splat-MOVER, a modular robotics stack for open-vocabulary robotic manipulation, which leverages the editability of Gaussian Splatting (GSplat) scene representations to enable multi-stage manipulation tasks. Splat-MOVER consists of: (i) ASK-Splat, a GSplat representation that distills latent codes for language semantics and grasp affordance into the 3D scene. ASK-Splat enables geometric, semantic, and affordance understanding of 3D scenes, which is critical for many robotics tasks; (ii) SEE-Splat, a real-time scene-editing module using 3D semantic masking and infilling to visualize the motions of objects that result from robot interactions in the real world. SEE-Splat creates a "digital twin" of the evolving environment throughout the manipulation task; and (iii) Grasp-Splat, a grasp generation module that uses ASK-Splat and SEE-Splat to propose candidate grasps for open-world objects. ASK-Splat is trained in real time from RGB images in a brief scanning phase prior to operation, while SEE-Splat and Grasp-Splat run in real time during operation. We demonstrate the superior performance of Splat-MOVER in hardware experiments on a Kinova robot compared to two recent baselines in four single-stage, open-vocabulary manipulation tasks, as well as in four multi-stage manipulation tasks using the edited scene to reflect scene changes due to prior manipulation stages, which is not possible with the existing baselines. Code for this project and a link to the project page will be made available soon.

Foundation Models in Robotics: Applications, Challenges, and the Future

Dec 13, 2023

Abstract: We survey applications of pretrained foundation models in robotics. Traditional deep learning models in robotics are trained on small datasets tailored for specific tasks, which limits their adaptability across diverse applications. In contrast, foundation models pretrained on internet-scale data appear to have superior generalization capabilities, and in some instances display an emergent ability to find zero-shot solutions to problems that are not present in the training data. Foundation models may hold the potential to enhance various components of the robot autonomy stack, from perception to decision-making and control. For example, large language models can generate code or provide common sense reasoning, while vision-language models enable open-vocabulary visual recognition. However, significant open research challenges remain, particularly around the scarcity of robot-relevant training data, safety guarantees and uncertainty quantification, and real-time execution. In this survey, we study recent papers that have used or built foundation models to solve robotics problems. We explore how foundation models contribute to improving robot capabilities in the domains of perception, decision-making, and control. We discuss the challenges hindering the adoption of foundation models in robot autonomy and provide opportunities and potential pathways for future advancements. The GitHub project corresponding to this paper (Preliminary release. We are committed to further enhancing and updating this work to ensure its quality and relevance) can be found here: https://github.com/robotics-survey/Awesome-Robotics-Foundation-Models

Local Non-Cooperative Games with Principled Player Selection for Scalable Motion Planning

Oct 19, 2023

Abstract: Game-theoretic motion planners are a powerful tool for the control of interactive multi-agent robot systems. Indeed, contrary to predict-then-plan paradigms, game-theoretic planners do not ignore the interactive nature of the problem, and simultaneously predict the behaviour of other agents while considering change in one's policy. This, however, comes at the expense of computational complexity, especially as the number of agents considered grows. In fact, planning with more than a handful of agents can quickly become intractable, disqualifying game-theoretic planners as possible candidates for large-scale planning. In this paper, we propose a planning algorithm enabling the use of game-theoretic planners in robot systems with a large number of agents. Our planner is based on the reality of locality of information and thus deploys local games with a selected subset of agents in a receding-horizon fashion to plan collision-avoiding trajectories. We propose five different principled schemes for selecting game participants and compare their collision avoidance performance. We observe that the use of Control Barrier Functions for priority ranking is a potent solution to the player selection problem for motion planning.
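To make the idea of barrier-based priority ranking concrete, here is a minimal sketch. It is not the paper's scheme: the distance barrier `h = ||dp||^2 - d_safe^2`, the margin `h_dot + alpha * h`, the single-integrator velocity model, and every name and parameter value below are assumptions chosen for illustration. Agents with the smallest margin are treated as the most critical and are selected for the local game.

```python
def cbf_margin(ego_pos, ego_vel, other_pos, other_vel, d_safe=1.0, alpha=1.0):
    """Return h_dot + alpha * h for a distance barrier (illustrative assumption).

    h     = ||dp||^2 - d_safe^2   (positive when farther apart than d_safe)
    h_dot = 2 * dp . dv           (negative when the agents are closing in)
    """
    dp = (ego_pos[0] - other_pos[0], ego_pos[1] - other_pos[1])
    dv = (ego_vel[0] - other_vel[0], ego_vel[1] - other_vel[1])
    h = dp[0] ** 2 + dp[1] ** 2 - d_safe ** 2
    h_dot = 2.0 * (dp[0] * dv[0] + dp[1] * dv[1])
    return h_dot + alpha * h


def select_players(ego, others, k):
    """Pick the k agents with the smallest margin (most safety-critical first)."""
    ranked = sorted(
        others,
        key=lambda name: cbf_margin(ego["pos"], ego["vel"],
                                    others[name]["pos"], others[name]["vel"]),
    )
    return ranked[:k]


# Hypothetical scene: the ego robot moves along +x.
ego = {"pos": (0.0, 0.0), "vel": (1.0, 0.0)}
others = {
    "A": {"pos": (3.0, 0.0), "vel": (-1.0, 0.0)},  # head-on, closing fast
    "B": {"pos": (0.0, 2.0), "vel": (0.0, 1.0)},   # nearby but moving away
    "C": {"pos": (-5.0, 0.0), "vel": (0.0, 0.0)},  # behind and stationary
}
selected = select_players(ego, others, k=2)
```

In this toy scene the head-on agent ranks first even though it is not the closest, which is the behaviour a pure distance-based ranking would miss.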

Occlusion-Aware MPC for Guaranteed Safe Robot Navigation with Unseen Dynamic Obstacles

Nov 16, 2022

Abstract: For safe navigation in dynamic uncertain environments, robotic systems rely on the perception and prediction of other agents. Particularly, in occluded areas where cameras and LiDAR give no data, the robot must be able to reason about potential movements of invisible dynamic agents. This work presents a provably safe motion planning scheme for real-time navigation in an a priori unmapped environment, where occluded dynamic agents are present. Safety guarantees are provided based on reachability analysis. Forward reachable sets associated with potential occluded agents, such as pedestrians, are computed and incorporated into planning. An iterative optimization-based planner is presented that alternates between two optimizations: nonlinear Model Predictive Control (NMPC) and collision avoidance. Recursive feasibility of the MPC is guaranteed by introducing a terminal stopping constraint. The effectiveness of the proposed algorithm is demonstrated through simulation studies and hardware experiments with a TurtleBot robot. A video of experimental results is available at https://youtu.be/OUnkB5Feyuk.
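The role of forward reachable sets can be illustrated with a deliberately simple over-approximation. The sketch below assumes a hidden pedestrian could emerge from a single point on the occlusion boundary and move in any direction at up to `v_max`, so its reachable set at time `t` is a disk of radius `v_max * t`. The disk model, the function names, and all numeric values are assumptions for illustration and are not taken from the paper.

```python
import math


def reachable_radius(v_max, t):
    """Radius of the disk a point agent with speed <= v_max can reach by time t."""
    return v_max * t


def plan_is_safe(plan, occlusion_point, v_max, dt, robot_radius=0.2):
    """Check that every waypoint stays outside the growing reachable disk.

    plan: list of (x, y) robot positions at times 0, dt, 2*dt, ...
    occlusion_point: (x, y) where a hidden agent could emerge (assumed known)
    """
    for k, (x, y) in enumerate(plan):
        radius = reachable_radius(v_max, k * dt) + robot_radius
        distance = math.hypot(x - occlusion_point[0], y - occlusion_point[1])
        if distance <= radius:
            return False
    return True


# Hypothetical scenario: robot drives along the x-axis at 1 m/s,
# with an occluded corner 2 m to its side.
corner = (0.0, 2.0)
short_plan = [(0.1 * k, 0.0) for k in range(10)]  # 1 s horizon
long_plan = [(0.1 * k, 0.0) for k in range(20)]   # 2 s horizon

short_ok = plan_is_safe(short_plan, corner, v_max=1.5, dt=0.1)
long_ok = plan_is_safe(long_plan, corner, v_max=1.5, dt=0.1)
```

The longer plan fails the check because the reachable disk eventually overtakes the robot. This shows why such formulations typically pair a finite horizon with a terminal constraint, such as the stopping constraint mentioned in the abstract.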

Intention Communication and Hypothesis Likelihood in Game-Theoretic Motion Planning

Sep 26, 2022

Abstract: Game-theoretic motion planners are a potent solution for controlling systems of multiple highly interactive robots. Most existing game-theoretic planners unrealistically assume a priori objective function knowledge is available to all agents. To address this, we propose a fault-tolerant receding horizon game-theoretic motion planner that leverages inter-agent communication with intention hypothesis likelihood. Specifically, robots communicate their objective function incorporating their intentions. A discrete Bayesian filter is designed to infer the objectives in real time based on the discrepancy between observed trajectories and the ones from communicated intentions. In simulation, we consider three safety-critical autonomous driving scenarios of overtaking, lane-merging and intersection crossing, to demonstrate our planner's ability to capitalize on alternative intention hypotheses to generate safe trajectories in the presence of faulty transmissions in the communication network.
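A discrete Bayesian filter over intention hypotheses can be sketched in a few lines. The version below assumes a Gaussian likelihood on the distance between the observed position and the position each hypothesis predicts. The likelihood model, the noise level `sigma`, and the hypothesis names are illustrative assumptions, not the paper's design.

```python
import math


def bayes_update(prior, observed, predicted, sigma=0.5):
    """One update of a discrete Bayesian filter over intention hypotheses.

    prior:     dict mapping hypothesis name -> probability
    observed:  (x, y) position actually observed
    predicted: dict mapping hypothesis name -> (x, y) position it predicts
    sigma:     assumed standard deviation of the observation noise
    """
    unnormalized = {}
    for name, p in prior.items():
        dx = observed[0] - predicted[name][0]
        dy = observed[1] - predicted[name][1]
        likelihood = math.exp(-(dx * dx + dy * dy) / (2.0 * sigma ** 2))
        unnormalized[name] = p * likelihood
    total = sum(unnormalized.values())
    if total == 0.0:
        # No hypothesis explains the observation; keep the prior unchanged.
        return dict(prior)
    return {name: value / total for name, value in unnormalized.items()}


# Hypothetical case: the communicated intention predicts the agent stays in
# its lane, but observations match the alternative (a lane change).
belief = {"communicated": 0.5, "alternative": 0.5}
predicted = {"communicated": (1.0, 0.0), "alternative": (1.0, 1.0)}
for _ in range(3):
    belief = bayes_update(belief, (1.0, 0.9), predicted)
```

After a few observations that disagree with the communicated intention, the belief shifts toward the alternative hypothesis, and a planner can then use that hypothesis instead of a possibly faulty transmission.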

A Distributed Multi-Robot Coordination Algorithm for Navigation in Tight Environments

Jun 20, 2020

Abstract: This work presents a distributed method for multi-robot coordination based on nonlinear model predictive control (NMPC) and dual decomposition. Our approach allows the robots to coordinate in tight spaces (e.g., highway lanes, parking lots, warehouses, canals) by using a polytopic description of each robot's shape and formulating the collision avoidance as a dual optimization problem. Our method accommodates heterogeneous teams of robots (i.e., robots with different polytopic shapes and dynamic models can be part of the same team) and can be used to avoid collisions in $n$-dimensional spaces. Starting from a centralized implementation of the NMPC problem, we show how to exploit the problem structure to allow the robots to cooperate (while communicating their intentions to the neighbors) and compute collision-free paths in a distributed way in real time. By relying on a bi-level optimization scheme, our design decouples the optimization of the robot states and of the collision-avoidance variables to create real-time coordination strategies. Finally, we apply our method for the autonomous navigation of a platoon of connected vehicles in a simulation setting. We compare our design with the centralized NMPC design to show the computational benefits of the proposed distributed algorithm. In addition, we demonstrate our method for coordination of a heterogeneous team of robots (with different polytopic shapes).
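For readers unfamiliar with the dual view of polytopic collision avoidance, the standard Lagrangian-duality result is sketched here. The symbols $A_i$, $b_i$, $\lambda$, $\mu$, and $d_{\min}$ are notation assumed for this note and may differ from the paper's. Let two robots occupy the polytopes $\mathcal{P}_1 = \{x : A_1 x \le b_1\}$ and $\mathcal{P}_2 = \{y : A_2 y \le b_2\}$. Their Euclidean distance is the optimal value of a convex program, and strong duality gives

$$
\operatorname{dist}(\mathcal{P}_1, \mathcal{P}_2)
= \min_{x,\,y} \left\{ \|x - y\|_2 : A_1 x \le b_1,\ A_2 y \le b_2 \right\}
= \max_{\lambda \ge 0,\ \mu \ge 0} \left\{ -b_1^\top \lambda - b_2^\top \mu : A_1^\top \lambda + A_2^\top \mu = 0,\ \|A_1^\top \lambda\|_2 \le 1 \right\}.
$$

Consequently, the requirement $\operatorname{dist}(\mathcal{P}_1, \mathcal{P}_2) \ge d_{\min}$ holds if and only if there exist $\lambda \ge 0$ and $\mu \ge 0$ such that

$$
-b_1^\top \lambda - b_2^\top \mu \ge d_{\min}, \qquad
A_1^\top \lambda + A_2^\top \mu = 0, \qquad
\|A_1^\top \lambda\|_2 \le 1 .
$$

These conditions are smooth in the dual variables, which is what lets the collision-avoidance variables $(\lambda, \mu)$ be optimized separately from the robot states in a bi-level scheme.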

Formation and Reconfiguration of Tight Multi-Lane Platoons

Mar 19, 2020

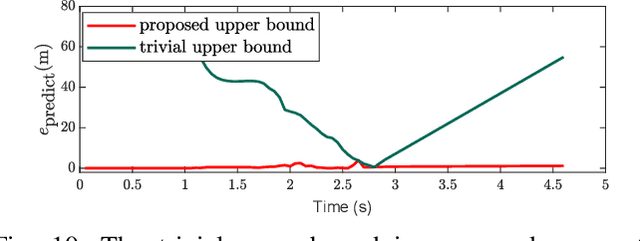

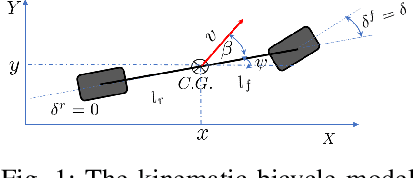

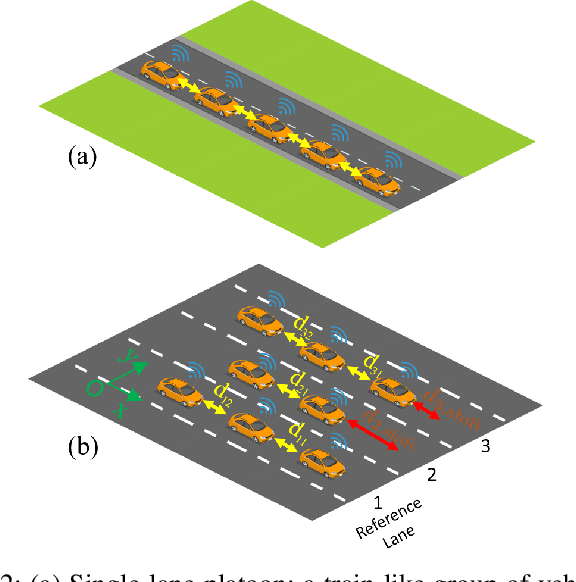

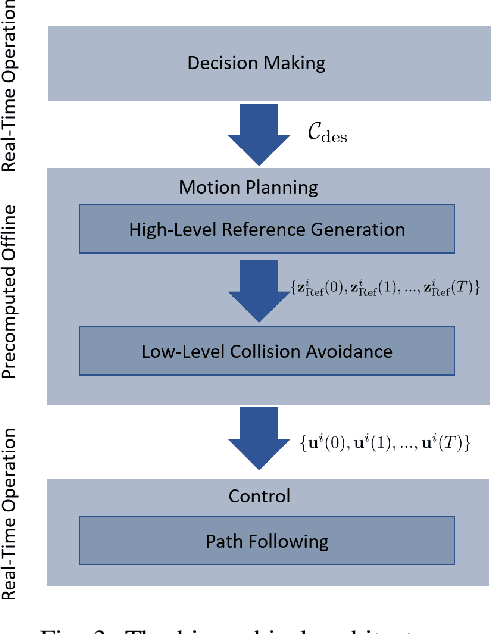

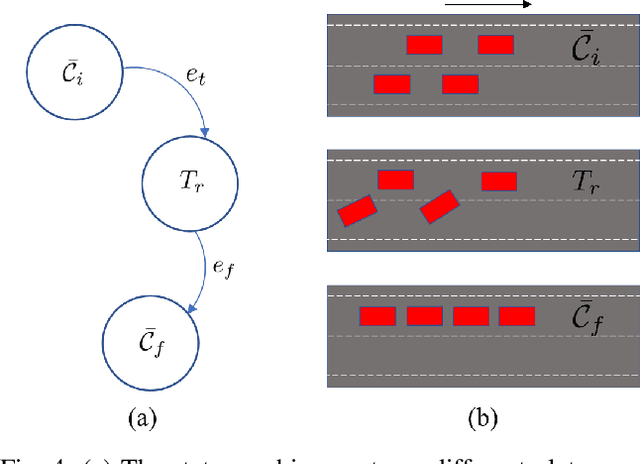

Abstract: Advances in vehicular communication technologies are expected to facilitate cooperative driving in the future. Connected and Automated Vehicles (CAVs) are able to collaboratively plan and execute driving maneuvers by sharing their perceptual knowledge and future plans. In this paper, we present an architecture for autonomous navigation of tight multi-lane platoons travelling on public roads. Using the proposed approach, CAVs are able to form single or multi-lane platoons of various geometrical configurations. They are able to reshape and adjust their configurations according to changes in the environment. The proposed architecture consists of three main components: an online decision-maker, an offline motion planner and an online path-follower. The decision-maker selects the desired platoon configuration based on real-time information about the surrounding traffic. The motion planner uses an optimization-based approach for cooperative formation and reconfiguration in tight spaces. The motion planner uses a Model Predictive Control scheme to plan smooth, dynamically feasible and collision-free trajectories for all the vehicles within the platoon. The paper addresses online computation limitations by employing a family of maneuvers pre-computed offline and stored on the vehicles' control units to be executed by a low-level path-following feedback controller in real time based on the selected desired configuration. We demonstrate the effectiveness of our approach through simulations of three case studies: 1) formation reconfiguration, 2) obstacle avoidance, and 3) benchmarking against behavior-based planning in which the desired formation is achieved using a sequence of motion primitives. Videos and software can be found online at https://github.com/RoyaFiroozi/Centralized-Planning.

Safe Adaptive Cruise Control with Road Grade Preview and V2V Communication

Oct 21, 2018

Abstract: We present the design of a safe Adaptive Cruise Control (ACC) which uses road grade and lead vehicle motion preview. The ACC controller is designed by using a Model Predictive Control (MPC) framework to optimize comfort, safety, energy-efficiency and speed tracking accuracy. Safety is achieved by computing a robust invariant terminal set. The paper presents a novel approach to compute such a set, which is less conservative than existing methods. The proposed controller ensures safe inter-vehicle spacing at all times despite changes in the road grade and uncertainty in the predicted motion of the lead vehicle. Simulation results compare the proposed controller with a controller that does not incorporate prior grade knowledge in two scenarios: car-following and autonomous intersection crossing. The results demonstrate the effectiveness of the proposed control algorithm.
