Radoslav Ivanov

Analyzing Neural Network Information Flow Using Differential Geometry

Jan 22, 2026

Abstract:This paper provides a fresh view of the neural network (NN) data flow problem, i.e., identifying the NN connections that are most important for the performance of the full model, through the lens of graph theory. Understanding the NN data flow provides a tool for symbolic NN analysis, e.g., robustness analysis or model repair. Unlike the standard approach to NN data flow analysis, which is based on information theory, we employ the notion of graph curvature, specifically Ollivier-Ricci curvature (ORC). The ORC has been successfully used to identify important graph edges in various domains such as road traffic analysis, biological and social networks. In particular, edges with negative ORC are considered bottlenecks and as such are critical to the graph's overall connectivity, whereas positive-ORC edges are not essential. We use this intuition for the case of NNs as well: we 1) construct a graph induced by the NN structure and introduce the notion of neural curvature (NC) based on the ORC; 2) calculate curvatures based on activation patterns for a set of input examples; 3) aim to demonstrate that NC can indeed be used to rank edges according to their importance for the overall NN functionality. We evaluate our method through pruning experiments and show that removing negative-ORC edges quickly degrades the overall NN performance, whereas positive-ORC edges have little impact. The proposed method is evaluated on a variety of models trained on three image datasets, namely MNIST, CIFAR-10 and CIFAR-100. The results indicate that our method can identify a larger number of unimportant edges as compared to state-of-the-art pruning methods.
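To make the bottleneck intuition concrete, here is a minimal sketch of plain Ollivier-Ricci curvature on a small unweighted graph. It is not the paper's neural curvature: the toy graph (two triangles joined by one bridge edge), the uniform neighbor measures, and the helper names `bfs_distances` and `ollivier_ricci` are all assumptions made for illustration, and the transport cost is found by brute force, which only scales to tiny neighborhoods.

```python
from collections import deque
from fractions import Fraction
from itertools import permutations
from math import lcm


def bfs_distances(adj, source):
    """Hop distances from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def ollivier_ricci(adj, x, y):
    """ORC of edge (x, y) with uniform measures on each endpoint's neighbors.

    Assumption: unweighted graph, so d(x, y) = 1 for an edge. Each measure is
    split into equal unit masses so that the Wasserstein-1 distance becomes a
    minimum-cost assignment, solved here by trying every permutation.
    """
    units = lcm(len(adj[x]), len(adj[y]))
    src = [n for n in sorted(adj[x]) for _ in range(units // len(adj[x]))]
    dst = [n for n in sorted(adj[y]) for _ in range(units // len(adj[y]))]
    dist = {n: bfs_distances(adj, n) for n in set(src)}
    best = min(
        sum(dist[s][t] for s, t in zip(src, perm))
        for perm in permutations(dst)
    )
    w1 = Fraction(best, units)
    return 1 - w1  # divided by d(x, y) = 1


# Hypothetical toy graph: triangles {0, 1, 2} and {3, 4, 5}, bridge 2-3.
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
adj = {}
for a, b in edges:
    adj.setdefault(a, set()).add(b)
    adj.setdefault(b, set()).add(a)

bridge_curvature = ollivier_ricci(adj, 2, 3)    # -2/3: the bottleneck edge
triangle_curvature = ollivier_ricci(adj, 0, 1)  # 1/2: a well-connected edge
```

The bridge is the only route between the two triangles and gets negative curvature, while the edge inside a triangle gets positive curvature, which matches the bottleneck reading described in the abstract.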

Analyzing Neural Network Robustness Using Graph Curvature

Oct 25, 2024

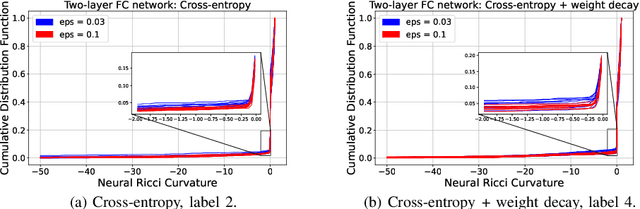

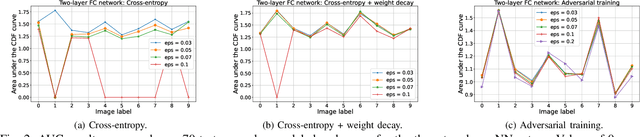

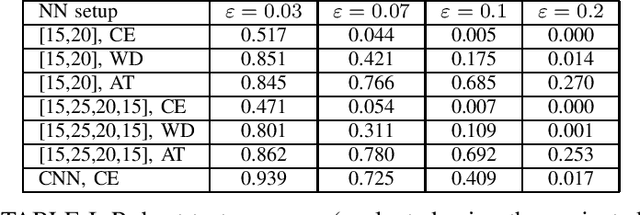

Abstract:This paper presents a new look at the neural network (NN) robustness problem, from the point of view of graph theory analysis, specifically graph curvature. Graph curvature (e.g., Ricci curvature) has been used to analyze system dynamics and identify bottlenecks in many domains, including road traffic analysis and internet routing. We define the notion of neural Ricci curvature and use it to identify bottleneck NN edges that are heavily used to "transport data" to the NN outputs. We provide an evaluation on MNIST that illustrates that such edges indeed occur more frequently for inputs where NNs are less robust. These results will serve as the basis for an alternative method of robust training, by minimizing the number of bottleneck edges.

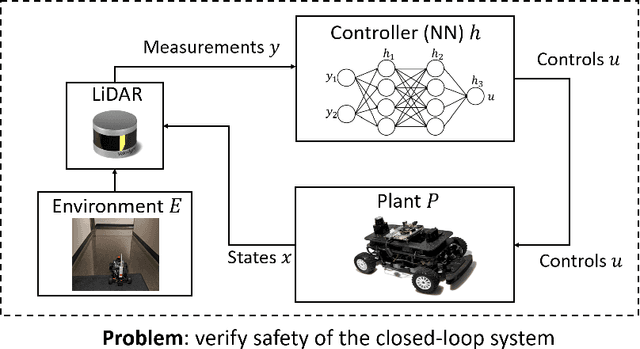

Data-Driven Modeling and Verification of Perception-Based Autonomous Systems

Dec 11, 2023

Abstract:This paper addresses the problem of data-driven modeling and verification of perception-based autonomous systems. We assume the perception model can be decomposed into a canonical model (obtained from first principles or a simulator) and a noise model that contains the measurement noise introduced by the real environment. We focus on two types of noise, benign and adversarial noise, and develop a data-driven model for each type using generative models and classifiers, respectively. We show that the trained models perform well according to a variety of evaluation metrics based on downstream tasks such as state estimation and control. Finally, we verify the safety of two systems with high-dimensional data-driven models, namely an image-based version of mountain car (a reinforcement learning benchmark) as well as the F1/10 car, which uses LiDAR measurements to navigate a racing track.

Imprecise Bayesian Neural Networks

Feb 19, 2023

Abstract:Uncertainty quantification and robustness to distribution shifts are important goals in machine learning and artificial intelligence. Although Bayesian neural networks (BNNs) allow for uncertainty in the predictions to be assessed, different sources of uncertainty are indistinguishable. We present imprecise Bayesian neural networks (IBNNs); they generalize and overcome some of the drawbacks of standard BNNs. The latter are trained using a single prior and a single likelihood distribution, whereas IBNNs are trained using credal prior and likelihood sets. IBNNs make it possible to distinguish between aleatoric and epistemic uncertainties, and to quantify them. In addition, IBNNs are robust in the sense of Bayesian sensitivity analysis, and are more robust than BNNs to distribution shift. They can also be used to compute sets of outcomes that enjoy PAC-like properties. We apply IBNNs to two case studies: first, modeling blood glucose and insulin dynamics for artificial pancreas control, and second, motion prediction in autonomous driving scenarios. We show that IBNNs perform better than an ensemble-of-BNNs benchmark.

Confidence Composition for Monitors of Verification Assumptions

Nov 03, 2021

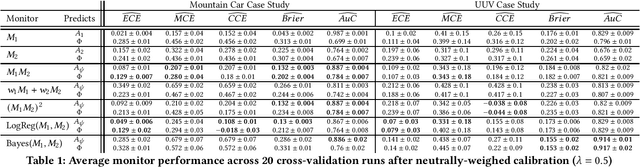

Abstract:Closed-loop verification of cyber-physical systems with neural network controllers offers strong safety guarantees under certain assumptions. It is, however, difficult to determine whether these guarantees apply at run time because verification assumptions may be violated. To predict safety violations in a verified system, we propose a three-step framework for monitoring the confidence in verification assumptions. First, we represent the sufficient condition for verified safety with a propositional logical formula over assumptions. Second, we build calibrated confidence monitors that evaluate the probability that each assumption holds. Third, we obtain the confidence in the verification guarantees by composing the assumption monitors using a composition function suitable for the logical formula. Our framework provides theoretical bounds on the calibration and conservatism of compositional monitors. In two case studies, we demonstrate that the composed monitors improve over their constituents and successfully predict safety violations.
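As a rough illustration of the third step, the sketch below composes per-assumption confidences for a conjunction or disjunction of assumptions. The abstract does not state which composition functions the paper uses, so the choices here are assumptions: a product rule that is only valid if the assumptions are independent, and the Fréchet bounds, which hold under any dependence. The function names and the example confidences are hypothetical.

```python
from math import prod


def and_independent(confs):
    """P(A1 and ... and An), assuming the assumptions are independent."""
    return prod(confs)


def or_independent(confs):
    """P(A1 or ... or An), assuming the assumptions are independent."""
    return 1 - prod(1 - c for c in confs)


def and_frechet_lower(confs):
    """Conservative lower bound on the conjunction, valid for any dependence."""
    return max(0.0, sum(confs) - (len(confs) - 1))


def or_frechet_upper(confs):
    """Upper bound on the disjunction, valid for any dependence."""
    return min(1.0, sum(confs))


# Hypothetical monitor outputs for two assumptions that must both hold.
monitor_confidences = [0.9, 0.8]
optimistic = and_independent(monitor_confidences)      # about 0.72
conservative = and_frechet_lower(monitor_confidences)  # about 0.70
```

The gap between the two numbers shows the design trade-off: the product rule is tighter but rests on independence, while the Fréchet bound never overstates the confidence in the conjunction.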

ModelGuard: Runtime Validation of Lipschitz-continuous Models

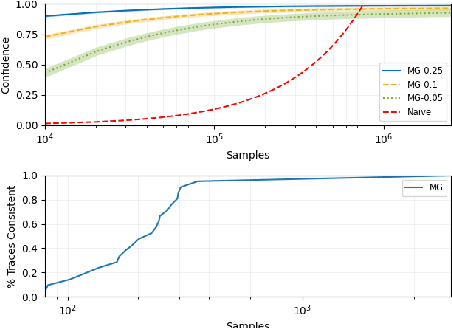

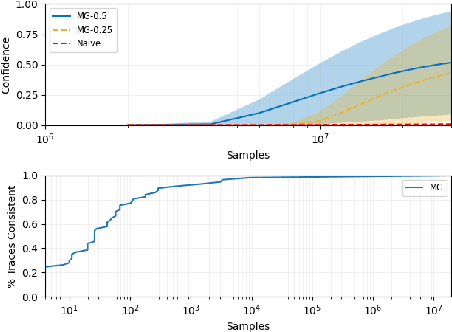

Apr 30, 2021

Abstract:This paper presents ModelGuard, a sampling-based approach to runtime model validation for Lipschitz-continuous models. Although techniques exist for the validation of many classes of models, the majority of these methods cannot be applied to the whole class of Lipschitz-continuous models, which includes neural network models. Additionally, existing techniques generally consider only white-box models. By taking a sampling-based approach, we can address black-box models, represented only by an input-output relationship and a Lipschitz constant. We show that by randomly sampling from a parameter space and evaluating the model, it is possible to guarantee the correctness of traces labeled consistent and provide a confidence on the correctness of traces labeled inconsistent. We evaluate the applicability and scalability of ModelGuard in three case studies, including a physical platform.

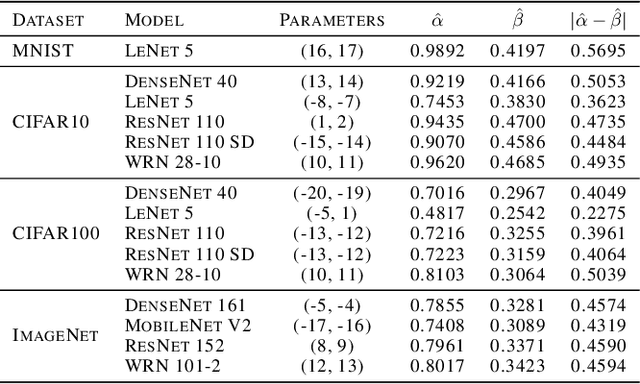

Confidence Calibration with Bounded Error Using Transformations

Feb 25, 2021

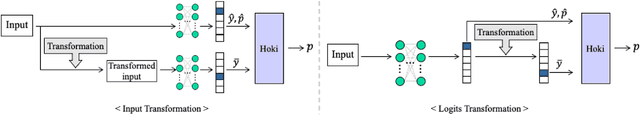

Abstract:As machine learning techniques become widely adopted in new domains, especially in safety-critical systems such as autonomous vehicles, it is crucial to provide accurate output uncertainty estimation. As a result, many approaches have been proposed to calibrate neural networks to accurately estimate the likelihood of misclassification. However, while these methods achieve low expected calibration error (ECE), few techniques provide theoretical performance guarantees on the calibration error (CE). In this paper, we introduce Hoki, a novel calibration algorithm with a theoretical bound on the CE. Hoki works by transforming the neural network logits and/or inputs and recursively performing calibration leveraging the information from the corresponding change in the output. We provide a PAC-like bound on the CE that is shown to decrease with the number of samples used for calibration, and to increase proportionally with the ECE and the number of discrete bins used to calculate the ECE. We perform experiments on multiple datasets, including ImageNet, and show that the proposed approach generally outperforms state-of-the-art calibration algorithms across multiple datasets and models, providing nearly an order of magnitude improvement in ECE on ImageNet. In addition, Hoki is a fast algorithm that is comparable to temperature scaling in terms of learning time.
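For readers unfamiliar with the metric, the following is a minimal sketch of the standard binned ECE computation mentioned in the abstract; it is not Hoki itself. The function name, the equal-width bins, and the half-open bin convention `[lo, hi)` are assumptions chosen for illustration (some implementations use `(lo, hi]` instead).

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: weighted average of |accuracy - mean confidence| per bin.

    confidences: predicted probability of the chosen class, each in [0, 1].
    correct: 1 if the prediction was right, 0 otherwise.
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Equal-width bins; a confidence of exactly 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(ok for _, ok in members) / len(members)
        ece += (len(members) / n) * abs(accuracy - mean_conf)
    return ece


# Four predictions made at 90% confidence, three of them right:
# accuracy 0.75 against confidence 0.9 gives an ECE of about 0.15.
overconfident_ece = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 1, 0])
```

The dependence on `n_bins` is visible in the code: the same predictions can yield different ECE values under different binnings, which is one reason a bound stated in terms of the number of bins is natural.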

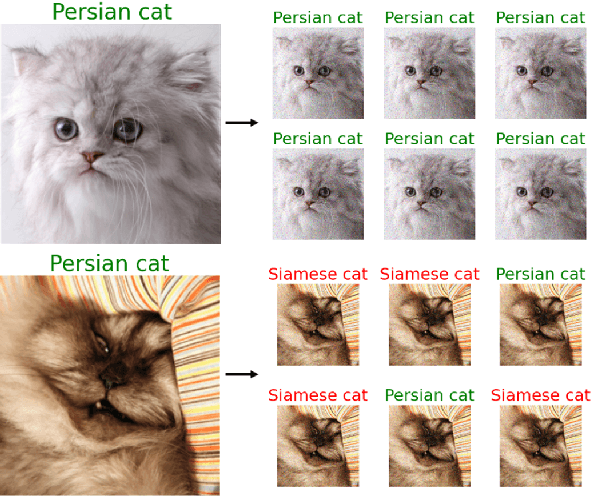

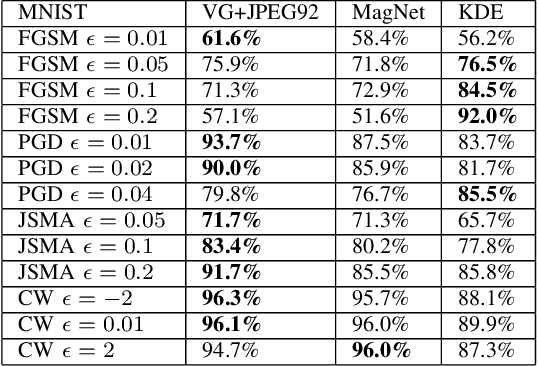

VisionGuard: Runtime Detection of Adversarial Inputs to Perception Systems

Feb 23, 2020

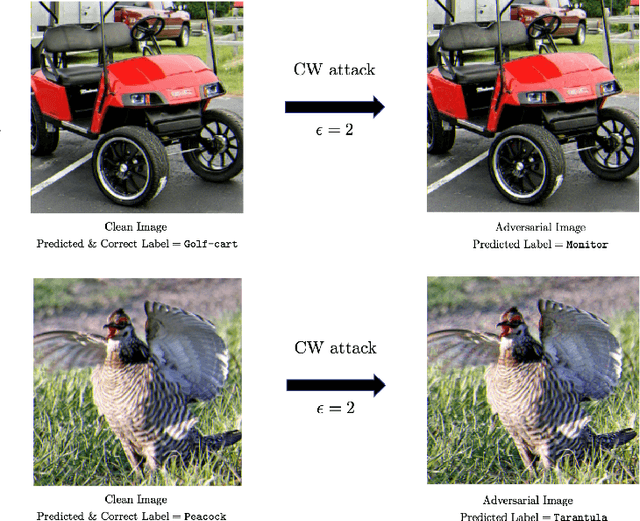

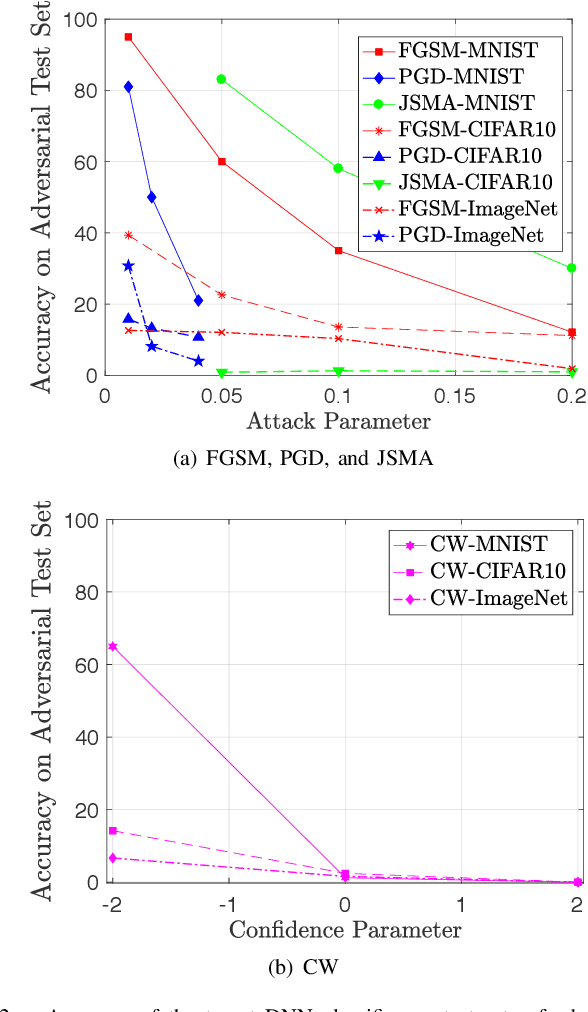

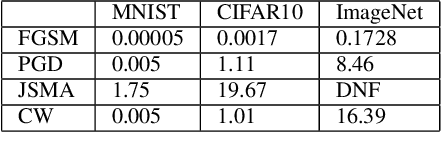

Abstract:Deep neural network (DNN) models have proven to be vulnerable to adversarial attacks. In this paper, we propose VisionGuard, a novel attack- and dataset-agnostic and computationally-light defense mechanism for adversarial inputs to DNN-based perception systems. In particular, VisionGuard relies on the observation that adversarial images are sensitive to lossy compression transformations. Specifically, to determine if an image is adversarial, VisionGuard checks if the output of the target classifier on a given input image changes significantly after feeding it a transformed version of the image under investigation. Moreover, we show that VisionGuard is computationally-light both at runtime and design-time which makes it suitable for real-time applications that may also involve large-scale image domains. To highlight this, we demonstrate the efficiency of VisionGuard on ImageNet, a task that is computationally challenging for the majority of relevant defenses. Finally, we include extensive comparative experiments on the MNIST, CIFAR10, and ImageNet datasets that show that VisionGuard outperforms existing defenses in terms of scalability and detection performance.
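The detection check described above can be sketched as follows. Everything concrete here is an assumption for illustration: pixel quantization stands in for a lossy compression such as JPEG, KL divergence stands in for whatever measure of output change the paper uses, and `toy_classifier`, `quantize`, and the threshold value are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def quantize(image, levels=2):
    """Stand-in lossy transform: snap each pixel in [0, 1] to a coarse grid."""
    return [round(p * (levels - 1)) / (levels - 1) for p in image]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) between two discrete distributions."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def looks_adversarial(classifier, image, transform, threshold):
    """Flag the input if the classifier output shifts a lot under the transform."""
    before = classifier(image)
    after = classifier(transform(image))
    return kl_divergence(before, after) > threshold


def toy_classifier(image):
    """Hypothetical two-class model that is very sensitive to mean brightness."""
    score = 40.0 * (sum(image) / len(image) - 0.5)
    return softmax([score, -score])


clean = [0.0, 1.0, 0.0, 1.0]      # already on the grid, unchanged by quantize
perturbed = [0.2, 1.0, 0.2, 1.0]  # small shifts that quantize removes

clean_flag = looks_adversarial(toy_classifier, clean, quantize, threshold=0.1)
perturbed_flag = looks_adversarial(toy_classifier, perturbed, quantize, threshold=0.1)
```

In this toy setup the clean image is left alone and the perturbed one is flagged, because the transform erases exactly the small pixel changes the classifier was reacting to. The check needs only two forward passes and no retraining, which is consistent with the low-overhead claim in the abstract.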

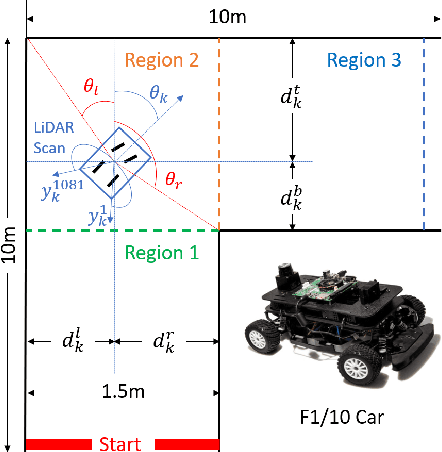

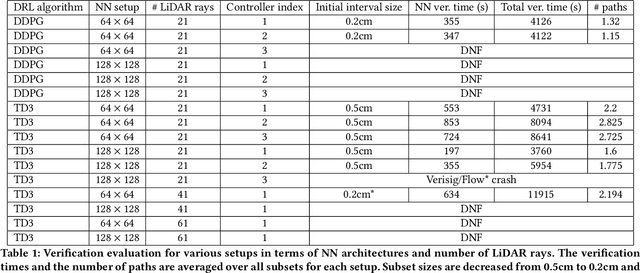

Case Study: Verifying the Safety of an Autonomous Racing Car with a Neural Network Controller

Oct 24, 2019

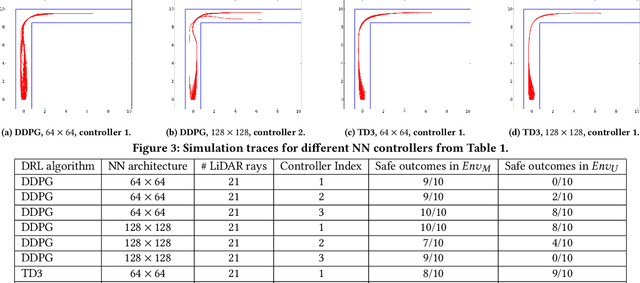

Abstract:This paper describes a verification case study on an autonomous racing car with a neural network (NN) controller. Although several verification approaches have been proposed over the last year, they have only been evaluated on low-dimensional systems or systems with constrained environments. To explore the limits of existing approaches, we present a challenging benchmark in which the NN takes raw LiDAR measurements as input and outputs steering for the car. We train a dozen NNs using two reinforcement learning algorithms and show that the state of the art in verification can handle systems with around 40 LiDAR rays, well short of a typical LiDAR scan with 1081 rays. Furthermore, we perform real experiments to investigate the benefits and limitations of verification with respect to the sim2real gap, i.e., the difference between a system's modeled and real performance. We identify cases, similar to the modeled environment, in which verification is strongly correlated with safe behavior. Finally, we illustrate LiDAR fault patterns that can be used to develop robust and safe reinforcement learning algorithms.
